
Software reliability engineering practices for distributed apps

Learn how these four software reliability engineering concepts -- inventories, monitoring, observability and Pareto analysis -- can aid API management and application performance.

Software reliability engineering aims to improve performance and use comprehensive information to address bugs and prioritize fixes across APIs, feature code and databases. Distributed applications, cloud hosting and connected devices introduce new challenges to software management, so reliability engineers need to adopt modern techniques.

There are four core elements of software reliability engineering:

- Know the software inventory you're working with, and make sure information about the software system stays accurate and up to date.

- Implement monitoring and test techniques that go beyond software stack performance and track user experience.

- Find new ways to observe software failures and bugs within complex application interactions.

- Plan out development work using data visualization.

Know your inventory

Software comprises a mix of APIs, databases and piles of other software components. Giving your team a comprehensive view of the overall architecture is a crucial part of software reliability engineering. Even when teams do have access to a high-level view of the architecture, it's unlikely to provide the details they need, such as IP addresses, component connection maps or business context.

The primary objective of a system inventory is to fully understand the whole system. This requires up-to-date information that includes both the fine details and the macro view. Create an inventory that team members can drill down into and access detailed data, including lists of all the systems, APIs, sites and servers. They should also have information about the business and technical owners of each component so they know where to go for support.
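As a minimal sketch of what such an inventory might hold, the Python below models each component with its kind, owners, IP addresses and dependencies, and supports both the macro view (every component of one kind) and a drill-down into a single record. The `Component` fields, the `Inventory` class, and the sample entries and IP addresses are hypothetical illustrations, not a standard schema or any particular tool's API.

```python
from dataclasses import dataclass, field

@dataclass
class Component:
    """One entry in a hypothetical system inventory."""
    name: str
    kind: str                      # e.g. "api", "database", "site", "server"
    business_owner: str
    technical_owner: str
    ip_addresses: list[str] = field(default_factory=list)
    depends_on: list[str] = field(default_factory=list)  # names of other components
    business_context: str = ""

class Inventory:
    def __init__(self, components):
        self._by_name = {c.name: c for c in components}

    def list_kind(self, kind):
        """Macro view: every component of one kind, sorted by name."""
        return sorted(c.name for c in self._by_name.values() if c.kind == kind)

    def details(self, name):
        """Drill-down view: the full record for one component."""
        return self._by_name[name]

    def who_supports(self, name):
        """Where to go for support: (business owner, technical owner)."""
        c = self._by_name[name]
        return c.business_owner, c.technical_owner

    def dependents_of(self, name):
        """Components that connect to `name`, i.e. what may break if it fails."""
        return sorted(c.name for c in self._by_name.values() if name in c.depends_on)

# Hypothetical sample entries; names, owners and IPs are made up.
inv = Inventory([
    Component("orders-api", "api", "Sales", "Platform team", ["10.0.1.12"], ["orders-db"]),
    Component("orders-db", "database", "Sales", "DBA team", ["10.0.2.5"]),
    Component("web-store", "site", "Marketing", "Web team", [], ["orders-api"]),
])
print(inv.list_kind("api"))             # ['orders-api']
print(inv.dependents_of("orders-api"))  # ['web-store']
```

Keeping `depends_on` in the same record as the owners is one design choice among several: it lets a single lookup answer both "what else breaks if this fails" and "whom do I call."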

If your organization has already started an inventory of its software, check whether the information is accurate and includes everything engineers need to know. Once engineers understand the software, they can improve its reliability.
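Checking an existing inventory for accuracy can be partly automated. The sketch below assumes inventory records exported as Python dicts and a set of IP addresses actually observed on the network (for example, from a scan); both the record schema and the `audit_inventory` helper are hypothetical. It flags missing owner or address fields, listed addresses that were not observed, dependencies on components the inventory doesn't contain, and observed hosts that no record claims.

```python
REQUIRED_FIELDS = ("name", "kind", "business_owner", "technical_owner", "ip_addresses")

def audit_inventory(records, known_hosts):
    """Return (record name, problem) pairs for a hypothetical inventory export.

    records: list of dicts, one per inventory entry.
    known_hosts: set of IP addresses actually observed on the network.
    """
    problems = []
    names = {r.get("name") for r in records}
    for r in records:
        label = r.get("name", "<unnamed>")
        for f in REQUIRED_FIELDS:
            if not r.get(f):  # missing, empty string or empty list
                problems.append((label, f"missing {f}"))
        for ip in r.get("ip_addresses", []):
            if ip not in known_hosts:
                problems.append((label, f"IP not seen on network: {ip}"))
        for dep in r.get("depends_on", []):
            if dep not in names:
                problems.append((label, f"unknown dependency {dep}"))
    # Hosts seen on the network that no inventory entry claims.
    claimed = {ip for r in records for ip in r.get("ip_addresses", [])}
    for ip in sorted(known_hosts - claimed):
        problems.append(("<inventory>", f"unlisted host {ip}"))
    return problems

# Hypothetical sample data.
records = [
    {"name": "orders-api", "kind": "api", "business_owner": "Sales",
     "technical_owner": "Platform team", "ip_addresses": ["10.0.1.12"],
     "depends_on": ["orders-db"]},
    {"name": "legacy-report", "kind": "server", "business_owner": "",
     "technical_owner": "Ops", "ip_addresses": ["10.0.9.9"]},
]
seen = {"10.0.1.12", "10.0.3.7"}
for problem in audit_inventory(records, seen):
    print(problem)
```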

Implement monitoring and synthetic testing

To make improvements, reliability engineering requires a baseline of software performance -- from the user's perspective.

Most teams have some type of IT monitoring in place, such as server health dashboards. But infrastructure health doesn't indicate software reliability on its own, because a server handles many transactions every second. The performance of individual transactions, such as how quickly a server renders a response, changes rapidly and can't be captured by a green or red health status for the server.
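To illustrate why a single health status hides transaction-level behavior, the sketch below summarizes per-transaction latencies with Python's standard `statistics` module. The latency numbers are invented for illustration, and the 200 ms "green" threshold is a hypothetical dashboard rule: the server's mean latency passes it, while the 99th percentile shows that some users wait about three seconds.

```python
import statistics

def latency_summary(latencies_ms):
    """Summarize per-transaction latencies (in ms) for one server."""
    cuts = statistics.quantiles(latencies_ms, n=100)  # 99 percentile cut points
    return {
        "mean": statistics.fmean(latencies_ms),
        "p50": cuts[49],  # 50th percentile
        "p99": cuts[98],  # 99th percentile
    }

def health_status(summary, threshold_ms=200):
    """Hypothetical dashboard rule: green if the mean is under the threshold."""
    return "green" if summary["mean"] < threshold_ms else "red"

# Illustrative data: 980 fast transactions and 20 very slow ones.
latencies = [50] * 980 + [3000] * 20
summary = latency_summary(latencies)
print(health_status(summary))          # green
print(summary["p50"], summary["p99"])  # 50.0 3000.0
```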

To make monitoring more effective, use the application inventory to identify the most popular APIs, then set up queries and alerts on those key APIs. That way, if an API's performance trends toward a problem, the alert directs attention to it so the team can resolve it before users experience reliability issues.
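One way to act on this, sketched under stated assumptions: take request records as `(endpoint, latency_ms)` pairs (for example, parsed from access logs), pick the busiest endpoints, and alert when an endpoint's recent median latency rises well above its baseline. The helper names, the sample endpoints and the 1.5x ratio are hypothetical; a production setup would more likely express the same rule in its monitoring tool's own query and alerting language.

```python
import statistics
from collections import Counter, defaultdict

def top_apis(requests, n=3):
    """requests: iterable of (endpoint, latency_ms). Returns the n busiest endpoints."""
    counts = Counter(endpoint for endpoint, _ in requests)
    return [endpoint for endpoint, _ in counts.most_common(n)]

def _medians(requests):
    by_endpoint = defaultdict(list)
    for endpoint, ms in requests:
        by_endpoint[endpoint].append(ms)
    return {ep: statistics.median(values) for ep, values in by_endpoint.items()}

def trending_alerts(baseline, recent, watch, ratio=1.5):
    """Flag watched endpoints whose recent median exceeds baseline median * ratio."""
    base, now = _medians(baseline), _medians(recent)
    alerts = []
    for ep in watch:
        if ep in base and ep in now and now[ep] > base[ep] * ratio:
            alerts.append(f"{ep}: median {now[ep]:.0f} ms vs baseline {base[ep]:.0f} ms")
    return alerts

# Hypothetical traffic: /search is getting slower, /login is stable.
baseline = [("/login", 80)] * 50 + [("/search", 120)] * 30 + [("/export", 900)] * 2
recent = [("/login", 85)] * 50 + [("/search", 260)] * 30 + [("/export", 950)] * 2
watch = top_apis(baseline, n=2)                   # ['/login', '/search']
print(trending_alerts(baseline, recent, watch))   # ['/search: median 260 ms vs baseline 120 ms']
```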

But, remember, monitoring system performance can't tell you what the customer experiences when they interact with the software. For that, you need to create synthetic transactions -- a practice known as semantic monitoring.

In practice, semantic monitoring continuously repeats simple user actions, such as logging in and reading data. These synthetic transactions run from an actual client, rather than monitoring the IT infrastructure on the server side, and should record user-facing errors, such as server error messages and performance delays. At a minimum, synthetic monitoring shows you when something goes wrong for all of your users.
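A synthetic transaction can be modeled as an ordered list of client-side steps, each timed and checked for errors. In the sketch below, the steps are plain Python callables; in a real check, each would make a request from a client location (for instance with `urllib.request.urlopen`) and a scheduler would run the whole transaction every minute or so. The `run_synthetic_transaction` helper, the step names and the 2-second slowness budget are hypothetical.

```python
import time
from dataclasses import dataclass

@dataclass
class StepResult:
    step: str
    ok: bool          # False if the step raised an error the user would see
    slow: bool        # True if the step exceeded the latency budget
    elapsed_s: float
    error: str = ""

def run_synthetic_transaction(steps, slow_after_s=2.0, clock=time.perf_counter):
    """Run (name, action) steps in order, the way a user would.

    Stops at the first failing step, because a real user could not continue
    past it either. `clock` is injectable so the function can be tested.
    """
    results = []
    for name, action in steps:
        start = clock()
        try:
            action()
            ok, error = True, ""
        except Exception as exc:  # any exception counts as a user-facing error
            ok, error = False, f"{type(exc).__name__}: {exc}"
        elapsed = clock() - start
        results.append(StepResult(name, ok, elapsed > slow_after_s, elapsed, error))
        if not ok:
            break
    return results

# Hypothetical steps standing in for real HTTP calls.
def login():
    pass  # e.g. POST credentials and check for a session token

def read_dashboard():
    raise RuntimeError("HTTP 500 from /dashboard")

for r in run_synthetic_transaction([("login", login), ("read", read_dashboard)]):
    print(r.step, "ok" if r.ok else r.error, "(slow)" if r.slow else "")
```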

Optimize observation

To make the most of software reliability engineering, your organization needs to dispense with dated assumptions about how applications work. For example, a search or login is no longer necessarily a single request handled by one service. In a connected-device application, messages may move back and forth between the client-side app, an in-home network hub and a back-end cloud.

But along the way, these messages can get lost. Even when a specific function works, the application may still tell the user it failed. If server-to-service communication fails partway through the task, even functions that perform correctly will appear broken to the user.

The trick is to see inside the transaction and figure out what went wrong. Development teams should build these observation capabilities into their application's monitoring and debugging protocols, regardless of whether the developers, operations team or software reliability engineers are responsible for that debugging.
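This article doesn't prescribe a mechanism, but one widely used way to see inside a multi-hop transaction is a correlation ID: the client generates an ID, every hop copies it into its log entries, and a search on that ID shows how far the message got. The sketch below simulates a client-app, home-hub and cloud-backend path with an in-memory list as the log; the hop names and helper functions are hypothetical.

```python
import uuid

HOPS = ("client-app", "home-hub", "cloud-backend")  # hypothetical path, in order

def new_request(payload):
    """The client attaches a correlation ID that every hop logs."""
    return {"correlation_id": str(uuid.uuid4()), "payload": payload}

def record(log, hop, message, event):
    log.append({"hop": hop, "correlation_id": message["correlation_id"], "event": event})

def where_lost(log, correlation_id):
    """Describe how far a request got, based on which hops logged its ID."""
    seen = {e["hop"] for e in log if e["correlation_id"] == correlation_id}
    if not seen:
        return "no hop logged this request"
    last = max(seen, key=HOPS.index)
    if last == HOPS[-1]:
        # The back end received it, so a failure the user saw is on the way back.
        return f"reached {last}; check the response path back to the client"
    return f"lost between {last} and {HOPS[HOPS.index(last) + 1]}"

log = []
msg = new_request({"action": "unlock"})
record(log, "client-app", msg, "sent")
record(log, "home-hub", msg, "forwarded")
print(where_lost(log, msg["correlation_id"]))  # lost between home-hub and cloud-backend
```

The "reached the back end" case matters for the situation described above, where a function works but the user is told it failed: the fault then lies on the response path, not in the function.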

If you make log files searchable, transaction debugging can be as straightforward as reading a stack trace. Searchable log files typically provide the user ID, session ID, timestamps, request records and output records. Keep in mind, however, that tracing this information can affect software performance. The team should agree on how to turn debugging information on and off without causing unnecessary performance issues.
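A minimal sketch of searchable logs using Python's standard `logging` and `json` modules: each entry is written as one JSON object carrying a timestamp, user ID and session ID, debug-level records can be switched on and off at runtime, and a search by session ID reconstructs one user's transaction. The field names, the `set_debug` toggle and the `search` helper are assumptions for illustration; a real deployment would typically ship these lines to a log search tool rather than filter them in Python.

```python
import io
import json
import logging

class JsonFormatter(logging.Formatter):
    """Write one JSON object per line so log files are easy to search."""
    def format(self, record):
        return json.dumps({
            "timestamp": self.formatTime(record),
            "level": record.levelname,
            "user_id": getattr(record, "user_id", None),
            "session_id": getattr(record, "session_id", None),
            "message": record.getMessage(),
        })

def set_debug(logger, enabled):
    """Toggle detailed request/output records without redeploying."""
    logger.setLevel(logging.DEBUG if enabled else logging.INFO)

def make_logger(stream, debug=False):
    logger = logging.getLogger("app")
    logger.handlers.clear()
    handler = logging.StreamHandler(stream)
    handler.setFormatter(JsonFormatter())
    logger.addHandler(handler)
    logger.propagate = False
    set_debug(logger, debug)
    return logger

def search(lines, **criteria):
    """Return parsed entries whose fields equal all criteria."""
    entries = (json.loads(line) for line in lines if line.strip())
    return [e for e in entries if all(e.get(k) == v for k, v in criteria.items())]

buf = io.StringIO()
log = make_logger(buf)
ctx = {"user_id": "u-7", "session_id": "s-42"}
log.info("login ok", extra=ctx)
log.debug("request body omitted", extra=ctx)  # dropped: debug is off
set_debug(log, True)
log.debug("search request q=router", extra=ctx)
log.info("search returned 0 results", extra={"user_id": "u-9", "session_id": "s-77"})

for entry in search(buf.getvalue().splitlines(), session_id="s-42"):
    print(entry["message"])
# login ok
# search request q=router
```

While debug is off, `logger.debug` checks the level and returns before formatting anything, so leaving detailed records disabled by default keeps their cost low.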

Pareto analysis

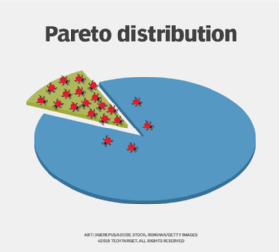

As applications become more complex and consume more resources, users still demand high reliability. A practice called Pareto analysis can help in these situations. Named after the economist Vilfredo Pareto, a Pareto distribution is a skewed distribution in which a small share of causes accounts for a large share of the effects.

Classically, the Pareto principle expects 80% of the effects to come from 20% of the causes: for example, 80% of the bugs might come from 20% of the subsystems in a given software architecture. To conduct a Pareto analysis on software reliability, tally every problem across the system's entire inventory, and assign each one a root cause and a weighted value. Then, add up the weights for each root cause and sort the totals in descending order, so the largest problems appear at the top and show which issues should take precedence.
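The tally-and-sort procedure above fits in a few lines of Python. In this sketch, each problem is a `(root_cause, weight)` pair, where the weight might be occurrences, severity points or hours of user impact (the choice is an assumption left to the team). The `pareto` function totals weight per cause, sorts descending and adds a cumulative percentage, and `vital_few` returns the causes needed to reach a hypothetical 80% cutoff. The sample causes and weights are invented for illustration.

```python
from collections import defaultdict

def pareto(problems):
    """problems: iterable of (root_cause, weight) pairs.

    Returns rows of (root_cause, total_weight, cumulative_percent), largest first.
    """
    totals = defaultdict(float)
    for cause, weight in problems:
        totals[cause] += weight
    grand_total = sum(totals.values())
    rows, running = [], 0.0
    for cause, total in sorted(totals.items(), key=lambda kv: kv[1], reverse=True):
        running += total
        rows.append((cause, total, 100.0 * running / grand_total))
    return rows

def vital_few(rows, cutoff=80.0):
    """The top causes needed to reach `cutoff` percent of the total weight."""
    picked = []
    for cause, _, cumulative in rows:
        picked.append(cause)
        if cumulative >= cutoff:
            break
    return picked

# Hypothetical problem tally.
problems = [
    ("auth-service timeout", 6), ("hub firmware drop", 4),
    ("auth-service timeout", 6), ("stale cache", 1),
    ("auth-service timeout", 6), ("hub firmware drop", 4),
    ("stale cache", 1), ("UI label typo", 1), ("docs link broken", 1),
]
rows = pareto(problems)
for cause, total, cumulative in rows:
    print(f"{cause:<22}{total:>6.0f}{cumulative:>8.1f}%")
print(vital_few(rows))  # ['auth-service timeout', 'hub firmware drop']
```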

Effective software reliability engineers spend a great deal of time compiling data to learn where the biggest problems exist. As they resolve issues, engineers can document the root cause behind each corrective action. Over a period of weeks or months, efforts to improve software reliability can then correct those wider, system-level issues, which might mean working with the development group on feature code or changing how services are deployed with the operations team.

In many cases, the most effective process simply involves lots of log searches, exports and calculations. Sometimes, the best tool to improve software reliability is a well-organized spreadsheet.
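As an example of that search, export and calculate loop, the sketch below counts ERROR lines per component in exported log text and writes the result as CSV that any spreadsheet can open, sort and chart. The space-separated log format and the sample lines are hypothetical; the parsing would need to match whatever your log search tool actually exports.

```python
import csv
import io
from collections import Counter

def errors_by_component(log_lines):
    """Count ERROR lines per component.

    Assumes a hypothetical format: '<timestamp> <level> <component> <message>'.
    """
    counts = Counter()
    for line in log_lines:
        parts = line.split(maxsplit=3)
        if len(parts) >= 3 and parts[1] == "ERROR":
            counts[parts[2]] += 1
    return counts

def to_csv(counts, out):
    """Write component error counts, largest first, as CSV."""
    writer = csv.writer(out)
    writer.writerow(["component", "error_count"])
    for component, n in counts.most_common():
        writer.writerow([component, n])

# Made-up exported log lines.
lines = [
    "2024-05-01T10:00:00Z ERROR auth-service token refresh failed",
    "2024-05-01T10:00:02Z INFO search-api query ok",
    "2024-05-01T10:00:05Z ERROR auth-service token refresh failed",
    "2024-05-01T10:01:00Z ERROR home-hub message dropped",
]
buf = io.StringIO()
to_csv(errors_by_component(lines), buf)
print(buf.getvalue())
```

When writing to a real file instead of `io.StringIO`, open it with `newline=""`, as the `csv` module documentation recommends.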