How Azure, AWS, Google handle data destruction in the cloud

Data destruction is a topic that has been poorly covered until recently. Regardless of which cloud service provider you use, this review of the top three CSPs' data destruction documentation should improve your due diligence.

Market research from Cybersecurity Insiders indicates that 93% of organizations have concerns about the security of the public cloud. This healthy distrust likely stems from a lack of information. Cloud service providers know their customers; they understand these concerns and have developed a plethora of documentation and sales collateral to earn our trust. One very welcome documentation improvement by the leading cloud providers is the amount of transparency pertaining to data destruction. Here is a review of this documentation so you can form a more complete picture of what exactly happens when we tell our cloud service provider to delete our data.

To frame the problem, let us start with a basic question: Why do we care about data destruction? It is one of many security controls that the Cloud Security Alliance teaches us to review before engaging with a cloud service provider (CSP). When I teach about cloud security, I remind my audience that a given security control only mitigates specific risks and that in cybersecurity, there are no magic security bullets. Hence, it is essential to understand what a particular security control mitigates and what it does not.

Okay, but why is data destruction an important security control? Sensitive data must be destroyed when it is no longer needed to prevent unauthorized access to it. Until data is destroyed, it must be properly secured. How could an unauthorized person access sensitive information in the cloud that was not properly destroyed? They could:

- Use forensic tools to extract data from the cloud service provider's hard drives

- Encounter remnants of data from another tenant

- Use insider privileges at the CSP to access the data

- Recover sensitive data from a backup

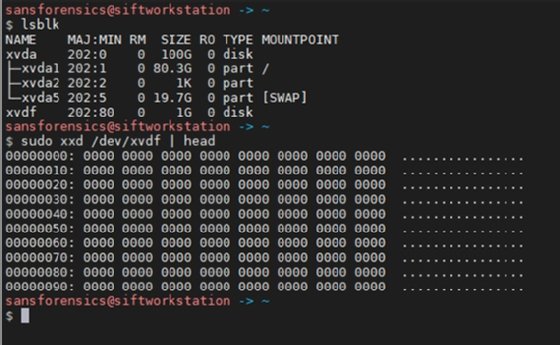

Forensic examination of a hard drive

For many people, the first tactic that comes to mind to gain unauthorized access to sensitive information would be to obtain a hard disk somehow and use forensic tools to extract data from the drive. Now, let's break this down into its two parts -- getting access to a hard disk and then extracting meaningful data from it.

Physical security of hard disks

The large, mature cloud service providers excel at physical security. The only personnel who have access to a CSP data center are the few people who have job duties inside it, and only a subset of those employees is responsible for the lifecycle of the hard disks. Hard disk drives (HDDs) have a limited lifespan, and cloud service providers consume them by the thousands. The CSP uses software to track each HDD by serial number and accounts for its exact location at any point in time. When the drive has reached the end of its useful life, the cloud service provider will shred it or use a similar means of complete physical destruction. Independent audit firms closely scrutinize this process.

Data extraction

If an attacker is somehow able to obtain access to a physical hard drive, they may attempt to use various forensic techniques to extract sensitive data from the device. However, unlike the disk drive in your laptop, each hard drive that cloud service providers use contains fragments of data (called shards) from potentially hundreds of different tenants. Even if these fragments are not encrypted, it would be nearly impossible for an attacker to associate a fragment with a specific tenant. Note that I stated "nearly impossible" because the fragment could contain an identifying data element. Likewise, lacking the mapping information, it would be impossible for an attacker to identify all the drives for a specific target. I'll cover the benefits of encrypting customer data with tenant-specific encryption keys later.
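The sharding argument can be illustrated with a small simulation. This is a minimal sketch and not any provider's actual storage layout: the shard size, the random placement policy, and the names `store`, `read`, `drives` and `mapping` are all assumptions made for illustration. It shows that each drive holds only anonymous fragments, while the mapping needed to reassemble a tenant's data lives elsewhere.

```python
import random
from collections import defaultdict

SHARD_SIZE = 8  # bytes per shard; hypothetical and tiny so the example stays readable


def store(tenant, data, drives, mapping, rng):
    """Split data into shards and scatter them across randomly chosen drives."""
    shards = [data[i:i + SHARD_SIZE] for i in range(0, len(data), SHARD_SIZE)]
    for index, shard in enumerate(shards):
        drive_id = rng.randrange(len(drives))
        drives[drive_id].append(shard)          # the drive holds raw bytes, no tenant label
        slot = len(drives[drive_id]) - 1
        mapping[tenant].append((index, drive_id, slot))  # kept apart from the drives


def read(tenant, drives, mapping):
    """Reassemble a tenant's data; this is only possible with the mapping."""
    ordered = sorted(mapping[tenant])           # sort by shard index
    return b"".join(drives[d][s] for _, d, s in ordered)


rng = random.Random(0)
drives = [[] for _ in range(4)]                 # four simulated physical drives
mapping = defaultdict(list)                     # simulated metadata service

store("tenant-a", b"alpha secret payload for tenant a", drives, mapping, rng)
store("tenant-b", b"bravo records belonging to tenant b", drives, mapping, rng)

restored = read("tenant-a", drives, mapping)
stolen_drive = drives[0]  # what an attacker holding one physical drive would see
```

An attacker holding `stolen_drive` sees only a list of unlabeled fragments of at most eight bytes each, interleaved across tenants. Without `mapping`, there is no record of which fragments belong to whom or which other drives hold the rest.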

Data remnants from another tenant

Many of us have had the experience of renting an apartment only to find that the previous occupant left us with cleaning supplies, trash and possibly even a lost diamond earring. We certainly do not want that to happen when we become a tenant in the cloud. Amazon Web Services, Microsoft Azure and Google Cloud Platform have designed their cloud systems to prevent this from happening. The "Amazon Web Services: Overview of Security Processes" white paper states:

"When an object is deleted from Amazon S3, removal of the mapping from the public name to the object starts immediately, and is generally processed across the distributed system within several seconds. Once the mapping is removed, there is no remote access to the deleted object. The underlying storage area is then reclaimed for use by the system."

"Amazon EBS volumes are presented to you as raw unformatted block devices that have been wiped prior to being made available for use. Wiping occurs immediately before reuse so that you can be assured that the wipe process completed."

To continue with the apartment building analogy, it is as if the apartment is completely obliterated (walls, floors, ceiling and all) and the elevator (which provides the access control) no longer stops at that floor. In the case of Amazon Elastic Block Store (EBS), the data is not securely wiped until a new EBS volume is provisioned for a tenant and sized according to the cloud user's specifications.

Some readers may be initially concerned that Amazon waits to wipe the storage until it is reprovisioned for a new user. However, wiping once, immediately before reuse, is more efficient and reduces wear on solid-state drives. Also, do not assume that an EBS volume is hosted on a single physical drive. The AWS documentation states, "Amazon EBS volume data is replicated across multiple servers in an Availability Zone to prevent the loss of data from the failure of any single component."
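The wipe-before-reuse behavior described for EBS can be modeled in a few lines. This is a sketch under stated assumptions, not AWS's implementation: the `BlockPool` class, its method names and the 16-byte block size are hypothetical. The point it illustrates is that released blocks may still hold old data inside the provider, but a new tenant only ever receives blocks that were zeroed at provisioning time.

```python
BLOCK_SIZE = 16  # hypothetical block size in bytes


class BlockPool:
    """Toy pool of storage blocks that are wiped at provisioning time."""

    def __init__(self, block_count):
        self.blocks = [bytearray(BLOCK_SIZE) for _ in range(block_count)]
        self.free = list(range(block_count))

    def provision(self, count):
        """Hand out blocks for a new volume, zeroing each one first."""
        if count > len(self.free):
            raise ValueError("not enough free blocks")
        allocated = [self.free.pop() for _ in range(count)]
        for block_id in allocated:
            self.blocks[block_id][:] = bytes(BLOCK_SIZE)  # wipe immediately before reuse
        return allocated

    def write(self, block_id, data):
        if len(data) > BLOCK_SIZE:
            raise ValueError("data larger than block")
        self.blocks[block_id][:len(data)] = data

    def read(self, block_id):
        return bytes(self.blocks[block_id])

    def release(self, block_ids):
        """Return blocks to the pool without wiping; old data stays until reuse."""
        self.free.extend(block_ids)


pool = BlockPool(block_count=2)

old_volume = pool.provision(2)
pool.write(old_volume[0], b"tenant-a secret")
pool.release(old_volume)

# Provider-internal view after release: the old bytes are still on the medium.
stale = bytes(pool.blocks[old_volume[0]])

# A new tenant provisions the same blocks and sees only zeros.
new_volume = pool.provision(2)
seen_by_new_tenant = [pool.read(b) for b in new_volume]
```

Deferring the wipe to `provision` means each block is overwritten once per reuse rather than once at release and again at first write, which is the efficiency argument made above.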

John Molesky, a senior program manager at Microsoft, made a similar statement:

"The sectors on the disk associated with the deleted data become immediately available for reuse and are overwritten when the associated storage block is reused for storing other data. The time to overwrite varies depending on disk utilization and activity but is rarely more than two days. This is consistent with the operation of a log-structured file system. Azure Storage interfaces do not permit direct disk reads, mitigating the risk of another customer (or even the same customer) from accessing the deleted data before it is overwritten."

I appreciate the additional information Microsoft provided in the above blog excerpt because this is the type of disclosure we need from our cloud service providers. I applaud the relatively recent acknowledgments that deleted data is not wiped until the underlying storage is reused for another customer, and I appreciate the additional context from Azure, which reminds us that these highly optimized resources are overwritten naturally within days due to high use.
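The overwrite-on-reuse behavior in the Microsoft excerpt can be sketched with a toy store. This is a heavily simplified model and not Azure Storage's design: real log-structured systems append to a log and compact it in the background, and the `LogStore` class and its methods are hypothetical names. It shows the two properties the excerpt relies on: a delete only drops the index entry, and the only read path goes through that index.

```python
class LogStore:
    """Toy store: deletes drop the index entry, and data is overwritten on segment reuse."""

    def __init__(self, segment_count):
        self.segments = [None] * segment_count  # simulated physical medium
        self.index = {}                         # key -> segment number
        self.free = list(range(segment_count))  # reusable segments, oldest first

    def put(self, key, value):
        if key in self.index:
            self.delete(key)
        if not self.free:
            raise RuntimeError("store is full")
        segment = self.free.pop(0)
        self.segments[segment] = value          # overwrites whatever was left there
        self.index[key] = segment

    def get(self, key):
        # The only read path goes through the index; there is no raw segment read.
        return self.segments[self.index[key]]

    def delete(self, key):
        segment = self.index.pop(key)
        self.free.append(segment)               # data remains until the segment is reused


store = LogStore(segment_count=2)
store.put("a", b"tenant-a secret")
store.put("b", b"other data")

store.delete("a")
remnant = store.segments[0]          # provider-internal view: bytes still present

store.put("c", b"new tenant data")   # a busy store reuses the freed segment quickly
```

After the delete, `store.get("a")` raises `KeyError` even though `remnant` still holds the old bytes. The next write replaces them. In a heavily used system that reuse happens quickly, which matches the "rarely more than two days" figure quoted above.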

It should be noted that Google Cloud Platform also uses log-structured file systems. I would like to see all cloud service providers supply additional technical details of these systems along with the relevant security implications. Given the fact that the cloud service provider maintains strict physical security over its hard drives, my professional belief is that this data handling is acceptable for any classifications of data suitable for storage in the public cloud.

Insider privileges

Cloud customers expect their data to be protected throughout its lifecycle until the data is destroyed and can no longer be accessed. I have already covered the safeguards in place to protect customer data from external parties prior to its destruction, but what about protecting data from trusted insiders? AWS, Azure and Google Cloud Platform have security documentation that covers the applicable security controls, including background checks, separation of duties, supervision and privileged access monitoring.

The primary concern with insider threats is that employees and contractors have detailed system knowledge and access to lower-level systems that are not exposed to public cloud customers. The CERT National Insider Threat Center has detailed guidance, and cloud customers should explore what controls are in place to protect data that has been deleted and is pending destruction. As thoughtful technical customers ask probing questions of their cloud service providers, en masse, the best cloud providers listen and respond with increasingly transparent documentation.

Encryption is a security control that can mitigate unauthorized insider access when appropriately applied. Unfortunately, encryption is often used as a Jedi mind trick. Some customers stop asking the tough questions once they hear that the service uses encryption. Encryption is a technique to control access. The person or system that controls the encryption key controls the access. For example, with transparent database encryption, the database management system controls the key and therefore controls the access. A database administrator (DBA) can query the data in the clear, but the administrator of the storage system that the database uses can only see the ciphertext. However, if the application controls the key, both the DBA and the storage system administrator can only see ciphertext.

With cryptographic erasure, the only copies of the encryption keys are destroyed, thereby rendering the encrypted data unrecoverable. NIST Special Publication 800-88, Revision 1 recognizes cryptographic erasure as a valid data destruction technique within certain parameters that are readily enforced in modern public cloud environments.

Azure documentation states that encryption is enabled for all storage accounts and cannot be disabled -- the same for Google. However, in AWS, it is a configuration option for services such as S3 and EBS.

Unfortunately, AWS and Azure fail to tout the benefits of the cryptographic erasure technique even though they are using it to destroy customer data. Also, it is often unclear when a CSP is using a tenant-specific encryption key to perform encryption at rest for their various services. When a tenant-specific encryption key is used in conjunction with cryptographic erasure, only the data belonging to a single tenant is destroyed. Cryptographic erasure is a very attractive alternative to overwriting data, especially for customers with hundreds of petabytes of data in cloud storage.
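Cryptographic erasure with tenant-specific keys can be demonstrated using only the Python standard library. This is an illustrative sketch, not how any CSP implements it: the `TenantVault` class is hypothetical, and the SHAKE-256 keystream XOR is a toy stand-in for a vetted cipher such as AES that should never be used to protect real data. The behavior it illustrates is that destroying one tenant's key makes that tenant's ciphertext unreadable without touching the stored bytes or any other tenant's data.

```python
import hashlib
import secrets


def _keystream(key, nonce, length):
    # Toy keystream derived with SHAKE-256; illustrative only, not a vetted cipher.
    return hashlib.shake_256(key + nonce).digest(length)


def _xor(data, stream):
    return bytes(a ^ b for a, b in zip(data, stream))


class TenantVault:
    """Toy shared storage with one encryption key per tenant."""

    def __init__(self):
        self.keys = {}     # tenant -> key; stand-in for a key management service
        self.storage = []  # (tenant, nonce, ciphertext); stand-in for shared disks

    def put(self, tenant, plaintext):
        key = self.keys.setdefault(tenant, secrets.token_bytes(32))
        nonce = secrets.token_bytes(16)         # fresh nonce per object
        ciphertext = _xor(plaintext, _keystream(key, nonce, len(plaintext)))
        self.storage.append((tenant, nonce, ciphertext))
        return len(self.storage) - 1            # object id

    def get(self, object_id):
        tenant, nonce, ciphertext = self.storage[object_id]
        key = self.keys[tenant]                 # raises KeyError once the key is destroyed
        return _xor(ciphertext, _keystream(key, nonce, len(ciphertext)))

    def crypto_erase(self, tenant):
        """Destroy the only copy of the tenant's key; ciphertext is left in place."""
        del self.keys[tenant]


vault = TenantVault()
a_id = vault.put("tenant-a", b"tenant-a payroll data")
b_id = vault.put("tenant-b", b"tenant-b design docs")

vault.crypto_erase("tenant-a")
```

After `crypto_erase`, `vault.get(b_id)` still returns tenant B's plaintext, while `vault.get(a_id)` fails because no key exists. Nothing in `vault.storage` was overwritten, which is why this technique scales to petabytes: the cost of destruction is the cost of deleting a key, provided every copy of that key is truly gone.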

Recover data from backups

The last attack vector involves an adversary attempting to recover sensitive data from a backup. I always caution my clients not to assume that a CSP is backing up their data unless the contract clearly specifies it. Unless stated otherwise, cloud service providers are primarily using backups or snapshot techniques to meet service-level agreements regarding data durability and availability.

If data is being backed up, the backup must be protected with at least the same level of security as the primary data store. Among the top three cloud service providers, Google's documentation, "Data deletion on Google Cloud Platform," provides the most transparency concerning how deleted data expires and is rotated out throughout its 180-day regimen of daily/weekly/monthly backup cycles. To Google's credit, this document even covers the important role of cryptographic erasure in protecting the data until it expires from all the backups.

Without a doubt, the top three cloud service providers have expended great effort to make their systems secure. All cloud service providers must balance two needs: withholding details that would aid an adversary and providing enough transparency to maintain the trust of their customers. As cloud customers speak with their cloud service providers and seek the information necessary to make intelligent risk decisions, the cloud service providers are improving the messaging that explains their security investments.

About the author

Kenneth G. Hartman is an independent security consultant based in Silicon Valley and a certified instructor for the SANS Institute. Ken's motto is "I help my clients earn and maintain the trust of their customers in its products and services." To this end, he consults on a comprehensive program portfolio of technical security initiatives focused on securing client data in the public cloud. Ken has worked for a variety of cloud service providers in architecture, engineering, compliance, and security product management roles. Ken has earned the CISSP, as well as multiple GIAC security certifications, including the GIAC Security Expert.