
Hire testers with a mix of technical skills and chutzpah

There's a lot at stake when you hire a software tester -- and a lot to consider about the applicants. Matt Heusser shares how to evaluate capabilities and personalities.

You can evaluate software tester skills against a number of criteria. When you hire testers, focus on the following areas to see if a candidate is a good fit for your team.

Many managers assess candidates by what they offer in both social and technical areas. On top of that, seek test design skills and the trait of curiosity, especially when you need a tester with experience.

Find a mix of skills

Job descriptions try to convince us that there is a commonality to a certain job title. A level-three software engineer position looks one way, while a level-four position looks another. All managers appear to do roughly the same thing, and all architects do something else. This approach completely ignores the context of the project for which you hire testers.

If, for example, the software delivery team includes a staff that built the application from the ground up, then you might not need a tester who deeply understands the problem domain. On the other hand, if the business is highly regulated, such as an insurance company, and the team has mostly junior or outsourced programmers, you might demand more expertise from a tester with the same job title. If the team is in danger of groupthink -- in other words, too in-sync to allow for creativity -- you might want a tester comfortable with conflict. However, if the team storms along at a healthy pace, you might consider a hire who is more collaborative than provocative.

Hiring managers should have some idea of what they want for the context, interview the candidates who look best on paper and then evaluate how well each one would complement the staff.

When hiring testers, I am interested in complementary -- yet diverse -- skills and personalities. Culture fit, conversely, can lead to everyone thinking the same, which is called a monoculture. James Whittaker, a distinguished engineer at Microsoft, blamed the company's monoculture for its problems with Windows Vista, as developers, testers and managers all had the same operational profile. This lack of diverse thinking blinded the team to end-user behaviors -- a cautionary tale to avoid when hiring additions to a project team.

What good tester skills look like

A capable tester takes a multistep approach to QA. The tester reviews the software, asks some questions of it and then uses those answers to consider the next question. With a potentially infinite set of questions to ask, the tester follows interesting answers down the rabbit hole, then must emerge before time runs out and draw some conclusions about the software.

The tester doesn't prove that the software works. He might demonstrate that it can work, or that it did not fail under certain conditions, at some point in time. But these sorts of statements don't necessarily help teams decide whether the software is ready to go. Good testers must weave in accurate and insightful conclusions about the software to help teams in this task.

Test toolsmiths face a similar problem; they must reduce the set of test scenarios to the powerful few, and then understand how to interpret the results of those tests.

Try an exercise or two

You can combine test design, test execution, learning and reporting into one exercise, which can help you determine how well a job candidate explores software.

For web and mobile software, you can use a palindrome app, which simply takes a word and tells you whether it is a palindrome. When you ask candidates to test the app, you can gauge their testing skills and their ability to probe for requirements. This exercise also indicates what testers do when they get stonewalled, as I don't tell them much and put them under pressure for a ship decision.
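As a rough illustration of that exercise, here is a minimal Python sketch of the kind of probes a candidate might try. The `is_palindrome` function is a hypothetical stand-in for the app under test, and its rules (ignore case, spaces and punctuation; reject empty input) are assumptions. They are exactly the kind of requirements a candidate should ask about rather than guess.

```python
def is_palindrome(text: str) -> bool:
    """Hypothetical stand-in for the app under test.

    Assumed rules (not confirmed requirements): ignore case, spaces and
    punctuation, and treat input with no letters or digits as "not a palindrome".
    """
    normalized = [ch.casefold() for ch in text if ch.isalnum()]
    return len(normalized) > 0 and normalized == normalized[::-1]


# Each probe pairs an input with the answer expected under the assumed rules.
# The comments note the requirement question each probe raises.
PROBES = [
    ("racecar", True),                         # the obvious happy path
    ("hello", False),                          # the obvious negative case
    ("Racecar", True),                         # does case matter?
    ("A man, a plan, a canal: Panama", True),  # are phrases and punctuation allowed?
    ("No lemon, no melon", True),              # spaces inside the input
    ("12321", True),                           # do digits count as a "word"?
    ("a", True),                               # single character
    ("", False),                               # empty input: error or answer?
    ("   ", False),                            # whitespace only
]


def run_probes(check=is_palindrome):
    """Return (input, expected, actual) for every probe that disagrees."""
    failures = []
    for text, expected in PROBES:
        actual = check(text)
        if actual != expected:
            failures.append((text, expected, actual))
    return failures


if __name__ == "__main__":
    for failure in run_probes():
        print("Unexpected result:", failure)
```

The interesting part is less the pass/fail list than the comments: each probe maps to a question the candidate could put to the interviewer before making a ship decision.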

If API testing is part of the job, you could ask them to test the API of the Submit button, without giving them the function signature. Many people who claim to have API testing experience or know HTML and JavaScript don't know how to right-click, choose View source and find the API. Other applicants may profess to know Chrome DevTools, but aren't aware that they can click on the Network tab to find where the API call comes from. There may also be a few candidates who can't do any of those things, but can disconnect the wireless access, click Submit, see the answer pop up and realize there is no API involved at all.

These sorts of questions speak to the technical abilities that go beyond strictly software testing skills, such as setting up a database, learning SQL, understanding network packets and reading JSON results. Hiring managers often ignore this sort of technical debugging, but it is an integral part of an effective tester's daily work.

Next, evaluate how well a candidate can code or automate, which calls for more than a simple yes or no answer. Some programming testers provide their own GitHub repositories as proof of these abilities. Some companies hiring testers ask them, as part of the interview process, to write code that performs an action, such as searching for an item on Amazon and adding it to the shopping cart, in any tool they like. When I see test automation as a skill on a résumé, I like to ask what the automation they implemented actually did.

Challenges with hiring test toolsmiths

A toolsmith is essentially a programming tester, i.e., one who writes test code. In the long term, there are two primary problems with hiring testers who fall into this category. First, if they do their work in a corner, nobody else knows what they work on. Over time, the UI will change to do things the test code doesn't expect. When that happens, either the toolsmith or the team must clean up the test code. If the toolsmith does this work alone, it will slow down the feedback loop, perhaps wasting time and certainly not adding value. If the toolsmith leaves, nobody will know how to do the job; the test code simply rots and is eventually thrown away.

Additionally, with this approach, the test code isn't included in CI, which requires a workaround, and there can be a disconnect between the toolsmith and programmers. The toolsmith must write test code in a language that production programmers understand as part of a collaborative approach, instead of a territorial one. The test suite must either stay small, fast and light, or it must be able to run in parallel, which probably requires the assistance of the programmers.
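To make the parallel option concrete, here is a minimal sketch in Python using the standard library's `concurrent.futures`. The three `check_*` functions are hypothetical placeholders for real tests, and the sketch assumes each check is independent and shares no state, which is usually the part that needs the programmers' help.

```python
from concurrent.futures import ThreadPoolExecutor


# Hypothetical, independent checks. In a real suite these would drive the
# application; here they only return True (pass) or False (fail).
def check_login():
    return True


def check_search():
    return True


def check_checkout():
    return False  # a deliberately failing check, to show how failures surface


def run_in_parallel(checks, max_workers=4):
    """Run independent checks concurrently and map each check name to its result."""
    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        futures = {check.__name__: pool.submit(check) for check in checks}
        return {name: future.result() for name, future in futures.items()}


if __name__ == "__main__":
    results = run_in_parallel([check_login, check_search, check_checkout])
    for name, passed in results.items():
        print(f"{name}: {'pass' if passed else 'FAIL'}")
```

This only works when the checks don't depend on one another's data or on a fixed execution order, so test isolation has to be designed in from the start.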

While you can hire testers who are proficient with various tools, I like to see that expertise spread to the programmers. When you start a test tool infrastructure program, consider bringing in an evangelist who can bridge the gap between testers and programmers.

To see if a candidate will fit on the team, involve programmers in the interview and encourage them to decide whether they can work with this person as a peer. But, don't ask the candidates if they can code. Tell them to show you they can code.

Try before you hire

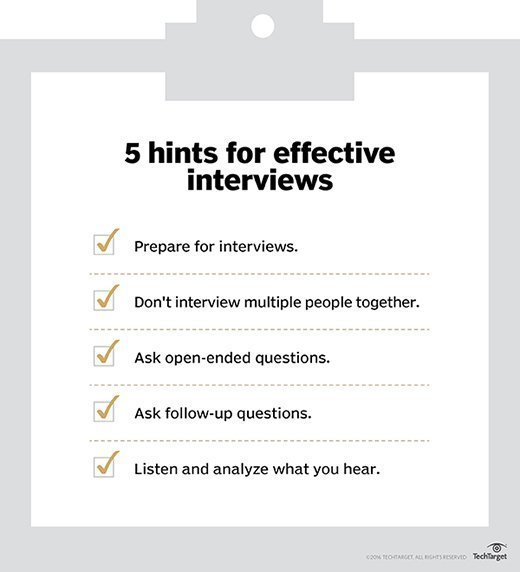

The best interviews are less hypothetical question-and-answer sessions and more like auditions. If you include exercises in an interview, it's possible for that audition to last an hour. But the best audition might be a six-month contract-to-hire. At the end of the six-month interview, you must be willing to let that temporary employee leave if things don't work out.

Finally, make sure you hire testers who can adapt to changing needs over time. Contract-to-hire is one way to make sure they embrace change. For traditional permanent hires, ask experience-based questions, which start with a phrase like "Tell me about a time that you had to ..." in the interview. These questions can make some candidates uncomfortable, but so can working in testing for any length of time.