
How to develop a test data management strategy

Effective test data management in app development involves defining appropriate data, using activity diagrams to see how the code will perform, and protecting sensitive information.

Test data plays an integral role in building an application that is as close to the end product as possible, as early in development as possible. Teams need a strategy to set up, maintain and prepare this test data so they can analyze results and make decisions based on that information.

To develop a sound strategy, there must be strong communication and collaboration between product and platform engineering teams. This includes establishing goals for what the test environment must achieve and which data is appropriate to use for testing purposes, said Jim Scheibmeir, an analyst at research firm Gartner.

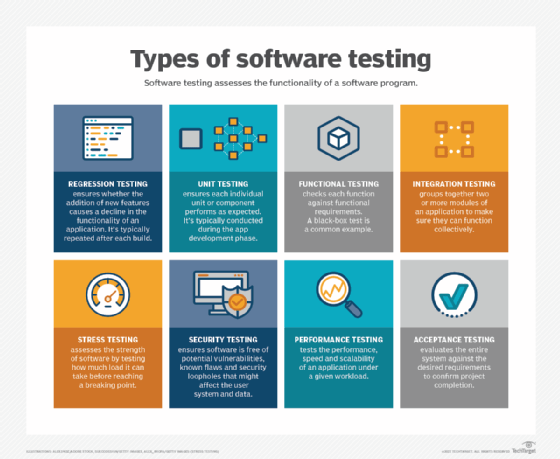

"For example, teams must ask, 'Are we using it for performance testing? Or are we just using it for testing functional characteristics of the software?' The answer will help organizations understand the amount of replication that it has to do," Scheibmeir said.

As important as test data is in the development process, it's still an afterthought at many organizations, said Jim Goetsch, a senior systems engineer at AutoZone, a national automotive parts retailer.

"In my 30-plus years of experience, that's still the case," Goetsch said. "It doesn't have to be. We have volumes and volumes of data, and we have standards or procedures that need to be followed. It's important to have people that will step up and acknowledge or sign off that testing has happened, that test data was created and that data was approved."

Test data management strategy best practices

There are three key aspects of developing a strong test data management strategy.

1. Identify and define data that is appropriate for use in testing

Simply put, test data is fuel for the process that developers are automating, Goetsch said.

"They need to make sure that data is defined, maybe at a high level, to address their use cases and scenarios," Goetsch said. "And then we need to create specific data that they can use and reuse for their tests -- in an individual system on integration, in a production environment and ideally, in a quality assurance environment later on."

Developers have several options for tools that create test data, including cloud-native services. If that's where the data lives, Scheibmeir said, the organization can "just lean on our cloud provider. They've got data duplication. They've got data masking services. We can start to tie these together in orchestration."

Unfortunately, "sometimes their data doesn't play," Scheibmeir added. "Then we're back to a do-it-yourself type model, which can be expensive, or we can also look at test data management tools and technologies."

Whether developers use a traditional database or an unstructured database, they must know what's expected of them at the start of a test, during setup and when onboarding a new task, as well as what data is necessary at each development step, Scheibmeir said. Some of that preparation might be done interactively and captured in a script, or it might be fully automated.

"There's a lot of engineering effort that goes in up front," Scheibmeir explained. "Part of that is pinpointing the data that needs to be de-identified [to not be included in test data] -- especially private and confidential data living in the system. It's not always just names and addresses. Things like a pharmacy prescription number can identify a customer, their medications and their overall health. It goes beyond the obvious. And sometimes it takes some business domain expertise as well."
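One common way to de-identify fields, including less obvious identifiers such as a prescription number, is keyed pseudonymization: each identifying value is replaced with a deterministic HMAC digest, so the same customer maps to the same pseudonym across tables without exposing the original value. The Python sketch below assumes a hypothetical list of identifying fields (`IDENTIFYING_FIELDS`) and a secret key held outside the test environment. It illustrates the idea only; deciding which fields belong on that list still takes the business domain review Scheibmeir describes.

```python
import hashlib
import hmac

# Hypothetical list of fields that can identify a customer. rx_number is
# included because a prescription number can point to a specific person.
IDENTIFYING_FIELDS = {"name", "address", "rx_number"}


def pseudonymize(value: str, key: bytes) -> str:
    """Return a deterministic pseudonym: a truncated HMAC-SHA256 of the value."""
    return hmac.new(key, value.encode("utf-8"), hashlib.sha256).hexdigest()[:16]


def deidentify(record: dict, key: bytes) -> dict:
    """Copy a record, pseudonymizing identifying fields and keeping the rest."""
    return {
        name: pseudonymize(str(value), key) if name in IDENTIFYING_FIELDS else value
        for name, value in record.items()
    }


# Assumption: in practice the key comes from a secrets store, never from test data.
key = b"replace-with-a-secret-from-a-vault"
row = {"name": "Pat Doe", "address": "1 Main St", "rx_number": "RX-0042", "refill_count": 2}
safe_row = deidentify(row, key)
```

Because the pseudonyms are deterministic for a given key, a customer's rows still join correctly after de-identification; rotating the key breaks that linkage when it is no longer wanted.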

2. Use activity diagrams to understand how new code will perform

Many development teams use Agile practices when it comes to test data, Goetsch said. With Agile practices, activity diagrams are crucial to understanding what specific function a new modification or new piece of code is going to perform.

Then the team must define data to support that use case or set of scenarios, and set up the test environment so that different individuals, testing teams or development teams can use it.

"Maybe it's as simple as that, with each test with the same data, one group is using customer A and another group is using customer B or C, or something a little more elaborate than that," Goetsch explained. "But it gets very complicated once you move from unit tests to system integration tests. And depending on how many cooks in the kitchen, that can get cumbersome."

It helps if you can break the test data up by customer, by geography or by a certain product, for example, and then use the same data as much as possible, Goetsch said. "That way, when different people are testing, they can talk about the same things, except maybe change their customer name. That helps with … formulating the test."
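Goetsch's approach of giving each group the same scenario with a different customer can be sketched as a shared baseline that is cloned per team. In this minimal Python example, the `Order` record, the baseline rows and the team-to-customer allocation are all hypothetical; only the customer field changes between copies, so teams can compare results while avoiding collisions on the same records.

```python
from dataclasses import dataclass, replace


@dataclass(frozen=True)
class Order:
    customer: str
    region: str
    product: str
    quantity: int


# Shared baseline scenario that every group tests against (hypothetical values).
BASELINE = [
    Order(customer="TEMPLATE", region="Midwest", product="brake-pads", quantity=2),
    Order(customer="TEMPLATE", region="Midwest", product="oil-filter", quantity=1),
]

# Hypothetical allocation: each group gets its own customer.
ALLOCATION = {"unit-tests": "Customer A", "integration": "Customer B", "qa": "Customer C"}


def dataset_for(customer: str) -> list[Order]:
    """Clone the baseline, changing only the customer so groups don't collide."""
    return [replace(order, customer=customer) for order in BASELINE]


datasets = {team: dataset_for(customer) for team, customer in ALLOCATION.items()}
```

The same pattern extends to partitioning by geography or product: change the field used as the partition key and keep everything else identical.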

In addition, the more frequent the release cadence, the more frequent the testing.

"More often, we need fresh test data," Scheibmeir said. "Often, we're writing code in the morning with the intention of deploying it that afternoon with automation. We can't burn our lunch hour trying to manually manipulate the data in the test environment so that we can do a thorough set of investigation and testing."
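Rather than manipulating data by hand, teams can rebuild test data automatically on every pipeline run. The sketch below, assuming a hypothetical `accounts` table, creates a fresh in-memory SQLite database filled with synthetic rows. Seeding the random generator is a design choice: each run starts from clean data, but a failing run can be reproduced by reusing its seed.

```python
import random
import sqlite3


def build_test_db(seed: int, customers: int = 5) -> sqlite3.Connection:
    """Create a fresh in-memory database populated with synthetic accounts."""
    rng = random.Random(seed)  # seeded so a failing run can be reproduced
    conn = sqlite3.connect(":memory:")
    conn.execute(
        "CREATE TABLE accounts (id INTEGER PRIMARY KEY, name TEXT, balance_cents INTEGER)"
    )
    # Synthetic, obviously fake rows: no production data is copied.
    rows = [
        (i, f"Test Customer {i}", rng.randint(0, 500_000))
        for i in range(1, customers + 1)
    ]
    conn.executemany("INSERT INTO accounts VALUES (?, ?, ?)", rows)
    conn.commit()
    return conn
```

A CI job might call `build_test_db(seed)` with a new seed per run and log the seed alongside the test results.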

3. Prevent sensitive data or PII from being used as test data

Developers must be especially careful not to pull sensitive data or personally identifiable information (PII) into testing.

"Ideally, any company that deals with sensitive data should have a management program in place that can identify critical data elements," Goetsch said. "Often, those critical data elements are required to have a level of sensitivity assigned to them, whether they're PII or some other internal or highly sensitive type of data."

It's important to understand what classification has been assigned to the data being used, Goetsch said. Operational systems often allow end users to view one row at a time for one customer account. In a banking system, for example, a user might see a single customer's checking or savings account or debit card. That user is allowed to see that limited amount of information at that time because it's part of their job responsibilities.
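A classification catalog like the one Goetsch describes can also act as a guardrail when test data sets are assembled. This Python sketch assumes hypothetical sensitivity levels, column names and a threshold; it flags any column classified above that threshold and treats unclassified columns as highly sensitive until someone reviews them.

```python
from enum import IntEnum


class Sensitivity(IntEnum):
    PUBLIC = 0
    INTERNAL = 1
    PII = 2
    HIGHLY_SENSITIVE = 3


# Hypothetical catalog of critical data elements and their assigned sensitivity.
CATALOG = {
    "branch_code": Sensitivity.PUBLIC,
    "account_id": Sensitivity.INTERNAL,
    "customer_name": Sensitivity.PII,
    "card_number": Sensitivity.HIGHLY_SENSITIVE,
}


def blocked_fields(columns, max_allowed=Sensitivity.INTERNAL):
    """Return columns whose classification exceeds what test data may contain.

    Columns missing from the catalog default to HIGHLY_SENSITIVE.
    """
    return sorted(
        c for c in columns
        if CATALOG.get(c, Sensitivity.HIGHLY_SENSITIVE) > max_allowed
    )
```

Running a check like this before each test data refresh turns the classification into something enforced rather than merely documented.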

But development testers shouldn't really be looking at the data itself, Goetsch stressed.

"You can either create your test data and mask some of those values or create dummy values," Goetsch noted. "There are other more elaborate things you can do. You can tokenize that information, whether that be through a vaulted or a vaultless approach."
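The options Goetsch mentions can be illustrated side by side: masking most of a value, substituting an obviously fake dummy value, and vaulted tokenization, where a random token replaces the value and the mapping back is kept only in a separate vault. The names below (`mask`, `dummy_name`, `TokenVault`) are hypothetical, and the in-memory dictionaries stand in for what would, in practice, be a separately secured store.

```python
import secrets


def mask(value: str, visible: int = 4) -> str:
    """Replace all but the last `visible` characters with asterisks."""
    if len(value) <= visible:
        return "*" * len(value)
    return "*" * (len(value) - visible) + value[-visible:]


def dummy_name(index: int) -> str:
    """Generate an obviously fake value instead of copying a real one."""
    return f"Test Customer {index:04d}"


class TokenVault:
    """Toy vaulted tokenization: tokens are random, and only the vault
    can map a token back to the original value."""

    def __init__(self) -> None:
        self._by_token: dict[str, str] = {}
        self._by_value: dict[str, str] = {}

    def tokenize(self, value: str) -> str:
        # Reuse the token for a repeated value so joins across tables still match.
        if value in self._by_value:
            return self._by_value[value]
        token = "tok_" + secrets.token_hex(8)
        self._by_token[token] = value
        self._by_value[value] = token
        return token

    def detokenize(self, token: str) -> str:
        return self._by_token[token]
```

Masking and dummy values are one-way, while tokenization keeps a controlled path back to the original, which is what makes the privileged-access rules below necessary.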

Finally, highly sensitive data should be monitored, and only privileged access should be allowed, Goetsch said.

A data steward, administrator or data initiator might need to look at a data set to diagnose a problem, Goetsch said. They should be given tools to unmask or decrypt that data, but only on an exception basis, with that access well tracked and guarded.

It's one thing to create rules that allow people to access that data only as described. It's another thing to know when a person looked at the data or made a change, Goetsch said.
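Exception-based, tracked access can be sketched as an unmask call that checks the caller's role, requires a stated reason and writes an audit entry whether or not access is granted. In this Python example the role names, audit fields and in-memory log are assumptions; a real system would write to tamper-resistant storage.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone

# Hypothetical roles permitted to unmask data on an exception basis.
PRIVILEGED_ROLES = {"data_steward", "administrator"}


@dataclass
class AuditedVault:
    """Holds token-to-value mappings and logs every unmask attempt."""
    values_by_token: dict[str, str]
    audit_log: list[dict] = field(default_factory=list)

    def unmask(self, token: str, user: str, role: str, reason: str) -> str:
        granted = role in PRIVILEGED_ROLES and bool(reason.strip())
        # Log every attempt, including denied ones, so access can be reviewed later.
        self.audit_log.append({
            "time": datetime.now(timezone.utc).isoformat(),
            "user": user,
            "role": role,
            "token": token,
            "reason": reason,
            "granted": granted,
        })
        if not granted:
            raise PermissionError(f"{user} ({role}) is not allowed to unmask {token}")
        return self.values_by_token[token]
```

The audit log answers the question Goetsch raises: not just whether the rules allowed access, but who actually looked at the data, when and why.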