A comprehensive test automation guide for IT teams

This one-stop test automation guide walks through the benefits and challenges, how to craft an automated testing strategy and how to compare tools.

Testing is a critical part of the software development process, allowing developers to validate software functionality, measure performance and identify flaws to remediate. But as software projects become more complex, and project development cycles accelerate, traditional manual quality assurance (QA) testing may not be fast or complete enough to meet testing objectives within acceptable timeframes.

As a result, software developers increasingly turn to automated testing tools and workflows to speed up testing regimens while ensuring better consistency and completeness in the QA process.

Why is automated testing important?

Automated software testing is both a tool and a process. Automated testing tools provide the mechanisms and functions needed to execute tests on a software product. The tests can vary, ranging from simple scripts to detailed data sets and complex behavioral simulations. All the tests aim to validate that the software provides intended functionality and behaves as expected within acceptable parameters. Tools such as Selenium, Appium, Cucumber, Silk Test and dozens more allow for the creation of customized tests that can accommodate the specific needs of the software. Later in this test automation guide, we'll walk through the most popular and effective test automation tools.

From a process perspective, automated testing adds test automation tools and actions to the regular software development workflow. For example, a new build delivered to a repository can automatically be subjected to an automated testing regimen using one or more prescribed tools; testing can be implemented at off hours with little, if any, developer intervention. Automated testing results are carefully recorded, compared to previous test runs and delivered to developers for review. Based on the results, the software can be cycled back to developers for further work or approved as a candidate for deployment. Such examples are particularly relevant for DevOps environments, which depend on continuous integration/continuous delivery pipelines.
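The review step described above, in which results are compared with the previous run and the build is either sent back or approved, can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not the behavior of any particular CI/CD product: the `RunResult` type, the `gate_build` function and the decision labels are all hypothetical names.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class RunResult:
    """One test outcome from an automated run (hypothetical structure)."""
    name: str
    passed: bool


def gate_build(current, previous):
    """Compare the current run with the previous one and decide the next step.

    Returns (decision, failures, new_failures), where new_failures are the
    tests that passed in the previous run but fail now.
    """
    previously_passing = {r.name for r in previous if r.passed}
    failures = [r.name for r in current if not r.passed]
    new_failures = [name for name in failures if name in previously_passing]
    # Any failure sends the build back; a clean run makes it a candidate.
    decision = "return_to_developers" if failures else "candidate"
    return decision, failures, new_failures
```

Separating all failures from newly introduced ones is one possible design choice; it lets reviewers see at a glance which problems arrived with the latest build.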

While useful, automated software testing has not replaced manual software QA testing. Success demands a high level of maintenance and attention. An automated test process can proceed faster than a manual one, but a realistic and maintainable test automation plan requires a sizable investment of time and effort. Developers must understand the software needs, plan test cases, set testing priorities and ensure that any tests created will yield accurate and meaningful results.

Most software projects will still benefit from the attention of skilled QA testers who can perform tests that are difficult to simulate with automated tools or that are too infrequent to justify the investment necessary to automate them. Automated and manual testing are often performed together in varying degrees throughout the development cycle.

What are the advantages of test automation?

Automated software testing can provide an array of potential benefits to a development team while also delivering value to the business more broadly. The principal benefits echo those of other automation tools, including accuracy, reporting, scope, efficiency and reusability.

Ideally, automated testing eliminates much of the manual interaction inherent with human testers. The same tests are conducted the same way each time. Mistakes and oversights are eliminated, which yields better testing accuracy. At the same time, automation supports and executes a much larger number of tests than a human tester could handle. Once a test is created, its script, data, workflow and other components can be reused for tests on future builds as well as other software projects. Accuracy, scope and reusability of automated testing will depend on the investment made in planning, creating and maintaining the automated testing suite.

Additional benefits include better logging and reporting capabilities. Manual testers can forget to denote conditions, patterns and results, which leads to incomplete or inaccurate test documentation. Automated testing doesn't miss logging and reporting, which ensures that every result is recorded and categorized for developer review. The result is more comprehensive testing and better bug detection for every test cycle -- especially when results can be compared with previous results to gauge resolution effectiveness and efficiency.

What are the challenges of automated testing?

Automated software testing promises significant benefits, but the technology poses several challenges.

First, automation is not automatic. Simply implementing an automation tool, service, platform or framework is not enough to ensure proper software testing. Developers must decide on test requirements and criteria and then create the detailed test scripts and workflows to execute those tests. Tests can be reused, but that is only valuable for subsequent builds or other software that shares the same requirements and criteria.

Second, software testing may require interactions and results-gathering that simply aren't possible with an automation tool. Consider an application that displays data in a dashboard format. The dashboard elements may be testable: is a given metric calculated correctly? But the dashboard's positioning and visual appeal might be perceivable only to a human tester. Similarly, certain rarely used functions might not justify the investment in automation, leaving human QA testers to perform specific operations.

Traditional manual QA testing continues to play a vital role in software testing. In fact, development teams are increasingly taking advantage of the flexibility that manual testing brings to the development process. One way that manual testing adds value is through the technical notes and documentation that QA personnel create. Such documentation can prove invaluable to supplement test cases, create training materials or build user documentation. For example, deep QA expertise in using software can aid help desk operations.

When deployed together, manual QA and automated testing allow each to focus on its strengths. Automated testing, for example, is often best suited to smoke and regression testing tasks, while new development tasks can benefit from the agility of manual testing. The biggest challenge in such shared responsibility is keeping both automated and manual efforts organized and effective in the face of constantly changing priorities.

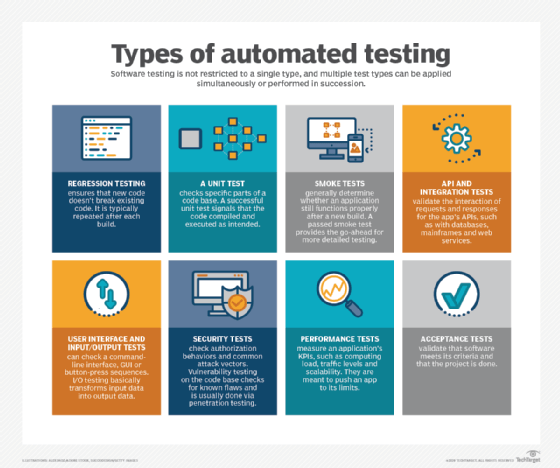

What are the types of automated testing?

Automated software testing can apply a wide range of test types to check integrations, interfaces, performance, the operation of specific modules and security. Testing is not restricted to a single test type, and multiple test types can be applied (layered) at the same time or performed in rapid succession to test a multitude of issues.

Automated testing can perform the following types of tests:

Regression tests. Regression testing is essentially the art of making sure that new code doesn't break any existing code. When new code is added, or when existing code is changed, regression testing verifies that other code or modules continue to operate as expected. Regression testing is typically repeated after each build. It usually offers excellent value for test automation.
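One simple way to picture a regression check is a comparison against outputs recorded from a build that was already approved. The sketch below assumes a hypothetical `apply_discount` function and an illustrative `BASELINE` table; neither comes from any specific tool.

```python
def apply_discount(price, percent):
    """Hypothetical existing function whose behavior must not change."""
    return round(price * (1 - percent / 100), 2)


# Expected outputs captured from a previously approved build (illustrative).
BASELINE = {
    (100.0, 0): 100.0,
    (100.0, 15): 85.0,
    (80.0, 25): 60.0,
}


def run_regression(func, baseline):
    """Return the inputs whose current output differs from the baseline."""
    return [args for args, expected in baseline.items()
            if func(*args) != expected]
```

An empty list from `run_regression(apply_discount, BASELINE)` means the recorded behavior is intact; any returned inputs point to behavior that changed since the baseline was taken.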

Unit tests. A unit test typically checks specific parts of an application's code base, such as a subroutine or module. For example, a unit test might initialize a module, call methods or functions, and then evaluate any returned data in order to validate coding standards, such as the way in which modules and functions are written. Success of a unit test generally means that the code compiled and executed as intended. Unit tests are often part of a test-driven development strategy where success means that an intended function or feature is present as planned or required in the software requirements specification.
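The initialize, call and evaluate pattern described above might look like the following with Python's standard `unittest` module. The `ShoppingCart` class is a hypothetical module under test, included only so the example is self-contained.

```python
import unittest


class ShoppingCart:
    """Hypothetical module under test."""

    def __init__(self):
        self._items = {}  # sku -> (price, quantity)

    def add(self, sku, price, quantity=1):
        if quantity <= 0:
            raise ValueError("quantity must be positive")
        current_qty = self._items.get(sku, (price, 0))[1]
        self._items[sku] = (price, current_qty + quantity)

    def total(self):
        return sum(price * qty for price, qty in self._items.values())


class ShoppingCartTest(unittest.TestCase):
    def setUp(self):
        # Initialize a fresh module instance before every test.
        self.cart = ShoppingCart()

    def test_empty_cart_total_is_zero(self):
        self.assertEqual(self.cart.total(), 0)

    def test_total_sums_price_times_quantity(self):
        self.cart.add("A1", 5, quantity=2)
        self.cart.add("B2", 3)
        self.assertEqual(self.cart.total(), 13)

    def test_rejects_non_positive_quantity(self):
        with self.assertRaises(ValueError):
            self.cart.add("A1", 5, quantity=0)
```

Saved to a file, tests like these can typically be run with `python -m unittest`.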

Smoke tests. Smoke tests are generally simple go/no-go tests designed to ensure that an application still functions properly when a new build is completed. The tests often are used to determine whether the most important features or functions of the application operate as expected and if the application is suitable for further, more detailed testing. For example, a smoke test might determine whether the application launches, an interface opens, buttons work or dialogs open and so on. If a smoke test fails, the application may be too broken to justify further testing. At that point, the application returns to the developers for retooling. Smoke tests are often referred to as build-verification tests or build-acceptance tests.
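The go/no-go nature of a smoke test can be sketched as a short list of checks that stops at the first failure. The `run_smoke_checks` helper and the check names in the usage note are assumptions made for illustration.

```python
def run_smoke_checks(checks):
    """Run (name, callable) checks in order and stop at the first failure.

    Returns (True, None) when every check passes, otherwise
    (False, name_of_first_failed_check).
    """
    for name, check in checks:
        try:
            ok = bool(check())
        except Exception:
            # A crash during a basic check counts as a no-go.
            ok = False
        if not ok:
            return False, name
    return True, None
```

A call such as `run_smoke_checks([("app launches", launch_app), ("main window opens", open_main_window)])`, where both callables are hypothetical, would return a single verdict that decides whether the build proceeds to more detailed testing.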

API and integration tests. Communication and integration are vital aspects of modern software. API testing is used to validate the interaction of requests and responses for the application's APIs. These can involve various endpoints, including databases, mainframes, UIs, enterprise service busses, web services and enterprise resource planning applications. Not only does API testing look for reasonable requests and responses, it also checks unusual or edge cases and evaluates potential problems with latency, security and graceful error handling.

API testing is often included in integration testing. This provides more comprehensive testing of the application's modules and components to ensure that everything operates as expected. For example, an integration test might simulate a complete order entry process that takes a test order from entry to processing to billing to shipping and beyond so that every part of the application is involved from start to finish.
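As a rough sketch of request and response validation, the example below starts a throwaway local HTTP server that stands in for the API under test and then checks both a normal request and an edge case. The `/orders/<id>` endpoint, its payloads and the helper names are hypothetical; a real test would target the application's actual API.

```python
import json
import threading
import urllib.error
import urllib.request
from http.server import BaseHTTPRequestHandler, HTTPServer


class OrderHandler(BaseHTTPRequestHandler):
    """Stand-in for the API under test (hypothetical endpoint)."""

    def do_GET(self):
        if self.path == "/orders/1":
            status, payload = 200, {"id": 1, "status": "shipped"}
        else:
            status, payload = 404, {"error": "not found"}
        body = json.dumps(payload).encode("utf-8")
        self.send_response(status)
        self.send_header("Content-Type", "application/json")
        self.send_header("Content-Length", str(len(body)))
        self.end_headers()
        self.wfile.write(body)

    def log_message(self, format, *args):
        pass  # keep test output quiet


# Ignore any proxy settings so requests go straight to the local server.
_opener = urllib.request.build_opener(urllib.request.ProxyHandler({}))


def fetch(url):
    """Return (status_code, decoded JSON body) for a GET request."""
    try:
        with _opener.open(url, timeout=5) as response:
            return response.status, json.loads(response.read())
    except urllib.error.HTTPError as err:
        return err.code, json.loads(err.read())


def run_api_checks():
    """Exercise a normal request and an edge case against the local server."""
    server = HTTPServer(("127.0.0.1", 0), OrderHandler)  # port 0: any free port
    threading.Thread(target=server.serve_forever, daemon=True).start()
    base = f"http://127.0.0.1:{server.server_address[1]}"
    try:
        return {
            "known_order": fetch(f"{base}/orders/1"),
            "missing_order": fetch(f"{base}/orders/999"),
        }
    finally:
        server.shutdown()
        server.server_close()
```

Checking the missing order alongside the known one reflects the point above that API tests look at graceful error handling as well as reasonable requests.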

User interface and input/output tests. The user interface (UI) represents the front end of any application, allowing the user to interact with the app. The UI itself may be as simple as a command line interface or a well-designed graphical user interface (GUI). UI testing can be an intricate and highly detailed effort; the number of possible button-press sequences or command-line variations can be staggering.

Input/output (I/O) testing verifies that the application transforms input data into the expected output data. For example, an application intended to perform calculations and derive an output might use a sample data set and check the output to ensure that the underlying processing functions correctly. I/O testing is often connected to UI testing because the data set is frequently selected through the UI, and results may be graphed or otherwise displayed in the UI.

Security and vulnerability tests. Security testing helps to ensure that the application -- and its constituent data -- remains secure in the wake of application faults and deliberate attempts at unauthorized access. Security tests can check authorization behaviors as well as common attack vectors such as SQL injection and cross-site scripting.

Vulnerability testing is often performed on the code base before a build is executed. This checks the code for known flaws such as missing error handling in a subroutine or an insecure configuration setting. Vulnerability testing is usually associated with penetration, or pen, testing as a means of checking the security readiness of an application or data center environment.
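The SQL injection check mentioned above can be illustrated with Python's built-in `sqlite3` module. In this sketch, the table, the two lookup functions and the `INJECTION` string are assumptions made for the example; the test simply asks whether a classic injection payload returns rows it should not.

```python
import sqlite3


def make_db():
    """Create an in-memory database with sample data (illustrative only)."""
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE users (name TEXT, secret TEXT)")
    conn.executemany(
        "INSERT INTO users VALUES (?, ?)",
        [("alice", "a-secret"), ("bob", "b-secret")],
    )
    return conn


def find_user_unsafe(conn, name):
    # Vulnerable: user input is concatenated into the SQL text.
    query = f"SELECT name FROM users WHERE name = '{name}'"
    return conn.execute(query).fetchall()


def find_user_safe(conn, name):
    # Parameterized query: the input is treated as data, not as SQL.
    return conn.execute(
        "SELECT name FROM users WHERE name = ?", (name,)
    ).fetchall()


INJECTION = "' OR '1'='1"


def leaks_rows(lookup):
    """Return True if the lookup returns any rows for the injection string."""
    conn = make_db()
    try:
        return len(lookup(conn, INJECTION)) > 0
    finally:
        conn.close()
```

Here `leaks_rows(find_user_unsafe)` is true because the payload rewrites the query's condition, while `leaks_rows(find_user_safe)` is false because no user has that literal name.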

Performance tests. An application may pass functional tests properly but still fail under stress. Performance tests are intended to measure an application's key performance indicators, which can include computing load, traffic levels and scalability. In effect, performance tests are meant to simulate real-world conditions, often pushing the application beyond its requirements until it fails. Such evaluation provides a baseline for further development and a benchmark for adding limits or warnings to prevent unexpected problems.
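A very small performance check might time an operation repeatedly and compare the slowest run against a budget. The helper names and the idea of a single latency budget are assumptions for illustration; dedicated load-testing tools measure far more than this.

```python
import time


def measure_latency(operation, runs=50):
    """Time repeated calls and return the median and worst latency in seconds."""
    samples = []
    for _ in range(runs):
        start = time.perf_counter()
        operation()
        samples.append(time.perf_counter() - start)
    samples.sort()
    return {"median": samples[len(samples) // 2], "worst": samples[-1]}


def within_budget(operation, budget_seconds, runs=50):
    """Return True if even the slowest run stayed within the budget."""
    return measure_latency(operation, runs)["worst"] <= budget_seconds
```

Recording the median and worst values from each build, rather than only a pass or fail verdict, gives the kind of baseline for further development that the paragraph above describes.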

Acceptance tests. Software is developed using a software requirements specification (SRS). The SRS contains acceptance criteria that outline the features and functionality expected of the application. Acceptance tests are typically used to validate that the software meets the criteria set out in the SRS or other client documentation. In other words, acceptance tests determine when the project is done. Because acceptance tests can be extremely difficult to automate, they are generally reserved for late in a project's development cycle.

How to perform automated testing

The goal of any automation is to decrease the cost and time needed to build a product or conduct an activity -- while preserving or improving the product's quality. This concept should guide organizations as they implement automated software testing.

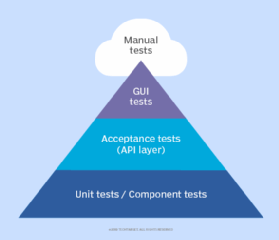

But there are many types of tests, and each presents challenges and demands for developers and QA professionals. Organizations should be judicious in the use of automation, which is most readily justified when the return on investment is highest. This typically occurs in testing activities that are high in volume and narrow in scope.
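One rough way to reason about return on investment is a break-even estimate: how many runs it takes before the time saved per run repays the effort of building the automated test. The formula and the figures below are illustrative assumptions, not measurements, and they ignore ongoing maintenance effort.

```python
import math


def break_even_runs(build_cost, manual_cost_per_run, automated_cost_per_run):
    """Return the number of runs after which automation has paid for itself.

    All costs use the same unit (for example, hours). Returns None when
    automation never pays off because each run saves nothing.
    """
    saving_per_run = manual_cost_per_run - automated_cost_per_run
    if saving_per_run <= 0:
        return None
    return math.ceil(build_cost / saving_per_run)
```

With these assumed numbers, a test that takes 40 hours to automate, 2 hours to run manually and 0.5 hours to run and review when automated breaks even after `break_even_runs(40, 2, 0.5)`, or 27 runs. A test that runs daily gets there within weeks, while a once-a-year test would take decades, which is why high-volume tests are the usual candidates.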

The common agile test automation pyramid illustrates this concept: its broad base consists of unit tests, often written as part of test-driven development, in which small segments of code are tested repeatedly -- sometimes several times a day. Conversely, testing that demands a high degree of subjective opinion or criteria and that cannot be readily codified may be ill-suited to automation. A common example here is GUI testing where scripts can test buttons and other physical elements of a UI but cannot determine if the UI looks good.

Developers and software QA/testing professionals are typically tasked to prepare tests, and test code is often indistinguishable from other segments of code. In most cases, test code takes the form of scripts intended to execute certain behaviors in a prescribed order. Some tests can also be generated automatically. One popular example of this is record and playback testing tools, which create tests based on user actions or behaviors.

Generally, record and playback tools match the user activities to a library of objects that classify the behavior and then translate the object into code. That code forms the basis of a script or other test data set. Once the tool generates a test, the test can be reused, edited or merged with other tests.
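The translation from recorded activity to script can be pictured with a toy example. The event format, the `TEMPLATES` table and the `browser` object named in the generated lines are all hypothetical; actual record and playback tools use their own object libraries and output formats.

```python
# Recorded user actions (hypothetical event format).
RECORDED = [
    {"action": "open", "target": "/login"},
    {"action": "type", "target": "#username", "value": "demo"},
    {"action": "click", "target": "#submit"},
]

# Library that maps each kind of action to a line of script. The
# `browser` object in the output is a placeholder, not a real API.
TEMPLATES = {
    "open": 'browser.open("{target}")',
    "type": 'browser.type("{target}", "{value}")',
    "click": 'browser.click("{target}")',
}


def generate_script(events):
    """Translate recorded events into script lines, one per event."""
    lines = []
    for event in events:
        template = TEMPLATES[event["action"]]
        lines.append(template.format(**event))
    return "\n".join(lines)
```

Because the output is ordinary text, the generated script can be edited, reused or merged with other tests, as described above.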

Record and playback testing helps QA teams develop tests that simulate user activities. These could be UI tests, regression tests or integration tests that implement and repeat complex sequences of actions. Such tools can also be used to check for performance issues, such as to ensure that a feature or function responds within an adequate timeframe.

Numerous automated software testing tools have record and playback capabilities, including Ranorex, Selenium and Microsoft Visual Studio. Selection of a tool will depend on an organization's existing development needs and framework.

An organization that chooses to adopt automated software testing usually grapples with scope -- defining exactly what automated testing is and what types of testing need automation.

Successful automated testing requires careful consideration of a broader test strategy. Not every test requires automation or is worth the investment in automation tools -- especially when tests are one-time events or answers to questions asked by others, such as: Does the software do X for us? Automating an answer to every such question is expensive, time-consuming and yields little benefit.

Even with a clear test automation strategy mapped out, test development will depend on additional strategic elements, such as best practices intended to maximize test coverage while minimizing test cases. These tests should ideally be singular, autonomous and versatile. They should run quickly and handle data properly. And even with the best automated test platforms and cases, there is still a place for manual testing in broader test strategies, such as complex integration and end-to-end test scenarios.

Automated testing can be enhanced with a variety of well-planned considerations. For example, automating the deployment of a new test environment for each test cycle can help to ensure fresh, updated content without the delays of refreshing, reloading or troubleshooting. Minimize the repeated use of variables or objects, and focus on creating scripts or test data with common objects defined and used just once. This means changes can be implemented by updating just a single entry at the start of a file rather than making multiple changes throughout the test.
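The advice to define common objects just once might look like this in a Python test file. The URL, the locator names and the step format are illustrative assumptions.

```python
# Shared definitions live in one place at the top of the file
# (all values here are illustrative).
BASE_URL = "https://staging.example.test"
LOCATORS = {
    "login_button": "#login",
    "search_box": "input[name='q']",
}


def url_for(path):
    """Build a full URL from the single shared base URL."""
    return f"{BASE_URL}/{path.lstrip('/')}"


def login_steps():
    """Describe a test as steps that reference the shared definitions."""
    return [
        ("open", url_for("/login")),
        ("click", LOCATORS["login_button"]),
    ]
```

If the login button's selector changes, only the `LOCATORS` entry needs updating; every test that refers to it picks up the change.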

Also, pay close attention to versioning and version control. New builds often need new tests, and it is critical to maintain control over test versions. Otherwise, new test cycles can fail or produce unusable results because the tool is implementing older -- and now invalid -- test scripts or data. In practice, tests should observe the same version control as any other codebase.

Even with the best tools and strategies, don't expect to fully automate testing for every test requirement within a software project. Automation does many things well, and it brings measurable value to the business, but people are also a crucial part of the software QA process. When implementing automated testing, adopt a tool or platform that supports the widest range of capabilities and scenarios possible. This puts maximum value in the tool and minimizes the number of tools that developers and QA testers must master. Look for logging capabilities that allow a comprehensive analysis of test results.

To minimize the learning curve, avoid the use of proprietary languages in test scripts and other coding. Plus, use of those languages makes it difficult, if not impossible, to migrate to an alternate tool later without the need to rebuild all the scripts and other test data from scratch. Test tools should ideally support common programming languages, such as Ruby, Python or Java.

Adoption of automated software testing is incomplete without a discussion of test automation maintenance. As with most automation, automated testing is not automatic -- the tool changes over time through updates and patches, and test cases will change as the software project evolves and grows. Simply creating a script and running it through a tool does not end the investment in test automation. Eventually, the checks that tests perform will begin to return errors. Investigation will often reveal that these are false errors caused by outdated tests rather than defects in the software, which underscores the need to include test case development and version control as a regular and recurring part of the software QA process.

There is no one way to implement such maintenance, but emerging tools seek to democratize test automation knowledge by simplifying test creation. Tools such as Eggplant Functional employ visual models, so testers need only minimal knowledge of modeling and scripting to use them. They also offer flexible editing and straightforward reporting. The goal is to make the tool and its tests far easier for newer users to understand and create with -- and effectively allow more developers and testers to participate in the software testing process. Tooling is usually augmented with processes and policies that accommodate various team members in test creation and implementation.

Test automation frameworks

Automated testing does not occur in a vacuum. The selection and implementation of an automated testing tool won't be enough to deliver results. Successful test automation demands careful attention to the guidelines, coding standards, reporting, processes and workflows, and other groundwork involved in the test execution environment. This mixture of concepts is referred to as the test automation framework.

When implemented properly, a test automation framework can help developers and testers create, execute and report on test automation events efficiently and uniformly across projects and business units. Other benefits of a well-considered framework include better code reusability, opportunities to automate testing across more code (modules, components and even entire projects), easier maintenance and support of the test automation tools, and less human intervention for manual QA testing.

Despite the potential benefits, automated software testing can pose serious challenges for an organization. It is critical to craft a test automation framework that defines and optimizes tests so that they can run with minimal human intervention.

A successful test automation framework depends on a plan that documents the way in which tests are developed, stored (protected) and executed. Such a plan typically defines the available resources, tools, languages, reporting and test storage or retention goals involved in test creation. This can require considerable effort in deciding who codes and executes tests, who maintains the tools, the time and circumstances when specific test types are executed, where test media will be stored and how test versions are managed.

The test automation framework requires strong policies for reporting, logging and maintenance. This helps developers know where to quickly find test result reports or execution logs, plus it speeds the fixing and remediation work to be done for the next build. The tool often produces error logs in response to script problems -- not necessarily application problems -- so access to those logs can, over time, aid script and test maintenance. Ultimately, a strong framework needs regular testing to ensure that the tests are adequate and appropriate for the applications being developed.

How to choose an automated testing tool

Automated software testing tools can accelerate the test phase of any new build by driving repetitive or time-consuming test operations, but these tools are not all alike. Some offer detailed focus on specialized areas, such as bug tracking or test comparisons.

Earlier in this test automation guide, we cited just a few of the dozens of tools available in this area. It's easy to become distracted or overwhelmed by their sheer number and mix of capabilities, but there are some considerations that can help an organization select an automated testing tool. Tool options are both commercial and open source, though there are serious tradeoffs in price, maintenance and support, and feature-integration roadmap.

First, understand what problem the testing tool is intended to solve, and narrow the field to the particular tools that can perform the required tests. Every tool is built to address specific problems or perform certain tests, be it UI, documentation, API or regression testing. It is possible that a tool can solve more than one problem. In fact, some are promoted as all-in-one testing tools, while others are classified as stand-alone products.

Next, determine whether the prospective tool fits the current development framework of OSes and application languages. Also, be sure it integrates with other tools, such as version-control systems, along the software development workflow. For example, one tool might not support a Java application in a web browser, while another tool might claim to support any platform -- as long as it's a Windows platform. Still other tools might demand the addition of a web server, database or other supporting application. Don't just consider what is being developed today; also think ahead to decide if the tool will support future platforms.

Third, examine less-desirable factors. As one example, a tool can be much easier to use when it supports a developer-native language, such as Ruby, Python, .NET or Java. If the tool requires a unique or proprietary language, then users must learn yet another language for testing. That requires more time, and it will likely introduce errors into the test process. Similarly, consider how the tool is licensed, paid for, installed and secured.

Finally, take the automated tool for a test drive. Chances are the tool vendor offers a free trial. Although the functionality may be limited, developers and testers should be able to determine whether the prospective tool meets the team's testing needs. It's a good idea to use the tool under consideration in parallel with existing tools, enabling a team to compare test results.

Ultimately, an organization will adopt more than one automated testing tool. This allows developers and testers to create a toolbox capable of handling a multitude of test types across a wide variety of software project requirements, from websites to mobile apps to traditional desktop and client-server applications.

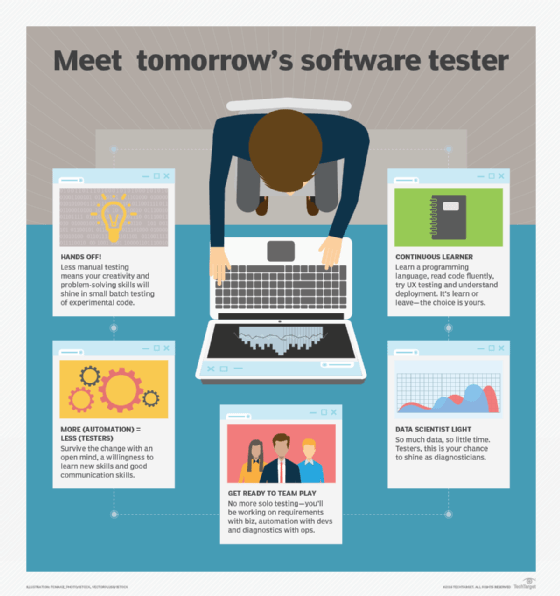

The future of test automation

Automated software testing tools continue to evolve, systematically adding artificial intelligence and machine learning capabilities that help tools autonomously create suitable test cases. Intelligence helps tools focus testing on areas that are most relevant to the software in development, freeing developers and testers to create scripts and more traditional test media for edge cases and strategic testing for performance, security and other priorities.

Tools have begun to feature autonomous capabilities around test creation, test data management and analytics. Eventually, tools may arrive with the ability to scan code and derive test coverage or create simulations and models that are impossible to implement manually with existing tools. In addition, AI can help to locate gaps in testing -- and even suggest ways to fill them.

Another avenue of evolution for automated software testing is robotic process automation, or RPA, which is designed to perform repetitive operations as needed. For software development, RPA technologies can mimic user actions and test the interactions between disparate systems. The goal is to translate complex, multistep, multisystem actions into repeatable and scripted processes. RPA, in effect, can bolster end-to-end testing that evaluates customer activities and expectations for software behaviors. One emerging use of RPA is in low-code software development platforms, in which the tool stitches together existing templates to generate and then perform more complex business functions.

Test automation, AI, RPA and low-code technologies are still in their infancy. The future for these technologies will ultimately rest in their business value and the creativity with which they solve business problems. Ironically, success will depend on deep human understanding of the business and its inner workings in order to find inefficient or tedious tasks that will benefit from tomorrow's testing platforms.