AWS storage changes the game inside, outside data centers

The long-term influence of Amazon storage centers on the S3 API for object systems and extends to pricing, data durability, and IT infrastructure planning and decision-making.

The impact of Amazon storage on the IT universe extends beyond the servers and drives that store exabytes of data on demand for more than 2.2 million customers. AWS also influenced practitioners to think differently about storage and change the way they operate.

Since Amazon Simple Storage Service (S3) launched in March 2006, IT pros have re-examined the way they buy, provision and manage storage. Infrastructure vendors have adapted the way they design and price their products. That first AWS storage service also spurred a raft of technology companies -- most notably Microsoft and Google -- to focus on public clouds.

"For IT shops, we had to think of ourselves as just another service provider to our internal business customers," said Doug Knight, who manages storage and server services at Capital BlueCross in central Pennsylvania. "If we didn't provide good customer service, performance, availability and all those things that you would expect out of an AWS, they didn't have to use us anymore.

"That was the reality of the cloud," Knight said. "It forced IT departments to evolve."

The Capital BlueCross IT department became more conscious of storing data on the "right" and most cost-effective systems to deliver whatever performance level the business requires, Knight said. The AWS alternative gives users a myriad of choices, including block, file and scale-out object storage, fast flash and slower spinning disk, and Glacier archives at differing price points.

"We think more in the context of business problems now, as opposed to just data and numbers," Knight said. "How many gigabytes isn't relevant anymore."

Capital BlueCross' limited public cloud footprint consists of about 100 TB of a scale-out backup repository in Microsoft's Azure Blob Storage and the data its software-as-a-service (SaaS) applications generate. Knight said the insurer "will never be in one cloud," and he expects to have workloads in AWS someday. Knight said he has noticed his on-premises storage vendors have expanded their cloud options. Capital BlueCross' main supplier, IBM, even runs its own public cloud, although Capital BlueCross doesn't use it.

Expansion of consumption-based pricing

Facing declining revenue, major providers such as Dell EMC, Hewlett Packard Enterprise and NetApp introduced AWS-like consumption-based pricing to give customers the choice of paying only for the storage they use. The traditional capital-expense model often leaves companies overbuying storage as they try to project their capacity needs over a three- to five-year window.
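The overbuying problem can be made concrete with a little arithmetic. The Python sketch below is illustrative only: the starting capacity, growth rate and planning window are hypothetical inputs, and the function names are not drawn from any vendor's tooling. It compares buying the final year's projected capacity up front with paying only for what is in use each year, measured in TB-years rather than dollars.

```python
def yearly_need_tb(start_tb: float, annual_growth: float, years: int) -> list[float]:
    """Capacity needed at the end of each year, assuming compound growth."""
    return [start_tb * (1 + annual_growth) ** y for y in range(1, years + 1)]


def compare_models(start_tb: float, annual_growth: float, years: int) -> dict[str, float]:
    """Contrast an up-front purchase with consumption-based usage.

    Assumption: the capital-expense buyer purchases the largest projected
    need on day one and holds that capacity for the whole window.
    """
    needs = yearly_need_tb(start_tb, annual_growth, years)
    upfront = max(needs) * years   # TB-years paid for under the capex model
    consumed = sum(needs)          # TB-years actually used
    return {
        "upfront_tb_years": upfront,
        "consumed_tb_years": consumed,
        "idle_tb_years": upfront - consumed,
        "utilization": consumed / upfront,
    }


if __name__ == "__main__":
    # Hypothetical shop: 100 TB today, doubling yearly, three-year window.
    for name, value in compare_models(100, 1.0, 3).items():
        print(f"{name}: {value}")
```

The sketch deliberately leaves out prices, since per-terabyte rates under either model vary by vendor and contract. It only shows how much purchased capacity sits idle when the projection is made years in advance.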

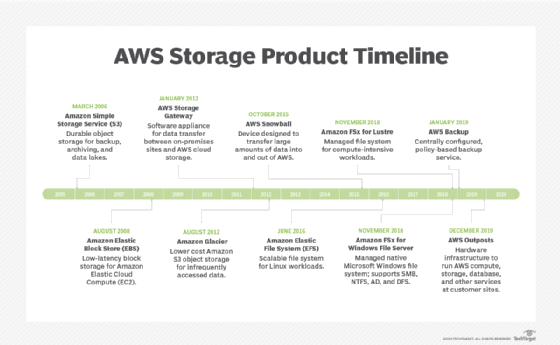

While the mainstream vendors pick up AWS-like options, Amazon continues to bolster its storage portfolio with enterprise capabilities found in on-premises block-based SAN and file-based NAS systems. AWS added its Elastic Block Store (EBS) in August 2008 for applications running on Elastic Compute Cloud (EC2) instances. File storage took longer, with the Amazon Elastic File System (EFS) arriving in 2016 and FSx for Lustre and Windows File Server in 2018.

AWS ventured into on-premises hardware in 2015 with a Snowball appliance to help businesses ship data to the cloud. In late 2019, Amazon released Outposts hardware that gives customers storage, compute and database resources to build on-premises applications using the same AWS tools and services that are available in the cloud.

Amazon S3 API impact

Amid the ever-expanding breadth of offerings, it's hard to envision any AWS storage option approaching the popularity and influence of the first one. Simple Storage Service, better known as S3, stores objects on cheap, commodity servers that can scale out in seemingly limitless fashion. Amazon did not invent object storage, but its S3 application programming interface (API) has become the de facto industry standard.

"It forced IT to look at redesigning their applications," Gartner research vice president Julia Palmer said of S3.

Palmer said when she worked in engineering at GoDaddy, the Internet domain registrar and service provider designed its own object storage to talk to various APIs. But the team members gradually realized they would need to focus on the S3 API that everyone else was going to use, Palmer said.

Every important storage vendor now supports the S3 API to facilitate access to object storage. Palmer said that, although object systems haven't achieved the level of success on premises that they have in the cloud, the idea that storage can be flexible, infinitely scalable and less costly by running on commodity hardware has had a dramatic impact on the industry.

"Before, it was file or block," she said. "And that was it."

Object storage use cases expand

With higher-performance storage emerging in the cloud and on premises, object storage is expanding beyond the original backup and archiving use cases to workloads such as big data analytics. For instance, Pure Storage and NetApp sell all-flash hardware for object storage, and object software pioneer SwiftStack improves throughput through parallel I/O.

Enrico Signoretti, a senior data storage analyst at GigaOm, said he fields calls every day from IT pros who want to use object storage for more use cases.

"Everyone is working to make object storage faster," Signoretti said. "It's growing like crazy."

Major League Baseball (MLB) is trying to get its developers to move away from files and write to S3 buckets, as it plans a 10- to 20-PB open source Ceph object storage cluster. Truman Boyes, MLB's SVP of infrastructure, said developers have been working with files for so long that it will take time to convince them that the object approach could be easier.

"From an application designer's perspective, they don't have to think about how to have resilient storage. They don't have to worry if they've copied it to the right number of places and built in all these mechanisms to ensure data integrity," Boyes said. "It just happens. You talk to an API, and the API figures it out for you."

Ken Rothenberger, an enterprise architect at General Mills, said Amazon S3 object storage significantly influenced the way he thinks about data durability. Rothenberger said the business often mandates zero data loss, and traditional block storage requires the IT department to keep backups and multiple copies of data.

By contrast, AWS S3 and Glacier stripe data across at least three facilities located 10 km to 60 km away from each other and provide 99.999999999% durability. Amazon technology VP Bill Vass said the 10 km distance is to withstand an F5 tornado that is 5 km wide, and the 60 km is for speed-of-light latency. "Certainly none of the other cloud providers do it by default," Vass said.

AWS storage challengers

Startup Wasabi Technologies claims to provide 99.999999999% durability through a different technology approach, and takes aim at Amazon S3 Standard on price and performance. Wasabi eliminated data egress fees to target one of the primary complaints of AWS storage customers.

Vass countered that egress charges pay for the networking gear that enables access at 8.8 terabits per second on S3. He also noted that AWS frequently lowers storage prices, just as it does across the board for all services.

"You don't usually get that aggressive price reduction from on-prem [options], along with the 11 nines durability automatically spread across three places," Vass said.
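To put the "11 nines" figure in concrete terms, here is a minimal Python sketch. It assumes the 99.999999999% number is an annual, per-object durability and that object losses are independent of one another. Neither assumption is spelled out in the article, and the function names and object count are illustrative only.

```python
from fractions import Fraction


def annual_loss_probability(nines: int) -> Fraction:
    """Chance of losing a single object in one year.

    Assumption: "11 nines" means 99.999999999% durability per object per year.
    """
    return Fraction(1, 10 ** nines)


def expected_objects_lost_per_year(object_count: int, nines: int) -> Fraction:
    """Expected yearly losses, assuming independent per-object failures."""
    return object_count * annual_loss_probability(nines)


def years_per_lost_object(object_count: int, nines: int) -> Fraction:
    """Average number of years between single-object losses."""
    return 1 / expected_objects_lost_per_year(object_count, nines)


if __name__ == "__main__":
    stored = 10_000_000  # hypothetical number of stored objects
    print(expected_objects_lost_per_year(stored, 11))  # 1/10000
    print(years_per_lost_object(stored, 11))           # 10000
```

Under those assumptions, a customer storing 10 million objects would expect to lose a single object roughly once every 10,000 years. Exact fractions are used instead of floats because subtracting 0.99999999999 from 1 in floating point introduces rounding error at this scale.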

Amazon's shortcomings in block and file storage have given rise to a new market of "cloud-adjacent" storage providers, according to Marc Staimer, president of Dragon Slayer Consulting. Staimer said Dell EMC, HPE, Infinidat and others put their storage into facilities located within close proximity of AWS compute nodes. They aim to provide a "faster, more scalable, more secure storage" alternative to AWS, Staimer said.

But the most serious cloud challengers for AWS storage remain Azure and Google. AWS also faces on-premises challenges from traditional vendors that provide the infrastructure for data centers where many enterprises continue to store most of their data.

Cloud vs. on-premises costs

Jevin Jensen, VP of global infrastructure at Mohawk Industries, said he tracks the major cloud providers' prices and keeps an open mind. But for now, he finds that his company is able to keep its "fully loaded" costs at least 20% lower by running its SAP, payroll, warehouse management and other business-critical applications in-house, with on-premises storage.

Jensen said the cost delta between the cloud and Mohawk's on-premises data center was initially about 50%, leaving him to wonder, "Why are we even thinking about cloud?" He said the margin dropped to 20% or 30% as AWS and the other cloud providers reduced their prices.

Like many enterprises, Mohawk uses the public cloud for SaaS applications and credit card processing. The Georgia-based global flooring manufacturer also has Azure for e-commerce. Jensen said the mere prospect of moving more workloads and data off-site enables Mohawk to secure better discounts from its infrastructure suppliers.

"They know we have stuff in Azure," Jensen said. "They know we can easily go to Amazon."