
Panasas storage revs up parallelization for HPC workloads

Panasas storage software focuses on novel method to boost capacity and efficiency. Dynamic Data Acceleration in PanFS shuttles data to disk or flash based on file size.

Panasas upgraded its NAS platform to ease data management associated with AI and high-performance computing.

The update adds Dynamic Data Acceleration (DDA) to PanFS, the operating system for Panasas ActiveStor storage nodes. The enhanced PanFS provides a single tier of disk and flash optimized for mixed file workloads.

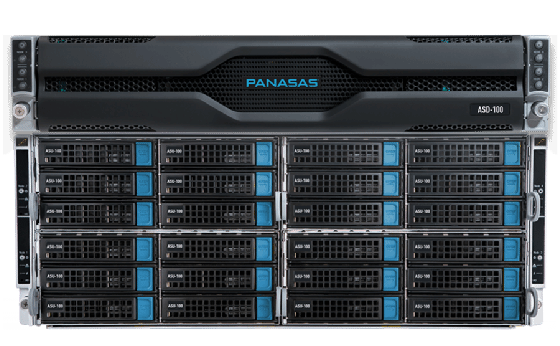

Panasas decoupled its storage hardware from PanFS two years ago, when the vendor introduced ActiveStor Ultra nodes as part of a product rebranding. However, PanFS is not available under a software-only license. Panasas sells PanFS exclusively on commodity-based ActiveStor hardware nodes that support standard Linux hardware drivers.

Placement by file size

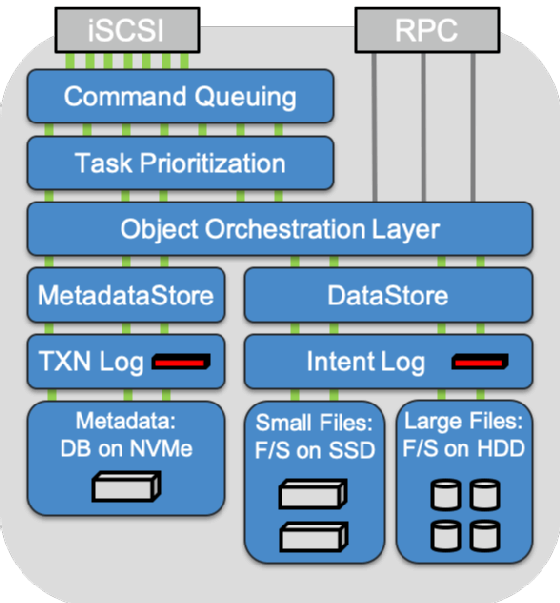

Parallel file systems typically rely on storage tiering to move data between devices. Tiering algorithms decide whether data belongs on flash or disk based on how frequently it is accessed. Panasas said DDA does not use tiering, although the architecture is operationally similar.

DDA automatically assigns large files to disk and smaller files to SSDs. NVDIMMs handle intent logs, and NVMe drives accelerate metadata operations. The Panasas storage software dynamically adjusts to maximize capacity and performance as workloads change. PanFS layers a Portable Operating System Interface (POSIX)-compliant file interface over a scale-out object storage back end and uses erasure coding as part of its data protection.
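To make the contrast with access-frequency tiering concrete, here is a minimal sketch of size-based placement. It is not Panasas code. The article does not say where PanFS draws the line between small and large files or how it adapts that line, so the 64 KiB cutoff, the fill thresholds, the `Pool` class and the `route` and `adjust_cutoff` functions are all hypothetical. Only the device roles (intent logs on NVDIMM, metadata on NVMe, small files on SSD, large files on HDD) come from the article.

```python
from dataclasses import dataclass

KIB = 1024
MIB = 1024 * KIB


@dataclass
class Pool:
    """A toy storage pool that tracks capacity and usage in bytes."""
    name: str
    capacity: int
    used: int = 0

    def fill(self) -> float:
        return self.used / self.capacity


def route(kind: str, size: int, cutoff: int) -> str:
    """Pick a device class for a write.

    Intent-log and metadata writes go to NVDIMM and NVMe, as described in
    the article. File data is placed by size alone (small -> SSD,
    large -> HDD); nothing here tracks how often a file is accessed,
    which is what a tiering scheme would do.
    """
    if kind == "intent_log":
        return "nvdimm"
    if kind == "metadata":
        return "nvme"
    return "ssd" if size <= cutoff else "hdd"


def adjust_cutoff(cutoff: int, ssd: Pool,
                  high_water: float = 0.8, low_water: float = 0.5) -> int:
    """Hypothetical feedback rule: halve the size cutoff when flash is
    filling up, double it when flash has spare room, within fixed bounds."""
    if ssd.fill() > high_water:
        return max(cutoff // 2, 4 * KIB)
    if ssd.fill() < low_water:
        return min(cutoff * 2, 1 * MIB)
    return cutoff
```

With a 64 KiB cutoff, a 4 KiB file routes to `"ssd"` and a 100 MiB file routes to `"hdd"`. If the SSD pool is 90% full, `adjust_cutoff` shrinks the cutoff to 32 KiB so that fewer new files land on flash. A feedback loop like this is one plausible way to "dynamically adjust" placement; the article does not describe PanFS's actual mechanism.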

"With the newest PanFS, Panasas is basically saying all data is hot, and they divide the tiers instead by the size of the file. With a few additional optimizations, such as changing the block lengths to better suit the file sizes, they claim a boost in performance on both sides, without demoting data to cold status," said Addison Snell, CEO of Intersect360 Research, an IT analyst firm specializing in high-performance computing (HPC) data trends.

"Another way to look at it: This architecture optimizes for IOPS on small files and bandwidth on large files as a methodology for improving overall scalability," Snell said.
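Snell's point can be illustrated with a simple service-time model: reading a file costs a fixed per-operation overhead (set by the device's IOPS) plus a transfer time (set by its bandwidth). The device figures below are round, hypothetical numbers chosen only to show the shape of the trade-off. They are not measurements of ActiveStor hardware, and the model assumes one I/O operation per file.

```python
def service_time(size_bytes: float, iops: float, bandwidth_bps: float) -> float:
    """Seconds to read one file: one operation's overhead plus transfer time."""
    return 1.0 / iops + size_bytes / bandwidth_bps


# Hypothetical, round device figures for illustration only.
HDD = {"iops": 200, "bandwidth_bps": 250e6}
SSD = {"iops": 50_000, "bandwidth_bps": 500e6}

small, large = 4096, 1e9  # a 4 KiB file and a 1 GB file

small_ratio = service_time(small, **HDD) / service_time(small, **SSD)
large_ratio = service_time(large, **HDD) / service_time(large, **SSD)
print(f"small file: HDD/SSD time ratio = {small_ratio:.0f}x")
print(f"large file: HDD/SSD time ratio = {large_ratio:.1f}x")
```

Under these assumptions, the small file is dominated by per-operation overhead, so flash is well over 100 times faster. The large file is dominated by transfer time, so disk is only about twice as slow, a gap that striping across several drives can close. That is the intuition behind sending small files to flash for IOPS and large files to disk for bandwidth.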

Curtis Anderson, a Panasas software architect, said HPC users struggle to balance accelerated performance against fixed storage budgets. He said the new Panasas storage technique is designed to eliminate manual tuning and control storage costs.

"The challenge in HPC is that it's too costly to buy all-flash, and a hybrid system may give you the capacity but not the performance you need. We deliver software and hardware [optimized] for NFS that delivers performance without archiving," Anderson said.

HPC market in flux

Panasas said each ActiveStor Ultra node contains 16 GB of NVDIMM capacity, 2 TB of NVMe SSD storage, 8 TB of traditional SSD capacity and six 16 TB HDDs, combined with 32 GB of DRAM and dual 25 Gigabit Ethernet connectivity.
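The per-node figures above can be totaled to show how much of a node's raw capacity is flash. This short calculation uses only the numbers Panasas listed, in decimal units. The variable names are illustrative. The totals are raw capacity, before erasure coding and file-system overhead, and the 16 GB of NVDIMM is left out because it holds intent logs rather than file data.

```python
# Per-node figures as listed by Panasas (decimal units).
NVME_TB = 2
SSD_TB = 8
HDD_COUNT = 6
HDD_TB = 16

hdd_total_tb = HDD_COUNT * HDD_TB        # disk capacity per node
flash_total_tb = NVME_TB + SSD_TB        # NVMe plus traditional SSD
raw_total_tb = hdd_total_tb + flash_total_tb
flash_share = flash_total_tb / raw_total_tb

print(hdd_total_tb, flash_total_tb, raw_total_tb)  # 96 10 106
print(f"{flash_share:.1%}")                        # 9.4%
```

Roughly nine-tenths of each node's raw capacity is disk, which is consistent with DDA's approach of reserving the comparatively small flash pool for small files and metadata.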

HPC storage has typically been reserved for research labs and similar institutions, but mainstream enterprises are exploring HPC for AI, big data, data science and machine learning, Snell said.

Snell said COVID-19 adversely affected HPC storage, with sales expected to drop in 2020 before a "catch up" in 2021. He said that by separating PanFS from the hardware, Panasas could help organizations improve the scalability of data management without an expensive overhaul.