
Composable architecture: Future-proofing AI expansion

Data center admins should adopt a composable architecture to improve resource utilization, reduce costs and enhance AI workload efficiency for faster deployment and adaptability.

Composable architecture enables more adaptable and responsive AI ecosystems and IT resource delivery.

Emerging technologies and digital demands far exceed legacy data centers' compute, storage and networking capabilities.

However, because composable architecture is modular and flexible, IT teams can integrate new technologies into the stack more easily. Administrators can swap and reuse independent, API-driven modules without disrupting the rest of the IT system.

Learn how composable architecture can maximize resource utilization, reduce AI deployment CapEx/OpEx, ease IT management and support diverse workloads.

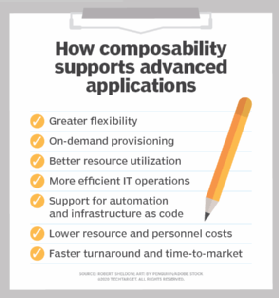

Composability: Key benefits

Today, enterprises increasingly struggle to maintain modern workloads that are dynamic, data-intensive and constantly evolving. The difficulty often stems from siloed resources and fragmented data. Composable architecture helps administrators, analysts and engineers streamline management tasks and perform the extensive data analysis that intelligent workloads such as machine learning (ML) training and LLM integration require. Composability can also significantly reduce latency.

Scalability

As business demands change, companies need on-demand scalability and a way to group IT infrastructure into shared resource pools for faster delivery. Gartner has predicted that by 2026, organizations adopting composable architectures will outpace competitors by 80% in the speed of new feature implementation. The advantages include faster configuration, code reuse in application development, and testing and performance verification of individual components or groups of components.
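A shared resource pool can be illustrated with a minimal Python sketch. It assumes a single pool of interchangeable units, such as GPUs, that several workloads draw from on demand and hand back when finished. The `ResourcePool` class and its methods are hypothetical and do not represent any vendor's API. Real composable systems expose pooling through their own management software.

```python
from dataclasses import dataclass, field


@dataclass
class ResourcePool:
    """Hypothetical shared pool of identical units (e.g., GPUs) drawn on by many workloads."""

    name: str
    capacity: int
    allocations: dict[str, int] = field(default_factory=dict)

    @property
    def available(self) -> int:
        # Units not currently held by any workload.
        return self.capacity - sum(self.allocations.values())

    def allocate(self, workload: str, units: int) -> bool:
        """Grant units on demand; refuse rather than oversubscribe the pool."""
        if units <= 0 or units > self.available:
            return False
        self.allocations[workload] = self.allocations.get(workload, 0) + units
        return True

    def release(self, workload: str) -> int:
        """Return all of a workload's units to the pool and report how many were freed."""
        return self.allocations.pop(workload, 0)


if __name__ == "__main__":
    gpus = ResourcePool("gpu", capacity=8)
    print(gpus.allocate("ml-training", 6))  # True
    print(gpus.allocate("inference", 4))    # False: only 2 units are free
    print(gpus.release("ml-training"))      # 6
    print(gpus.allocate("inference", 4))    # True: freed units are reused
```

The pool refuses a request it cannot fully satisfy instead of granting a partial allocation. That keeps capacity accounting simple in this sketch. A production scheduler might instead queue requests or preempt lower-priority workloads.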

Adaptability

A rack-and-blade approach to high-density computing works adequately for predictable workloads in legacy data centers. AI workloads, however, require IT infrastructure that can adapt and respond far more quickly. Composable architectures enable companies to adopt these transformative technologies faster and future-proof infrastructure to deliver the high-throughput performance that emerging use cases, from on-premises AI to quantum computing, demand.

Cost-efficiency

Because composable resources are pooled and more fully utilized, they can also reduce long-term costs. For example, organizations can lower OpEx by customizing AI and ML deployments more precisely. They can replace individual components or swap in alternate modules for data ingestion, agentic AI, model evaluation and performance monitoring.
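Replacing one module in an AI pipeline while leaving the others untouched can be sketched in Python. In this sketch, every stage shares a single, simple contract: it takes a list of records and returns a new list. The stage functions `ingest_csv_rows`, `ingest_strict` and `evaluate_length` are hypothetical placeholders for real data ingestion and model evaluation modules.

```python
from typing import Callable

# Assumed contract: a stage takes a list of records and returns a new list.
Stage = Callable[[list[dict]], list[dict]]


def run_pipeline(stages: dict[str, Stage], records: list[dict]) -> list[dict]:
    """Run stages in insertion order; each stage sees only the previous stage's output."""
    for stage in stages.values():
        records = stage(records)
    return records


def ingest_csv_rows(records: list[dict]) -> list[dict]:
    # Hypothetical ingestion module: normalize field names to lowercase.
    return [{k.lower(): v for k, v in r.items()} for r in records]


def ingest_strict(records: list[dict]) -> list[dict]:
    # Alternate ingestion module: same normalization, but drop rows with empty text.
    return [r for r in ingest_csv_rows(records) if r.get("text")]


def evaluate_length(records: list[dict]) -> list[dict]:
    # Hypothetical evaluation module: score each record by its text length.
    return [{**r, "score": len(r.get("text", ""))} for r in records]


if __name__ == "__main__":
    data = [{"Text": "hello"}, {"Text": ""}]
    pipeline = {"ingest": ingest_csv_rows, "evaluate": evaluate_length}
    print(run_pipeline(pipeline, data))  # two scored records
    pipeline["ingest"] = ingest_strict   # swap one module; the evaluator is unchanged
    print(run_pipeline(pipeline, data))  # only the non-empty record remains
```

Every stage obeys the same input/output contract, so replacing the ingestion module is a one-line change. None of the other stages need to know about the swap.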

Maximizing AI workloads

Inefficient IT processing slows ML training, causes inference lag and reduces overall AI productivity. Composability counters these inefficiencies and maximizes AI workload performance by providing massive parallel compute capacity, independent scaling and GPU acceleration. Components communicate through composable APIs, which helps ensure integration and interoperability across multiple services and platforms.

AI workloads require just-in-time hardware reconfiguration and dynamic, on-demand resource provisioning. Unified, open APIs act as the connective tissue between microservices and give IT teams programmatic control. They also enable flexible management across projects, reducing downtime when technicians rearrange or add components. Because components in a composable architecture are highly reusable, administrators and engineers can quickly repurpose them across multiple deployments, saving time and conserving resources.
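Just-in-time provisioning and reuse can be modeled as a control plane that binds CPUs, GPUs and storage from separate pools into a logical node. When the job finishes, the control plane returns those resources so another deployment can use them. The Python sketch below assumes a hypothetical `Composer` class and `NodeSpec` request type. Real composable platforms expose this capability through their own management APIs, whose calls differ from the ones shown here.

```python
from dataclasses import asdict, dataclass


@dataclass(frozen=True)
class NodeSpec:
    """Resources a workload requests; field names are illustrative assumptions."""

    cpus: int
    gpus: int
    storage_tb: int


class Composer:
    """Hypothetical control plane that binds pooled resources into logical nodes."""

    def __init__(self, cpus: int, gpus: int, storage_tb: int) -> None:
        self.free = {"cpus": cpus, "gpus": gpus, "storage_tb": storage_tb}
        self.nodes: dict[str, NodeSpec] = {}

    def compose(self, name: str, spec: NodeSpec) -> bool:
        """Build a node only if every pool can cover the request (all-or-nothing)."""
        need = asdict(spec)
        if name in self.nodes or any(need[k] > self.free[k] for k in need):
            return False  # never leave a half-built node holding resources
        for k, v in need.items():
            self.free[k] -= v
        self.nodes[name] = spec
        return True

    def decompose(self, name: str) -> None:
        """Return a node's resources to the pools so other deployments can reuse them."""
        for k, v in asdict(self.nodes.pop(name)).items():
            self.free[k] += v


if __name__ == "__main__":
    rack = Composer(cpus=128, gpus=8, storage_tb=100)
    print(rack.compose("training", NodeSpec(cpus=64, gpus=8, storage_tb=50)))   # True
    print(rack.compose("inference", NodeSpec(cpus=16, gpus=2, storage_tb=10)))  # False: no GPUs free
    rack.decompose("training")
    print(rack.compose("inference", NodeSpec(cpus=16, gpus=2, storage_tb=10)))  # True
```

The all-or-nothing check reflects a common design choice: a partially composed node would strand resources without running anything.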

Transition approaches and challenges

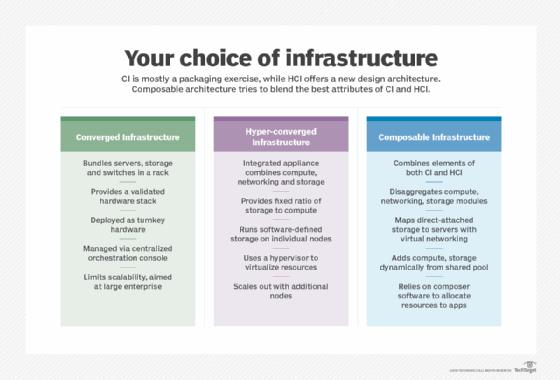

Migrating legacy IT infrastructure into a composable architecture involves breaking down monolithic and siloed compute, storage and networking into independent components that administrators can orchestrate and manage through software.

Organizations can choose one of three transition approaches: convert to an API-first central hub, replace the entire legacy stack or incrementally decommission the legacy stack.

Convert APIs

IT teams can choose an API-first conversion, which routes all component communications through a central hub. This approach integrates old and new technologies while keeping the monolithic structure in place.
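A central hub that mediates all component communications can be sketched in Python as a simple router. New modules register handlers directly, while an adapter wraps the legacy monolith so that its internal interface stays unchanged. The `IntegrationHub` class, the `LegacyBilling` stand-in and the topic names are all hypothetical. Production hubs are typically API gateways or message brokers with far richer routing, security and retry logic.

```python
from typing import Callable

# Assumed message format: components exchange plain dictionaries.
Handler = Callable[[dict], dict]


class IntegrationHub:
    """Hypothetical central hub: every component call goes through route()."""

    def __init__(self) -> None:
        self._routes: dict[str, Handler] = {}

    def register(self, topic: str, handler: Handler) -> None:
        self._routes[topic] = handler

    def route(self, topic: str, message: dict) -> dict:
        if topic not in self._routes:
            raise LookupError(f"no component registered for {topic!r}")
        return self._routes[topic](message)


class LegacyBilling:
    """Stand-in for a monolith whose internal interface is left as-is."""

    def calc_inv(self, cust_id: str, amt_cents: int) -> str:
        return f"{cust_id}:{amt_cents}"


def legacy_billing_adapter(legacy: LegacyBilling) -> Handler:
    # Translate the hub's message format into the monolith's own call signature.
    def handle(msg: dict) -> dict:
        return {"invoice_ref": legacy.calc_inv(msg["customer"], msg["amount_cents"])}

    return handle


if __name__ == "__main__":
    hub = IntegrationHub()
    hub.register("billing.invoice", legacy_billing_adapter(LegacyBilling()))
    hub.register("ml.score", lambda msg: {"score": len(msg["text"])})  # a new module
    print(hub.route("billing.invoice", {"customer": "c42", "amount_cents": 1999}))
    print(hub.route("ml.score", {"text": "abc"}))
```

Old and new components are reached the same way, through `route()`. Callers therefore do not need to know which side of the monolith a capability lives on.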

Replace the legacy stack

Engineers and IT teams in small companies and start-ups can take a replatforming approach, which replaces legacy stack functionality wholesale with cloud-native, modular elements. Full replatforming is rapid but high-risk. The business must maintain continuity throughout the transition, which can be difficult, and the switchover can disrupt operations.

Incremental decommissioning of legacy stack

A more gradual path to a composable architecture is to incrementally decommission each piece of the legacy stack and replace it with modular services. IT teams continue replacing legacy components until the entire infrastructure is composable.
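Incremental decommissioning resembles what software engineers often call the strangler fig pattern. A router sends each capability to its new modular service once one exists and falls back to the legacy stack otherwise. The Python sketch below is a minimal illustration under that assumption. `MigrationRouter`, its methods and the capability names are hypothetical.

```python
from typing import Callable

# Assumed interface: every service, legacy or new, takes and returns a dict.
Service = Callable[[dict], dict]


class MigrationRouter:
    """Route each capability to a new service if migrated, else to the legacy stack."""

    def __init__(self, legacy: Service) -> None:
        self.legacy = legacy
        self.migrated: dict[str, Service] = {}

    def cut_over(self, capability: str, service: Service) -> None:
        # Move one capability at a time off the legacy stack.
        self.migrated[capability] = service

    def handle(self, capability: str, request: dict) -> dict:
        service = self.migrated.get(capability, self.legacy)
        return service(request)

    def legacy_fully_decommissioned(self, all_capabilities: set[str]) -> bool:
        # The legacy stack can be retired once no capability still routes to it.
        return all_capabilities.issubset(self.migrated)


if __name__ == "__main__":
    router = MigrationRouter(lambda req: {"served_by": "legacy"})
    router.cut_over("search", lambda req: {"served_by": "search-svc"})
    print(router.handle("search", {}))   # served by the new module
    print(router.handle("billing", {}))  # still served by the legacy stack
    print(router.legacy_fully_decommissioned({"search", "billing"}))  # False
```

Each cutover is small and reversible: removing an entry from `migrated` sends traffic back to the legacy path. That reversibility is the main advantage over wholesale replatforming.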

The strategic necessity of composability

While a modular approach is crucial for efficient and high-performing AI and ML deployments and emerging technologies, it can also pose testing, security and workflow challenges.

Combining multiple services, managing vendor relationships and dealing with unforeseen technical debt require substantial IT resources and considerable CapEx and OpEx. To gauge deployment effectiveness, IT teams must apply thorough testing and standardized QA to numerous independent, dynamic components whose configurations change over time.
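Standardized QA across interchangeable components can take the form of a contract test. The same checks run against every implementation of an interface, so a replacement module must pass before it is swapped in. The Python sketch below assumes a hypothetical `Embedder` interface and three toy implementations. The specific checks (non-empty float lists, fixed vector length, deterministic output) are illustrative choices, not a standard.

```python
from typing import Protocol


class Embedder(Protocol):
    """Hypothetical interface that every embedding component must satisfy."""

    def embed(self, text: str) -> list[float]: ...


class LengthEmbedder:
    def embed(self, text: str) -> list[float]:
        return [float(len(text))]


class VowelEmbedder:
    def embed(self, text: str) -> list[float]:
        return [float(sum(c in "aeiou" for c in text.lower())), float(len(text))]


class PerCharEmbedder:
    # Deliberately violates the contract: vector length depends on the input.
    def embed(self, text: str) -> list[float]:
        return [float(ord(c)) for c in text]


SAMPLES = ["", "hello", "Composable architecture"]


def check_embedder_contract(component: Embedder) -> list[str]:
    """Return contract violations; an empty list means the component passes."""
    failures = []
    vectors = [component.embed(s) for s in SAMPLES]
    for sample, vec in zip(SAMPLES, vectors):
        if not isinstance(vec, list) or not vec or not all(isinstance(x, float) for x in vec):
            failures.append(f"{sample!r}: expected a non-empty list of floats")
    if len({len(v) for v in vectors}) != 1:
        failures.append("vector length varies with input")
    if component.embed(SAMPLES[1]) != vectors[1]:
        failures.append("output is not deterministic")
    return failures


if __name__ == "__main__":
    for impl in (LengthEmbedder(), VowelEmbedder(), PerCharEmbedder()):
        print(type(impl).__name__, check_embedder_contract(impl))
```

Running one shared suite against each candidate keeps QA consistent as components and configurations change. Only the list of implementations grows.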

Components draw on diverse sources, such as databases, storage pools, IoT devices and cloud services, and each has its own security requirements and potential vulnerabilities. Organizations of all sizes need knowledgeable IT teams and security specialists who can perform regular assessments and vulnerability scans across the infrastructure.

Comprehensive cybersecurity should include end-to-end encryption, strict access control, user and device authentication, and thorough data safeguards and policies. Data should also remain consistent and accurate across all components. Without these safeguards, data integrity and reliability can decline, affecting AI responses, ML training, model inferencing and innovation.
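One way to check that data stays consistent across components is to compare content fingerprints of each record's copies. The Python sketch below assumes records are JSON-serializable dictionaries. It hashes a canonical serialization with SHA-256, so key order does not matter. The component names in the example (`feature_store`, `training_cache`) are hypothetical.

```python
import hashlib
import json


def fingerprint(record: dict) -> str:
    """Stable SHA-256 digest of a record, independent of key order."""
    canonical = json.dumps(record, sort_keys=True, separators=(",", ":"))
    return hashlib.sha256(canonical.encode("utf-8")).hexdigest()


def find_inconsistencies(copies: dict[str, dict[str, dict]]) -> set[str]:
    """Map of component name -> {record id -> record} in; ids that differ or are missing out."""
    all_ids = set().union(*(c.keys() for c in copies.values()))
    bad = set()
    for rid in all_ids:
        # None marks a component that lacks the record entirely.
        digests = {fingerprint(c[rid]) if rid in c else None for c in copies.values()}
        if len(digests) > 1:
            bad.add(rid)
    return bad


if __name__ == "__main__":
    copies = {
        "feature_store": {"u1": {"age": 30, "tier": "gold"}, "u2": {"age": 41}},
        "training_cache": {"u1": {"tier": "gold", "age": 30}, "u2": {"age": 40}},
    }
    print(find_inconsistencies(copies))  # {'u2'}: u1 matches despite key order
```

Comparing digests rather than full records keeps the check cheap to run across many components. The fingerprints could also be exchanged between systems without moving the underlying data.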

Kerry Doyle writes about technology for a variety of publications and platforms. His current focus is on issues relevant to IT and enterprise leaders across a range of topics, from nanotech and cloud to distributed services and AI.