
Explore edge computing services in the cloud

Discover the powerful advantages of edge computing over cloud computing, delivering faster performance and significantly lowering data transfer costs. Dive into the essential services provided by leading industry players.

Is it time to push your workloads to the edge? Edge computing can help organizations achieve faster workload performance, while reducing data transfer costs and security risks.

AWS, Microsoft Azure and Google Cloud offer services that simplify workload deployment at the edge, while integrating them with a provider's cloud platform. Other platforms, such as Red Hat OpenShift, could also be viable for organizations to set up and manage edge architectures.

Let's explore how edge computing works, its relation to the cloud and what the major vendors offer for edge computing deployment and management.

What is edge computing?

Edge computing places storage and/or compute resources close to the data source or user. When organizations use edge computing, they deploy workloads in physical locations near the places where data is produced or consumed.

A retailer could set up an edge computing infrastructure to host payment processing applications within physical stores. Any payment data the applications need to collect and process is available on the same local infrastructure. Since data doesn't need to be transferred to a remote data center, the result is a faster, smoother checkout process for customers.

Retail payment processing is an example of an edge computing use case where rapid processing is helpful but not strictly critical. Other situations require real-time data processing and depend on edge computing to work at all.

Consider autonomous vehicles. Without edge computing, a self-driving car would have to send data to a cloud data center for processing before it could react to sudden changes in road conditions. By processing data at the edge, autonomous vehicles can analyze real-time data and react almost instantaneously.

Edge computing vs. cloud computing

The concept of edge computing became popular following the shift toward cloud-centric infrastructures starting in the late 2000s. Edge computing can solve some of the core performance, reliability and security challenges associated with the cloud. Unlike edge, the cloud relies on central data centers that are typically not physically close to end users or data sources.

Latency

Cloud computing platforms must transfer data over the internet before it is processed or stored within cloud data centers. Often, the data is sent back out over the internet to end users after it has been processed. Because internet traffic travels farther and passes through more network hops than traffic on a local network, it usually has higher latency.

In the cloud, it can take anywhere from tens of milliseconds to a few seconds for an application to receive a user's request, process it and send back the result, depending on distance and network conditions. On edge infrastructure, response times can be reduced to a few milliseconds because requests don't have to traverse the internet.
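The effect of distance on latency can be sketched with some simple arithmetic. The example below is a rough illustration, not a measurement: it assumes signals travel through optical fiber at about 200 km per millisecond, and the distances and processing time are hypothetical. It also ignores routing hops, queuing and congestion, so real response times are higher than this lower bound.

```python
# Signals in optical fiber travel at roughly two-thirds of the speed of
# light in a vacuum, or about 200 km per millisecond (an approximation).
FIBER_KM_PER_MS = 200.0


def round_trip_ms(distance_km: float, processing_ms: float = 0.0) -> float:
    """Lower bound on response time: propagation both ways plus processing."""
    if distance_km < 0 or processing_ms < 0:
        raise ValueError("distance and processing time must be non-negative")
    propagation_ms = 2 * distance_km / FIBER_KM_PER_MS
    return propagation_ms + processing_ms


# Hypothetical distances: a cloud region 2,000 km away and an edge site
# 10 km away, each spending 5 ms on processing.
cloud_ms = round_trip_ms(2000, processing_ms=5)
edge_ms = round_trip_ms(10, processing_ms=5)
print(f"cloud: {cloud_ms:.1f} ms, edge: {edge_ms:.1f} ms")
```

Even under these idealized assumptions, the distant data center spends most of its response time on propagation, while the nearby edge site spends almost all of it on processing.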

Note that a content delivery network (CDN) is one way to reduce cloud latency without adopting a full edge strategy. However, CDNs add their own costs, and because they mainly speed up the delivery of cached content, they might still not deliver the same level of performance for workloads that process data.

Reliability

If a cloud data center becomes unavailable, so do the applications and data it hosts. Although cloud outages are rare, they can happen due to issues like network routing problems or damage to a cloud provider's infrastructure.

Edge computing offers the advantage of storing critical data locally, where it remains available to the workloads that need it. However, edge infrastructure can also fail. Small edge data centers often lack the physical security and resilience capabilities of large cloud data centers.
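One common way to take advantage of local storage is a store-and-forward pattern: the edge site keeps records locally and uploads them when the cloud is reachable. The sketch below is a minimal, in-memory illustration of that idea. The class name, the `send` callback and the use of `ConnectionError` to signal an outage are all assumptions made for the example, and a production system would also need durable storage.

```python
from collections import deque
from typing import Callable, Deque


class StoreAndForwardBuffer:
    """Holds records at the edge until an upload to the cloud succeeds."""

    def __init__(self, max_records: int = 1000) -> None:
        # When the buffer is full, the oldest record is dropped so that
        # local storage stays bounded.
        self._pending: Deque[dict] = deque(maxlen=max_records)

    def record(self, item: dict) -> None:
        self._pending.append(item)

    def flush(self, send: Callable[[dict], None]) -> int:
        """Upload pending records in order; stop at the first failure."""
        sent = 0
        while self._pending:
            try:
                send(self._pending[0])
            except ConnectionError:
                break  # cloud unreachable: keep the rest for the next attempt
            self._pending.popleft()
            sent += 1
        return sent

    def __len__(self) -> int:
        return len(self._pending)


def offline_send(item: dict) -> None:
    """Hypothetical uploader that simulates a cloud outage."""
    raise ConnectionError("cloud unreachable")


buffer = StoreAndForwardBuffer()
buffer.record({"sale_id": 1})
buffer.record({"sale_id": 2})
print(buffer.flush(offline_send), "sent,", len(buffer), "still buffered")
```

Local workloads keep running during the outage, and a later call to `flush` with a working uploader drains the backlog in order.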

Security

Data transferred between remote locations and cloud data centers is exposed to potential security risks when it moves over insecure public networks. Anyone who can access the networks could eavesdrop on traffic passing through them. Encrypting the data makes it unreadable to eavesdroppers in most cases. However, network traffic isn't always encrypted by default, and when it is, there is a risk that attackers could break encryption keys.

By minimizing the need to transfer data across public networks, edge computing lessens potential security risks, regardless of whether the data is encrypted in transit. Edge computing can still rely on a public network. But the data doesn't need to move as far, so there is a lower risk of eavesdropping.
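When data does have to cross a public network, for example when an edge site syncs with a cloud service, it helps to make encryption an explicit requirement instead of relying on defaults. The following is a minimal sketch using Python's standard `ssl` module. The function name is hypothetical, and the choice of TLS 1.2 as the minimum version is an assumption, not a recommendation from any provider.

```python
import ssl


def strict_tls_context() -> ssl.SSLContext:
    """Client-side TLS settings for an edge-to-cloud connection."""
    # create_default_context() enables certificate verification and
    # hostname checking for client connections.
    context = ssl.create_default_context()
    # Refuse protocol versions older than TLS 1.2 (assumed policy).
    context.minimum_version = ssl.TLSVersion.TLSv1_2
    return context


context = strict_tls_context()
print(context.verify_mode == ssl.CERT_REQUIRED, context.check_hostname)
```

The resulting context can be passed to standard library clients that accept an `SSLContext`, so that unverified or outdated connections fail instead of silently sending data in a weaker form.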

Edge computing use cases

Edge computing can support almost any use case where it's important to minimize latency and/or reduce data security risks. Here are some examples of how businesses in various industries can benefit from edge architectures or devices:

- Healthcare. Internet of medical things devices can collect and process patient data close to the source, enabling real-time reaction to potential health issues.

- Industrial. Edge computing helps to automate manufacturing operations using data streams to control manufacturing equipment.

- Finance. Payment processing and fraud detection performed with edge processing can catch fraud before thieves react and reduce the risk of exfiltration of sensitive data over the network.

- Retail. Edge infrastructure can process point-of-sale data to enable faster checkout.

- Automotive. Autonomous vehicles rely on edge processing to provide real-time reactions to data about environmental conditions.

In all these cases, success requires swift and efficient data processing. Moving data from a point of origin into cloud data centers and back to the original device is too slow.

Edge computing services in the cloud

Each major cloud vendor offers multiple services for organizations that want to build an edge architecture and integrate it with public cloud services. These offerings fall into three main categories: hybrid cloud platforms, network optimization, and IoT deployment and management.

Hybrid cloud platforms

Each major public cloud provider has a hybrid cloud platform, such as AWS Outposts, Azure Local, Azure Stack Edge, Google Cloud Anthos and Google Distributed Cloud. These services aren't specifically edge computing services, but can serve any hybrid cloud computing need. IT teams can use such offerings to deploy public cloud services within a private data center or another local site that hosts edge infrastructure.

If a retailer wants to deploy a local payment processing application inside stores, while still managing workloads using public cloud tools, it could do so on private, local servers managed through a platform like Azure Local or Google Cloud Anthos.

Network optimization

Cloud providers offer services that can optimize network performance for workloads that need to connect to public cloud data centers. Some examples are AWS Local Zones, Azure ExpressRoute and Google Cloud Interconnect. These services work in different ways; some place cloud infrastructure closer to the edge to reduce latency, while others provide private network connections to boost speed, regardless of infrastructure location.

Either way, they have the potential to minimize latency for localized workloads that need to connect to the cloud, and they can help optimize network performance for edge architectures with a public cloud component.

IoT deployment and management

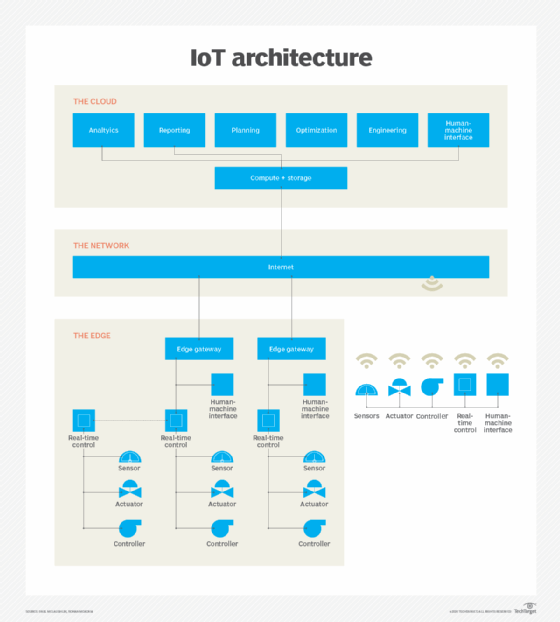

Although not all edge computing use cases involve IoT devices, IoT and edge architectures tend to go hand in hand. IoT devices are often deployed in scattered locations. Because these devices have minimal compute and storage resources, they might need to connect to a remote data center to process or store generated data. They could use a conventional cloud data center for this, but edge infrastructure offers the advantage of minimized network bandwidth and latency.
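To illustrate how edge infrastructure can cut bandwidth, the sketch below shows an edge node reducing a window of raw sensor readings to a single summary record before anything is sent upstream. The function name, the field names and the alert threshold are hypothetical, and the readings are made-up sample values.

```python
from statistics import mean
from typing import Sequence


def summarize_window(readings: Sequence[float], alert_above: float) -> dict:
    """Reduce a window of raw sensor readings to one small summary record."""
    if not readings:
        raise ValueError("readings must not be empty")
    return {
        "count": len(readings),
        "min": min(readings),
        "max": max(readings),
        "mean": mean(readings),
        # Decide locally so an alert doesn't wait on a cloud round trip.
        "alert": max(readings) > alert_above,
    }


# Hypothetical temperature readings from one device, in degrees Celsius.
window = [21.0, 21.5, 22.0, 35.5]
summary = summarize_window(window, alert_above=30.0)
print(summary)
```

In this arrangement, only the summary needs to travel to a cloud data center, while the raw readings and the time-sensitive alert decision stay at the edge.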

The major public clouds offer IoT services to help deploy and manage IoT devices, such as AWS IoT and Azure IoT. Google retired its Cloud IoT Core service in 2023, so Google Cloud customers typically rely on partner offerings for device management. Although these services aren't limited to edge computing use cases, businesses that want to manage IoT networks through the public cloud can add such services as part of an edge management strategy.

Edge computing platforms beyond public cloud

Public cloud services aren't necessary to set up an edge architecture.

One option is Red Hat OpenShift, the Kubernetes-based application management platform. OpenShift's multi-cluster management support can deploy and manage container-based workloads at multiple edge locations, with each location running its own cluster. Any Kubernetes distribution can help set up and manage edge workloads, although not all Kubernetes platforms are designed for edge use cases.
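The one-cluster-per-location model can be sketched by generating the same Kubernetes Deployment manifest for each edge site. This is an illustration only: it builds plain manifests and does not use OpenShift's multi-cluster management tooling. The site names, the `checkout` application and the image URL are hypothetical.

```python
import json
from typing import Dict, List


def deployment_manifest(site: str, image: str, replicas: int = 1) -> Dict:
    """Build a Kubernetes Deployment manifest for one edge site."""
    labels = {"app": "checkout", "site": site}
    return {
        "apiVersion": "apps/v1",
        "kind": "Deployment",
        "metadata": {"name": f"checkout-{site}", "labels": dict(labels)},
        "spec": {
            "replicas": replicas,
            # The selector must match the labels on the pod template.
            "selector": {"matchLabels": dict(labels)},
            "template": {
                "metadata": {"labels": dict(labels)},
                "spec": {"containers": [{"name": "checkout", "image": image}]},
            },
        },
    }


# Hypothetical edge sites, each assumed to run its own cluster.
sites: List[str] = ["store-001", "store-002", "store-003"]
manifests = [
    deployment_manifest(site, "registry.example.com/checkout:1.0")
    for site in sites
]
print(json.dumps(manifests[0], indent=2))
```

Each manifest would then be applied to the cluster at its site. A multi-cluster management layer automates that distribution step and keeps the sites consistent.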

Alternatively, users can deploy and manage workloads across multiple edge locations manually, without the help of automated orchestration and management services. This involves standing up physical servers at multiple edge sites, and then provisioning them with the OSes and workloads to run at the edge. However, this approach is difficult to pull off at scale due to the complexity of management.

Editor's note: This article was updated to include additional information on edge computing services.

Chris Tozzi is a freelance writer, research adviser, and professor of IT and society. He has previously worked as a journalist and Linux systems administrator.