
6 edge monitoring best practices in the cloud

When it comes to application monitoring, edge workloads are outliers -- literally and metaphorically. Learn what sets them apart and how to implement monitoring best practices.

Edge workloads can be a convenient asset for businesses, but they can also complicate certain strategies, monitoring among them.

Some complications stem from the geographic spread of edge devices relative to the rest of an organization's IT estate and from the demands of edge security monitoring. Limited edge device resources and potential data syncing issues are some of the bigger roadblocks to sound edge monitoring and security. Despite these challenges, monitoring edge applications is as critical as monitoring those that reside in traditional cloud data centers.

Keep reading for tips on how to develop an edge-to-cloud monitoring strategy that covers the entirety of your IT estate.

What is edge monitoring?

Edge monitoring is the process of collecting and analyzing logs, metrics and other data from edge workloads and devices. It is critical for the same reasons that monitoring any application or infrastructure is essential. Organizations can only detect performance and security issues through monitoring.

Without adequate edge monitoring in place, performance issues that affect edge devices, such as device failures or high latency, can go unnoticed -- that is, until they disrupt a critical service or users start to complain. Likewise, security breaches that start on edge devices can remain undetected until they escalate into larger attacks.

Monitoring at the edge helps teams to get ahead of issues like these so they can mitigate them proactively. Equally important is the role of this practice in providing critical context that can inform a more comprehensive monitoring strategy that extends to traditional cloud environments.

For example, consider an autonomous vehicle that is tracked by an application hosted in a cloud data center. It relies on local edge sensors to collect and process data to guide the vehicle. If the vehicle were to become unreachable by the cloud-based application, monitoring data from the edge environment could be crucial for troubleshooting the problem.

Edge monitoring challenges

While edge monitoring is crucial, it can be a challenge. The fundamental processes behind edge monitoring are the same as those of cloud monitoring. Yet, carrying out edge monitoring processes can be difficult due to several factors that are inherent to edge devices and networks, including the following:

- Resource limitations. Edge devices can have limited CPU, memory and storage resources. As a result, they are unable to store or process as much monitoring data as conventional infrastructure.

- Unique data formats. Some edge workloads generate logs and metrics in unique formats that conventional, cloud-based monitoring software might not support.

- Lack of monitoring data. Edge devices don't always generate monitoring data. They might have been designed without monitoring in mind.

- Intermittent network connectivity. Edge devices aren't always continuously connected to the network, which makes it challenging to collect monitoring data from them. It is possible to collect the data after a device reconnects, but real-time monitoring is impossible with this approach.

- Latency and syncing issues. Data syncing issues can occur when integrating monitoring data from multiple edge devices. Due to network latency, some devices can upload data faster than others. This makes it a challenge to determine whether events on different devices occurred at the same time, which complicates the detection of performance or security problems.

- Large data volumes. Taken together, edge devices can produce a large volume of data, which makes collecting, integrating and correlating all the information from the devices more difficult. Even if the logs and metrics from each edge device are small, comparing and merging discrete data sources from thousands of devices is no simple feat.

- Lack of edge-focused monitoring tools. Most monitoring and observability tools are for conventional infrastructure and workloads. Their default data collection and anomaly detection rules are unlikely to work well with edge devices because they don't address considerations that are unique to the edge.
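Two of these challenges, intermittent connectivity and out-of-order arrival, are often handled by buffering events on the device and ordering them by device-side timestamps once they reach the cloud. The following Python sketch illustrates the idea under some assumptions: the `Event` fields, the `StoreAndForwardBuffer` class and the `send` callback are hypothetical names, and device clocks are assumed to be reasonably well synchronized.

```python
from collections import deque
from dataclasses import dataclass
from typing import Callable, Deque, List


@dataclass
class Event:
    device_id: str
    device_time: float  # seconds since epoch, stamped on the device (assumes synced clocks)
    message: str


class StoreAndForwardBuffer:
    """Bounded in-memory buffer that holds events while a device is offline."""

    def __init__(self, max_events: int) -> None:
        # deque(maxlen=...) drops the oldest entry when full, which caps
        # memory use on a resource-constrained device.
        self._events: Deque[Event] = deque(maxlen=max_events)

    def record(self, event: Event) -> None:
        self._events.append(event)

    def flush(self, send: Callable[[List[Event]], bool]) -> int:
        """Try to upload everything buffered; keep the events if the upload fails."""
        if not self._events:
            return 0
        batch = list(self._events)
        if send(batch):
            self._events.clear()
            return len(batch)
        return 0


def merge_by_device_time(batches: List[List[Event]]) -> List[Event]:
    """Order events from many devices by when they happened, not when they arrived."""
    merged = [event for batch in batches for event in batch]
    return sorted(merged, key=lambda e: e.device_time)
```

A bounded buffer trades completeness for predictable memory use: when a device stays offline long enough to fill it, the oldest events are lost. Whether that tradeoff is acceptable depends on the workload.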

6 best practices for monitoring from edge to cloud

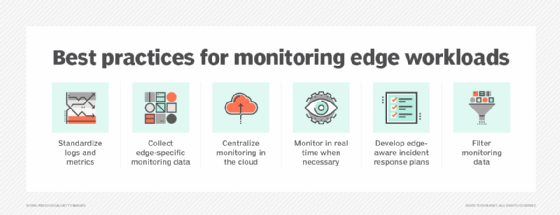

Despite these challenges, it's possible -- and essential -- to develop a monitoring strategy that covers all assets, from edge environments to central cloud data centers. Best practices for monitoring edge workloads include the following.

1. Standardize logs and metrics

To the extent possible, standardize the types and structures of logs, metrics and other data sources. The more consistent data sources are, the easier it is to ingest them into monitoring tools and detect anomalies effectively.
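As a rough illustration, standardization can be as simple as mapping each device's native record onto one shared schema before ingestion. The Python sketch below assumes hypothetical field names (`ts`, `time`, `temp_f`, `severity` and so on); real devices will differ, and the target schema is only an example.

```python
from datetime import datetime, timezone
from typing import Any, Dict


def normalize_record(raw: Dict[str, Any], device_id: str) -> Dict[str, Any]:
    """Map a device-specific record onto one shared schema (hypothetical field names)."""
    # Different firmware might call the same field "ts", "time" or "timestamp".
    epoch = raw.get("ts", raw.get("time", raw.get("timestamp")))
    if epoch is None:
        raise ValueError("record has no timestamp field")
    # Some devices might report temperature in Fahrenheit; convert to Celsius.
    if "temp_f" in raw:
        temp_c = (raw["temp_f"] - 32) * 5 / 9
    else:
        temp_c = raw.get("temp_c")
    return {
        "device_id": device_id,
        "timestamp": datetime.fromtimestamp(epoch, tz=timezone.utc).isoformat(),
        "temperature_c": temp_c,
        "level": str(raw.get("level", raw.get("severity", "info"))).lower(),
    }
```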

2. Collect edge-specific monitoring data

In addition to collecting standard logs and metrics, be sure that a comprehensive edge-to-cloud monitoring strategy also includes data that is specific to the edge. Track uptime on a device-by-device basis. Knowing the latency of individual edge devices is critical, too. Network throughput should be monitored in a granular way so that admins can detect local network problems before they cause disruption.
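One way to picture per-device tracking is a small record that accumulates reachability checks and latency samples for each device. This is a minimal Python sketch; the `DeviceHealth` class and its fields are hypothetical and not taken from any particular monitoring tool.

```python
from dataclasses import dataclass, field
from statistics import median
from typing import List


@dataclass
class DeviceHealth:
    device_id: str
    checks: int = 0
    successes: int = 0
    latencies_ms: List[float] = field(default_factory=list)

    def record_check(self, reachable: bool, latency_ms: float = 0.0) -> None:
        """Record one reachability probe and, if it succeeded, its latency."""
        self.checks += 1
        if reachable:
            self.successes += 1
            self.latencies_ms.append(latency_ms)

    def uptime_percent(self) -> float:
        # Share of probes that reached the device; 0.0 if it was never probed.
        return 100.0 * self.successes / self.checks if self.checks else 0.0

    def median_latency_ms(self) -> float:
        # The median is less sensitive to a single slow probe than the mean.
        return median(self.latencies_ms) if self.latencies_ms else float("nan")
```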

3. Centralize monitoring in the cloud

In general, it's best to pull monitoring data from edge devices into the cloud, where it can be processed on resource-rich servers. There are exceptions, however. Organizations that need to detect anomalies in real time and can't tolerate the latency of moving monitoring data into the cloud might rely on monitoring software at the edge instead.

Imagine an admin who needs to monitor the brake controls in a self-driving car so that the vehicle's computer can instruct it to switch to a backup brake if the main brake fails. Having to send brake sensor monitoring data into the cloud, process it and wait for a response might take too long. Monitoring the sensors locally could activate the backup brake system in real time.
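The local half of that scenario can be sketched as a threshold check that runs on the device and triggers a fallback without a round trip to the cloud. The Python below is purely illustrative and nowhere near safety-grade; `LocalThresholdMonitor`, its parameters and the `on_failure` callback are hypothetical.

```python
from typing import Callable


class LocalThresholdMonitor:
    """Runs on the edge device and reacts without waiting on the cloud."""

    def __init__(self, min_value: float, max_bad_readings: int,
                 on_failure: Callable[[], None]) -> None:
        self.min_value = min_value
        self.max_bad_readings = max_bad_readings
        self.on_failure = on_failure
        self._bad_streak = 0
        self.tripped = False

    def observe(self, reading: float) -> None:
        if self.tripped:
            return  # the fallback has already been activated
        # Count consecutive out-of-range readings so that one noisy
        # sample does not trip the fallback.
        self._bad_streak = self._bad_streak + 1 if reading < self.min_value else 0
        if self._bad_streak >= self.max_bad_readings:
            self.tripped = True
            self.on_failure()
```

The same readings can still be forwarded to the cloud later for centralized analysis; the local check only covers the decision that can't wait.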

4. Monitor in real time when necessary

Monitoring in real time is the only effective way to mitigate issues that require real-time response, like the failure of a critical device or the onset of a DDoS attack. But not all data requires immediate reaction.

Sometimes, it makes more sense to process certain types of monitoring data in batches rather than in real time. Batch processing can save resources and mitigate some of the challenges related to variations in latency. It is also a more feasible approach when working with edge devices that aren't continuously reachable over the network.

For instance, rather than monitoring edge device storage availability in real time, it might be more effective to check storage metrics only once every 10 minutes. Storage resources are unlikely to become exhausted without warning.
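In practice, this often comes down to assigning each metric its own polling interval. The Python sketch below shows one way to express that; the metric names and the `INTERVALS` table are hypothetical, with only the 10-minute storage interval taken from the example above.

```python
from typing import Dict, List

# Hypothetical polling intervals in seconds; 0 means "check on every pass".
INTERVALS: Dict[str, int] = {
    "device_heartbeat": 0,
    "storage_free_bytes": 600,  # once every 10 minutes
}


def metrics_due(now: float, last_checked: Dict[str, float],
                intervals: Dict[str, int] = INTERVALS) -> List[str]:
    """Return the metrics whose polling interval has elapsed."""
    due = []
    for name, interval in intervals.items():
        last = last_checked.get(name)
        # A metric that has never been checked is always due.
        if last is None or now - last >= interval:
            due.append(name)
    return due
```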

5. Develop edge-aware incident response plans

Monitoring is only valuable if teams are ready to take action in response to problems. To that end, it's important to include edge workloads in incident response plans. These are the procedures that organizations develop to coordinate responses to outages or attacks.

Incident response plans that don't address the unique requirements of edge environments might prove ineffective amid a response or recovery procedure. If a response plan assumes that all devices are locally accessible from a data center, it is ineffective for managing an incident that impacts edge devices located far from data centers.

6. Filter monitoring data

Data filtering -- the process of removing or reformatting data -- can help reduce the amount of monitoring data organizations need to work with. This facilitates more effective monitoring by reducing the volume of information that needs to move over the network and by eliminating redundant events. However, filtering at the edge requires processing power. Admins should consider whether their devices can perform advanced filtering operations prior to sending their data to the cloud.

In the context of edge monitoring, data filtering could include practices like sampling log events and metrics. Rather than reporting every event and metric to the centralized monitoring service, an edge device might report every fifth data point to reduce data volumes. Likewise, redundant log events could be consolidated prior to sending the data to the cloud for processing.
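Both practices are cheap enough to run on modest hardware. Here is a minimal Python sketch, assuming data points arrive as an in-memory sequence and that "redundant" means identical consecutive log messages; the function names are hypothetical.

```python
from itertools import groupby
from typing import Iterable, List, Tuple, TypeVar

T = TypeVar("T")


def sample_every_nth(points: Iterable[T], n: int = 5) -> List[T]:
    """Keep the first data point and every nth one after it; drop the rest."""
    if n < 1:
        raise ValueError("n must be at least 1")
    return [p for i, p in enumerate(points) if i % n == 0]


def consolidate_repeats(messages: Iterable[str]) -> List[Tuple[str, int]]:
    """Collapse runs of identical consecutive log messages into (message, count)."""
    return [(msg, sum(1 for _ in run)) for msg, run in groupby(messages)]
```

Fixed-interval sampling can miss short spikes that fall between samples, so it is better suited to slowly changing metrics than to signals where a single outlier matters.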

Chris Tozzi is a freelance writer, research adviser, and professor of IT and society. He has previously worked as a journalist and Linux systems administrator.