What is edge AI?

Edge artificial intelligence (edge AI) is a paradigm for crafting AI workflows that span centralized cloud data centers and edge devices outside the cloud that are closer to humans and physical things.

This is in contrast to the more common practice of developing and running AI applications entirely in the cloud, known as cloud AI. It also differs from older AI development approaches in which people craft AI algorithms on desktops and deploy them on desktops or special hardware for tasks such as reading check numbers.

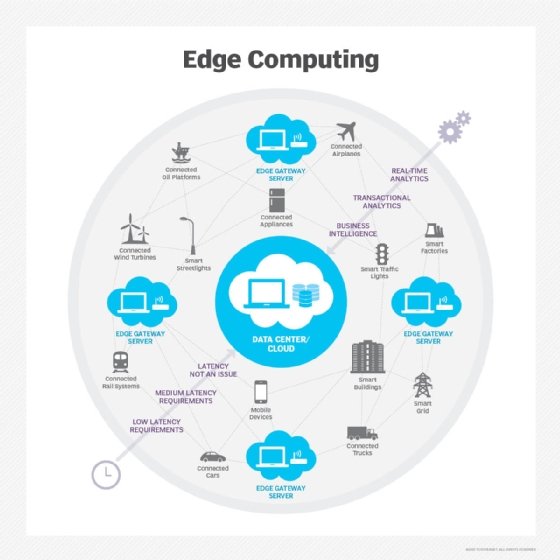

The edge is often characterized as a physical thing, such as a network gateway, smart router or an intelligent 5G cell tower. However, this misses the value of edge AI to devices such as cellphones, autonomous cars and robots. A more helpful way to understand the importance of edge environments is to think of them as a means to extend digital transformation practices innovated in the cloud out to the physical world.

Other types of edge innovation improved the efficiency, performance, management, security and operations of computers, smartphones, vehicles, appliances and other devices that use cloud best practices. Edge AI focuses on best practices, architectures and processes for extending data science, machine learning and AI outside the cloud.

How edge AI works

Until recently, most AI applications, such as expert systems or fraud detection algorithms, were developed using symbolic AI techniques that hardcoded rules into them. In some cases, nonsymbolic AI techniques -- for example, neural networks -- were designed for applications such as optical character recognition for check numbers or typed text.

Over time, researchers discovered ways to scale deep neural networks in the cloud for training AI models and generating responses based on input data. Edge AI extends AI development and deployment outside of the cloud.

Edge AI is generally used for inferencing, whereas training new models typically happens in the cloud. Inferencing requires less processing capability and energy than training. As a result, well-designed inferencing algorithms can sometimes run on the existing central processing units, or even on less capable microcontrollers, in edge devices. In other cases, highly efficient AI chips improve inferencing performance, reduce power consumption or both.
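The gap between training and inferencing can be illustrated with a minimal sketch in plain Python. The tiny logistic-regression model, the toy data and the function names below are all hypothetical and chosen only for illustration. The point is that inferencing is a single forward pass, while training repeats a forward pass plus a gradient update over every sample for many epochs.

```python
import math

def predict(weights, bias, features):
    """Inference: one forward pass (a dot product and a sigmoid)."""
    z = sum(w * x for w, x in zip(weights, features)) + bias
    return 1.0 / (1.0 + math.exp(-z))

def train(samples, labels, epochs=200, lr=0.5):
    """Training: repeated forward passes plus gradient updates."""
    weights = [0.0] * len(samples[0])
    bias = 0.0
    for _ in range(epochs):
        for features, label in zip(samples, labels):
            error = predict(weights, bias, features) - label
            weights = [w - lr * error * x for w, x in zip(weights, features)]
            bias -= lr * error
    return weights, bias

# Hypothetical toy data: the label is 1 when the first feature dominates.
samples = [[1.0, 0.0], [0.9, 0.2], [0.1, 0.8], [0.0, 1.0]]
labels = [1, 1, 0, 0]

# "Cloud" side: the expensive part, run once.
weights, bias = train(samples, labels)

# "Edge" side: only the cheap forward pass runs on the device.
print(predict(weights, bias, [0.95, 0.1]) > 0.5)
```

In this sketch, `train` calls `predict` 800 times and updates the weights after each call, whereas the device only ever needs the single `predict` call at the end.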

Benefits of edge AI

Edge AI has several benefits over cloud AI, including the following:

- Reduced latency and higher speeds. Inferencing is performed locally, eliminating the delay of sending data to the cloud and waiting for a response.

- Reduced bandwidth requirements and cost. Edge AI reduces the bandwidth and associated costs for shipping voice, video and high-fidelity sensor data over cellular networks.

- Increased data security. Data is processed locally, reducing the risk of sensitive data being stored in the cloud or intercepted in transit.

- Improved reliability. AI can continue to operate even if the network or cloud service goes down. This is critical for applications such as autonomous technology, particularly autonomous cars and industrial robots.

- Reduced power consumption. Many AI tasks can be performed with less energy on the device than is required to send the data to the cloud, thus extending battery life.
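The bandwidth benefit can be estimated with simple arithmetic. The sketch below compares a device that uploads a raw video stream for cloud inferencing with one that runs inferencing locally and sends only small result messages. Every figure (a 2 Mbps stream, 500 events per day, 200 bytes per event) and both function names are assumptions made for illustration, not measurements.

```python
def daily_upload_bytes_cloud(stream_bits_per_second, seconds_per_day=86_400):
    """Cloud AI: the raw stream is uploaded continuously for inference."""
    return stream_bits_per_second * seconds_per_day // 8

def daily_upload_bytes_edge(events_per_day, bytes_per_event):
    """Edge AI: inference runs locally; only small result messages are sent."""
    return events_per_day * bytes_per_event

# Hypothetical figures, chosen only for illustration.
cloud_bytes = daily_upload_bytes_cloud(stream_bits_per_second=2_000_000)
edge_bytes = daily_upload_bytes_edge(events_per_day=500, bytes_per_event=200)

print(f"cloud: {cloud_bytes / 1e9:.1f} GB/day")
print(f"edge:  {edge_bytes / 1e3:.1f} kB/day")
```

Under these assumed figures, the cloud approach uploads 21.6 GB per day, while the edge approach uploads 100 kB.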

Edge AI use cases and examples

Common edge AI use cases include speech recognition, fingerprint detection, facial identification, fraud detection and autonomous driving systems. Edge AI combines the power of the cloud with the benefits of local operation to improve the performance of AI algorithms over time.

For example, an autonomous navigation system trains AI algorithms in the cloud, but the inferencing is run on the car to control the steering, acceleration and brakes. Data scientists develop better self-driving algorithms in the cloud and push these models to the vehicle.

In some cars, these systems continue to simulate controlling the car even when a person is driving. The system notes when the human does something the AI didn't expect, captures relevant video and uploads it to the cloud to improve the algorithm. Input from all the vehicles in the fleet helps enhance the main control algorithm, and these enhancements are pushed out in the next update.
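This feedback loop can be sketched in a few lines. The example below is not any vendor's implementation: the `Controls` type, the control ranges, the 0.3 threshold and the drive log are all hypothetical. It shows only the core idea of comparing what the model would have done with what the driver did, and flagging the frames where the two diverge for upload.

```python
from dataclasses import dataclass

@dataclass
class Controls:
    steering: float  # hypothetical range: -1.0 (full left) to 1.0 (full right)
    brake: float     # hypothetical range: 0.0 (none) to 1.0 (full)

def disagreement(predicted: Controls, actual: Controls) -> float:
    """Largest absolute gap between the model's output and the driver's action."""
    return max(abs(predicted.steering - actual.steering),
               abs(predicted.brake - actual.brake))

def frames_to_upload(log, threshold=0.3):
    """Return the frame IDs where the human diverged from the shadow model."""
    return [frame_id for frame_id, predicted, actual in log
            if disagreement(predicted, actual) > threshold]

# Hypothetical drive log: (frame ID, model output, human action).
log = [
    (101, Controls(0.00, 0.0), Controls(0.05, 0.0)),  # close agreement
    (102, Controls(0.00, 0.0), Controls(0.00, 0.9)),  # driver braked hard
    (103, Controls(0.20, 0.1), Controls(0.60, 0.1)),  # driver steered more sharply
]

print(frames_to_upload(log))  # [102, 103]
```

Only the flagged frames would be uploaded, which keeps the data sent to the cloud small while still capturing the cases the model handles worst.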

The following are a few other places where edge AI is being used:

- Speech recognition algorithms transcribe speech on mobile devices.

- Google AI generates realistic background imagery to replace people or objects removed from a picture.

- Wearable health monitors assess heart rate, blood pressure, glucose levels and breathing locally using AI models developed in the cloud.

- A robot arm gradually learns a better way of grasping a specific package type and shares it with the cloud to improve other robots.

- Amazon Go, a cashierless retail service, uses edge AI models trained in the cloud to automatically count the items placed in a shopper's bag, with no separate checkout process.

- Smart traffic cameras automatically adjust light timings to optimize traffic.

Edge AI vs. cloud AI

The distinction between edge AI and cloud AI starts with how these ideas evolved. There were mainframes, desktop computers, smartphones and embedded systems long before a cloud or an edge. The applications for all these devices were developed slowly using Waterfall development practices. Developers tried to cram as much functionality and testing as possible into annual updates.

The cloud has brought attention to ways data center processes could be automated. This lets development teams adopt more Agile development practices. Some large cloud applications now get updated dozens of times a day, making it easier to develop application functionality in smaller pieces. Edge AI provides a way to extend these modular development and deployment practices beyond the cloud to edge devices, such as mobile phones, smart appliances, autonomous vehicles, factory equipment and remote edge data centers.

Edge devices differ in how much of the AI work they take on. At a minimum, an edge device like a smart speaker might send all the speech it captures to the cloud for processing. More sophisticated edge AI devices, such as 5G access servers, could provide AI capabilities to nearby devices. The Linux Foundation's LF Edge group includes light bulbs, mobile devices, on-premises servers, 5G access devices and smaller regional data centers as edge devices.

Edge AI and cloud AI work together with contributions from the edge or the cloud in training and inferencing. At one end, the edge device sends raw data to the cloud for inference and waits for a response. In the middle, the edge AI might run inference locally on the device using models trained in the cloud. At the other end, edge AI plays a larger role in training AI models.
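The three points on this spectrum can be summarized as a small lookup table. The `Mode` names and the `where_it_runs` function are hypothetical labels for this sketch, and describing training at the far end as "edge and cloud" is an assumption about how the work is shared.

```python
from enum import Enum

class Mode(Enum):
    CLOUD_INFERENCE = "cloud inference"  # device uploads raw data and waits
    EDGE_INFERENCE = "edge inference"    # device runs a cloud-trained model
    EDGE_TRAINING = "edge training"      # device also helps train the model

def where_it_runs(mode: Mode) -> dict:
    """Map each point on the spectrum to where inference and training happen."""
    return {
        Mode.CLOUD_INFERENCE: {"inference": "cloud", "training": "cloud"},
        Mode.EDGE_INFERENCE: {"inference": "edge", "training": "cloud"},
        Mode.EDGE_TRAINING: {"inference": "edge", "training": "edge and cloud"},
    }[mode]

for mode in Mode:
    print(mode.value, where_it_runs(mode))
```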

Difference between edge AI and distributed AI

Edge AI runs AI processes directly on local devices at the network's edge, such as internet of things (IoT) sensors. This reduces reliance on cloud connectivity, leading to faster responses and less bandwidth use. Edge AI is best suited for environments where latency and autonomy are critical.

Distributed AI spreads its work across multiple nodes, typically within cloud infrastructures. Distributed AI is best suited for centralized systems with strong connectivity; it performs poorly in low-bandwidth or high-latency environments.

The most important difference between edge AI and distributed AI is where they process data. Edge AI processes it locally, allowing for real-time decisions without external dependencies. Distributed AI processes data across interconnected systems, prioritizing scalability. Edge AI is best suited for remote and real-time applications, such as self-driving vehicles and industrial automation. Distributed AI is best in scenarios requiring collaborative processing, scalability and centralized control.

The future of edge technology

Edge AI is an emerging field that's growing fast. Consumer devices, including smartphones, wearables and intelligent appliances, comprise the bulk of edge AI use cases. But enterprise edge AI will likely grow with the expansion of cashierless checkout, intelligent hospitals, smart cities, Industry 4.0 and supply chain automation.

AI technology, such as federated deep learning, is improving the privacy and security of edge AI. Traditional AI pushes a relevant subset of raw data to the cloud to enhance training. In federated learning, the edge device makes AI training updates locally. Model updates, rather than the data, are pushed into the cloud, reducing privacy concerns.
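The idea behind federated learning can be shown with a minimal federated averaging sketch. To keep it short, the example swaps the deep network for a one-parameter linear model (y = w * x). The datasets, function names and learning rate are hypothetical. Each device runs a training pass on its own data, and the server sees only the resulting weights, which it averages in proportion to each device's sample count.

```python
def local_update(weight, data, lr=0.1):
    """On-device step: one pass of gradient descent for y = w * x on local data."""
    w = weight
    for x, y in data:
        w -= lr * 2 * (w * x - y) * x
    return w

def federated_round(global_weight, device_datasets):
    """Server step: average the updated weights, weighted by local sample count."""
    updates = [(local_update(global_weight, data), len(data))
               for data in device_datasets]
    total = sum(n for _, n in updates)
    return sum(w * n for w, n in updates) / total

# Hypothetical private datasets; each follows y = 3x and never leaves its device.
device_datasets = [
    [(1.0, 3.0), (2.0, 6.0)],
    [(0.5, 1.5), (1.5, 4.5), (1.0, 3.0)],
]

weight = 0.0
for _ in range(20):
    weight = federated_round(weight, device_datasets)

print(round(weight, 3))
```

After 20 rounds, the shared weight converges toward 3, the slope underlying both datasets, even though the server never received a single raw data point.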

Another significant change could be improved edge AI orchestration. Most edge AI algorithms run local inferencing against data seen by the device. In the future, more sophisticated tools might run local inferencing using data from sensors adjacent to the device.

The development and operations of AI models are at an earlier stage than the DevOps practices for creating applications. Unlike traditional application developers, data scientists and data engineers face numerous data management challenges. These challenges are compounded when managing edge AI workflows that involve orchestrating data, modeling and deployment processes that span edge devices and the cloud.

Over time, the tools for these processes are likely to improve. This will make it easier to scale edge AI applications and explore new AI architectures. For example, experts are already looking at ways to deploy edge AI in 5G edge data centers closer to mobile and IoT devices. New tools could let enterprises explore new kinds of federated data warehouses with data stored closer to the edge.
