Getty Images/iStockphoto

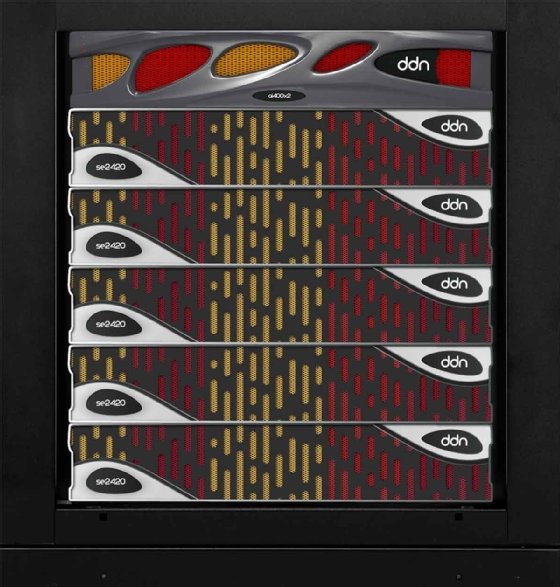

DDN launches all-QLC ExaScaler

The all-QLC version of DDN's ExaScaler scale-out parallel file system strikes a balance between performance and cost. It arrives as AI workloads are on the rise.

DataDirect Networks released an all-quad-level cell version of its high-performance storage offering to continue riding the generative AI wave.

DDN ExaScaler is a scale-out parallel file system; according to the vendor, its AI400X2 version is used in AI supercomputing infrastructure. Previous versions of the parallel file system used either triple-level cell (TLC) flash or a combination of TLC flash and HDDs. The new version uses quad-level cell (QLC) flash, which costs less than TLC but performs better than the hybrid configuration. The release comes as demand grows for systems that train large language models.

"Generative AI workloads leverage HPC infrastructure, architecture and methodologies," said Mark Nossokoff, an analyst at Hyperion Research, a firm that specializes in HPC.

DDN said this month that it has sold more AI storage appliances in the first four months of 2023 than it had in the entirety of 2022. Nossokoff said the increased interest is driven by the growing adoption of generative AI.

More price options

AI workloads require both high performance and high capacity. The QLC version of ExaScaler "sits in a Goldilocks area" and offers both, according to Mitch Lewis, an analyst at Futurum Group.

Customers will accept a slight trade-off in performance in exchange for a better price, Lewis said. While concerns about QLC degradation over time persist, success stories from other vendors show it can work with the right architectural approach, such as adding high-endurance media as a write buffer.

Before the introduction of QLC media, customers had the choice of SSDs or HDDs. Flash remains more expensive than spinning disk, despite discussions about SSDs reaching price parity with HDDs, according to Alf Wachsmann, head of DigIT infrastructure and scientific computing at Helmholtz Munich. The life science and environmental health research center is part of the Helmholtz Association, Germany's largest research organization, and recently selected DDN for storage in its AI workloads.

"Purchasing storage hardware is expensive. If QLC gets me over the threshold [of high price], I will definitely buy that," Wachsmann said.

Helmholtz Munich uses ExaScaler as the central file storage for its scientific data, Wachsmann said. It chose ExaScaler over a scale-out NAS because its diverse workloads serve large amounts of data to GPUs, a need the distributed file system meets well. ExaScaler also provides massive capacity scaling with high performance, which Wachsmann believes he wouldn't get with a NAS.

Wachsmann said that using the lower-cost, all-QLC ExaScaler option is appealing. "With some applications, performance is key no matter what the cost," he said. "But for large bulk data, [choosing storage] becomes more about cost per performance."

DDN uses client-side data compression to achieve faster performance, according to Mike Matchett, an analyst at Small World Big Data, an IT analyst firm. The software streamlines storage, pushing data directly to the appropriate storage node and skipping over the metadata node of a scale-out file system.

"There is no buffering, no caching in the TLC node. There is no tiering," Matchett said of client-side data compression.

Customers have to install client-side software when building a cluster, something not needed with a scale-out NAS, he said. But the client-side software also makes networking less complex, requiring fewer switches and improving efficiency.

Client-side data compression can help save on costs by eliminating the extra read and write cycles that traditional storage-side compression requires, he said. Data bound for the all-QLC ExaScaler arrives already compressed and can be written directly to QLC media.
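As a rough illustration of the idea, here is a minimal sketch of generic client-side compression, not DDN's implementation. It assumes zlib as a stand-in codec, and `StorageTarget`, `client_write` and `client_read` are hypothetical names rather than ExaScaler or Lustre APIs. The client compresses once before sending, and the storage target writes the bytes it receives without any later read-compress-rewrite pass.

```python
import zlib


class StorageTarget:
    """Hypothetical in-memory stand-in for a QLC-backed storage target.

    It stores the bytes it receives as-is; it never compresses or rewrites them.
    """

    def __init__(self):
        self.blocks = {}
        self.bytes_written = 0

    def write(self, key, payload):
        self.blocks[key] = payload
        self.bytes_written += len(payload)

    def read(self, key):
        return self.blocks[key]


def client_write(target, key, data, level=6):
    """Compress on the client, then send the compressed bytes to the target once."""
    payload = zlib.compress(data, level)
    target.write(key, payload)
    return len(payload)


def client_read(target, key):
    """Fetch compressed bytes from the target and decompress them on the client."""
    return zlib.decompress(target.read(key))


if __name__ == "__main__":
    target = StorageTarget()
    data = b"sensor_reading=42;" * 10_000  # highly repetitive sample data
    sent = client_write(target, "file0", data)
    print(f"logical bytes: {len(data)}, bytes written to media: {sent}")
    assert client_read(target, "file0") == data
```

Because compression happens before data leaves the client, the media sees each (smaller) write only once. That matters for QLC, whose endurance is lower than TLC's.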

60 TB SSDs

On top of compression, DDN will be using 60 TB QLC SSDs by the end of the year. The vendor stated that these are commodity SSDs and not purpose-built flash modules.

Large drives can mean long rebuild times. But Nossokoff said data is distributed across the system in such a way that no single spot is a concern.

"There are parallel rebuilds going on in conjunction with parallel access," he said. Parallelism relieves concern around drive failures.
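A back-of-the-envelope sketch shows why parallel rebuilds ease the concern. It assumes a hypothetical sustained rebuild rate of 500 MB/s per drive (an illustrative figure, not a DDN specification) and linear scaling when reconstruction work is spread evenly across drives. It ignores competing client I/O, parity computation and network limits.

```python
def rebuild_hours(capacity_tb, per_drive_mb_s, participating_drives):
    """Estimate rebuild time when reconstruction work is split evenly across drives.

    Assumes aggregate throughput scales linearly with participating drives.
    """
    capacity_mb = capacity_tb * 1_000_000  # decimal TB -> MB
    aggregate_mb_s = per_drive_mb_s * participating_drives
    return capacity_mb / aggregate_mb_s / 3600  # seconds -> hours


if __name__ == "__main__":
    # 500 MB/s is a hypothetical per-drive rebuild rate used only for illustration.
    for drives in (1, 20):
        hours = rebuild_hours(60, 500, drives)
        print(f"60 TB drive, {drives} drive(s) sharing the rebuild: {hours:.1f} h")
```

Under these assumptions, rebuilding a 60 TB drive onto a single spare takes more than a day, while spreading the work across 20 drives brings it under two hours.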

If enough redundancy is built in, end users wouldn't experience a disruption, and storage administrators would be alerted to swap out the failed drive, Wachsmann said.

"That is the beauty of [ExaScaler] -- the integration of everything from the hot spares to the failover," he said. "It is really well designed."

Adam Armstrong is a TechTarget Editorial news writer covering file and block storage hardware and private clouds. He previously worked at StorageReview.com.