
AI dominates 2025 Future of Memory and Storage conference

Technologies discussed at the Future of Memory and Storage event included high-capacity and high-performance SSDs, all against the backdrop of AI. Explore expert analysis from the show.

How will today's AI boom affect storage and new technologies? Earlier this month, the Future of Memory and Storage conference, formerly Flash Memory Summit, sought to address that question.

For the most part, the different nature of AI workloads, combined with the significant size of the market, creates a need for new ways of moving data between processing and memory. Here's a look at presenters' descriptions of AI's importance to the storage market and some of the technologies that could accommodate the shift from conventional to AI workloads.

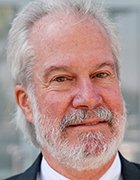

Hyperscaler spending drives the market

More than 200 speakers gathered in Santa Clara, Calif., at the Future of Memory and Storage. To set the stage, many presenters showed their versions of a chart dramatizing hyperscale data centers' enormous spending on AI hardware, similar to the one above. Some estimate that AI spending could surpass $4 trillion by 2030. The most recent quarter's spend was $99 billion, indicating that 2025 annual spending should exceed $400 billion.
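As a rough check on that arithmetic, the sketch below annualizes the $99 billion quarter. The function names are illustrative, and the flat run rate is an assumption made here for simplicity; the text gives only the single quarterly figure, not the other quarters of 2025.

```python
# Figures taken from the text: $99B in the most recent quarter,
# and a $400B annual level that 2025 spending is expected to exceed.
LATEST_QUARTER_SPEND_BN = 99
ANNUAL_THRESHOLD_BN = 400


def annualized_run_rate(quarterly_spend_bn: float) -> float:
    """Annual spend if every quarter matched the given one (flat-run-rate assumption)."""
    return quarterly_spend_bn * 4


def gap_to_threshold(quarterly_spend_bn: float, threshold_bn: float) -> float:
    """Amount by which a flat run rate falls short of the threshold.

    A negative result would mean the flat run rate already clears it.
    """
    return threshold_bn - annualized_run_rate(quarterly_spend_bn)


flat_annual_bn = annualized_run_rate(LATEST_QUARTER_SPEND_BN)
gap_bn = gap_to_threshold(LATEST_QUARTER_SPEND_BN, ANNUAL_THRESHOLD_BN)
print(f"Flat run rate: ${flat_annual_bn}B, gap to ${ANNUAL_THRESHOLD_BN}B: ${gap_bn}B")
```

Under this simplification, four quarters at $99 billion land just short of $400 billion, so the expectation of exceeding that level rests on quarterly spending continuing to climb.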

Nearly everyone highlighted the power requirements of such systems and the strain they might place on the grid. Some even spoke of small nuclear power plants that certain hyperscalers are said to be considering as an alternative to purchasing energy from established firms.

But something that seems to be widely overlooked is the sustainability of this level of spending. In a presentation of my own, I reasoned that it's perfectly justifiable for spending to increase as long as revenue growth supports it. However, I then illustrated that spending by six leading firms -- Alibaba, Alphabet, Amazon, Apple, Meta and Microsoft Azure -- has risen from a relatively steady 12% to 14% of revenue to roughly double that, at 25% of revenue, in the most recently reported quarter.

It's hard to imagine that this pace can be offset by today's layoffs of engineers whose jobs are to be replaced by AI. It's more reasonable to expect to see a cooling-off period soon where these companies ease back on capital spending, which is likely to lead to a downturn in the storage and chip businesses. Typically, all these companies tend to increase or decrease capital spending together, leading to relatively serious supply and demand mismatches when they do.

What about technology?

Vendors revealed many new technologies at the Future of Memory and Storage show because AI workloads handle data differently than conventional workloads.

Monster SSDs

Nearly every SSD maker either demonstrated or disclosed 100 TB and larger SSDs. Samsung presented a behemoth of greater than 200 TB, and Sandisk promised a 512 TB SSD in 2027. Many of these SSDs will ship in the new E1.L "ruler" form factor for reasons of both capacity and heat dissipation.

The motivation behind these monster SSDs is Meta's published finding that performance could be improved by inserting a new layer of QLC SSDs between its TLC SSDs and its HDDs. It's somewhat ironic that this new layer will have a lower total capacity than the HDD layer but will be made from SSDs whose individual capacity will be an order of magnitude larger than the HDDs they cache.

AI-optimized SSDs

Other vendors at the Future of Memory and Storage showed unique approaches to AI. Two that I found particularly interesting were Solidigm's new approach to a hot-swappable liquid-cooled SSD and Phison's SSD designed specifically to reduce cost for large language model (LLM) systems.

Users can insert Solidigm's SSD into a front-panel slot, just like its air-cooled counterpart, using a special third-party rack that presses liquid-cooled plates against the SSDs. The SSDs are designed to take as much advantage of those plates as possible by incorporating internal heat management that balances heat dissipation between the front and back of the SSD.

The Phison Pascari SSD has been designed for an extraordinarily heavy write load, boasting endurance of 100 drive writes per day, because AI training workloads are very write-intensive.
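To put 100 drive writes per day in perspective, here is a minimal sketch of what that rating implies in daily write volume and average write rate. The 2 TB capacity is a hypothetical value chosen for illustration, not a Pascari specification, and the function names are my own.

```python
SECONDS_PER_DAY = 24 * 60 * 60


def daily_writes_tb(capacity_tb: float, dwpd: float) -> float:
    """Terabytes written per day when the whole drive is rewritten `dwpd` times."""
    return capacity_tb * dwpd


def sustained_write_gb_per_s(capacity_tb: float, dwpd: float) -> float:
    """Average write rate, in decimal GB/s, needed to use the full rating around the clock."""
    return daily_writes_tb(capacity_tb, dwpd) * 1000 / SECONDS_PER_DAY


RATED_DWPD = 100                 # endurance figure from the text
HYPOTHETICAL_CAPACITY_TB = 2.0   # assumption for illustration only

per_day = daily_writes_tb(HYPOTHETICAL_CAPACITY_TB, RATED_DWPD)
rate = sustained_write_gb_per_s(HYPOTHETICAL_CAPACITY_TB, RATED_DWPD)
print(f"{per_day:.0f} TB written per day, about {rate:.2f} GB/s sustained")
```

The daily write volume scales linearly with capacity, so a larger drive at the same rating would have to absorb proportionally more data each day.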

Phison's engineers reasoned that GPU performance scales with memory size, meaning high-bandwidth memory (HBM) DRAM, and that proper management of this memory, along with the use of cheaper NAND flash, can support large models with fewer GPUs than normal. Phison's combined hardware and software approach to this problem, dubbed "aiDAPTIV+," allows a single GPU to support LLMs at a significantly lower cost than a multiple-GPU system, albeit with somewhat slower performance.

100 million IOPS SSDs

A couple of companies also shared plans to introduce high-performance AI-specific SSDs boasting throughput of 100 million IOPS. Some questioning revealed that this target, apparently presented to them by their hyperscale customers, was not based on the standard 4K IOPS that is normally used to compare today's SSDs against one another.

Instead, since AI deals with smaller data granularity, the new AI-specific SSDs will feed GPUs with 100 million 512-byte IOPS, which would presumably be easier to achieve within a given I/O bandwidth. Note that I didn't say that it would be easy, just easier than supplying 100 million 4K IOPS. The leading SSD suppliers are accepting the challenge, though, which implies that they expect to achieve that goal in production volumes.
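The difference between the two targets is easy to quantify. The sketch below, with illustrative function names of my own, computes the data bandwidth that 100 million IOPS implies at each transfer size, treating 4K as 4,096 bytes and ignoring protocol overhead.

```python
def required_bandwidth_gb_per_s(iops: float, io_size_bytes: int) -> float:
    """Payload bandwidth in decimal GB/s for a given I/O rate and transfer size."""
    return iops * io_size_bytes / 1e9


TARGET_IOPS = 100_000_000  # the 100 million IOPS target from the text

bw_512b = required_bandwidth_gb_per_s(TARGET_IOPS, 512)    # 512-byte transfers
bw_4k = required_bandwidth_gb_per_s(TARGET_IOPS, 4096)     # 4K transfers

print(f"512-byte IOPS: {bw_512b:.1f} GB/s")
print(f"4K IOPS:       {bw_4k:.1f} GB/s")
print(f"Ratio:         {bw_4k / bw_512b:.0f}x")
```

At 512 bytes per operation the target works out to about 51.2 GB/s of payload, one-eighth of the roughly 409.6 GB/s that the same operation count would require at 4K.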

High-bandwidth flash

Sandisk illustrated a different approach to the size and cost challenges posed by HBM. The company is working on a new high-bandwidth flash (HBF), which should replace some, but not all, of the DRAM-based HBM stacks within a GPU complex.

While today's NAND chips are optimized for cost, HBF will be optimized for read bandwidth. It will still have a decided cost advantage over DRAM, with a similar read bandwidth. The laws of physics prevent write bandwidth from improving, however. Because training has a relatively balanced number of HBM reads and writes, while inference is read-intensive, HBF will be a good platform for inference but not for training.

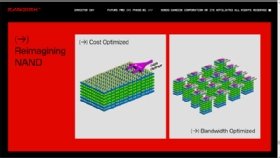

At a February investor conference, when this technology was first disclosed, Sandisk shared the image above.

Although the diagram on the right of the image indicates that a single HBF NAND die should behave like 16 individual chips, Sandisk representatives told me that they weren't disclosing what the actual number might be. The company did share that its models show that the performance of a GPU with an unlimited amount of HBF NAND flash came within about 3% of the performance of a similar GPU with an unlimited amount of DRAM-based HBM.

These are exciting times

In the long run, new technologies will fuel today's amazing AI growth and a pipeline of new ideas will help improve AI in the future. All the industry needs to do now is deliver those offerings and protect itself from any pause in AI spending that might happen in coming quarters.

Expect to see these technologies in the future, either sooner, as long as AI spending keeps up, or later, should there be a correction.

Jim Handy is a semiconductor and SSD analyst at Objective Analysis in Los Gatos, Calif.