
High-capacity SSDs positioned to tackle AI onslaught

More AI means more data, and organizations need a way to accommodate it all. For that, flash vendors are looking to high-capacity SSDs as a critical layer in the storage hierarchy.

Everything about today's AI is big, particularly the SSDs that contain the data used for training it.

In 2024, leading NAND flash vendors -- including Kioxia, Micron Technology, Samsung, SK Hynix and Western Digital Corp. (WDC), whose flash business has since been spun off as SanDisk -- were all telling their investors that they were sampling very large, 100-terabyte SSDs to their hyperscale data center customers. But what is driving the need for such gigantic SSDs?

Some might question the wisdom of constraining an enormous amount of internal NAND flash bandwidth behind an interface that can't come close to serving all of that bandwidth to the processor. This evolution is not about bandwidth, though; it's about AI's gargantuan data sets and the latency advantage that flash provides over HDDs.
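A rough sketch shows why the interface, not the flash, becomes the bottleneck. The die count follows the article's later estimate of roughly 400 dies for a 100 TB drive. The per-die read rate and host-link ceiling are hypothetical round numbers chosen for illustration, not vendor specifications.

```python
# Back-of-the-envelope comparison of internal NAND bandwidth vs. host-link bandwidth.
# DIE_COUNT follows the article's ~400-die estimate for a 100 TB SSD;
# the two bandwidth figures are HYPOTHETICAL round numbers for illustration.
DIE_COUNT = 400
PER_DIE_READ_GBPS = 1.0   # assumed sustained read rate per die, GB/s
HOST_LINK_GBPS = 16.0     # assumed host-interface ceiling, GB/s


def internal_bandwidth(dies: int, per_die_gbps: float) -> float:
    """Aggregate read bandwidth if every die reads in parallel."""
    return dies * per_die_gbps


def oversubscription(dies: int, per_die_gbps: float, link_gbps: float) -> float:
    """How many times the internal bandwidth exceeds what the host link can carry."""
    return internal_bandwidth(dies, per_die_gbps) / link_gbps


total = internal_bandwidth(DIE_COUNT, PER_DIE_READ_GBPS)
ratio = oversubscription(DIE_COUNT, PER_DIE_READ_GBPS, HOST_LINK_GBPS)
print(f"internal: {total:.0f} GB/s, host link: {HOST_LINK_GBPS:.0f} GB/s, ratio: {ratio:.0f}x")
```

Under these assumptions the link carries only a small fraction of what the dies could deliver. For a capacity tier that trade is acceptable, because the goal is density and latency rather than throughput.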

AI's domino effect on high-capacity SSDs

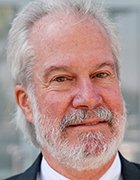

According to WDC, hyperscalers now account for 87% of the company's revenue. This data center growth, along with the advent of colossal AI builds, has had a profound effect on the average capacity of WDC's HDDs over time (see Figure 1).

Hyperscale data centers are procuring enormous volumes of the highest-capacity HDDs they can get, but they want more speed, so they have started to add a new SSD layer to their storage hierarchy. Like HDDs, SSDs are very good at packing a lot of capacity into a small space, but they offer far lower read latency than HDDs, especially for random reads. Naturally, though, hyperscalers want these SSDs to have extreme capacities, and NAND flash enables them to do that.

For specifics, we can turn to Meta, which has published a white paper that compares quad-level cell (QLC) flash SSDs to HDDs in nearline storage. This study led the company to propose adding a very high-capacity QLC SSD tier between an HDD tier and the triple-level cell (TLC) SSDs already in use. Because data centers face significant space and energy constraints, QLC flash, which stores four bits per cell rather than TLC's three, is attractive: It reduces space requirements and minimizes power simply by using fewer chips for a given capacity.
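The "fewer chips" argument follows directly from bits per cell. The sketch below assumes a hypothetical die whose cell count stays the same whether it is programmed as TLC or QLC, sized so that the QLC version holds 2 x 10^12 bits (decimal, for simplicity). Real TLC and QLC dies differ in layer count and array size, so read the result as a ratio rather than a bill of materials.

```python
import math

BITS_PER_BYTE = 8


def dies_needed(capacity_tb: float, cells_per_die: float, bits_per_cell: int) -> int:
    """Dies required to reach capacity_tb (decimal terabytes) of raw capacity."""
    capacity_bits = capacity_tb * 1e12 * BITS_PER_BYTE
    bits_per_die = cells_per_die * bits_per_cell
    return math.ceil(capacity_bits / bits_per_die)


# HYPOTHETICAL die: same number of cells, programmed either as TLC or QLC.
CELLS_PER_DIE = 5e11  # assumed, chosen so a QLC die holds 2e12 bits

tlc = dies_needed(100, CELLS_PER_DIE, bits_per_cell=3)
qlc = dies_needed(100, CELLS_PER_DIE, bits_per_cell=4)
print(tlc, qlc)  # 534 400
```

With equal cell counts, QLC needs about three-quarters as many dies as TLC for the same capacity, which is where the space and power savings come from.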

How big is big?

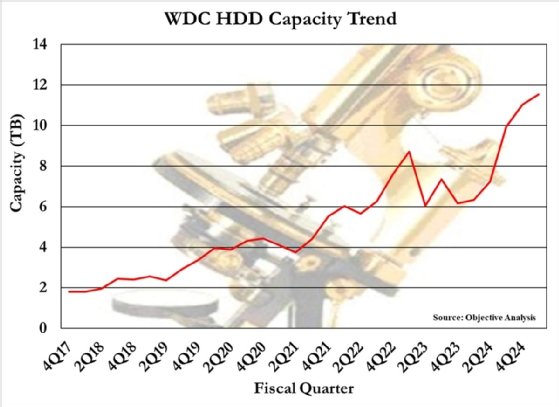

So, just exactly how big are these enormous SSDs? In early 2025, SanDisk projected the arrival of 128 TB, 256 TB and 512 TB SSDs by 2027, with a 1-petabyte (PB) SSD at some unspecified future point (see Figure 2).

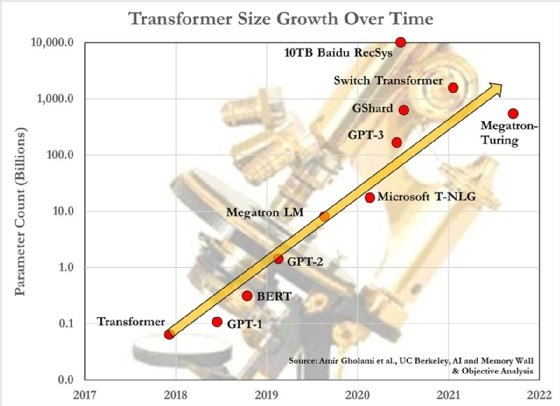

While it's amazing to consider the huge capacity of these devices and to wonder where all of that data might come from, large language models have grown at an almost alarming rate. Researchers from the University of California, Berkeley, found in their paper, "AI and Memory Wall," that the number of parameters in large transformer models is growing exponentially, by a factor of 410 every two years (see Figure 3).

If large transformer models continue to follow this trend, then the orange arrow should extend to 10 quadrillion parameters by 2025. If each parameter uses 4 bytes, that's 40 quadrillion bytes -- or over 300 of SanDisk's 128 TB SSDs. No doubt, many more will be required for housekeeping tasks, such as checkpointing and temporary storage.
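The storage arithmetic in the previous paragraph can be checked in a few lines. Decimal units (1 TB = 10^12 bytes) are assumed, and 4 bytes per parameter corresponds to 32-bit floating-point weights. The count covers the weights alone, not checkpoints or scratch space.

```python
import math

TB = 10**12  # decimal terabyte, in bytes

params = 10 * 10**15        # 10 quadrillion parameters, the trend-line projection
bytes_per_param = 4         # e.g., 32-bit floating-point weights
ssd_capacity = 128 * TB     # one 128 TB SSD

model_bytes = params * bytes_per_param
ssds = model_bytes / ssd_capacity
print(model_bytes, ssds, math.ceil(ssds))  # 40000000000000000 312.5 313
```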

Now, multiply this by the number of systems that will run a model this large, and the numbers become enormous.

But SanDisk's roadmap shows SSD capacity only doubling every year, while a 410x increase every two years works out to roughly 20x per year (the square root of 410). Unless the people charged with building AI systems find ways to reduce these models' sizes, they will have to increase their SSD count, not to mention cost and energy use, by about 10 times every year.
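The 10x-per-year figure comes from dividing one growth rate by the other. This sketch assumes both trends hold exactly and starts from a hypothetical fleet of 300 drives, in line with the "over 300" estimate above; like that estimate, it tracks only the model weights.

```python
import math

MODEL_GROWTH_PER_2_YEARS = 410     # parameter growth reported in "AI and Memory Wall"
SSD_CAPACITY_GROWTH_PER_YEAR = 2   # capacity doubling per year on SanDisk's roadmap

# 410x over two years is sqrt(410) per year, roughly 20.2x.
model_growth_per_year = math.sqrt(MODEL_GROWTH_PER_2_YEARS)
# Drive count must grow by model growth divided by per-drive capacity growth.
ssd_count_growth_per_year = model_growth_per_year / SSD_CAPACITY_GROWTH_PER_YEAR


def projected_ssd_count(start_count: float, years: int) -> float:
    """SSDs needed after `years` if both growth trends hold exactly."""
    return start_count * ssd_count_growth_per_year ** years


print(f"model: {model_growth_per_year:.1f}x/yr, SSD count: {ssd_count_growth_per_year:.1f}x/yr")
for year in range(4):
    print(year, f"{projected_ssd_count(300, year):,.0f}")  # HYPOTHETICAL 300-drive start
```

The first line prints `model: 20.2x/yr, SSD count: 10.1x/yr`; the loop shows how quickly a few hundred drives would turn into hundreds of thousands if neither trend bends.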

Huge SSDs won't be cheap

What would a 128 TB SSD sell for? Some people have tried to estimate it by scaling the per-terabyte price they have paid for smaller SSDs up to 128 TB. That's a fair approach because, unlike some more arcane technologies -- such as high-bandwidth memory (HBM) DRAM, which adds a phenomenal level of technical complexity to squeeze a huge memory into a very tiny package -- the 128 TB SSD is not expected to use anything out of the ordinary that would make it harder to assemble than most other SSDs.

Taking that approach, it's reasonable to put the price somewhere around $40,000. Of course, there will be considerations that might increase the price, but expect something roughly in that range for the time being. It's a pricey item but one that has a lot of appeal, particularly to hyperscalers, who will find that it works within their cost models to bring down their systems' TCO.
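The linear-scaling estimate amounts to carrying a per-terabyte price over to a larger capacity. The 32 TB reference drive and its $10,000 price below are hypothetical, chosen so that the implied $312.50/TB reproduces the roughly $40,000 figure; a real quote would also reflect the other considerations that might raise the price.

```python
def scale_price(known_price: float, known_capacity_tb: float, target_capacity_tb: float) -> float:
    """Linear estimate: assumes the per-TB price of a smaller drive carries over unchanged."""
    price_per_tb = known_price / known_capacity_tb
    return price_per_tb * target_capacity_tb


# HYPOTHETICAL reference drive: 32 TB at $10,000 ($312.50/TB).
estimate = scale_price(known_price=10_000, known_capacity_tb=32, target_capacity_tb=128)
print(f"${estimate:,.0f}")  # $40,000
```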

What about physical size?

In its white paper, Meta rationalized that very high-capacity QLC SSDs can be made by using today's leading 2-terabit (Tb) QLC NAND flash chips packaged in 32-die stacks. These stacks are nothing new; NAND flash makers have been using this size and smaller to squeeze several gigabytes into a microSD format for decades.

Still, a 100 TB SSD will use more than 400 of these 2 Tb NAND flash chips. That's a lot of silicon for a single SSD, consuming about half of an entire 3D NAND wafer. Packaging them in 32-die stacks, though, cuts the package count down to just 13, making it easy to fit even into a compact 2.5-inch U.2 form factor.

Others who plan to use more widely available 8-die stacks will need a form factor that can house four times as many packages, or around 50 of them. In this case, there's always the standard E1.L ruler format, allowing sufficient room for this many packages.
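The die and package counts in the last two paragraphs follow from simple division. This sketch counts raw capacity only, in decimal terabytes; real drives also reserve overprovisioning, which is one reason a shipping 100 TB drive would need more than 400 dies.

```python
import math


def dies_and_packages(capacity_tb: float, die_tbit: float, dies_per_package: int) -> tuple[int, int]:
    """Return (dies, packages) for raw capacity, ignoring overprovisioning."""
    dies = math.ceil(capacity_tb * 8 / die_tbit)    # terabytes -> terabits, then per die
    packages = math.ceil(dies / dies_per_package)   # a partly filled package still counts
    return dies, packages


print(dies_and_packages(100, 2, 32))  # (400, 13)
print(dies_and_packages(100, 2, 8))   # (400, 50)
```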

Other applications

There's another AI application showing promise for these high-capacity SSDs. Some on-premises data centers handle sensitive data that must not be trusted to the cloud. Naturally, these data centers want to protect their AI data, both training and inference, with the same level of security as their other data. According to SK Hynix, some of the demand for its Solidigm line of high-capacity QLC SSDs is coming from these data centers.

Given that various nations have built local AI data centers to protect the sovereignty of their AI data sets, it's no surprise that individual businesses and other smaller organizations would take similar measures.

Both Samsung and SK Hynix noted on-premises inference as another big demand driver for supersized SSDs. Hosting inference applications on-premises helps to reduce the latency of the inference process. With local inference, the application gains a more immediate response to recent stimuli. Some inference applications will need the high capacities offered by these enormous SSDs.

Matching SSDs to GPUs

Since AI data sets are so huge, it pays to focus on how to reduce any wasted effort when moving the data from storage to the GPU. How does a designer best match the SSD's performance to the needs of the GPUs it will feed?

One approach to this is the aiDAPTIV+ AI SSD system from NAND flash technology vendor Phison, which lets budget-minded AI projects use AI-specific SSDs to reduce the number of GPUs in an AI training system. Phison had to design these SSDs specially to satisfy two requirements unique to AI: They needed extreme endurance, and the SSD's serial data stream had to match the structure of the GPU's HBM data fills. The result is a system with a price/performance point that is compelling for many workloads.

The concept of matching the SSD's I/O stream to the GPU's requirements is sure to become a popular one as time progresses.

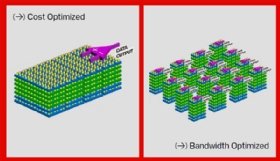

SanDisk has taken the idea in a different direction -- not with an SSD, but by creating specially designed NAND flash chips to replace some or even all of the HBM DRAM usually found in a GPU complex (see Figure 4). In essence, large NAND flash chips, which are typically designed to optimize cost per gigabyte, are instead subdivided into several smaller blocks that perform as if they were an array of independent lower-density NAND chips. This multiplies the NAND chip's bandwidth without changing either its read latency or its slow and clumsy approach to writes. Is this even useful? The fact that hyperscalers have joined SanDisk to define this product indicates that such chips could solve certain AI problems.
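Why would subdividing a die raise bandwidth but leave latency alone? In a simple model, each independently addressable region returns one page per read-latency period, so peak bandwidth scales with the number of regions while every individual read still takes the same time. All numbers below (page size, read latency, region counts) are hypothetical, and the `NandDie` class is an illustration of the general principle, not a description of SanDisk's actual HBF design.

```python
from dataclasses import dataclass


@dataclass
class NandDie:
    regions: int            # independently addressable regions (HYPOTHETICAL counts below)
    page_bytes: int         # bytes returned by one read of one region
    read_latency_us: float  # time for a single read, unchanged by subdivision

    def peak_read_gbps(self) -> float:
        """Peak bandwidth if every region completes one read per latency period."""
        per_region = self.page_bytes / (self.read_latency_us * 1e-6)  # bytes per second
        return self.regions * per_region / 1e9


conventional = NandDie(regions=4, page_bytes=16_384, read_latency_us=50.0)
subdivided = NandDie(regions=16, page_bytes=16_384, read_latency_us=50.0)

print(f"{conventional.peak_read_gbps():.2f} GB/s vs {subdivided.peak_read_gbps():.2f} GB/s")
```

Quadrupling the region count quadruples peak bandwidth in this model, while `read_latency_us`, and therefore the time any single request waits, is identical for both dies. Writes are not modeled at all.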

SanDisk claimed that a GPU with eight 24 GB HBM stacks, totaling 192 GB, could replace six of those eight HBMs with 512 GB high-bandwidth flash (HBF) stacks for a total of 3,120 GB, or perhaps even replace all eight HBMs with HBF for a total of 4,096 GB. No doubt, some recoding will be required for such a system to perform optimally with the new memory type, but since inference performs considerably fewer memory writes than training does, NAND flash might well be the way to go in inference systems.
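SanDisk's capacity figures can be reproduced by treating each of the eight stack sites as holding either a 24 GB HBM stack or a 512 GB HBF stack. The one-for-one site swap is the scenario the claim describes; the function name is just for illustration.

```python
HBM_STACK_GB = 24
HBF_STACK_GB = 512
SITES = 8  # stack sites in the GPU complex described in the claim


def memory_total(hbf_sites: int) -> int:
    """Total GB when hbf_sites of the 8 stack sites hold HBF instead of HBM."""
    if not 0 <= hbf_sites <= SITES:
        raise ValueError("hbf_sites must be between 0 and 8")
    return (SITES - hbf_sites) * HBM_STACK_GB + hbf_sites * HBF_STACK_GB


for n in (0, 6, 8):
    print(n, memory_total(n))
# 0 192
# 6 3120
# 8 4096
```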

While this last example does not necessarily involve a gargantuan SSD, a large SSD might well complement it. Either way, it's clear that the amount of NAND flash-based storage in large-scale AI systems is poised to grow rapidly over the next few years, and storage administrators will certainly find their task lists growing as a result.

Jim Handy is a semiconductor and SSD analyst at Objective Analysis in Los Gatos, Calif.