
Compare EKS vs. self-managed Kubernetes on AWS

AWS users face a choice when deploying Kubernetes: run it themselves on EC2 or let Amazon do the heavy lifting with EKS. See which approach best fits your organization's needs.

Organizations that want to run Kubernetes on AWS have two main options: go it alone or let Amazon do the heavy lifting. Each approach has its pros and cons.

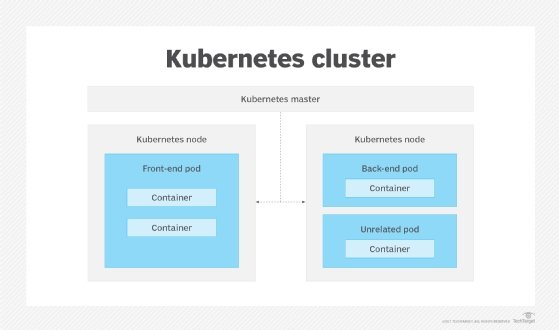

Kubernetes, an open source container orchestrator, simplifies automation, deployment, scaling and operation of containerized applications. It enables companies to reap the benefits of containers without having to deal with the complexity and overhead that previously went with them.

AWS has a fairly successful managed container orchestration service of its own: Amazon Elastic Container Service (ECS). Users could also run their own Kubernetes clusters on EC2. Eventually, however, Amazon couldn't ignore the demand for a managed Kubernetes offering. It announced Amazon Elastic Container Service for Kubernetes (EKS) in late 2017 and made it generally available in 2018; the service has since been renamed Amazon Elastic Kubernetes Service.

To choose between EKS and self-managed Kubernetes, carefully consider the benefits and drawbacks of each option.

Kubernetes on AWS: Know your options

With EKS, Amazon fully manages the control plane, including components such as etcd and the Kubernetes API server, and integrates it with other AWS services, such as Identity and Access Management (IAM).

The entire management infrastructure runs behind the scenes, across multiple Availability Zones. AWS automatically replaces unhealthy control plane nodes to maintain high availability, and it applies patches to the control plane. Users decide when to move the cluster to a new Kubernetes minor version, and AWS then carries out the upgrade. For users, the EKS control plane is essentially a black box.

For the data plane, there are three options for EKS users: self-managed nodes, EKS-managed node groups and AWS Fargate.

Self-managed nodes

With self-managed nodes, users create and manage the EC2 instances themselves, either by configuring them manually or with the AWS CloudFormation templates that EKS provides. Once the instances are running, they join the cluster, and the Kubernetes control plane schedules pods onto them and runs their containers. Users are also responsible for updating the operating system and Kubernetes version on these data-plane nodes.

EKS-managed node groups

With EKS-managed node groups, EKS provisions the data-plane EC2 instances and manages their lifecycle. Adding nodes or updating their OS or Kubernetes version takes only a few clicks in the web console, and EKS handles the rollout.

AWS Fargate

AWS Fargate is a serverless compute engine that provides on-demand capacity for containers. With it, users can run their Kubernetes data plane without managing any servers. When the Kubernetes control plane creates a pod that matches a Fargate profile, which selects pods by namespace and, optionally, by labels, the pod runs on Fargate capacity without any Kubernetes node creation or configuration.
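On EKS, a pod goes to Fargate only if it matches a Fargate profile, whose selectors name a namespace and, optionally, a set of labels. The Python sketch below models that matching rule in simplified form. It is not AWS code: `FargateSelector` and `runs_on_fargate` are hypothetical names, and the sketch assumes namespaces and labels are compared as exact strings.

```python
from dataclasses import dataclass, field


@dataclass
class FargateSelector:
    """Hypothetical model of one selector in a Fargate profile."""
    namespace: str
    # Optional labels; every one must be present on the pod with the same value.
    labels: dict[str, str] = field(default_factory=dict)


def runs_on_fargate(pod_namespace: str, pod_labels: dict[str, str],
                    selectors: list[FargateSelector]) -> bool:
    """Return True if the pod matches at least one selector (exact matching only)."""
    for selector in selectors:
        if selector.namespace != pod_namespace:
            continue  # the namespace must match before labels are checked
        if all(pod_labels.get(key) == value
               for key, value in selector.labels.items()):
            return True
    return False


if __name__ == "__main__":
    profile = [
        FargateSelector(namespace="batch"),  # every pod in "batch"
        FargateSelector(namespace="web", labels={"tier": "frontend"}),
    ]
    print(runs_on_fargate("batch", {}, profile))
    print(runs_on_fargate("web", {"tier": "frontend", "app": "shop"}, profile))
    print(runs_on_fargate("web", {"tier": "backend"}, profile))
    print(runs_on_fargate("default", {"tier": "frontend"}, profile))
```

Extra pod labels do not prevent a match; only the labels a selector lists are checked. A pod that matches no selector is scheduled onto the cluster's EC2 nodes instead, if it has any.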

A developer can deploy an EKS cluster with eksctl, an open source command-line tool originally developed by Weaveworks. It can provision an entire Kubernetes infrastructure, including the managed control plane and the worker nodes, and it can modify the cluster later as needed. To run commands against the cluster and manage the containerized resources in it, use kubectl, the standard Kubernetes command-line tool, which works the same way with EKS as with any other conformant Kubernetes cluster.
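Besides command-line flags, eksctl can read the cluster definition from a configuration file. The Python sketch below renders a minimal `ClusterConfig` as YAML. `render_cluster_config` is a hypothetical helper, not part of eksctl, and the cluster name, region, instance type and node count are placeholder values chosen for illustration. The `nodeGroups` key requests self-managed worker nodes; `managedNodeGroups` would request EKS-managed node groups instead.

```python
def render_cluster_config(name: str, region: str, instance_type: str,
                          node_count: int) -> str:
    """Render a minimal eksctl ClusterConfig with one self-managed node group.

    Hypothetical helper: the arguments are placeholders, not eksctl defaults.
    """
    if node_count < 1:
        raise ValueError("node_count must be at least 1")
    lines = [
        "apiVersion: eksctl.io/v1alpha5",
        "kind: ClusterConfig",
        "metadata:",
        f"  name: {name}",
        f"  region: {region}",
        "nodeGroups:",  # self-managed worker nodes
        f"  - name: {name}-workers",
        f"    instanceType: {instance_type}",
        f"    desiredCapacity: {node_count}",
    ]
    return "\n".join(lines) + "\n"


if __name__ == "__main__":
    # Placeholder values; redirect the output to cluster.yaml to use it.
    print(render_cluster_config("demo-cluster", "us-east-2", "m5.large", 2), end="")
```

Saved as `cluster.yaml`, the file would be applied with `eksctl create cluster -f cluster.yaml`; once the nodes have joined, `kubectl get nodes` should list them.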

Alternatively, IT teams can run a self-managed Kubernetes environment on EC2 instances, deploying it with tools like kops, which create and manage the cluster. In this case, the control plane is visible and accessible to users: its components run on dedicated EC2 instances that the team can inspect. However, admins must also patch, upgrade and maintain every component by hand, which adds operational work.

Kubernetes on AWS: EKS vs. self-managed

When an organization must decide how it will deploy Kubernetes on AWS, choosing the right option comes down to the use case and specific development requirements. The available features and the simplicity, or lack thereof, will push organizations one way or the other. Key factors to consider are availability and management, security and updates, and cost.

Availability and management

Although EKS runs upstream Kubernetes and is certified Kubernetes-conformant, it has some drawbacks. It's not available in all AWS regions, and it lags behind upstream Kubernetes releases. As of publication, EKS supports only up to Kubernetes version 1.22, even though version 1.24 is already out.

On the other hand, EKS certainly cuts the overhead of Kubernetes deployment and maintenance. This is one of its main advantages. Many companies don't have the resources or the willingness to manage a self-hosted Kubernetes cluster, so interest in running Kubernetes in the cloud rose significantly with the release of EKS.

It's not easy to build a scalable and highly available self-managed Kubernetes cluster, whereas EKS keeps the control plane up and running across multiple Availability Zones on the user's behalf. In addition, EKS with Fargate helps users create a scalable data plane with minimal overhead.
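Part of what makes a highly available self-managed control plane hard to run is etcd, which uses the Raft consensus protocol and needs a strict majority of its members (a quorum) to accept writes. The short Python sketch below works through that arithmetic to show why self-managed clusters typically run three or five etcd members rather than two or four. The helper names are illustrative only.

```python
def quorum(members: int) -> int:
    """Smallest strict majority of an etcd cluster with `members` members."""
    if members < 1:
        raise ValueError("an etcd cluster needs at least one member")
    return members // 2 + 1


def tolerated_failures(members: int) -> int:
    """How many members can fail while the rest still form a quorum."""
    return members - quorum(members)


if __name__ == "__main__":
    for n in range(1, 6):
        print(f"{n} members: quorum {quorum(n)}, "
              f"tolerates {tolerated_failures(n)} failure(s)")
```

Going from three members to four raises the quorum from two to three without raising the number of failures tolerated, which is why odd sizes are the norm. Spreading three members across three Availability Zones lets the cluster survive the loss of one zone.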

Security and updates

Keeping the control plane and data plane secure and up to date is essential for a reliable Kubernetes installation. With a self-managed installation, users must update both the control plane components and the nodes. With EKS, the control plane is the responsibility of AWS, while responsibility for the data plane depends on the node option:

- EKS-managed node groups. Users can update the nodes with one click in the console.

- Self-managed nodes. Securing and updating the nodes is entirely the user's responsibility.

- AWS Fargate. AWS runs the containers in an up-to-date and secure environment, so there are no nodes for users to patch.

Cost

Cost is another factor. EKS charges $0.10 per hour for each cluster, which comes to $73 per month in the U.S. East (Ohio) Region, and organizations pay for the worker nodes on top of that. If a team needs many Kubernetes clusters for small development environments, the $73 per-cluster control plane fee can add up quickly.
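The $73 figure follows from the $0.10 hourly rate and a 730-hour month (24 * 365 / 12), the convention commonly used in AWS monthly price estimates. The Python sketch below reproduces that arithmetic and shows how the control plane fee alone scales with the number of clusters. It covers only the per-cluster EKS fee quoted above, not worker nodes or any other charges, and the function name is illustrative.

```python
HOURS_PER_MONTH = 24 * 365 / 12  # 730 hours in an average month
EKS_FEE_PER_CLUSTER_HOUR = 0.10  # USD per cluster-hour, the rate quoted in the text


def eks_control_plane_fee(clusters: int) -> float:
    """Monthly EKS control plane fee in USD for `clusters` clusters (nodes excluded)."""
    return round(clusters * EKS_FEE_PER_CLUSTER_HOUR * HOURS_PER_MONTH, 2)


if __name__ == "__main__":
    for clusters in (1, 5, 20):
        print(f"{clusters} cluster(s): ${eks_control_plane_fee(clusters):,.2f} per month")
```

Twenty small development clusters, for example, would cost $1,460 per month in control plane fees before a single worker node is added.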

On the other hand, this fee is negligible for large production workloads running on nodes with substantial compute resources. With self-managed Kubernetes clusters, you pay for the control plane nodes as well as the worker nodes, because a reliable Kubernetes API requires running the control plane on separate, dedicated nodes. The total cost will also depend on the instance types and the size of the cluster.
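A rough way to compare the two models is to add up instance-hours. The Python sketch below does that under clearly hypothetical assumptions: the hourly instance prices are made-up placeholders, not real EC2 rates; both options use the same worker nodes; and the self-managed cluster runs three dedicated control plane nodes. It ignores storage, data transfer and, importantly, the staff time needed to operate a self-managed control plane.

```python
HOURS_PER_MONTH = 730
EKS_FEE_PER_CLUSTER_HOUR = 0.10  # USD, the per-cluster rate quoted in the text


def eks_monthly(workers: int, worker_rate: float) -> float:
    """EKS: per-cluster control plane fee plus worker instance-hours (USD/month)."""
    return (EKS_FEE_PER_CLUSTER_HOUR + workers * worker_rate) * HOURS_PER_MONTH


def self_managed_monthly(workers: int, worker_rate: float,
                         control_plane_nodes: int, control_plane_rate: float) -> float:
    """Self-managed: worker plus dedicated control plane instance-hours (USD/month)."""
    return (workers * worker_rate
            + control_plane_nodes * control_plane_rate) * HOURS_PER_MONTH


if __name__ == "__main__":
    # Hypothetical prices in USD per instance-hour, for illustration only.
    worker_rate, control_plane_rate = 0.20, 0.05
    for workers in (2, 50):
        eks = eks_monthly(workers, worker_rate)
        self_managed = self_managed_monthly(workers, worker_rate, 3, control_plane_rate)
        fee_share = EKS_FEE_PER_CLUSTER_HOUR * HOURS_PER_MONTH / eks
        print(f"{workers} workers: EKS ${eks:,.2f}, self-managed ${self_managed:,.2f}, "
              f"EKS fee share {fee_share:.1%}")
```

With these placeholder prices, the EKS fee is 20% of a two-node cluster's bill but about 1% of a 50-node cluster's, the pattern the paragraph above describes. Substituting current EC2 prices for the chosen instance types gives a more realistic comparison.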