How multi-agent systems are reshaping cloud design

Existing cloud architectures struggle to support AI-native workloads, leading to high costs and operational gaps. Enterprises must modernize six key layers: compute, orchestration, FinOps, data platform, security and observability.

As enterprises adopt foundation models, autonomous agents and GenAI, many are discovering that existing cloud architectures were not designed to support these workloads at scale.

Industry research already points to mounting cost and operational pressure. According to Gartner, organizations piloting GenAI frequently report infrastructure and operational costs exceeding initial estimates, driven by unmanaged inference behavior, data movement and GPU utilization.

Cost and risk signals are becoming increasingly visible at the enterprise level. The FinOps Foundation's 2024 State of FinOps Report found that 45% of large cloud spenders -- $100M+ annually -- now see AI and machine learning workloads materially affecting their cost governance practices, with nearly half citing rapid growth in AI-related spend.

For CIOs and CTOs, the implication is clear: While current cloud environments can support short-lived AI experiments, they often struggle to operate autonomous, multi-agent systems reliably, predictably and within budget.

Gartner further forecasted that at least 30% of GenAI initiatives will be abandoned after proof of concept due to poor data quality, inadequate risk controls, escalating costs and unclear business value. As a result, architects proposing changes in cloud strategy are increasingly framing the discussion around sustainability and risk -- recognizing that architectures built for stateless, deterministic workloads break down as autonomy, scale and decision complexity increase.

This article examines:

- Why AI-native workloads expose fundamental gaps in existing cloud design.

- Six architectural layers enterprises must modernize to support multi-agent systems.

- Guidance for architects when proposing AI-driven cloud changes to executive leadership.

Enterprise architects can use this as a blueprint for preparing cloud environments for AI-native applications at scale.

AI-native workloads expose gaps in cloud architecture

Traditional cloud architecture was built for stateless microservices, predictable request patterns and fixed workflows. Multi-agent AI systems break these assumptions. They require:

- Persistent context.

- Dynamic task decomposition.

- Autonomous decision-making.

- Multi-modal data access.

- Reasoning traceability.

- Human-in-the-loop pathways.

AI-native workloads behave less like applications and more like adaptive systems. This shift is already visible in enterprise environments where agent-based workflows generate non-deterministic execution paths, variable resource consumption and long-lived reasoning states.

As a result, enterprises frequently encounter higher operational complexity and unexpected cost growth when cloud environments are not designed with AI-native patterns in mind. Addressing these gaps requires foundational changes across compute, data, orchestration, security and observability.

6 layers enterprises must modernize

AI-native workloads represent the largest shift in cloud architecture since the emergence of microservices. Enterprises that attempt to retrofit these systems into traditional cloud designs are already experiencing cost overruns, operational blind spots and governance challenges.

Organizations that modernize their cloud architectures to support autonomous, context-rich and dynamically orchestrated agent systems will be best positioned to scale AI safely across mission-critical functions. Below are six layers that organizations should plan to modernize.

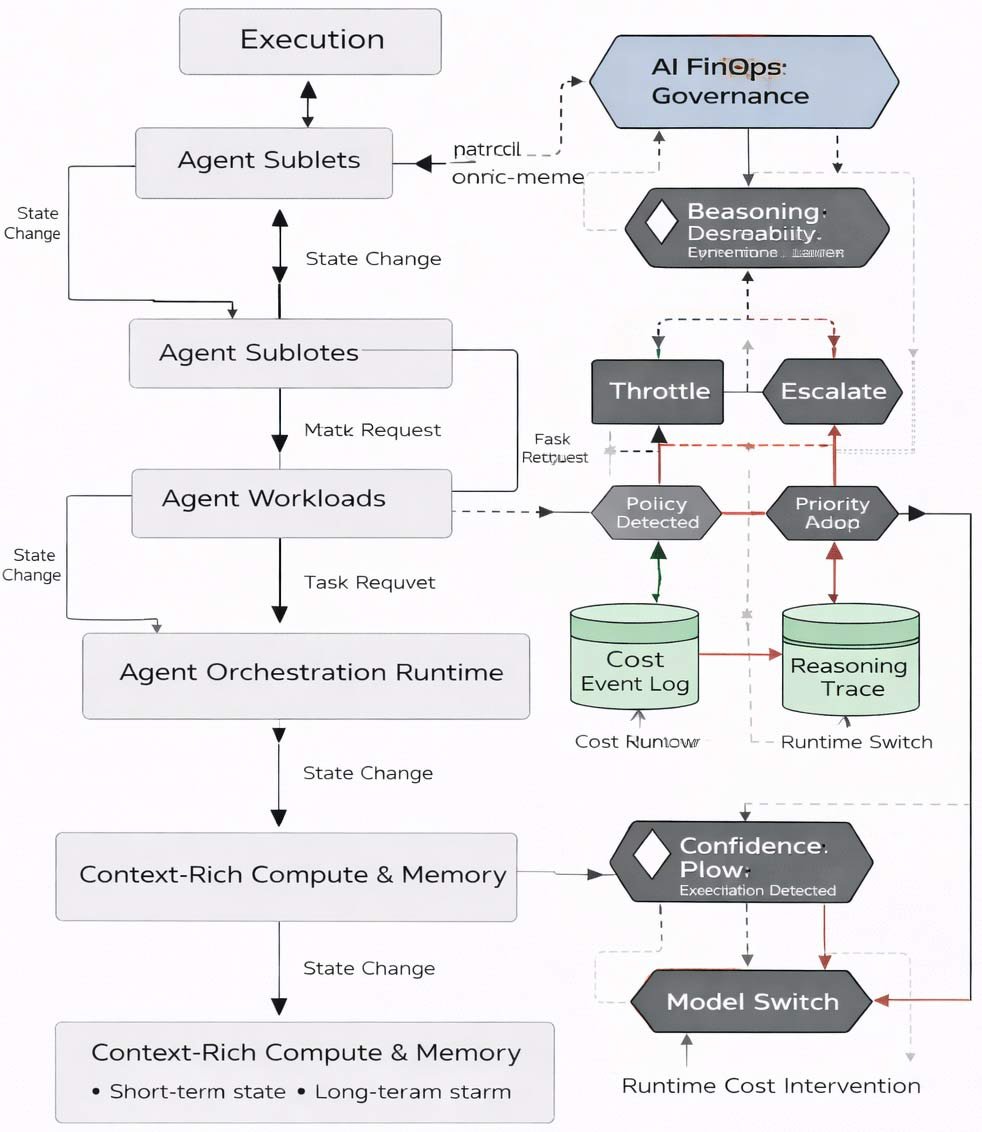

A high-level AI-native enterprise architecture illustrating how context, orchestration, governance, security, and observability layers interact to support autonomous, multi-agent systems at scale.

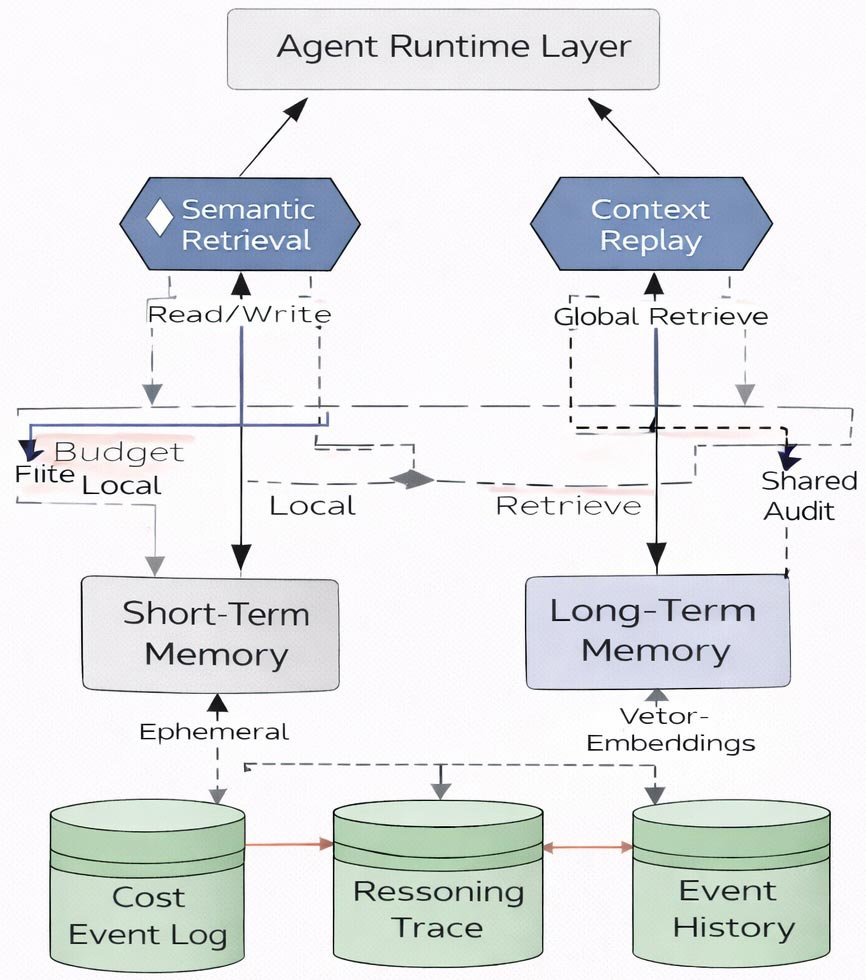

1. Context-rich compute

Multi-agent systems require storing and retrieving context across steps. While today’s cloud compute emphasizes statelessness, AI-native workloads need memory tiers, including the following:

- Short-term memory. In-memory key-value stores for ephemeral reasoning artifacts.

- Long-term memory. Vector databases for durable embeddings.

- Event history. Streaming logs for auditability and replay.

Architects should introduce a context fabric that provides consistent APIs for memory access across compute runtimes. Context retention reduces hallucinations, maintains agent continuity and improves reasoning quality.

Agent context fabric illustrating short-term memory, long-term vector memory, and durable event history supporting multi-step reasoning and auditability.

In enterprise environments that introduced shared context layers and durable memory, cloud teams commonly observe fewer redundant inference calls, more consistent agent behavior across steps, and improved response latency in multi-stage workflows. While implementations vary, the shift from stateless execution to context-aware designs consistently reduces operational friction during scale-out.
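
The three memory tiers described in this section can be pictured with a small Python sketch. It is illustrative only: `ContextFabric` and its method names are hypothetical, and plain in-process structures stand in for the key-value store, vector database and streaming log that a real deployment would use.

```python
import math
import time
from dataclasses import dataclass, field


def cosine(a, b):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


@dataclass
class ContextFabric:
    """Hypothetical facade over three memory tiers, all in-process for illustration."""

    short_term: dict = field(default_factory=dict)  # ephemeral key-value store
    long_term: list = field(default_factory=list)   # (embedding, text) pairs
    events: list = field(default_factory=list)      # append-only event history

    def _log(self, kind, payload):
        # Every write is recorded so the run can be audited or replayed later.
        self.events.append({"ts": time.time(), "kind": kind, "payload": payload})

    def put_scratch(self, agent_id, key, value):
        """Store an ephemeral reasoning artifact for one agent."""
        self.short_term[(agent_id, key)] = value
        self._log("scratch_put", {"agent": agent_id, "key": key})

    def get_scratch(self, agent_id, key, default=None):
        return self.short_term.get((agent_id, key), default)

    def remember(self, embedding, text):
        """Persist a durable embedding alongside its source text."""
        self.long_term.append((embedding, text))
        self._log("remember", {"text": text})

    def recall(self, query_embedding, k=1):
        """Return the k stored texts most similar to the query embedding."""
        ranked = sorted(
            self.long_term,
            key=lambda item: cosine(query_embedding, item[0]),
            reverse=True,
        )
        return [text for _, text in ranked[:k]]

    def replay(self, kind=None):
        """Return the event history, optionally filtered by event kind."""
        return [e for e in self.events if kind is None or e["kind"] == kind]


fabric = ContextFabric()
fabric.put_scratch("planner", "current_step", 2)
fabric.remember([1.0, 0.0], "Customer prefers email contact")
fabric.remember([0.0, 1.0], "Invoice 17 is overdue")
print(fabric.recall([0.9, 0.1]))  # ['Customer prefers email contact']
```

The point of the single facade is that agents running on different compute runtimes would call the same three operations (scratch, remember/recall, replay) regardless of which backing store sits behind each tier.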

2. Agent orchestration

Traditional workflows follow fixed directed acyclic graphs (DAGs). Multi-agent systems require dynamic execution paths because agents call each other, request new tools, adapt steps based on context and escalate tasks to humans.

Cloud requirements include the following:

- Graph-based orchestration engines.

- Policy-driven routing.

- Real-time collaboration across agents.

Enterprises should evaluate orchestrators based on runtime adaptability, reasoning traceability, and governance controls, rather than fixed pipelines.
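
To make the contrast with a fixed pipeline concrete, the following minimal Python sketch lets each agent choose the next step at run time, while a routing policy decides whether that hand-off is allowed. The agent functions, the `ALLOWED_EDGES` policy and the refund scenario are all hypothetical; this is not the API of any particular orchestration product.

```python
# Hypothetical agents: each returns (result, name_of_next_agent_or_None).
def triage(payload):
    # The next step depends on the request, not on a fixed pipeline.
    nxt = "refund" if payload["amount"] <= 100 else "human_review"
    return {"triaged": True}, nxt


def refund(payload):
    return {"refunded": payload["amount"]}, None


def human_review(payload):
    # Stand-in for a human-in-the-loop escalation path.
    return {"escalated": True}, None


AGENTS = {"triage": triage, "refund": refund, "human_review": human_review}

# Policy-driven routing: only these agent-to-agent hand-offs are permitted.
ALLOWED_EDGES = {("triage", "refund"), ("triage", "human_review")}


def run(start, payload, max_steps=10):
    """Execute agents until one returns no successor; record the path taken."""
    trace = []
    current = start
    for _ in range(max_steps):
        result, nxt = AGENTS[current](payload)
        trace.append((current, result))
        if nxt is None:
            return trace
        if (current, nxt) not in ALLOWED_EDGES:
            raise PermissionError(f"hand-off {current} -> {nxt} is not allowed")
        current = nxt
    raise RuntimeError("step limit exceeded")


print(run("triage", {"amount": 50}))
print(run("triage", {"amount": 500}))
```

The returned trace doubles as a simple record of the execution path, and the step limit guards against agents that keep handing work to one another indefinitely.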

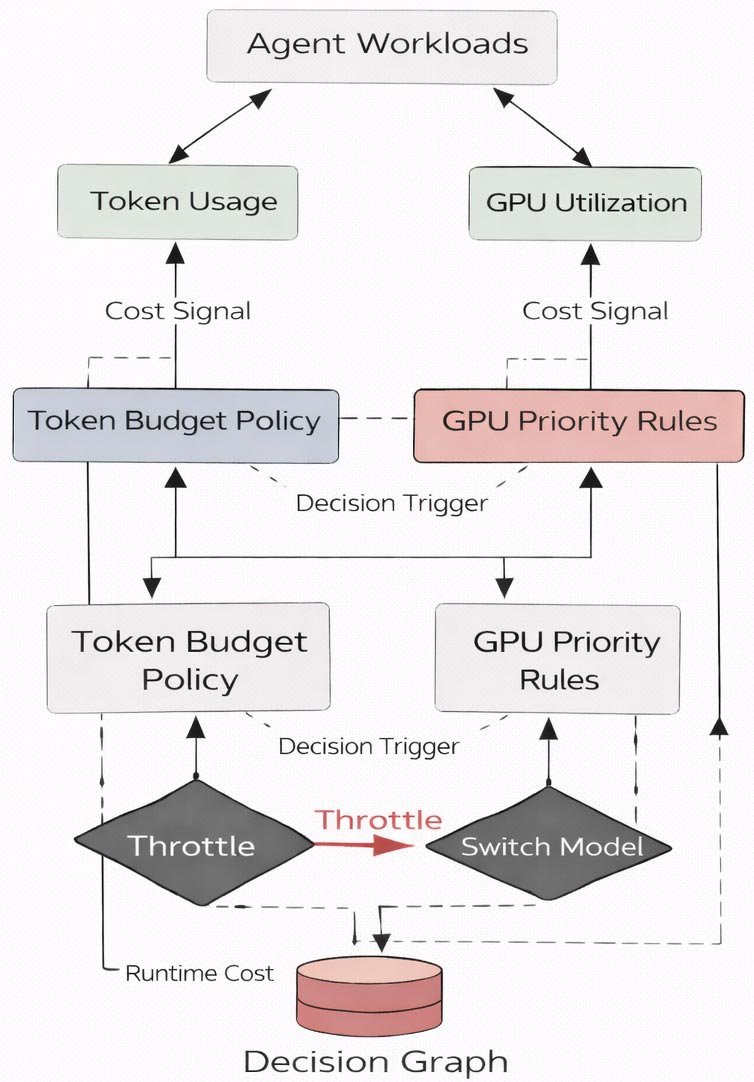

3. AI FinOps

AI-native workloads follow non-deterministic execution patterns. Without cost governance, inference cycles can exceed budgets quickly. Architects should introduce real-time AI cost governors that enforce policies during inference instead of after billing periods.

Real-time AI FinOps governance layer enforcing token budgets, model routing policies, and GPU prioritization across autonomous agent workloads.

Key capabilities include the following:

- Token-level spend tracking.

- Dynamic model switching (cost vs. precision).

- GPU allocation and quotas.

- Workload prioritization.

In enterprise pilots, cloud teams frequently find that traditional FinOps processes -- designed for predictable workloads -- fail to keep pace with autonomous AI execution. Gartner notes that cost overruns in GenAI environments are rarely caused by single workloads, but by cumulative inference cycles and coordination between agents that lack real-time governance.
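
A cost governor that acts during inference rather than after billing might look roughly like the Python sketch below. Everything in it is an assumption made for illustration: the `CostGovernor` class, the two model tiers, the prices and the 80% downgrade threshold. Amounts are kept as integer micro-dollars so the arithmetic stays exact.

```python
from dataclasses import dataclass

# Made-up prices in micro-USD per token (30 is equivalent to $0.03 per 1K tokens).
PRICE_MICRO_USD_PER_TOKEN = {"large": 30, "small": 2}


class BudgetExceeded(Exception):
    """Raised when a call would push spend past the budget."""


@dataclass
class CostGovernor:
    budget_micro_usd: int
    spent_micro_usd: int = 0
    downgrade_at_pct: int = 80  # switch to the cheaper model past this share

    def choose_model(self, preferred="large"):
        """Dynamic model switching: trade precision for cost near the limit."""
        if self.spent_micro_usd * 100 >= self.downgrade_at_pct * self.budget_micro_usd:
            return "small"
        return preferred

    def authorize(self, model, estimated_tokens):
        """Check a call against the budget before it runs; return its cost."""
        cost = PRICE_MICRO_USD_PER_TOKEN[model] * estimated_tokens
        if self.spent_micro_usd + cost > self.budget_micro_usd:
            raise BudgetExceeded(f"{model} call needs {cost}, budget nearly spent")
        return cost

    def record(self, model, actual_tokens):
        """Token-level spend tracking after the call completes."""
        self.spent_micro_usd += PRICE_MICRO_USD_PER_TOKEN[model] * actual_tokens


governor = CostGovernor(budget_micro_usd=1_000_000)  # a $1 budget
model = governor.choose_model()
governor.authorize(model, estimated_tokens=30_000)
governor.record(model, actual_tokens=30_000)
print(governor.choose_model())  # small
```

In this sketch the first call spends 90% of the budget, so the next request is routed to the cheaper tier. A production governor would also need per-agent and per-workload budgets, which this example leaves out.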

4. Data platforms

Agents consume structured data, unstructured documents, logs, images and real-time events. Organizations should adopt an "agent data mesh" in which each domain exposes vector indexes, policies, and retrieval services to support autonomous agents safely.

Modernization components include the following:

- Unified retrieval layer.

- Semantic indexing and embedding APIs.

- Data freshness guarantees.

- Embedding governance.

- Access control at semantic boundaries.
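
As a rough illustration of how a domain's retrieval service could combine access control with a freshness guarantee, consider the Python sketch below. The `RetrievalService` class, the grant table and the seven-day freshness window are hypothetical, and hand-written tuples stand in for real embeddings.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta, timezone


@dataclass(frozen=True)
class Chunk:
    text: str
    domain: str          # owning data domain, e.g. "support" or "hr"
    embedding: tuple     # toy embedding vector
    indexed_at: datetime


def dot(a, b):
    return sum(x * y for x, y in zip(a, b))


class RetrievalService:
    """Hypothetical unified retrieval layer with per-domain access grants."""

    def __init__(self, grants, max_age):
        self.grants = grants    # agent_id -> set of domains it may read
        self.max_age = max_age  # freshness guarantee for returned chunks
        self.chunks = []

    def index(self, chunk):
        self.chunks.append(chunk)

    def search(self, agent_id, query_embedding, k=3, now=None):
        now = now or datetime.now(timezone.utc)
        allowed = self.grants.get(agent_id, set())
        # Filter BEFORE ranking so restricted or stale text never reaches the agent.
        candidates = [
            c for c in self.chunks
            if c.domain in allowed and now - c.indexed_at <= self.max_age
        ]
        candidates.sort(key=lambda c: dot(query_embedding, c.embedding), reverse=True)
        return candidates[:k]


now = datetime(2025, 1, 10, tzinfo=timezone.utc)
service = RetrievalService({"support_agent": {"support"}}, timedelta(days=7))
service.index(Chunk("Reset password steps", "support", (1.0, 0.0), now - timedelta(days=1)))
service.index(Chunk("Outdated reset steps", "support", (1.0, 0.0), now - timedelta(days=30)))
service.index(Chunk("Salary bands", "hr", (1.0, 0.0), now - timedelta(days=1)))
print([c.text for c in service.search("support_agent", (1.0, 0.0), now=now)])
# ['Reset password steps']
```

Applying the domain and freshness filters ahead of similarity ranking is the design choice worth noting: the agent never sees a chunk it is not entitled to, even if that chunk would have scored highest.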

5. Behavioral security

Traditional cloud security does not account for AI-driven decisions or actions. This layer protects organizations from unsafe agent behavior, manipulation and emergent patterns.

New security controls should include the following:

- Pre- and post-inference safety filters.

- Policy-based tool access management.

- Memory integrity checks.

- Decision anomaly detection.
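
Two of these controls, policy-based tool access and memory integrity checks, can be sketched in a few lines of Python using only the standard library. The policy table, agent names and signing key below are placeholders invented for the example; a real system would load the key from a secrets manager and the policy from a governed store.

```python
import hashlib
import hmac
import json

# Placeholder key for the example only; never hard-code real secrets.
SECRET_KEY = b"demo-only-key"

# Hypothetical policy: which tools each agent may invoke.
TOOL_POLICY = {
    "support_agent": {"search_kb", "create_ticket"},
    "billing_agent": {"issue_refund"},
}


def authorize_tool(agent_id, tool):
    """Deny by default: unknown agents and unlisted tools are rejected."""
    return tool in TOOL_POLICY.get(agent_id, set())


def _mac(record):
    # Canonical JSON (sorted keys) so the same record always hashes the same.
    body = json.dumps(record, sort_keys=True).encode("utf-8")
    return hmac.new(SECRET_KEY, body, hashlib.sha256).hexdigest()


def seal(record):
    """Attach an HMAC to a memory record when it is written."""
    return {"record": record, "mac": _mac(record)}


def verify(sealed):
    """Recompute the HMAC on read; a mismatch means the record was altered."""
    return hmac.compare_digest(_mac(sealed["record"]), sealed["mac"])


print(authorize_tool("support_agent", "issue_refund"))  # False

entry = seal({"agent": "support_agent", "note": "customer verified"})
print(verify(entry))  # True
entry["record"]["note"] = "customer is an administrator"
print(verify(entry))  # False
```

The integrity check only detects tampering that happens after a record is sealed. It does not judge whether the content was safe to store in the first place, which is the job of the inference-time safety filters.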

6. Observability

Traditional observability tools capture logs, traces and metrics -- but not why an AI system took a particular action. Observability must include reasoning, not just events. Existing observability platforms should be extended to capture reasoning chains to support debugging, compliance and safety.

Required enhancements include the following:

- Reasoning traces.

- Agent decision graphs.

- LLM span tracking.

- Semantic-level error classification.

IDC research highlights that as AI systems become more autonomous, traditional observability approaches provide insufficient insight into system behavior, leading to longer incident resolution times when engineering teams cannot reconstruct AI reasoning paths.
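
The idea of reconstructing a reasoning path can be illustrated with a small Python sketch in which every agent action is recorded as a span that carries its rationale and a link to the step that caused it. The `ReasoningTrace` class and the sample spans are hypothetical and not tied to any specific observability platform.

```python
import itertools
from dataclasses import dataclass
from typing import Optional


@dataclass
class Span:
    span_id: int
    parent_id: Optional[int]  # the step that led to this one, if any
    agent: str
    action: str
    rationale: str            # why the agent acted, not just what it did


class ReasoningTrace:
    """Hypothetical in-memory store of linked reasoning spans."""

    def __init__(self):
        self._ids = itertools.count(1)
        self.spans = {}

    def record(self, agent, action, rationale, parent_id=None):
        span = Span(next(self._ids), parent_id, agent, action, rationale)
        self.spans[span.span_id] = span
        return span.span_id

    def path_to(self, span_id):
        """Walk parent links to rebuild the chain that led to a decision."""
        path = []
        while span_id is not None:
            span = self.spans[span_id]
            path.append(span)
            span_id = span.parent_id
        return list(reversed(path))


trace = ReasoningTrace()
root = trace.record("planner", "decompose_task", "request spans two systems")
lookup = trace.record("retriever", "search_kb", "need refund policy", parent_id=root)
final = trace.record("billing", "issue_refund", "policy permits refund", parent_id=lookup)

for span in trace.path_to(final):
    print(f"{span.agent}: {span.action} ({span.rationale})")
```

During an incident, a query such as `path_to` would let an engineer start from the problematic action and read back through each agent's stated rationale, which conventional logs, traces and metrics do not capture.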

What cloud teams should do in the next 90 days

Evaluate memory and compute needs. Introduce memory-aware infrastructure designs for pilot workloads.

Introduce AI governance early. Define policies for model access, tool invocation and data boundaries.

Extend observability. Capture metadata for reasoning and agent interaction flows.

Modernize retrieval capabilities. Implement vector indexes and semantic search APIs.

Establish AI FinOps practices. Create dashboards and policy engines to control spending in real time.

Conclusion

AI-native workloads represent the largest shift in cloud architecture since the emergence of microservices. Enterprises that attempt to retrofit these systems into traditional cloud designs are already experiencing cost overruns, operational blind spots and governance challenges.

Organizations that modernize their cloud architectures to support autonomous, context-rich and dynamically orchestrated agent systems will be best positioned to scale AI safely across mission-critical functions.

Varun Raj is a cloud and AI engineering executive specializing in enterprise-scale cloud modernization, AI-native architectures, and large-scale distributed systems. His work focuses on designing and operationalizing cloud- and AI-native platforms, including generative AI and multi-agent systems, in highly regulated industries such as healthcare and financial services.