Multiprotocol Label Switching (MPLS)

What is Multiprotocol Label Switching (MPLS)?

Multiprotocol Label Switching (MPLS) is a switching mechanism used in wide area networks (WANs).

MPLS uses labels instead of network addresses to forward traffic along predetermined paths. MPLS is protocol-agnostic and can speed up and shape traffic flows across WANs and service provider networks. By optimizing traffic, MPLS reduces downtime and improves speed and quality of service (QoS).

History of MPLS

As the internet grew in popularity, organizations looked for an efficient way to perform packet forwarding. Bandwidth demands increased, but traditional routing mechanisms struggled to handle the load. Traditional IP routing requires each router to independently determine a packet's next hop by inspecting its destination IP address and consulting its routing table. This slow process consumes hardware resources and can degrade performance for real-time applications, such as voice and video. Traditional routers needed to scale more effectively to meet the bandwidth needs of the modern internet and avoid slow speeds, jitter and packet loss.

In 1997, the Internet Engineering Task Force (IETF) Multiprotocol Label Switching working group formed to create standards to help fix the issues around internet traffic routing. MPLS was developed as an alternative to multilayer switching and IP over asynchronous transfer mode (ATM). Transit MPLS routers forward packets by label instead of performing a full routing table lookup on each packet, which helps boost the speed of network traffic. MPLS became widely adopted as its techniques matured throughout the early 2000s.

MPLS can work in a multiprotocol environment, such as ATM, frame relay, Synchronous Optical Network and Ethernet. MPLS continues to evolve as backbone network technologies evolve, and the IETF working group still works on MPLS protocols and mechanisms. MPLS also played a significant role in the support of legacy network technologies, as well as newer technology based on IP networks.

Components of MPLS

MPLS is defined by its use of labels instead of network addresses. This factor drives the flexibility and efficiency of MPLS.

A label is a four-byte -- 32-bit -- identifier that conveys the packet's predetermined forwarding path in an MPLS network. While a network address specifies an endpoint, a label specifies a path between endpoints. This capability enables MPLS to select the best path for a given packet. Labels can also carry information about QoS and a packet's priority level.

MPLS labels consist of the following four parts:

- Label value: 20 bits.

- Experimental: 3 bits. Used in practice to carry QoS priority and renamed the Traffic Class field by RFC 5462.

- Bottom of stack: 1 bit.

- Time to live: 8 bits.
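The four fields above fit into a single 32-bit word. The following is a minimal Python sketch of how such a word could be packed and unpacked, assuming the fields appear in the order listed and are sent in network byte order. The function names `pack_label_entry` and `unpack_label_entry` are illustrative and do not come from any particular library.

```python
import struct


def pack_label_entry(label: int, tc: int, bottom: bool, ttl: int) -> bytes:
    """Pack one 32-bit label entry: 20-bit label, 3-bit class, 1-bit S, 8-bit TTL."""
    if not 0 <= label < 2**20:
        raise ValueError("label value must fit in 20 bits")
    if not 0 <= tc < 2**3:
        raise ValueError("experimental/traffic class must fit in 3 bits")
    if not 0 <= ttl < 2**8:
        raise ValueError("time to live must fit in 8 bits")
    word = (label << 12) | (tc << 9) | (int(bottom) << 8) | ttl
    return struct.pack("!I", word)  # "!I" = big-endian unsigned 32-bit integer


def unpack_label_entry(data: bytes) -> dict:
    """Split the first four bytes of data back into the four fields."""
    (word,) = struct.unpack("!I", data[:4])
    return {
        "label": word >> 12,
        "tc": (word >> 9) & 0x7,
        "bottom": bool((word >> 8) & 0x1),
        "ttl": word & 0xFF,
    }


entry = pack_label_entry(label=100, tc=5, bottom=True, ttl=64)
fields = unpack_label_entry(entry)
```

The range checks show why the label value tops out at 2^20 - 1: any larger number would spill into the neighboring fields.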

MPLS is multiprotocol, which means it can handle multiple network protocols. MPLS is highly versatile and unifying, as it provides mechanisms to carry a multitude of traffic, including Ethernet traffic. One of the key differentiators between MPLS and traditional routing is that MPLS doesn't need specialized or additional hardware.

Below is an overview of MPLS:

- It forwards using labels, as opposed to network addresses.

- The label contains the service class, as well as the destination, of the packet.

- It operates between Layers 2 and 3 of the Open Systems Interconnection (OSI) model.

- It can guarantee bandwidth along paths.

- ATM switches can act as routers, so no additional hardware is needed.

How an MPLS network works

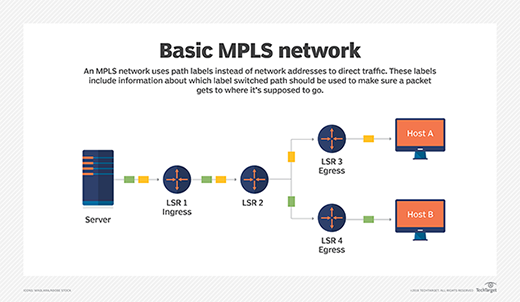

In an MPLS network, packets are labeled by an ingress router -- a label edge router (LER) -- as they enter a service provider's network. The first router to receive a packet calculates the packet's entire path upfront. It also conveys a unique identifier to subsequent routers using a label in the packet header.

Every prefix in a routing table receives a unique identifier, and the MPLS service tells routers exactly where to look in the routing table for a specific prefix. This mechanism speeds up communication and traffic hopping.
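To illustrate the difference, here is a rough Python sketch that contrasts a longest-prefix match on a destination address with an exact-match lookup on a label. The prefixes, next-hop names and label numbers are hypothetical, and real routers use specialized data structures rather than Python dictionaries.

```python
import ipaddress

# Hypothetical routing table: prefix -> next hop (names are illustrative only).
ROUTES = {
    ipaddress.ip_network("0.0.0.0/0"): "R1",
    ipaddress.ip_network("10.0.0.0/8"): "R2",
    ipaddress.ip_network("10.1.0.0/16"): "R3",
}

# Assumed label bindings: each prefix receives one unique label.
# Numbering starts at 16 because values 0 to 15 are reserved.
LABEL_FOR_PREFIX = {net: 16 + i for i, net in enumerate(ROUTES)}

# Label table keyed by label, so forwarding is a single exact-match lookup.
LABEL_TABLE = {LABEL_FOR_PREFIX[net]: hop for net, hop in ROUTES.items()}


def longest_prefix_match(dst: str):
    """Scan every prefix and keep the most specific one that contains dst."""
    addr = ipaddress.ip_address(dst)
    matches = [net for net in ROUTES if addr in net]
    best = max(matches, key=lambda net: net.prefixlen)
    return best, ROUTES[best]


def label_lookup(label: int) -> str:
    """Exact-match lookup: the label points straight at the table entry."""
    return LABEL_TABLE[label]


prefix, hop_by_address = longest_prefix_match("10.1.2.3")
hop_by_label = label_lookup(LABEL_FOR_PREFIX[prefix])
```

Both lookups return the same next hop. The address-based lookup must compare the destination against several candidate prefixes, while the label-based lookup is a single keyed read.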

MPLS works between the following OSI model layers:

- Layer 2. The data-link layer, or switching level, which uses protocols such as Ethernet.

- Layer 3. The routing layer, which covers traffic routing.

MPLS-labeled traffic is sent via a label-switched path (LSP), and the label itself is inserted between the Layer 2 and Layer 3 headers. Label switch routers (LSRs) interpret the MPLS labels -- not the full IP address of any traffic. MPLS forwards data packets at Layer 2 of the OSI model, rather than passing them up to Layer 3. For this reason, MPLS is informally described as operating at Layer 2.5.
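The position of the label between the two headers can be sketched in Python. This example assumes an untagged Ethernet frame (no VLAN tag) that uses EtherType 0x8847 for MPLS unicast, and it reads 32-bit label entries until it finds one with the bottom-of-stack bit set. The function name `split_frame` and the sample frame are illustrative only.

```python
import struct

MPLS_UNICAST_ETHERTYPE = 0x8847
ETH_HEADER_LEN = 14  # destination MAC (6) + source MAC (6) + EtherType (2)


def split_frame(frame: bytes):
    """Return (label_values, layer3_payload) for an Ethernet frame carrying MPLS."""
    (ethertype,) = struct.unpack("!H", frame[12:14])
    if ethertype != MPLS_UNICAST_ETHERTYPE:
        raise ValueError("not an MPLS unicast frame")
    labels = []
    offset = ETH_HEADER_LEN
    while True:
        if offset + 4 > len(frame):
            raise ValueError("truncated label stack")
        (word,) = struct.unpack("!I", frame[offset:offset + 4])
        offset += 4
        labels.append(word >> 12)   # top 20 bits hold the label value
        if (word >> 8) & 0x1:       # bottom-of-stack bit ends the stack
            break
    return labels, frame[offset:]


# Build a sample frame: zeroed MAC addresses, two stacked labels, dummy payload.
eth_header = bytes(6) + bytes(6) + struct.pack("!H", MPLS_UNICAST_ETHERTYPE)
outer = struct.pack("!I", (300 << 12) | 64)               # S bit clear
inner = struct.pack("!I", (100 << 12) | (1 << 8) | 64)    # S bit set
frame = eth_header + outer + inner + b"IP-PACKET"

labels, payload = split_frame(frame)
```

An LSR only needs to read the bytes immediately after the Layer 2 header. The Layer 3 payload that follows the label stack is carried through untouched.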

MPLS routing terminology

Label edge routers. LERs are the ingress or egress routers or nodes, meaning an LSR that is the first or last router in the path, respectively. LERs label incoming data -- the ingress node -- or pop the label off the packet -- the egress node.

Label-switched paths. LSPs are the pathways through which packets are routed. An LSP enables service providers to decide the best way to flow certain types of traffic within a private or public network.

Label switch routers. LSRs read the labels and send labeled data on identified pathways. Intermediate LSRs are available if a packet data link needs to be corrected.

Pop. This mechanism removes a label and is usually performed by the egress router.

Push. This mechanism adds a label and is typically performed by the ingress router.

Swap. This mechanism replaces a label and is usually performed by LSRs between the ingress and egress routers.

Steps of an MPLS network traffic pathway

Here is an example of how a packet travels through an MPLS network:

- A packet enters the network through an LER.

- The packet is assigned to a forwarding equivalence class (FEC). The FEC assignment depends on the type of data and the destination. FECs are used to identify packets with similar or identical characteristics.

- The LER -- or ingress node -- applies a label to the packet and pushes it inside an LSP. The LER decides which LSP the packet takes until it reaches its destination address.

- The packet moves through the network across LSRs.

- When an LSR receives a packet, it carries out a Push, Swap or Pop action, as determined by the packet's label. Intermediate LSRs typically swap the label.

- In the final step, the LER -- or egress router -- removes the labels and then forwards the original IP packet toward its destination.
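The steps above can be sketched as a small Python simulation. Everything in it is a simplifying assumption: the FEC is chosen by a plain string-prefix match on the destination, the router names and label numbers are made up, and the path is fixed at two intermediate LSRs.

```python
from dataclasses import dataclass, field


@dataclass
class Packet:
    dst: str
    payload: str
    labels: list = field(default_factory=list)  # top of the stack is the last item


# Hypothetical FEC table at the ingress LER: destination prefix -> first label.
INGRESS_FEC = {"10.1.": 100, "10.2.": 200}

# Hypothetical per-LSR label tables: incoming label -> outgoing label.
LSR_TABLES = {
    "LSR-A": {100: 101, 200: 201},
    "LSR-B": {101: 102, 201: 202},
}


def ingress(packet: Packet) -> None:
    """Assign the packet to a FEC and push the matching label."""
    for prefix, label in INGRESS_FEC.items():
        if packet.dst.startswith(prefix):
            packet.labels.append(label)
            return
    raise LookupError(f"no FEC for destination {packet.dst}")


def transit(lsr: str, packet: Packet) -> None:
    """Swap the top label using only the label, never the IP address."""
    packet.labels[-1] = LSR_TABLES[lsr][packet.labels[-1]]


def egress(packet: Packet) -> None:
    """Pop the label so the original packet can be forwarded by IP."""
    packet.labels.pop()


def send(packet: Packet) -> list:
    """Walk the packet along the LSP and record the label stack at each hop."""
    trace = []
    ingress(packet)
    trace.append(("LER-in", list(packet.labels)))
    for lsr in ("LSR-A", "LSR-B"):
        transit(lsr, packet)
        trace.append((lsr, list(packet.labels)))
    egress(packet)
    trace.append(("LER-out", list(packet.labels)))
    return trace


trace = send(Packet(dst="10.1.5.9", payload="hello"))
```

The recorded trace shows one push at the ingress, one swap per intermediate LSR and one pop at the egress. Only the ingress function ever looks at the destination address.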

Benefits of MPLS

Router hardware has improved significantly since the development of MPLS, but MPLS still offers important benefits.

QoS controls and reliability

Services need to be able to meet service-level agreements that cover traffic latency, jitter, packet loss and downtime. Service providers and enterprises use MPLS to implement QoS by defining LSPs that can meet the specific needs of a service. For example, a network might offer three service levels, each prioritizing different types of traffic -- e.g., one level for voice, one for time-sensitive traffic and one for best-effort traffic.

VPNs

MPLS supports traffic separation and the creation of virtual private networks (VPNs), virtual private local area network services and virtual leased lines.

Agnostic protocol support

MPLS is not tied to any specific protocol or transport medium. MPLS supports transport over IP, Ethernet, ATM and frame relay. An LSP can be created using any of these protocols. Generalized MPLS extends MPLS to manage time-division multiplexing and other classes of switching technologies beyond packet switching.

Reduced latency and improved performance

MPLS is ideal for latency-sensitive applications, such as those handling video, voice and mission-critical data. In addition, MPLS reduces latency by forwarding data more quickly using short path labels rather than long network addresses.

To optimize performance, different types of data can be preassigned different priorities and classes of service. Organizations can assign different bandwidth percentages for various kinds of data to ensure optimal delivery and access.
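As a worked example of the arithmetic, the short Python sketch below divides a link's capacity among traffic classes by percentage. The class names, the 200 Mbps link and the percentages are hypothetical values chosen for illustration, not recommendations.

```python
def allocate_bandwidth(link_mbps: int, shares_percent: dict) -> dict:
    """Split link capacity (in Mbps) among classes by whole-number percentages."""
    if sum(shares_percent.values()) != 100:
        raise ValueError("class percentages must add up to 100")
    # Integer arithmetic avoids floating-point rounding in the result.
    return {name: link_mbps * pct // 100 for name, pct in shares_percent.items()}


allocation = allocate_bandwidth(
    200,
    {"voice": 20, "time_sensitive": 50, "best_effort": 30},
)
```

With these assumed numbers, voice receives 40 Mbps, time-sensitive traffic 100 Mbps and best-effort traffic 60 Mbps.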

Scalability

MPLS networks are scalable. Companies can provision and pay for only the bandwidth they need until their requirements change.

MPLS and security

A correctly configured MPLS network provides strong traffic isolation. MPLS connections run over a private, dedicated network, which creates customer isolation and helps ensure privacy.

MPLS traffic isn't usually encrypted, but packets' labeling improves security through unique identifiers and isolation.

Organizations should use additional security measures to ensure MPLS networks are secured. Extra security best practices should include a defense-in-depth approach that uses measures such as denial-of-service prevention, firewalls to filter out malicious packets and authentication protocols to limit access. A best practice is to use a VPN tunnel between the provider edge routers and customer edge routers.

MPLS vs. SD-WAN

Many experts have debated the relevance of MPLS with the rise of software-defined WAN (SD-WAN) technology. SD-WAN enables organizations to use cheaper WAN connectivity options, such as broadband, sometimes replacing more expensive MPLS links.

SD-WAN offers several advantages over traditional MPLS networks.

No bandwidth penalties

SD-WAN does not have bandwidth penalties. Consequently, SD-WAN customers can easily upgrade by adding new links without making changes to the network or infrastructure.

Less costly

MPLS can be more expensive than SD-WAN. Many companies connect retail locations and branch offices to the central data centers via hub-and-spoke WAN models that depend on individual MPLS connections. Consequently, all data, workflows and transactions, including internet or cloud services access, must backhaul to the data center to be processed and redistributed.

SD-WAN cuts costs by offering optimized, multipoint connectivity through distributed, private data traffic exchange and control points. This gives users local and secure access to the services they need from the network or the cloud, while securing direct access to the internet and cloud resources.

Encrypted traffic

While MPLS networks are typically safe, MPLS does not use end-to-end encryption, making it vulnerable to cyber attacks. In addition, SD-WAN is a component of Secure Access Service Edge security, which offers significant benefits, including zero trust and endpoint protection.

But MPLS has some benefits over SD-WAN, including the following:

- Packet control. MPLS provides more granular control than SD-WAN, and packets always follow the defined path.

- Excellent isolation capabilities. This isolation enhances privacy and security.

Hybrid MPLS and SD-WAN

Organizations can use a hybrid combination of SD-WAN and MPLS to provide a comprehensive WAN strategy.

SD-WAN enables real-time application traffic steering over any link, including MPLS. A hybrid SD-WAN-MPLS strategy uses a mix of traditional MPLS with internet connectivity from an SD-WAN.

Typical hybrid use cases employ a single MPLS line with an SD-WAN connection per site. Each link is monitored for packet loss and jitter, and traffic fails over automatically if a line fails.
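The monitoring and failover behavior can be sketched in Python. This is a simplified model under stated assumptions: the loss and jitter thresholds are hypothetical, links are listed in order of preference, and the `pick_link` function is illustrative rather than any vendor's algorithm.

```python
from dataclasses import dataclass


@dataclass
class LinkStats:
    name: str
    up: bool
    loss_pct: float
    jitter_ms: float


def pick_link(links, max_loss_pct=1.0, max_jitter_ms=30.0):
    """Choose a link for traffic; links are given in order of preference.

    Thresholds are assumed values for illustration only.
    """
    healthy = [
        link for link in links
        if link.up and link.loss_pct <= max_loss_pct and link.jitter_ms <= max_jitter_ms
    ]
    if healthy:
        return healthy[0]  # first preferred link that meets both thresholds
    usable = [link for link in links if link.up]
    if usable:
        return min(usable, key=lambda link: link.loss_pct)  # least-bad fallback
    return None  # every link is down


mpls = LinkStats("mpls", up=True, loss_pct=0.1, jitter_ms=5.0)
internet = LinkStats("internet", up=True, loss_pct=0.5, jitter_ms=20.0)

normal_choice = pick_link([mpls, internet]).name

mpls.up = False  # simulate an MPLS line failure
failover_choice = pick_link([mpls, internet]).name
```

In this sketch, traffic stays on the preferred MPLS line while it is healthy and moves to the internet link once the MPLS line is marked down.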

The future of MPLS

The future of networking is about reducing complexity, minimizing risk and optimizing spend. While many experts speculated about the demise of MPLS, saying SD-WAN will overtake it, MPLS has persisted, albeit at lower uptake levels. According to Market Growth Reports, the managed MPLS market is expected to grow until 2027 at a CAGR of 6.73%. One of the main reasons MPLS will see continued growth as a managed service is the guaranteed optimized performance of apps, such as voice over IP, through better QoS via a managed service provider (MSP).

SD-WAN is the proposed alternative to MPLS, as it offers some benefits above and beyond MPLS. SD-WAN security is enhanced using a framework of integrated security services. Gartner predicted that, by 2024, 80% of SD-WAN deployments will incorporate security service edge requirements. This security framework requires expertise and skills to implement and manage. For SMBs, this will most likely be achieved using an MSP.

In the next few years, most analysts expect that MPLS will continue to be a viable option, especially for connecting regional offices. However, the hybrid model of MPLS and SD-WAN is likely to see traction, especially as a managed service.