What is quality assurance (QA)?

Quality assurance (QA) is any systematic process of determining whether a product or service meets specified requirements. QA establishes and maintains set requirements for developing or manufacturing reliable products.

A quality assurance process is meant to increase customer confidence and a company's credibility while improving work processes and efficiency. It also helps a company compete in its market.

The International Organization for Standardization (ISO) is a driving force behind QA practices and behind mapping the processes used to implement them. QA is often paired with the ISO 9000 family of international standards, and many companies use these standards to ensure their QA system is in place and effective.

The concept of QA as a formal practice started in the manufacturing industry. It has spread to most industries, including software engineering.

Importance of quality assurance

There are three primary reasons why effective QA implementation is essential:

- Customer satisfaction. QA helps a company create products and services that meet customers' needs, expectations and requirements.

- Public trust. QA yields high-quality product offerings and services that build trust and loyalty.

- Product quality. QA programs define standards and procedures that prevent product defects and other issues before they arise.

Quality assurance methods

QA processes are typically built on one or more of the following methods:

- Failure testing. This approach continually tests a product to determine if it breaks or fails. For physical products that need to withstand stress, this could involve testing the product under heat, pressure or vibration. For software products, failure testing might involve placing the software under high use or load conditions.

- Statistical process control. SPC is a methodology based on objective data and analysis. It was developed by Walter Shewhart at Western Electric Company and Bell Telephone Laboratories in the 1920s and 1930s. It uses statistical methods to monitor and control production processes.

- Total quality management system. TQM applies quantitative methods as the basis for continuous improvement. It relies on facts, data and analysis to support product planning and performance reviews.
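The control chart at the heart of statistical process control can be sketched in a few lines of Python. This is a minimal, illustrative individuals (I-MR) chart, not a complete SPC tool: it estimates process variation from the average moving range of baseline data assumed to be in control, places control limits three standard deviations on either side of the mean, and flags new measurements that fall outside them. The function names and measurement values are hypothetical.

```python
from statistics import mean

# d2 bias-correction constant for moving ranges of two consecutive points,
# used to estimate sigma in an individuals (I-MR) control chart.
D2_FOR_N2 = 1.128


def control_limits(baseline: list[float]) -> tuple[float, float, float]:
    """Return (lower limit, center line, upper limit) from in-control baseline data."""
    center = mean(baseline)
    # Absolute differences between consecutive measurements.
    moving_ranges = [abs(b - a) for a, b in zip(baseline, baseline[1:])]
    sigma_hat = mean(moving_ranges) / D2_FOR_N2
    return center - 3 * sigma_hat, center, center + 3 * sigma_hat


def out_of_control(baseline: list[float], new_points: list[float]) -> list[int]:
    """Indices of new measurements that fall outside the 3-sigma control limits."""
    lcl, _, ucl = control_limits(baseline)
    return [i for i, x in enumerate(new_points) if x < lcl or x > ucl]


# Hypothetical measurements of a part dimension, in millimeters.
baseline = [10.0, 10.2, 9.9, 10.1, 10.0, 9.8, 10.1, 10.0]
new_points = [10.1, 10.7, 9.3, 10.0]
print(out_of_control(baseline, new_points))  # [1, 2]
```

Real SPC programs usually add run rules, such as the Western Electric rules, to catch trends and shifts that stay inside the limits.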

Quality assurance vs. quality control

Some people may confuse the term QA with quality control (QC). The two concepts are similar, but there are important distinctions between them.

QA is process-oriented and provides guidelines that can be applied anywhere in the product lifecycle. QC is product-oriented and focuses on production output. QA is any systematic process for ensuring a product meets specified requirements, whereas QC addresses narrower issues, such as inspecting individual items or finding defects.

In software development, QA practices seek to prevent malfunctioning code or products. QC covers testing, troubleshooting and fixing code.

QA standards

QA and ISO standards have changed and been updated over time to stay relevant to today's businesses.

ISO 9001:2015 is the latest version of ISO 9001, the core standard in the ISO 9000 series. Compared with earlier versions, it places a stronger focus on customers and on the role of top management, including guidance on how management practices can change a company. ISO 9001:2015 also improves the standard's structure and adds more information on risk-based decision-making.

ISO 9001 helps organizations improve and optimize processes, efficiently resolve customer queries and complaints, and increase overall customer satisfaction. The standard encourages organizations to regularly review their QA processes to identify areas for improvement.

Quality assurance in software

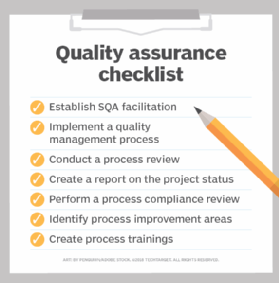

Software quality assurance (SQA) systematically identifies patterns and the actions needed to improve development cycles. However, finding and fixing coding errors can have unintended consequences; fixing one issue can cause problems with other features and functionality.

SQA has become an important way for developers to avoid errors before they occur, saving development time and expenses. But even with SQA processes in place, an update to software can break other features and cause defects -- known as bugs.
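One common SQA safeguard against this kind of breakage is regression testing: after every change, re-run checks that passed before and compare the results with recorded outputs. The minimal Python sketch below assumes two hypothetical features, format_name and format_badge, where the second reuses the first; the recorded baseline outputs are illustrative.

```python
def format_name(first: str, last: str) -> str:
    """Hypothetical feature: 'LAST, First' for reports."""
    return f"{last.upper()}, {first}"


def format_badge(first: str, last: str) -> str:
    """Hypothetical second feature that reuses format_name."""
    return f"[{format_name(first, last)}]"


# Outputs recorded when both features were known to be correct.
BASELINE = [
    (format_name, ("Ada", "Lovelace"), "LOVELACE, Ada"),
    (format_badge, ("Ada", "Lovelace"), "[LOVELACE, Ada]"),
]


def find_regressions() -> list[str]:
    """Re-run every recorded case and report any whose output no longer matches."""
    failures = []
    for func, args, expected in BASELINE:
        actual = func(*args)
        if actual != expected:
            failures.append(f"{func.__name__}{args}: expected {expected!r}, got {actual!r}")
    return failures


print(find_regressions())  # []
```

If someone later changes format_name (for example, to stop upper-casing surnames), find_regressions reports a mismatch for format_badge as well, even though format_badge itself was never edited.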

Numerous SQA strategies exist. For example, the Capability Maturity Model (CMM) is an SQA model focused on process improvement. CMM rates the maturity of an organization's processes and identifies optimizations that can be used for improvement. Its five levels (initial, repeatable, defined, managed and optimizing) range from ad hoc, disorganized processes to continuously optimized ones.

Software development methodologies that rely on SQA include Waterfall, Agile and Scrum. Each development process seeks to optimize work efficiency.

Waterfall

This methodology takes a traditional linear approach to software development. It's a step-by-step process that typically involves gathering requirements, formalizing a design, implementing code, testing, remediation and release. Because it's often seen as too slow, alternative development methods emerged.

Agile

This approach is team-oriented and breaks work into short iterations, often called sprints. Agile software development is highly adaptive but less predictable, because the scope of the project can easily change.

Scrum

This framework is a way of implementing Agile. Developers work in small teams, and work is planned and delivered in a series of short, time-boxed sprints.

The first step in choosing a QA methodology is to set goals. Then, consider the advantages and tradeoffs of each approach, such as maximizing efficacy, reducing cost or minimizing errors. Management must be willing to implement process changes and work together to support the QA system and establish quality standards.

QA team

SQA career options include SQA engineer, SQA analyst, SQA manager and SQA test automation specialist. SQA engineers monitor and test software throughout development. An SQA analyst tracks SQA practices and their effects across software development cycles. SQA test automation specialists create programs that automate parts of the SQA process.

These programs compare expected outcomes with actual outcomes to check that software quality standards are consistently met. An SQA manager oversees the entire process and examines outcomes to verify that the software is production-ready. Together, these roles give an organization a comprehensive approach to quality assurance.
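Automated tests that compare expected and actual outcomes can be illustrated with Python's built-in unittest module. In this sketch, apply_discount is a hypothetical function under test, and each case pairs an input with the outcome that the (assumed) requirements predict; a test fails whenever the actual outcome differs.

```python
import unittest


def apply_discount(price: float, percent: float) -> float:
    """Hypothetical function under test: reduce price by percent, rounded to cents."""
    if not 0 <= percent <= 100:
        raise ValueError("percent must be between 0 and 100")
    return round(price * (1 - percent / 100), 2)


class ApplyDiscountTest(unittest.TestCase):
    # Each case pairs an input with the outcome the requirements predict.
    CASES = [
        ((100.0, 0), 100.0),
        ((100.0, 25), 75.0),
        ((19.99, 10), 17.99),
        ((50.0, 100), 0.0),
    ]

    def test_expected_outcomes(self):
        for args, expected in self.CASES:
            # subTest reports each failing case separately instead of stopping early.
            with self.subTest(args=args):
                self.assertAlmostEqual(apply_discount(*args), expected, places=2)

    def test_rejects_invalid_percent(self):
        with self.assertRaises(ValueError):
            apply_discount(100.0, 150)
```

Saved as, say, test_discount.py (a hypothetical file name), the tests run with `python -m unittest test_discount.py`. A test automation specialist would typically wire a command like this into a build pipeline so it runs on every change.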

SQA tools

Software testing is an integral part of software QA. Testing saves time, effort and cost, and it facilitates the production of a quality end product. Developers can use numerous software tools and platforms to automate and orchestrate testing to facilitate SQA goals.

Selenium is an open source framework for automating web browsers, widely used to test web applications. Test scripts can be written in several popular programming languages, such as C#, Java and Python.

Jenkins is another open source tool: an automation server that lets developers and QA staff build and test code automatically, for example whenever new code is committed. It's suited to fast-paced environments because it automates tasks related to building and testing software.

Postman is a platform for building and testing application programming interfaces (APIs) and can run collections of API tests automatically. It's available for Mac, Windows and Linux and can import API definitions in formats such as Swagger and RAML.

QA uses by industry

The following are examples of how QA is used in various industries:

- Manufacturing. Manufacturing formalized the QA discipline. Manufacturers must ensure that final products have no defects and meet the defined specifications and requirements.

- Food production. Food producers use X-ray systems, among other techniques, to detect physical contaminants during production so that contaminated products can be removed before they leave the factory.

- Pharmaceutical. Healthcare and pharmaceutical companies use different QA approaches during each drug development stage. QA processes include reviewing documents, approving equipment calibration, examining training and manufacturing records, and investigating market returns.

QA vs. testing

Multiple aspects of QA are different from testing. They include the following:

- Purpose. QA focuses on process improvement and on procedures that ensure a product meets quality requirements, while testing focuses on the mechanics of examining a product to find defects. QA is broader than testing; it encompasses testing as well as other processes, such as iteratively improving products. Testing, by contrast, is specific, revolving around the tactical process of validating a product's fundamental functions and identifying issues.

- Length of time required. Testing examines one version of a product to find immediate defects: it's a short-term process with a short-term focus. QA codifies procedures and processes that help teams prevent issues over the long term.

- Teams involved. Testing is typically assigned to specific employees or smaller teams of designated product testers. QA involves a larger product development team, including project managers and others who can provide unique perspectives.

- Deliverables. Testing documentation is useful in the short term because it reports and logs the results of examining one product version at a time. QA deliverables are documented procedures that all employees can use indefinitely to ensure the quality of current and future products.

Pros and cons of QA

The quality of products and services is a critical competitive differentiator. QA helps ensure that organizations create and ship products that are free of defects and meet customers' needs and expectations. High-quality products result in good customer experiences, which lead to customer loyalty, repeat purchases, upselling and advocacy.

QA also cuts the costs of remediating and replacing defective products and mollifying dissatisfied customers. Defective products mean higher customer support costs for receiving, handling and troubleshooting problems. They also result in additional service and engineering costs to address the defect, test any required fixes and ship replacement products to customers.

QA requires an investment in people and processes. QA team members must define a process workflow and oversee its implementation. This can be a time-consuming process that affects product delivery dates. With few exceptions, though, this cost is less a disadvantage than a requirement: a necessary step to ship a quality product. Without QA, more serious issues arise, such as product bugs and customer dissatisfaction.

History of ISO and QA

Although simple QA concepts can be traced back to the Middle Ages, these practices became important in the U.S. during World War II. High volumes of munitions had to be inspected before they could be sent to the battlefield.

After World War II ended, the ISO was founded in 1947 with headquarters in Geneva, and it published its first standard in 1951, on the standard reference temperature for industrial length measurements. The ISO gradually grew and expanded the scope of its standards. The ISO 9000 family of standards was first published in 1987; each standard in the family addressed a different scenario.

Computer hardware was inspected throughout the mid-20th century, but SQA standards began when the groundwork for Waterfall was laid in the 1970s. Although not called by that name, the approach of separating software development into distinct phases was established and followed for many years.

The Agile Manifesto, published in 2001, formalized Agile as a different approach to more efficient software development and delivery. Agile in turn helped pave the way for the DevOps practices that emerged in the late 2000s.

The future of QA

AI, machine learning, and low- and no-code tools have gained traction recently and are playing roles in QA and quality improvement. Whether in SQA or manufacturing processes, AI and machine learning are used to inspect products and machinery during performance monitoring or predictive maintenance. Algorithms are used to analyze real-time data and make predictions. This is helping developers and industrial workers identify quality issues before they arise.

Because testing is integral to QA, low- and no-code platforms are being increasingly used to automate and streamline what were once tedious testing processes. When the right tools are in place to simplify and automate as many QA processes as possible, employees with programming expertise can spend more time on meaningful tasks.

New technologies aren't typically easy to integrate into existing processes.