enterprise storage

What is enterprise storage?

Enterprise storage is a centralized repository for business information that provides common data management, protection and sharing functions through connections to computer systems.

Because enterprises handle heavy workloads of business-critical information, enterprise storage systems should scale to hundreds of terabytes or even petabytes without relying on excessive cabling or the creation of separate subsystems. Other important aspects of an enterprise storage system are broad connectivity and support for multiple platforms.

Approaches to enterprise storage

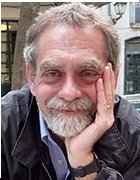

A storage area network (SAN) is a high-performance network or subnetwork that is dedicated to storage and independent of an organization's common user network. It interconnects pools of disk or solid-state storage and shares them with multiple servers, so each server can access data as if it were directly attached.

The three principal components that enable a SAN's interconnectedness are cabling, host bus adapters (HBAs), and Fibre Channel (FC) or Ethernet switches attached to servers and storage. Admins manage all the storage in a SAN centrally, which brings benefits such as high availability, disaster recovery, data sharing, efficient and reliable backup and restoration, and remote support. Because the SAN provides multiple paths to all data, the failure of a single server or path need not cut off access to critical information.
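The failover that multiple paths make possible can be sketched in a few lines. This is a hypothetical, simplified model: each path is just a named route with a health flag, and the `Path` and `select_path` names are illustrative. In real deployments, host multipathing software handles this, not application code.

```python
from dataclasses import dataclass


@dataclass
class Path:
    """A hypothetical route from a server to a SAN volume (HBA -> switch -> array port)."""
    name: str
    healthy: bool = True


def select_path(paths: list[Path]) -> Path:
    """Return the first healthy path, simulating simple active/passive failover."""
    for path in paths:
        if path.healthy:
            return path
    raise RuntimeError("no healthy path to the volume")


paths = [Path("hba0-switchA-port1"), Path("hba1-switchB-port2")]
print(select_path(paths).name)  # hba0-switchA-port1

# Simulate a failure on the first path; I/O fails over to the second one.
paths[0].healthy = False
print(select_path(paths).name)  # hba1-switchB-port2
```

Access is lost only when every path is down. That is why SAN designs typically use redundant HBAs and separate switch fabrics.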

Network-attached storage (NAS) enables multiple client devices and users to access data from a central pool of disk storage. A NAS device appears on the local area network as a node with its own Internet Protocol (IP) address, and users reach its shared storage over an Ethernet connection. NAS is characterized by ease of access, low cost and high capacity.

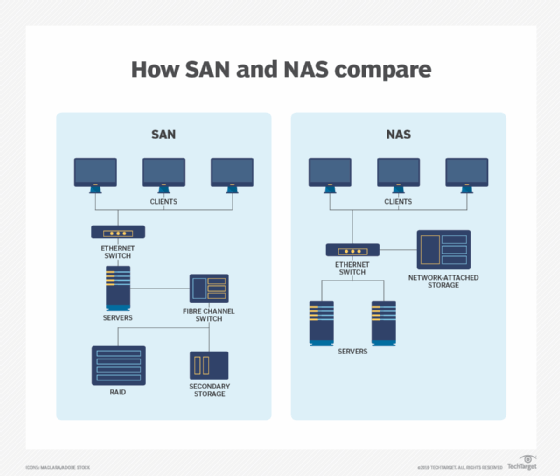

Direct-attached storage (DAS) consists of hard disk drives (HDDs) or solid-state drives (SSDs) connected directly to a single computer or server, either internally or externally, so that other computers or servers cannot access them. Unlike NAS and SAN, DAS is not networked through Ethernet or FC switches.

Because DAS isn't accessed over a network, it can offer better performance for its attached server. However, this also means DAS capacity can't be pooled and shared among servers. Unlike with NAS or SAN, storage capacity is limited by the number of expansion slots in the server and the size of the DAS enclosure. DAS also lacks more advanced storage management features that SAN and NAS devices offer, such as snapshots and remote replication.
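A quick calculation shows how drive bays and drive size cap DAS capacity. The server layout below (8 internal bays plus a 12-bay external enclosure, 4 TB drives) is a hypothetical example. The figure is raw capacity, before any RAID or formatting overhead.

```python
def das_raw_capacity_tb(internal_bays: int, enclosure_bays: int, drive_size_tb: float) -> float:
    """Raw DAS capacity is bounded by the total number of bays times the drive size."""
    return (internal_bays + enclosure_bays) * drive_size_tb


# Hypothetical server: 8 internal bays plus a 12-bay external enclosure, 4 TB drives.
print(das_raw_capacity_tb(8, 12, 4.0))  # 80.0
```

Once every bay is full, the only ways to grow are larger drives or another enclosure. Unlike with SAN or NAS, capacity can't be borrowed from another server's pool.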

Major trends

Newer approaches and technologies in enterprise storage that have gained ground over the last several years include cloud storage, hyperconverged storage and flash technologies such as Non-Volatile Memory Express (NVMe).

Storage for containers and enterprise storage based on composable and disaggregated infrastructure are also trends.

In addition, artificial intelligence (AI) and machine learning for predictive analytics, as well as storage-class memory (SCM), should continue to have a major impact on enterprise storage.

Cloud storage

Enterprise cloud storage consists of storage capacity purchased from a cloud service provider. Enterprises often turn to cloud storage to reduce or eliminate excessive on-premises storage costs, reduce the complexity of managing storage and upgrade data center infrastructure.

The three major public cloud storage providers are Amazon Web Services (AWS), Google Cloud and Microsoft Azure. Others include Alibaba Cloud, IBM, Oracle and Rackspace, as well as a host of regional providers.

Systems integrators and managed service providers also offer cloud services, and many traditional software companies now offer cloud storage services for their applications and services. Lastly, cloud gateway vendors such as Panzura have made enterprise cloud storage part of their hybrid architectures, as have storage management vendors that integrate management as a service into their products.

Hyperconverged and converged infrastructure

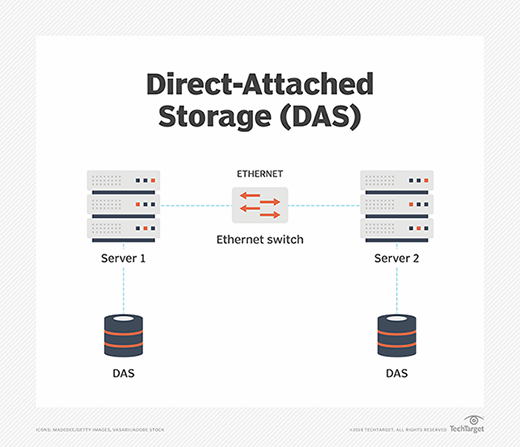

Hyperconverged storage integrates storage, compute and networking in a single unit. It adds a virtualization layer to the mix with storage managed through the hypervisor. That makes hyper-convergence a type of software-defined storage that enables all the storage in each node to be pooled across a cluster to give administrators more control of storage provisioning in a virtual server environment.

This pooling gives hyperconverged storage a high degree of horizontal scalability: to add storage and compute resources, users simply plug a new node into the cluster.
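The scale-out model can be sketched as a cluster whose pooled resources are the sum of its nodes. This is a hypothetical illustration: the `Node` and `Cluster` classes and the node sizes are assumptions, not any HCI product's API.

```python
from dataclasses import dataclass, field


@dataclass
class Node:
    """A hypothetical HCI node contributing CPU cores and raw storage to the cluster."""
    cores: int
    storage_tb: float


@dataclass
class Cluster:
    nodes: list[Node] = field(default_factory=list)

    def add_node(self, node: Node) -> None:
        # Scaling out: plugging in a node adds its compute and storage to the shared pool.
        self.nodes.append(node)

    @property
    def pooled_cores(self) -> int:
        return sum(n.cores for n in self.nodes)

    @property
    def pooled_storage_tb(self) -> float:
        return sum(n.storage_tb for n in self.nodes)


cluster = Cluster([Node(cores=32, storage_tb=20.0), Node(cores=32, storage_tb=20.0)])
cluster.add_node(Node(cores=32, storage_tb=20.0))
print(cluster.pooled_cores, cluster.pooled_storage_tb)  # 96 60.0
```

Compute and storage grow together here, one node at a time. Real HCI software also reserves capacity for data replicas, so usable capacity is lower than this raw sum.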

A hyperconverged infrastructure (HCI) differs from a converged infrastructure (CI), which bundles the traditional SAN components -- storage, compute and networking -- into a preconfigured SKU that is sized and tuned before the sale. HCI moves those capabilities into a single box that can be clustered with other similar nodes.

CI is a staple of enterprise storage, while HCI began mainly as an efficient way to run heavily virtualized loads, such as virtual desktop infrastructure. HCI has made its way into the enterprise, and now, HCI clusters typically run multiple workloads.

NVMe, storage-class memory and AI

The storage industry developed the NVMe host controller interface and storage protocol to speed up data transfer between servers and SSDs over the Peripheral Component Interconnect Express (PCIe) bus. NVMe greatly reduces latency, so communication between compute and fast SSD storage is no longer a bottleneck, which significantly increases IOPS. The related NVMe over Fabrics (NVMe-oF) specification extends the benefits of NVMe over network fabrics such as FC, Ethernet and InfiniBand.
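Lower latency translates into higher IOPS through Little's law: with a fixed number of outstanding I/Os, throughput is inversely proportional to per-I/O latency (IOPS ≈ outstanding I/Os ÷ average latency). The queue depth and latencies below are illustrative assumptions, not measured NVMe or SAS/SATA figures.

```python
def iops_from_littles_law(outstanding_ios: int, latency_us: float) -> float:
    """Little's law: throughput = concurrency / latency (latency given in microseconds)."""
    return outstanding_ios * 1_000_000 / latency_us


# Illustrative numbers only: same queue depth, two different per-I/O latencies.
slower = iops_from_littles_law(outstanding_ios=32, latency_us=500)  # 64000.0
faster = iops_from_littles_law(outstanding_ios=32, latency_us=100)  # 320000.0
print(slower, faster)
```

Cutting latency fivefold at the same concurrency yields five times the IOPS. NVMe's support for many deep command queues also lets hosts keep more I/Os outstanding, which raises the numerator as well.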

Widespread adoption of NVMe is a step toward another industry transition: SCM. SCM includes new types of non-volatile memory that combine the persistence of traditional storage with performance closer to that of memory. It narrows the performance gap between dynamic random access memory (DRAM) and flash storage while remaining non-volatile.

Although slower than DRAM, SCM is more scalable than standard memory and can deliver roughly 10 times the IOPS of NAND flash drives. Furthermore, unlike flash drives, SCM can be addressed at the byte level rather than the block level, which could eliminate I/O overhead for even better performance.
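To make the block-versus-byte difference concrete, the sketch below counts how much data must be written to persist a tiny update. It assumes a 4 KiB block size and a 64-byte CPU cache line. The cache line is the practical unit for byte-addressable memory. Both are typical values, not properties of any specific SCM or flash product, and the update is assumed to start on a boundary.

```python
BLOCK_SIZE = 4096     # bytes per block on a block device (assumed, common value)
CACHE_LINE_SIZE = 64  # bytes per CPU cache line (typical on x86-64; assumed)


def bytes_written(update_bytes: int, granularity: int) -> int:
    """Smallest amount of data the device must write to persist `update_bytes`,
    assuming the update starts on a `granularity` boundary."""
    units = -(-update_bytes // granularity)  # integer ceiling division
    return units * granularity


update = 8  # e.g., changing one 64-bit counter
block_io = bytes_written(update, BLOCK_SIZE)     # 4096
scm_io = bytes_written(update, CACHE_LINE_SIZE)  # 64
print(block_io // scm_io)                        # 64
```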

AI and machine learning have permeated many different aspects of IT management, including storage. Machine learning algorithms are starting to be used for predictive storage analytics and storage management. For example, they enable storage management processes to learn and alter settings and operations to optimize workloads, broker storage infrastructure, manage large-scale data sets, and root out the causes of problems and abnormalities and fix them.
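Predictive storage analytics often starts with capacity forecasting. The sketch below uses a plain least-squares linear trend, which is a deliberately simple stand-in for the machine learning models that commercial tools use. The usage samples and 100 TB pool size are hypothetical.

```python
from typing import Optional


def fit_line(xs: list[float], ys: list[float]) -> tuple[float, float]:
    """Ordinary least-squares fit of y = slope * x + intercept."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var = sum((x - mean_x) ** 2 for x in xs)
    slope = cov / var
    return slope, mean_y - slope * mean_x


def days_until_full(days: list[float], used_tb: list[float], capacity_tb: float) -> Optional[float]:
    """Extrapolate the trend to the day usage reaches capacity; None if usage isn't growing."""
    slope, intercept = fit_line(days, used_tb)
    if slope <= 0:
        return None
    return (capacity_tb - intercept) / slope - days[-1]


# Hypothetical daily usage samples for a 100 TB pool.
days = [0, 1, 2, 3, 4]
used = [50.0, 51.0, 52.0, 53.0, 54.0]
print(days_until_full(days, used, capacity_tb=100.0))  # 46.0
```

A management tool could use such a forecast to raise an alert or trigger provisioning well before the pool fills.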

Disaggregated and composable storage

Some observers view composable and disaggregated infrastructures as the next stages in the evolution of HCI. They retain the benefits of hyper-convergence -- such as physically combining computing resources into an easily scalable framework of nodes -- while making it easier to add storage and compute hardware independently.

Disaggregation separates these components into discrete pools of CPU, cache, fabric, memory and storage resources that can be served on demand to specific applications. It separates the resources at the hardware level and reassembles them at the software level using APIs.

Composable infrastructure creates, or composes, consumable sets of hardware resources and combines them into a virtualized whole that is operated through software.
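The compose-and-release cycle can be modeled as drawing resources from independent pools. This is a hypothetical sketch: `ResourcePools`, `compose` and `release` are illustrative names, not a real composable-infrastructure API, and the pool sizes are made up.

```python
from dataclasses import dataclass


@dataclass
class ResourcePools:
    """Hypothetical disaggregated pools, tracked as free units of each resource type."""
    cpu_cores: int
    memory_gb: int
    storage_tb: int

    def compose(self, cpu_cores: int, memory_gb: int, storage_tb: int) -> dict:
        """Carve a logical server out of the pools, or fail without allocating anything."""
        if cpu_cores > self.cpu_cores or memory_gb > self.memory_gb or storage_tb > self.storage_tb:
            raise ValueError("insufficient free resources")
        self.cpu_cores -= cpu_cores
        self.memory_gb -= memory_gb
        self.storage_tb -= storage_tb
        return {"cpu_cores": cpu_cores, "memory_gb": memory_gb, "storage_tb": storage_tb}

    def release(self, system: dict) -> None:
        """Return a composed system's resources to the pools."""
        self.cpu_cores += system["cpu_cores"]
        self.memory_gb += system["memory_gb"]
        self.storage_tb += system["storage_tb"]


pools = ResourcePools(cpu_cores=256, memory_gb=2048, storage_tb=500)
db_server = pools.compose(cpu_cores=32, memory_gb=512, storage_tb=100)
print(pools)  # ResourcePools(cpu_cores=224, memory_gb=1536, storage_tb=400)
pools.release(db_server)
```

Because each pool is tracked separately, storage can be expanded without buying more CPU. That independence is the main draw of the disaggregated model.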

Container storage

Unlike standard monolithic applications, containerized applications can consist of hundreds or thousands of related containers, each hosting an isolated, independently scalable module of the overall application. Containers were originally designed to let users easily develop and deploy stateless microservice layers of an application as a type of agile middleware that required no persistent data storage.

One reason containerized application design has grown in popularity with enterprises over the last several years is the design's support for Agile application development and deployment. Containers can quickly scale up as needed in a production environment and then go away when no longer needed. It is the efficiency, scalability, agility, cloud-friendliness and lower costs of this approach that have enterprises looking to container architectures for purposes beyond microservices.

As a result, container platforms such as Docker and Kubernetes started to add some level of persistent data storage support for containers. In addition, startups such as Portworx have addressed this issue, often more thoroughly, by enabling container storage volumes to move with their containers.
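In Kubernetes, persistent storage is typically requested through a PersistentVolumeClaim, which a pod references so its data outlives any single container. The manifest below is a minimal sketch. The claim name and 10Gi size are hypothetical, and the manifest is built as a Python dict and serialized to JSON, which `kubectl apply -f` accepts as readily as YAML.

```python
import json

# A minimal Kubernetes PersistentVolumeClaim, expressed as a dict.
pvc = {
    "apiVersion": "v1",
    "kind": "PersistentVolumeClaim",
    "metadata": {"name": "orders-db-data"},  # hypothetical claim name
    "spec": {
        # ReadWriteOnce: the volume can be mounted read-write by a single node.
        "accessModes": ["ReadWriteOnce"],
        "resources": {"requests": {"storage": "10Gi"}},
        # storageClassName omitted: the cluster's default StorageClass, if one is configured, is used.
    },
}

print(json.dumps(pvc, indent=2))
```

A pod then mounts the volume by referencing the claim name under `spec.volumes[].persistentVolumeClaim.claimName`, so a replacement container can pick up the same data.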

Container data, like all enterprise data, needs protection. Because containers are ephemeral by nature, standard backup applications can't back up their data directly; they require an underlying orchestration platform at the storage or host layer. Vendors such as Portworx by Pure Storage and Kasten by Veeam have stepped in with this orchestration layer, enabling enterprises to back up container data.