5 NVMe-oF implementation questions answered

Want to know more about implementing NVMe over fabrics, where it's useful and what to consider? Get answers to these and other questions surrounding this technology.

NVMe over Fabrics is making its way into the enterprise, enabling organizations to extend NVMe's high-performance, low-latency advantages across their infrastructure.

An NVMe-oF implementation takes the commands and responses that an NVMe host normally exchanges with a local drive through queues in shared memory and maps them onto messages that travel across a network fabric. This extends the distances over which NVMe devices can be accessed and enables scaling to multiple devices.
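
In NVMe-oF, each command and response travels across the fabric inside a message the specification calls a capsule. The toy Python sketch below illustrates only that encapsulation idea. The 64-byte command and 16-byte response sizes come from the NVMe base specification; the 5-byte header, the `KIND_*` values and the function names are hypothetical and do not match the wire format of any real transport binding.

```python
import struct

# Sizes from the NVMe base specification.
SQE_SIZE = 64  # submission queue entry: one NVMe command
CQE_SIZE = 16  # completion queue entry: one NVMe response

# Hypothetical toy header (1-byte kind, 4-byte little-endian total length).
# This is NOT the wire format of the RDMA, FC or TCP transport bindings.
TOY_HEADER = struct.Struct("<BI")
KIND_COMMAND = 1
KIND_RESPONSE = 2


def wrap_command(sqe: bytes, data: bytes = b"") -> bytes:
    """Place a 64-byte command, plus optional in-capsule data, in a toy capsule."""
    if len(sqe) != SQE_SIZE:
        raise ValueError(f"command must be {SQE_SIZE} bytes, got {len(sqe)}")
    body = sqe + data
    return TOY_HEADER.pack(KIND_COMMAND, TOY_HEADER.size + len(body)) + body


def wrap_response(cqe: bytes) -> bytes:
    """Place a 16-byte completion entry in a toy response capsule."""
    if len(cqe) != CQE_SIZE:
        raise ValueError(f"response must be {CQE_SIZE} bytes, got {len(cqe)}")
    return TOY_HEADER.pack(KIND_RESPONSE, TOY_HEADER.size + CQE_SIZE) + cqe


def unwrap(capsule: bytes) -> tuple[int, bytes]:
    """Return (kind, body) after checking the declared length."""
    kind, length = TOY_HEADER.unpack_from(capsule)
    if length != len(capsule):
        raise ValueError(f"declared length {length} != actual {len(capsule)}")
    return kind, capsule[TOY_HEADER.size:]


if __name__ == "__main__":
    # Byte 0 of an NVMe command is the opcode; 0x02 is Read in the NVM command set.
    read_cmd = bytes([0x02]) + bytes(SQE_SIZE - 1)
    capsule = wrap_command(read_cmd)
    kind, body = unwrap(capsule)
    print(kind, len(capsule), body == read_cmd)
```

The point is that the command itself is carried unchanged; only the envelope around it depends on the fabric.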

For most organizations, the first step into NVMe technology is an NVMe all-flash array (AFA) connected to hosts over a traditional storage network. G2M Research predicted that more than 60% of AFAs will use NVMe storage media by 2022. From there, a move to NVMe-oF may follow, depending on performance needs. G2M also estimated that more than 30% of AFAs will use NVMe-oF to connect to hosts or expansion shelves within the next three years.

NVMe-oF isn't without its complications and challenges, however. You must gather a lot of information and answer a number of questions before making the move. What follows is a look at five important NVMe-oF implementation questions to answer before deploying this new and critical technology.

What are the NVMe-oF implementation options?

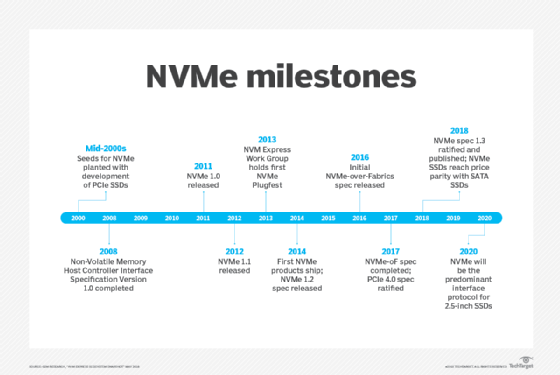

When the initial NVMe-oF spec came out in 2016, it supported Fibre Channel (FC) and remote direct memory access (RDMA) fabrics, with InfiniBand, RDMA over Converged Ethernet (RoCE) and Internet Wide Area RDMA Protocol (iWARP) included under the RDMA umbrella. The NVMe-oF 1.1 spec added TCP as a fabric option, incorporating the NVMe/TCP transport binding ratified in November 2018.

With FC and RDMA, data can move from a host to an array and back without the extra copies through intermediate memory buffers that a standard TCP/IP stack performs. This reduces CPU load and data transfer latency, speeding up storage performance. However, FC and RDMA are more complex than TCP and can require special equipment and configuration when implementing NVMe-oF, such as FC host bus adapters and switches, RDMA-capable network adapters, or the lossless Ethernet settings that RoCE deployments commonly use.

Enterprises can run the NVMe/TCP transport binding over standard Ethernet networks, as well as across the internet, which makes it simpler to implement. It doesn't require special equipment or network reconfiguration, eliminating many of the complications involved in running NVMe over FC or RDMA.
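
Because NVMe/TCP runs over ordinary IP networking, attaching a Linux host to a target can come down to a single `nvme connect` command from the nvme-cli tool. This minimal Python sketch only assembles that command line; it assumes nvme-cli is installed and the `nvme-tcp` kernel module is available, and the target address and NQN are hypothetical placeholders.

```python
import shlex

# 4420 is the port commonly used for NVMe-oF I/O connections.
DEFAULT_PORT = 4420


def nvme_tcp_connect_args(traddr: str, subsys_nqn: str, port: int = DEFAULT_PORT) -> list[str]:
    """Build the argument list for an nvme-cli 'connect' over NVMe/TCP."""
    if not 0 < port < 65536:
        raise ValueError(f"invalid TCP port: {port}")
    return [
        "nvme", "connect",
        "--transport=tcp",       # plain TCP: no RDMA adapter or FC HBA needed
        f"--traddr={traddr}",    # target's IP address
        f"--trsvcid={port}",     # target's TCP port
        f"--nqn={subsys_nqn}",   # NVMe Qualified Name of the target subsystem
    ]


if __name__ == "__main__":
    # Hypothetical target address (documentation range) and hypothetical NQN.
    args = nvme_tcp_connect_args("192.0.2.10", "nqn.2014-08.com.example:array1")
    print(shlex.join(args))
    # To actually connect (as root, with the nvme-tcp module loaded):
    # import subprocess; subprocess.run(args, check=True)
```

Keeping the arguments in a list rather than one string avoids shell quoting problems if the command is later passed to `subprocess.run`.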

What factors must you consider when implementing NVMe-oF?

NVMe-oF is designed to use an organization's existing storage fabric, but that assumes the existing storage infrastructure is compatible with NVMe-oF. Some vendors don't strictly adhere to the NVMe-oF standard and, instead, use a proprietary approach that can lead to compatibility issues.

Another consideration for an NVMe-oF implementation is ensuring the storage infrastructure has adequate throughput. If the connection between the host's initiator and the target can't keep up with the physical NVMe storage devices, system latency can increase. It's also important to check that storage system software is up to date, because old device drivers and OS kernels can cause issues.
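
Whether the link keeps up is simple arithmetic: compare what the network can carry with what the drives behind it can deliver. The Python sketch below assumes a 90% protocol-efficiency factor and a hypothetical drive read rate of 3 GB/s; substitute measured figures for real planning.

```python
def usable_link_gbytes(link_gbits: float, efficiency: float = 0.9) -> float:
    """Convert nominal link speed (Gbit/s) to usable GB/s.

    `efficiency` is an assumed factor for protocol overhead, not a measured value.
    """
    return link_gbits / 8 * efficiency


def link_is_bottleneck(link_gbits: float, n_drives: int, drive_gbytes: float,
                       efficiency: float = 0.9) -> bool:
    """True if the drives together can deliver more than the link can carry."""
    return n_drives * drive_gbytes > usable_link_gbytes(link_gbits, efficiency)


def drives_to_fill_link(link_gbits: float, drive_gbytes: float,
                        efficiency: float = 0.9) -> float:
    """How many drives (possibly fractional) it takes to saturate the link."""
    return usable_link_gbytes(link_gbits, efficiency) / drive_gbytes


if __name__ == "__main__":
    DRIVE_GBYTES = 3.0  # hypothetical read rate of one NVMe SSD, in GB/s
    for link in (25, 100):
        print(f"{link} GbE: ~{usable_link_gbytes(link):.2f} GB/s usable, "
              f"saturated by ~{drives_to_fill_link(link, DRIVE_GBYTES):.2f} drives")
```

Under those assumptions, a single drive is enough to saturate a 25 GbE link (about 2.8 GB/s usable), and fewer than four drives saturate a 100 GbE link.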

Where does NVMe-oF leave rack-scale PCIe?

Before NVMe-oF appeared, it looked like data centers were headed toward rack-scale, switched PCIe fabrics. DSSD, a company EMC acquired and then dismantled, developed technology that used a PCIe switch to share a block of flash across the hosts in a rack. At the time, DSSD's 100 microsecond (µs) latency compared favorably with the 1 millisecond latency of other all-flash array vendors.

But then NVMe-oF came on the scene, matching DSSD's 100 µs latency while letting customers use their existing standard Ethernet and FC networks, a development that shelved rack-scale PCIe for the time being. There's room for that to change, though, given the Gen-Z Consortium's focus on developing a rack-scale infrastructure that shares GPUs and memory along with storage and I/O cards.

How does NVMe-oF help with scale-out storage?

Scale-out storage architectures add servers or nodes to increase performance and capacity. In the past, scale-out storage tended to be complex to implement. But NVMe-oF is changing that by sidestepping the limitations and complexities of traditional storage architectures.

NVMe-oF's advantage lies in creating a more direct path between the host and the storage media, so data doesn't have to pass through a centralized controller. This shorter I/O path enables a single host to talk to many drives and vice versa, which reduces storage system latency and increases scale-out capability.
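
A toy throughput model makes the controller-bottleneck argument concrete. It is a simplification, not a description of any product: it ignores latency, switch capacity and data services, and the drive, controller and host-link figures are hypothetical.

```python
def centralized_gbytes(n_drives: int, drive_gbytes: float, controller_gbytes: float) -> float:
    """Every I/O crosses one controller, so the controller caps aggregate throughput."""
    return min(n_drives * drive_gbytes, controller_gbytes)


def direct_gbytes(n_drives: int, drive_gbytes: float,
                  n_hosts: int, host_link_gbytes: float) -> float:
    """Hosts reach drives over direct fabric paths; the cap is the hosts' combined links."""
    return min(n_drives * drive_gbytes, n_hosts * host_link_gbytes)


if __name__ == "__main__":
    # Hypothetical figures in GB/s, chosen only to illustrate the trend.
    DRIVE, CONTROLLER, HOSTS, HOST_LINK = 3.0, 20.0, 8, 11.0
    for drives in (4, 8, 16, 24):
        print(drives,
              centralized_gbytes(drives, DRIVE, CONTROLLER),
              direct_gbytes(drives, DRIVE, HOSTS, HOST_LINK))
```

Beyond about seven drives, the centralized design stops gaining throughput, while the direct-path design keeps scaling until the hosts' combined links become the limit.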

Do NVMe and NVMe-oF solve all storage performance issues?

Compared with SAS and SATA SSDs, NVMe technology greatly improved performance between the server or storage controller CPU and attached flash SSDs. And NVMe-oF has reduced latency and improved the performance of shared storage. However, NVMe and NVMe-oF have also exposed another performance challenge: the CPU chokepoint in the server or storage controller.

The latest processors provide more PCIe lanes and can therefore support more NVMe SSDs. But as more drives are used, the supporting hardware gets increasingly complicated, with more CPUs, drives, drive drawers, switches, adapters, transceivers and cables for IT to manage. Adding hardware also yields diminishing marginal returns on overall performance.

The cause of this performance challenge is storage software that wasn't designed for CPU efficiency. One way to address the problem is to rewrite storage software to make it more efficient; some vendors, such as StorOne, take that approach. Others are pursuing alternatives, such as adding more CPUs, placing DRAM caches in front of NVMe SSDs and using computational storage to put more processors and RAM on NVMe drives.
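
The CPU chokepoint can be sketched as a simple ceiling: aggregate IOPS is capped by whichever is lower, the drives or the rate at which the cores can run the storage software. The per-I/O CPU cost, drive IOPS and core count below are hypothetical, and the model assumes perfect scaling across cores.

```python
def achievable_iops(n_drives: int, drive_iops: int, cores: int, cpu_us_per_io: float) -> float:
    """Aggregate IOPS limited by the drives or by CPU time spent in storage software.

    cpu_us_per_io: CPU microseconds the storage stack spends per I/O (an assumed value).
    Assumes work spreads perfectly across cores, which real systems rarely achieve.
    """
    drive_ceiling = n_drives * drive_iops
    cpu_ceiling = cores * 1_000_000 / cpu_us_per_io  # I/Os per second all cores can process
    return min(drive_ceiling, cpu_ceiling)


if __name__ == "__main__":
    DRIVE_IOPS, CORES = 500_000, 32  # hypothetical figures
    for us_per_io in (10.0, 5.0):
        row = [achievable_iops(n, DRIVE_IOPS, CORES, us_per_io) for n in (4, 8, 16)]
        print(f"{us_per_io} us/IO:", [f"{x / 1e6:.1f}M" for x in row])
```

With these assumed numbers, growing from 8 to 16 drives adds nothing at 10 µs of CPU time per I/O, while halving that cost to 5 µs lets 16 drives reach 6.4 million IOPS. That is the case for making storage software more CPU-efficient rather than only adding hardware.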