Public cloud storage use still in early days for enterprises

IT organizations are still in the early stages of their cloud storage journeys as they figure out the best use cases beyond backup, archive and disaster recovery.

Enterprise use of public cloud storage has been growing at a steady pace for years, yet plenty of IT shops remain in the early stages of the journey.

IT managers are well aware of the potential benefits -- including total cost of ownership, agility and unlimited capacity on demand -- and many face cloud directives. But companies with significant investments in on-premises infrastructure are still exploring the applications where public cloud storage makes the most sense beyond backup, archive and disaster recovery (DR).

Ken Lamb, who oversees resiliency for cloud at JP Morgan, sees the cloud as a good fit, especially when the financial services company needs to get an application to market quickly. Lamb said JP Morgan uses public cloud storage from multiple providers for development and testing, production applications and DR, and runs the workloads internally in "production parallel mode."

JP Morgan's cloud data footprint is small compared to its overall storage capacity, but Lamb said the company has a large migration plan for Amazon Web Services (AWS).

"The biggest problem is the way applications interact," Lamb said. "When you put something in the cloud, you have to think: Is it going to reach back to anything that you have internally? Does it have high communication with other applications? Is it tightly coupled? Is it latency sensitive? Do you have compliance requirements? Those kind of things are key decision areas to say this makes sense or it doesn't."

Public cloud storage trends

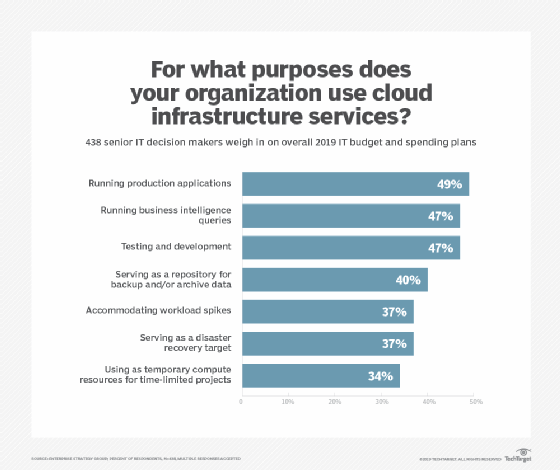

Enterprise Strategy Group research shows an increase in the number of organizations running production applications in the public cloud, whereas most used it only for backups or archives a few years ago, according to ESG senior analyst Scott Sinclair. Sinclair said he's also seeing more companies identify themselves as "cloud-first" in terms of their overall IT strategy, although many are "still beginning their journeys."

"When you're an established company that's been around for decades, you have a data center. You've probably got a multi-petabyte environment. Even if you didn't have to worry about the pain of moving data, you probably wouldn't ship petabytes to the cloud overnight," Sinclair said. "They're reticent unless there is some compelling need. Analytics would be one."

The Hartford has a small percentage of its data in the public cloud. But the Connecticut-based insurance and financial services company plans to use Amazon's Simple Storage Service (S3) for hundreds of terabytes, if not petabytes, of data from its Hadoop analytics environment, said Stephen Whitlock, who works in cloud operations for compute and storage at The Hartford.

One challenge The Hartford faces in shifting from on-premises Hortonworks Hadoop to Amazon Elastic MapReduce (EMR) is mapping permissions to its large data set, Whitlock said. The company migrated compute instances to the cloud, but the Hadoop Distributed File System (HDFS)-based data remains on premises while the team sorts out the migration to the EMR File System (EMRFS), Amazon's implementation of HDFS that reads and writes directly to S3.

Finishing the Hadoop project is the first priority before The Hartford looks to public cloud storage for other use cases, including "spiky" and "edge" workloads, Whitlock said. He knows costs for network connectivity, bandwidth and data transfers can add up, so the team plans to focus on applications where the cloud can provide the greatest advantage. The Hartford's on-premises private cloud generally works well for small applications, and the public cloud makes sense for data-driven workloads, such as the analytics engines that "we can't keep up with," Whitlock said.

"It was never a use case to say we're going to take everything and dump it into the cloud," Whitlock said. "We did the metrics. It just was not cheaper. It's like a convenience store. You go there when you're out of something and you don't want to drive 10 miles to the Costco."

Moving cloud data back

Capital District Physicians' Health Plan (CDPHP), a not-for-profit organization based in Albany, NY, learned from experience that the cloud may not be the optimal place for every application. CDPHP launched its cloud initiative in 2014, using AWS for disaster recovery, and soon adopted a cloud-first strategy. However, Howard Fingeroth, director of infrastructure architecture and data engineering at CDPHP, said the organization plans to bring two or three administration and financial applications back to its on-premises data center for cost reasons.

"We did a lot of lift and shift initially, and that didn't prove to be a real wise choice in some cases," Fingeroth said. "We've now modified our cloud strategy to be what we're calling 'smart cloud,' which is really doing heavy-duty analysis around when it makes sense to move things to the cloud."

Fingeroth said the cloud helps with what he calls the "ilities": agility, affordability, flexibility and recoverability. CDPHP primarily uses Amazon Elastic Block Store (EBS) for production applications that run in the cloud and also has less expensive S3 object storage for backup and DR in conjunction with commercial backup products, he said.

"As time goes on, people get more sophisticated about the use of the cloud," said John Webster, a senior partner and analyst at Evaluator Group. "They start with disaster recovery or some easy use case, and once they understand how it works, they start progressing forward."

Evaluator Group's most recent hybrid cloud storage survey, conducted in 2018, showed that disaster recovery was the primary use case, followed by data sharing/content repository, test and development, archival storage and data protection, according to Webster. He said about a quarter of respondents used the public cloud for analytics and tier 1 applications.

Public cloud expansion

The vice president of strategic technology for a New York-based content creation company said he is considering expanding his use of the public cloud as an alternative to storing data in SAN or NAS systems in photo studios in the U.S. and Canada. The VP, who asked that neither he nor his company be named, said his company generates up to a terabyte of data a day. It uses storage from Winchester Systems for primary data and has about 30 TB of "final files" on AWS. He said he is looking into storage gateway options from vendors such as Nasuni and Morro Data to move data more efficiently into a public cloud.

"It's just a constant headache from an IT perspective," he said of on-premises storage. "There's replication. There's redundancy. There is a lot of cost involved. You need IT people in each location. There is no centralized control over that data. Considering all the labor, ongoing support contracts and the ability to scale without doing capex [with on-premises storage], it's more cost effective and efficient to be in the cloud."