edge device

What is an edge device?

An edge device is any piece of hardware that controls data flow at the boundary between two networks. Edge devices fulfill a variety of roles, depending on what type of device they are, but they essentially serve as network endpoints -- entry or exit points. Some common functions of edge devices include the transmission, routing, processing, monitoring, filtering, translation and storage of data passing between networks. Enterprises, service providers, and government and military organizations use edge devices.

Cloud computing and the internet of things (IoT) have elevated the role of edge devices, ushering in the need for more intelligence, computing power and advanced services at the network edge. This concept, in which processing is decentralized and performed closer to where data is generated, is referred to as edge computing. Part of the point of edge devices is to put more processing power out into the network itself so that a distant processing center -- either on premises or in the cloud -- is relieved of the burden of handling every device's workload.

Types of edge devices

One of the most common types of edge devices is an edge router. Usually deployed to connect a campus network to the internet or a wide area network (WAN), edge routers chiefly function as gateways between networks. A similar type of edge device, known as a routing switch, can also be used for this purpose, although routing switches typically offer less-comprehensive features than full-fledged routers.

WAN devices are designed to extend networks across large areas and can even be global in scope. They enable such widely distributed networks to function seamlessly despite great physical distances.

Firewalls can also be classified as edge devices, as they sit on the periphery of one network and filter data moving between internal and external networks.
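The filtering role described above can be sketched as a first-match rule table. This is a minimal illustration, not how any particular firewall product works; the `Rule` class, the `filter_packet` function and the sample rules are all hypothetical, and the addresses come from ranges reserved for documentation.

```python
import ipaddress
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class Rule:
    action: str               # "allow" or "deny"
    source: str               # CIDR block the rule applies to
    dest_port: Optional[int]  # None matches any destination port


# Hypothetical rule set: block one external range, allow HTTPS from anywhere.
RULES = [
    Rule("deny", "203.0.113.0/24", None),
    Rule("allow", "0.0.0.0/0", 443),
]


def filter_packet(src_ip: str, dest_port: int, rules=RULES, default: str = "deny") -> str:
    """Return the action of the first matching rule, or the default action."""
    addr = ipaddress.ip_address(src_ip)
    for rule in rules:
        in_range = addr in ipaddress.ip_network(rule.source)
        port_ok = rule.dest_port in (None, dest_port)
        if in_range and port_ok:
            return rule.action
    return default
```

Rule order matters in this sketch: the deny rule is listed first so that it takes precedence over the broader allow rule, and anything that matches no rule falls through to the default of "deny".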

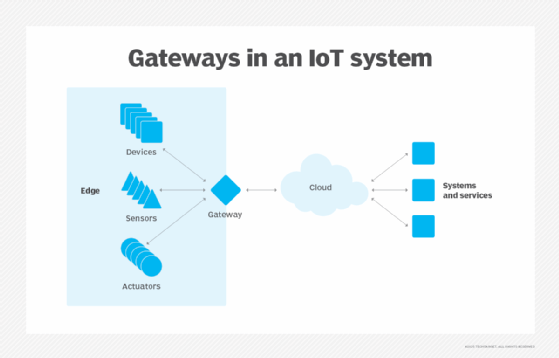

In the context of IoT, edge devices encompass a much broader range of device types and functions. These might include sensors, actuators and other endpoints, as well as IoT gateways. Medical devices, scientific instruments, automated vehicles, machine-to-machine devices and many other device types also qualify as edge devices.

Within a local area network, switches in the access layer -- that is, those connecting end-user devices to the aggregation layer -- are sometimes called edge switches.

Multiplexers -- also known as integrated access devices -- merge data collected from many sources into a single signal. This bolsters the efficiency of automated systems and advanced IoT networks.
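As a rough illustration of merging many sources into one stream, the sketch below interleaves readings round-robin and tags each value with its source channel so the receiver can separate them again. The function names and the channel-tagging scheme are assumptions made for this example; real multiplexers typically operate on signals or frames in hardware rather than on Python lists.

```python
from itertools import zip_longest
from typing import Any, Dict, List, Tuple

_MISSING = object()  # sentinel for sources that have run out of data


def multiplex(sources: Dict[str, List[Any]]) -> List[Tuple[str, Any]]:
    """Interleave values from every source into one tagged stream."""
    stream = []
    for frame in zip_longest(*sources.values(), fillvalue=_MISSING):
        for channel, value in zip(sources.keys(), frame):
            if value is not _MISSING:
                stream.append((channel, value))
    return stream


def demultiplex(stream: List[Tuple[str, Any]]) -> Dict[str, List[Any]]:
    """Rebuild the per-source lists from the tagged stream."""
    sources: Dict[str, List[Any]] = {}
    for channel, value in stream:
        sources.setdefault(channel, []).append(value)
    return sources
```

Tagging each value with its channel costs a little extra data per item, but it lets sources of different lengths share the stream without padding.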

Edge device use cases

Although the primary function of edge devices is to provide connectivity between disparate networks, the edge has evolved to increasingly support advanced services. These might include the following:

- Wireless capabilities. Wireless access points (wireless APs) act as edge devices, as they typically provide wireless clients with access to the wired network.

- Security functions. Edge devices, such as wireless APs or virtual private network (VPN) servers, commonly include integrated security capabilities designed to block a malicious user or device connection.

- Dynamic Host Configuration Protocol (DHCP) services. DHCP is a service that's commonly used in conjunction with edge devices, such as wireless APs and VPN servers. When a client connects to such an edge device, it requires an Internet Protocol (IP) address that's local to the network it's accessing. The DHCP server provides the required IP address. In many cases, DHCP services are integrated into edge devices.

- Domain Name System (DNS) services. When an external client accesses a network through an edge device, the client must be able to resolve fully qualified domain names on the network. When the DHCP service leases an IP address to the client, it typically also points the client to a DNS server that provides name resolution services for the network.
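The relationship between the DHCP and DNS items above can be sketched as a lease that carries both an IP address and the address of a DNS server. This is only the bookkeeping side, under simplifying assumptions: it does not implement the actual DHCP message exchange, lease expiry or renewal, and the `LeasePool` class and sample addresses are hypothetical.

```python
import ipaddress


class LeasePool:
    """Hands out addresses from one subnet along with a DNS server address."""

    def __init__(self, cidr: str, dns_server: str):
        # hosts() yields the usable addresses in the subnet, in order.
        self._free = iter(ipaddress.ip_network(cidr).hosts())
        self._leases = {}  # client identifier -> leased IP address
        self.dns_server = dns_server

    def request(self, client_id: str) -> dict:
        """Return the client's lease, allocating a new address if needed."""
        if client_id not in self._leases:
            try:
                self._leases[client_id] = str(next(self._free))
            except StopIteration:
                raise RuntimeError("address pool exhausted") from None
        return {"ip": self._leases[client_id], "dns": self.dns_server}
```

A client that asks again receives the same address, which loosely mirrors how a lease stays bound to a client while it remains valid.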

Cloud computing and IoT have demonstrated the value of pushing intelligence to the periphery of the network. If an enterprise has thousands of IoT devices, it's not efficient for them all to try to communicate with the same resource at once. Edge devices can collect, process and store data in a more distributed fashion and closer to endpoints -- hastening response times, reducing latency and conserving network resources.
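One way an edge device might conserve network resources is to summarize a batch of readings locally and send only the summary upstream. The sketch below assumes numeric readings and a hypothetical `summarize` function; which statistics are worth keeping depends entirely on the application.

```python
from statistics import mean
from typing import Dict, List


def summarize(readings: List[float]) -> Dict[str, float]:
    """Reduce a batch of raw readings to a few summary values."""
    if not readings:
        raise ValueError("no readings to summarize")
    return {
        "count": len(readings),
        "min": min(readings),
        "max": max(readings),
        "mean": mean(readings),
    }
```

In this sketch, a batch of any size collapses to four values, so the upstream link carries a fixed-size message per batch rather than one message per reading.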

How does an edge device work?

An edge device is essentially a bridge between two networks. These can be two on-premises networks, but an edge device can also be used for cloud connectivity. The important thing to keep in mind is that the two networks otherwise aren't connected to one another and might have major architectural differences.

For example, at one time, it was common for organizations to use Systems Network Architecture networks to communicate between remote users and the mainframe. As PCs and other devices became more prevalent, however, edge devices were used to tie Ethernet networks -- or other network types -- to existing networks.

Regardless of the use case, there are two basic things that an edge device must do. First, the edge device must provide physical connectivity to both networks. Second, it must allow traffic to traverse the two networks when necessary. Depending on the nature of the edge device, this might mean simply forwarding an IP packet. In the case of architecturally dissimilar networks, however, the edge device might need to perform protocol translation.
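The choice between simple forwarding and protocol translation can be sketched as follows. The `Message` type, the "legacy" protocol label and the address map are hypothetical stand-ins; real translation between dissimilar architectures involves far more than remapping an address.

```python
from dataclasses import dataclass


@dataclass
class Message:
    protocol: str   # e.g. "ip" or "legacy"
    dest: str       # destination address in that protocol's format
    payload: bytes


# Hypothetical mapping from legacy-network names to IP addresses.
LEGACY_TO_IP = {"LU01": "192.0.2.10"}


def translate(msg: Message, target_protocol: str) -> Message:
    """Rewrite a message into the target network's addressing scheme."""
    if msg.protocol == "legacy" and target_protocol == "ip":
        return Message("ip", LEGACY_TO_IP[msg.dest], msg.payload)
    raise ValueError(f"no translation from {msg.protocol} to {target_protocol}")


def relay(msg: Message, egress_protocol: str) -> Message:
    """Forward unchanged when protocols match; otherwise translate first."""
    if msg.protocol == egress_protocol:
        return msg
    return translate(msg, egress_protocol)
```

For example, `relay(Message("legacy", "LU01", b"hello"), "ip")` would return an IP-addressed message with the same payload, while a message that is already in the egress protocol passes through untouched.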

Benefits and challenges of edge devices

There are both benefits and challenges associated with the use of edge devices. Some of these include the following:

- Expanded access. The primary benefit of edge devices is that they enable client devices to access networks and resources that would otherwise be inaccessible. Without a wireless AP -- an edge device -- for example, wireless clients wouldn't be able to access resources on the wired network.

- Device management. One of the primary challenges of operating edge devices is device management. An edge device can enable numerous additional devices to access a network. These devices might not have been registered on the network, and they could be running operating systems that differ from those of devices that have traditionally been used on the network. As such, organizations must consider how best to register and manage these devices.

- Security. Although many edge devices include native security capabilities, organizations must consider how best to implement these security features. In addition, they need a strategy for keeping edge devices up to date to prevent the device itself from being exploited.

- Bottlenecks. An edge device can become a network traffic bottleneck if it fails to provide enough throughput to handle the required network traffic.

- Artificial intelligence (AI) and machine learning (ML). The edge computing performed in an IoT context frees up processing resources in cloud-based systems to facilitate complex data analytics. It also extends the reach of AI and ML beyond abstract problem-solving and into the realm of real-world predictions, such as equipment failure.

- Improved regulatory compliance. Edge devices and edge computing enable more accurate and efficient maintenance, enhancing an organization's regulatory compliance.

Edge device hardware and technology

Initially, an edge device was defined simply as a piece of hardware that enables communications between two networks. Over time, though, edge devices have evolved, with new types of edge devices being introduced. The most notable of these additions is the IoT edge device.

IoT devices are commonly defined as nontraditional, internet-enabled devices that are connected to a network or to the internet. In industrial settings, sensors often make up the bulk of the IoT devices in use. These might include things such as temperature sensors, moisture sensors or radio-frequency identification scanners. Although these types of devices aren't edge devices in the traditional networking sense, they're commonly connected to an edge gateway.

The idea behind this architecture is that because IoT devices generate data, the systems that use that data should be placed as close to the devices as possible. Hence, IoT devices commonly send data through a gateway -- an edge device -- which then passes the data to the computing infrastructure that ultimately stores, analyzes or processes it.

One of the problems that has long faced IoT devices is that they can generate massive amounts of data. This data must be analyzed if it's to be of use. This can be especially problematic if the data must be uploaded to a cloud service, as doing so incurs direct costs related to the uploading, processing and storage of the data. Depending on the volume of data to be uploaded, the availability of internet bandwidth might also be an issue. Intelligent edge devices, which are sometimes referred to as IoT edge devices, can help with these problems.

An intelligent edge device is a sophisticated IoT device that performs some degree of data processing within the device itself. For example, an intelligent industrial sensor might use AI to determine whether a part is defective. Other examples of intelligent edge devices include computer vision systems and some speech recognition devices.
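The defective-part example can be sketched with a simple tolerance check standing in for the AI model. That substitution is an assumption made to keep the example short; the function names, the nominal dimension and the tolerance are all hypothetical. The point is only that the decision happens on the device, so just the results need to leave it.

```python
from typing import Dict, List


def is_defective(measured_mm: float, nominal_mm: float = 10.0,
                 tolerance_mm: float = 0.05) -> bool:
    """Stand-in for an on-device model: flag parts outside tolerance."""
    return abs(measured_mm - nominal_mm) > tolerance_mm


def process_locally(measurements: List[float]) -> Dict[str, object]:
    """Inspect every part on the device and report only the defects."""
    defects = [m for m in measurements if is_defective(m)]
    return {"inspected": len(measurements), "defects": defects}
```

Only the inspection count and the out-of-tolerance measurements would be uploaded in this sketch, rather than every raw measurement.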

With the global edge computing market predicted to increase significantly each year, numerous vendors have introduced devices, platforms and services to help data centers as they build edge ecosystems.