
Review the latest investments in AWS' machine learning platform

Improved deep learning capabilities lead this machine learning roundup. Learn how AWS is targeting both machine-learning-savvy developers and data scientists.

AWS is always adding services and features, but it's been particularly active with its AI services of late.

For example, AWS rolled out 13 machine learning products at re:Invent 2018 alone. AWS' massive investment in product development is good for users, but this pace of change makes it difficult for IT professionals to keep up.

These updates fall into two camps. The Deep Learning AMIs and Deep Learning Containers target developers who build sophisticated custom models on the AWS machine learning platform, as well as the DevOps teams charged with deploying those models on cloud infrastructure.

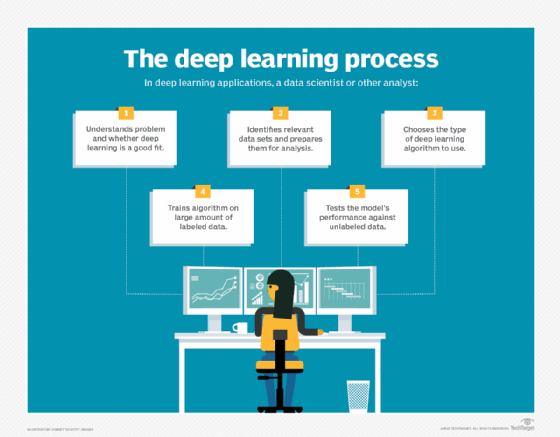

In contrast, the new managed AI services, which join existing AWS products for image recognition, speech transcription and interactive chatbots, are designed for data scientists and non-specialists who want to analyze large, complicated data sets with techniques more advanced than standard statistical analysis.

In this breakdown, we'll highlight the recent updates to the AWS machine learning platform that users need to know about.

AWS Deep Learning Containers

Historically, creating a programming and testing environment for deep learning models has been complicated and time-consuming. Because it was a DIY effort, data scientists had to navigate several source code repositories and deal with many dependencies and configuration nuances.

NVIDIA, whose GPU hardware accelerates deep learning workloads, was among the first companies to address this problem with NVIDIA GPU Cloud (NGC). NGC has a registry of ready-to-use containers that include all the elements required to develop deep learning applications for NVIDIA environments.

AWS took a page from NVIDIA's strategy and added Deep Learning Containers, a set of Docker images for the most popular deep learning development frameworks. Deep Learning Containers can run on:

- Amazon Elastic Kubernetes Service;

- Amazon Elastic Container Service;

- Amazon SageMaker;

- Individual EC2 instances; and

- Self-managed Kubernetes clusters using EC2 instances.

Deep Learning Containers support TensorFlow version 1.14.0, MXNet version 1.4.1 and the NVIDIA CUDA toolkit version 10.0. The SageMaker support is helpful for users who want to develop machine learning models without the overhead of infrastructure management. SageMaker provides several built-in machine learning algorithms, but users can supplement those with custom algorithms and containers, too.

Users can install Deep Learning Containers for free from the AWS Marketplace or Amazon Elastic Container Registry (ECR). The containers can be used for both model training and inference with TensorFlow or MXNet, but distributed training is supported only with TensorFlow. The images are built on Ubuntu 16.04 and work with either CPU or GPU instances.

Both frameworks include APIs for Python, a popular scripting language among data scientists. AWS supports distributed training for TensorFlow through Horovod, a training framework initially developed by Uber.

The process for running these containers varies by environment. On a Kubernetes cluster, for example, users write a pod specification that references one of the Deep Learning Containers images and submit it to the cluster with kubectl.
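To make the Kubernetes step concrete, here is a minimal sketch that builds a pod manifest for a Deep Learning Containers image as a Python dict and prints it as JSON, which kubectl accepts as well as YAML. The image URI is a placeholder, not a real repository or tag; look up the actual URI for your framework, version and region in the AWS documentation. The pod name, the `train.py` entry point and the GPU request are hypothetical, and requesting `nvidia.com/gpu` assumes the cluster runs the NVIDIA device plugin.

```python
import json


def build_dlc_pod(name, image_uri, command, gpus=0):
    """Return a minimal Kubernetes v1 Pod manifest (as a dict) that runs
    a container image, e.g. a Deep Learning Containers image."""
    container = {"name": name, "image": image_uri, "command": command}
    if gpus > 0:
        # Assumes the NVIDIA device plugin exposes GPUs as nvidia.com/gpu.
        container["resources"] = {"limits": {"nvidia.com/gpu": gpus}}
    return {
        "apiVersion": "v1",
        "kind": "Pod",
        "metadata": {"name": name},
        "spec": {
            "restartPolicy": "OnFailure",  # rerun the container if training crashes
            "containers": [container],
        },
    }


if __name__ == "__main__":
    # Hypothetical placeholder URI: substitute the real repository and tag.
    image = "<account>.dkr.ecr.<region>.amazonaws.com/tensorflow-training:<tag>"
    pod = build_dlc_pod("tf-training", image, ["python", "train.py"], gpus=1)
    print(json.dumps(pod, indent=2))
```

Redirect the output to a file (for example, `python make_pod.py > dlc-pod.json`) and submit it with `kubectl apply -f dlc-pod.json`. Generating the manifest in code keeps the image URI and resource limits in one place when you run several framework versions side by side.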

Deep learning for smaller projects

In 2019, AWS also bolstered framework support for its pre-built Deep Learning Amazon Machine Images (AMIs). These are useful for developers working on smaller projects that use a few VMs rather than an entire container cluster. AWS has added support for the following environments:

- PyTorch 1.1, Chainer 5.4 and CUDA 10 support for MXNet; and

- Amazon Linux 2, TensorFlow 1.13.1, MXNet 1.4.0 and Chainer 5.3.0.
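Because new Deep Learning AMI versions ship regularly, a small script that finds the newest image is handy when launching a few VMs. The sketch below separates the selection logic, which works on plain dicts shaped like the `Images` entries returned by EC2's DescribeImages call, from the boto3 query itself. The `"Deep Learning AMI (Amazon Linux 2)"` name prefix and the `amazon` owner alias are assumptions to verify in the EC2 console before relying on them.

```python
def newest_image(images, name_prefix):
    """Return the ImageId of the most recently created image whose Name
    starts with name_prefix, or None if nothing matches. `images` is a list
    of dicts shaped like the "Images" entries from EC2 DescribeImages."""
    matches = [img for img in images if img.get("Name", "").startswith(name_prefix)]
    if not matches:
        return None
    # CreationDate is an ISO 8601 string, so lexical order matches time order.
    return max(matches, key=lambda img: img["CreationDate"])["ImageId"]


def find_dl_ami(name_prefix="Deep Learning AMI (Amazon Linux 2)", region="us-east-1"):
    """Query EC2 for matching images (needs boto3 and AWS credentials).
    The name prefix and owner alias are assumptions, not confirmed values."""
    import boto3  # imported lazily so newest_image() works without boto3

    ec2 = boto3.client("ec2", region_name=region)
    resp = ec2.describe_images(
        Owners=["amazon"],
        Filters=[{"Name": "name", "Values": [name_prefix + "*"]}],
    )
    return newest_image(resp["Images"], name_prefix)
```

Keeping `newest_image` free of AWS calls makes the selection rule easy to test offline; only `find_dl_ami` touches the network.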

AWS also added TensorFlow 1.12 support, along with a new EIPredictor Python API, to Amazon Elastic Inference. Elastic Inference lets users attach GPU-powered accelerators to SageMaker or EC2 instances to speed up deep learning inference.

AWS AI services wrap-up

Amazon has also spent the last eight months putting the finishing touches on AI services first announced at re:Invent 2018. The following services are now generally available.

Amazon Forecast: This is a managed service that provides time series forecasts using technology Amazon initially developed for internal use. Users provide historical data and relevant metadata, and the system automatically builds a predictive machine learning model.
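The historical data Forecast consumes is a time series: one value per item per time period. As a minimal sketch, the function below rolls raw sales events up into daily totals and emits CSV rows in the order item_id, timestamp, target_value. That column layout is an assumption modeled on Forecast's custom-domain target time series schema; the actual order is whatever schema you declare when creating the dataset, so check it against the current documentation.

```python
import csv
import io
from collections import defaultdict


def to_forecast_csv(events):
    """Aggregate (item_id, iso_timestamp, quantity) events into daily totals
    and return CSV text with rows: item_id, YYYY-MM-DD, target_value.
    The column order is an assumed schema, not a confirmed requirement."""
    totals = defaultdict(float)
    for item_id, ts, qty in events:
        day = ts[:10]  # keep the YYYY-MM-DD part of an ISO 8601 timestamp
        totals[(item_id, day)] += qty
    out = io.StringIO()
    writer = csv.writer(out)
    # Sort so each item's history is contiguous and in chronological order.
    for (item_id, day), value in sorted(totals.items()):
        writer.writerow([item_id, day, value])
    return out.getvalue()
```

The resulting file would then be uploaded to Amazon S3 and imported into a Forecast dataset; the aggregation granularity (daily here) should match the forecast frequency you intend to request.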

Amazon Personalize: This managed service provides a personalization and recommendation engine that relies on models Amazon uses in its retail business. Again, users merely provide the data set, which could include page views, purchases or liked videos, along with the inventory of items from which to make recommendations. Personalize handles the infrastructure and machine learning models.
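"Providing the data set" in Personalize means describing it with a schema and uploading matching records. The sketch below defines an Avro-style schema for an interactions data set using the USER_ID, ITEM_ID and TIMESTAMP field names from the Personalize documentation, and turns (user, item, time) events such as page views or purchases into CSV with a matching header row. Treat the exact required fields as something to confirm in the current docs; the record name and sample values are illustrative.

```python
import csv
import io
import json

# Avro-style schema for an interactions data set. Field names follow the
# Personalize docs; verify the required fields before using this for real.
INTERACTIONS_SCHEMA = {
    "type": "record",
    "name": "Interactions",
    "namespace": "com.amazonaws.personalize.schema",
    "fields": [
        {"name": "USER_ID", "type": "string"},
        {"name": "ITEM_ID", "type": "string"},
        {"name": "TIMESTAMP", "type": "long"},  # Unix epoch seconds
    ],
    "version": "1.0",
}

# Personalize takes the schema as a JSON string, e.g. via boto3's
# personalize client: create_schema(name="...", schema=SCHEMA_JSON)
SCHEMA_JSON = json.dumps(INTERACTIONS_SCHEMA)


def interactions_csv(events):
    """Turn (user_id, item_id, epoch_seconds) tuples into CSV text whose
    header row matches the schema's field names."""
    out = io.StringIO()
    writer = csv.writer(out)
    writer.writerow([field["name"] for field in INTERACTIONS_SCHEMA["fields"]])
    for user_id, item_id, ts in events:
        writer.writerow([user_id, item_id, int(ts)])  # TIMESTAMP is an integer
    return out.getvalue()
```

Deriving the header from the schema dict keeps the CSV and the declared schema from drifting apart, which is a common cause of failed dataset imports.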

ML Insights for Amazon QuickSight: This machine learning module for QuickSight, AWS' interactive BI service, simplifies the analysis of massive data sets and the creation of visualizations. ML Insights is a set of machine learning and natural language generation features designed for forecasting and anomaly detection, and it can generate textual narratives that describe the results and their context.