Docker Swarm

What is Docker Swarm?

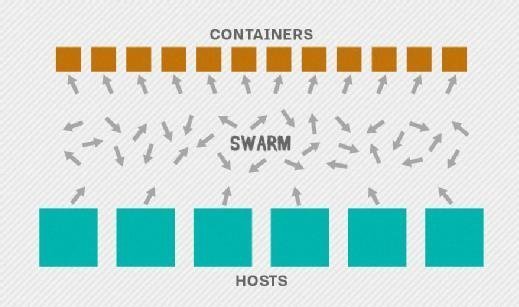

Docker Swarm is a container orchestration tool for clustering and scheduling Docker containers. With Swarm, IT administrators and developers can establish and manage a cluster of Docker nodes as a single virtual system.

Docker Swarm lets developers join multiple physical or virtual machines into a cluster. Each machine runs the Docker daemon and is referred to as a node.

Docker Swarm also lets admins and developers launch Docker containers, connect containers to multiple hosts, handle each node's resources and improve application availability throughout a system.

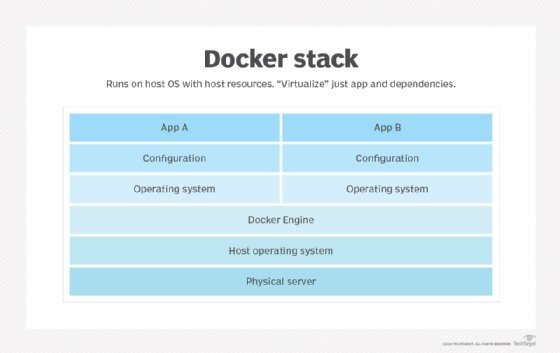

Docker Engine, the layer between the operating system and container images, natively uses Swarm mode. Swarm mode integrates the orchestration capabilities of Docker Swarm into Docker Engine 1.12 and subsequent releases. Docker Swarm uses the standard Docker API to interface with other Docker tools, such as Docker Machine.

What does Docker Swarm do?

The Docker platform includes a variety of tools, services, content and automations that help developers build, ship and run applications without configuring or managing the underlying development environment. With Docker, developers can package and run applications in lightweight containers -- loosely isolated environments that enable an application to run efficiently and seamlessly in many different conditions.

With Docker Swarm, containers can be spread across multiple hosts, and each node in the cluster can deploy and access containers within that swarm. A swarm consists of at least one manager node, which controls the cluster's activities and keeps it running efficiently, and typically several worker nodes.

Clustering is an important feature for container technology. It creates a cooperative group of systems that provides redundancy, enabling Docker Swarm failover if one or more nodes experience an outage. A Docker Swarm cluster also enables administrators and developers to add or remove container instances as computing demands change.
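The failover idea can be illustrated with a small simulation. The following is a minimal sketch, not Docker's actual rescheduling logic: it assumes a cluster is just a mapping from node names to container names, and the function name `reschedule_after_failure` and the "least-loaded survivor" rule are hypothetical choices made for the example.

```python
from typing import Dict, List


def reschedule_after_failure(
    placement: Dict[str, List[str]], failed_node: str
) -> Dict[str, List[str]]:
    """Return a new placement with the failed node's containers moved.

    Toy model (an assumption of this sketch): each orphaned container
    goes to whichever surviving node currently runs the fewest containers.
    """
    survivors = {
        node: list(containers)
        for node, containers in placement.items()
        if node != failed_node
    }
    if not survivors:
        raise RuntimeError("no surviving nodes to take over the workload")
    for container in placement.get(failed_node, []):
        # Least-loaded survivor first; ties are broken by node name.
        target = min(survivors, key=lambda n: (len(survivors[n]), n))
        survivors[target].append(container)
    return survivors


if __name__ == "__main__":
    before = {
        "node-a": ["web.1", "web.2"],
        "node-b": ["web.3"],
        "node-c": ["web.4", "web.5"],
    }
    print(reschedule_after_failure(before, "node-c"))
```

In this model the workload survives the loss of `node-c` because the remaining nodes absorb its containers, which is the redundancy benefit described above.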

Docker Swarm manager node and worker nodes

An IT administrator controls Swarm through a swarm manager, which orchestrates and schedules containers within the swarm. The swarm manager, which is also a Docker node, lets a user create a primary manager instance and multiple replica instances in case the primary instance fails.

The manager node assigns tasks to the swarm's worker nodes and manages the swarm's activities. The worker nodes receive and execute the tasks assigned by the swarm manager when they have the resources to do so. In Docker Engine's swarm mode, a user can deploy manager and worker nodes using the swarm manager at runtime. Docker recommends no more than seven manager nodes in a single swarm.
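Why several managers help when one fails can be shown with simple arithmetic. The sketch below assumes managers reach decisions by majority vote (swarm mode uses the Raft consensus algorithm for this); the function names `quorum_size` and `tolerated_failures` are illustrative and not part of any Docker API.

```python
def quorum_size(managers: int) -> int:
    """Smallest number of managers that forms a majority."""
    if managers < 1:
        raise ValueError("a swarm needs at least one manager")
    return managers // 2 + 1


def tolerated_failures(managers: int) -> int:
    """How many managers can be lost while a majority remains."""
    return managers - quorum_size(managers)


if __name__ == "__main__":
    for count in (1, 3, 4, 5, 7):
        print(count, quorum_size(count), tolerated_failures(count))
```

Under this majority assumption, three managers tolerate one failure, five tolerate two and seven tolerate three. An even count adds no extra tolerance over the odd count below it (four managers still tolerate only one failure), which is why odd numbers of managers are the usual choice.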

Docker Swarm automated load balancing

Docker Swarm schedules tasks to ensure sufficient resources for all distributed containers in the swarm. It assigns containers to underlying nodes and optimizes resources by automatically scheduling container workloads to run on the most appropriate host. Such orchestration ensures containers are only launched on systems with adequate resources to maintain necessary performance and efficiency levels for containerized application workloads.

Swarm supports three strategies for deciding which node each container should run on:

- Spread. Spread is the default setting. It balances containers across the nodes in a cluster based on each node's available CPU and RAM as well as the number of containers the node currently runs. The benefit of the Spread strategy is that if a node fails, only a few containers are lost.

- BinPack. BinPack schedules containers to ensure full use of each node. Once a node is full, it moves on to the next in the cluster. BinPack uses a smaller amount of infrastructure and leaves more space for larger containers on unused machines.

- Random. The Random setting assigns containers to nodes at random.
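The difference between the three strategies is easiest to see in a toy scheduler. The following is a minimal sketch under stated assumptions, not Swarm's implementation: the `Node` class, the `load` metric (the larger of the CPU and RAM fractions in use) and the tie-breaking rules are all hypothetical simplifications.

```python
import random
from dataclasses import dataclass, field
from typing import List, Optional


@dataclass
class Node:
    name: str
    cpus: float                 # total CPU cores on the node
    memory_mb: int              # total RAM on the node
    used_cpus: float = 0.0
    used_memory_mb: int = 0
    containers: List[str] = field(default_factory=list)

    def fits(self, cpus: float, memory_mb: int) -> bool:
        return (self.used_cpus + cpus <= self.cpus
                and self.used_memory_mb + memory_mb <= self.memory_mb)

    def load(self) -> float:
        # Assumed metric: the larger of the CPU and RAM fractions in use.
        return max(self.used_cpus / self.cpus,
                   self.used_memory_mb / self.memory_mb)


def place(nodes: List[Node], container: str, cpus: float, memory_mb: int,
          strategy: str = "spread",
          rng: Optional[random.Random] = None) -> str:
    """Pick a node for the container, record the placement, return its name."""
    candidates = [n for n in nodes if n.fits(cpus, memory_mb)]
    if not candidates:
        raise RuntimeError("no node has enough free resources")
    if strategy == "spread":
        # Least-loaded node, then the one with the fewest containers.
        node = min(candidates, key=lambda n: (n.load(), len(n.containers)))
    elif strategy == "binpack":
        # Most-loaded node that still has room.
        node = max(candidates, key=lambda n: (n.load(), len(n.containers)))
    elif strategy == "random":
        node = (rng or random).choice(candidates)
    else:
        raise ValueError(f"unknown strategy: {strategy}")
    node.containers.append(container)
    node.used_cpus += cpus
    node.used_memory_mb += memory_mb
    return node.name


if __name__ == "__main__":
    for strategy in ("spread", "binpack"):
        cluster = [Node(f"node-{i}", cpus=4, memory_mb=8192) for i in (1, 2, 3)]
        names = [place(cluster, f"c{i}", 1, 1024, strategy) for i in range(4)]
        print(strategy, names)
```

With three identical empty nodes, this model's spread strategy distributes the first three containers one per node, while binpack keeps filling the first node until it has no room left.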

Docker Swarm services

In Docker Swarm, users can deploy services in two ways: replicated and global.

Replicated services in swarm mode require the administrator to specify how many identical "replica" tasks the swarm manager must assign to available nodes. By contrast, a global service runs on every node: the swarm manager schedules exactly one task on each available node, which suits workloads such as monitoring agents that need to run everywhere.
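The two service modes can be contrasted with a short sketch. It is an illustration only: the round-robin assignment for replicated services, the task naming and the function names `replicated_tasks` and `global_tasks` are assumptions of this example, not the swarm manager's real scheduling behavior.

```python
from typing import Dict, List


def replicated_tasks(service: str, replicas: int,
                     nodes: List[str]) -> Dict[str, List[str]]:
    """Distribute a fixed number of replica tasks over the nodes.

    Round-robin is used here purely for simplicity.
    """
    if not nodes:
        raise ValueError("at least one node is required")
    assignment: Dict[str, List[str]] = {node: [] for node in nodes}
    for i in range(replicas):
        node = nodes[i % len(nodes)]
        assignment[node].append(f"{service}.{i + 1}")
    return assignment


def global_tasks(service: str, nodes: List[str]) -> Dict[str, List[str]]:
    """Schedule exactly one task of the service on every node."""
    return {node: [f"{service}.{node}"] for node in nodes}


if __name__ == "__main__":
    nodes = ["n1", "n2", "n3"]
    print(replicated_tasks("web", 5, nodes))
    print(global_tasks("monitor", nodes))
```

The replicated call produces five tasks regardless of how many nodes exist, whereas the global call always produces as many tasks as there are nodes.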

Docker Swarm filters

Swarm has five filters for scheduling containers:

- Constraint. Also known as node tags, constraints are key-value pairs associated with particular nodes. When creating a container, a user can specify one or more key-value pairs to select the subset of nodes on which it may run.

- Affinity. To ensure containers run on the same node, the affinity filter tells one container to run next to another based on an identifier, image or label.

- Port. Ports represent a unique resource. When a container needs a port that is already occupied on one node, the scheduler places it on another node in the cluster where that port is free.

- Dependency. When containers depend on each other, this filter schedules them on the same node.

- Health. If a node is not functioning properly, this filter will prevent scheduling containers on it.
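The five filters above can be thought of as successive checks that narrow down the list of candidate nodes. The following is a minimal sketch of that idea, not Swarm's filter code: the `Host` and `Request` classes, their field names and the function `eligible_nodes` are hypothetical, and affinity is simplified to matching on a container name only.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Optional, Set


@dataclass
class Host:
    name: str
    labels: Dict[str, str] = field(default_factory=dict)
    used_ports: Set[int] = field(default_factory=set)
    containers: Set[str] = field(default_factory=set)
    healthy: bool = True


@dataclass
class Request:
    constraints: Dict[str, str] = field(default_factory=dict)
    affinity: Optional[str] = None          # container to run next to
    port: Optional[int] = None              # host port the container needs
    depends_on: Set[str] = field(default_factory=set)


def eligible_nodes(hosts: List[Host], request: Request) -> List[str]:
    """Return the names of hosts that pass every filter."""
    result = []
    for host in hosts:
        if not host.healthy:
            continue  # health filter
        if any(host.labels.get(k) != v for k, v in request.constraints.items()):
            continue  # constraint filter
        if request.affinity is not None and request.affinity not in host.containers:
            continue  # affinity filter
        if request.port is not None and request.port in host.used_ports:
            continue  # port filter
        if not request.depends_on <= host.containers:
            continue  # dependency filter
        result.append(host.name)
    return result


if __name__ == "__main__":
    hosts = [
        Host("n1", {"storage": "ssd"}, used_ports={80}, containers={"db"}),
        Host("n2", {"storage": "ssd"}, containers={"cache"}),
        Host("n3", {"storage": "hdd"}),
        Host("n4", {"storage": "ssd"}, healthy=False),
    ]
    print(eligible_nodes(hosts, Request(constraints={"storage": "ssd"}, port=80)))
```

A scheduling strategy such as spread would then choose among whichever nodes remain after filtering.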
