
NVMe and SCM: Implications for the future of storage

Explore the various ways NVMe technology vendors are preparing the way for organizations to transition to storage class memory in their computing environments.

NVMe has taken hold in the enterprise, driven by performance-hungry applications, such as AI, machine learning and analytics, that need high-speed, low-latency access to storage media. Now storage class memory, which offers even lower latency, is making inroads.

This article looks at how NVMe is preparing the way for organizations to transition to storage class memory (SCM). As NVMe and SCM drives become mainstream, the bottleneck will shift away from the storage media and toward the CPU, interconnect, storage software stack, drivers and protocols. Let's explore how NVMe and SCM are spurring new ways to create faster storage at lower costs.

A quick review

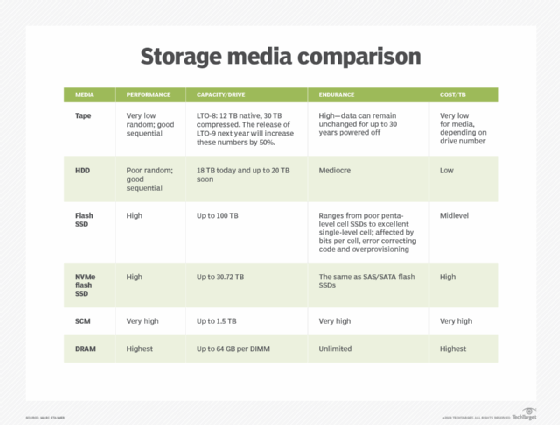

Storage has always been about tradeoffs among performance, capacity and cost. The highest-performance media, such as dynamic RAM (DRAM), offer relatively low capacities at very high costs per terabyte.

At the other extreme is tape. Tape delivers very low random performance, good sequential performance and high capacities at a very low cost. In between these performance, capacity and cost extremes are flash SSDs and HDDs.

Flash SSDs have excellent read and write performance, though substantially lower than DRAM's, and good to high capacities -- as high as 100 TB in a 3.5-inch drive -- but at a relatively high price compared to HDDs or tape. Price, performance, capacity and durability for flash vary with the number of bits stored per NAND flash cell.

Compared with DRAM or SSDs, HDDs deliver relatively poor random read and write performance, but they offer high capacities, good sequential performance, mediocre endurance and a comparatively low cost per terabyte.

But what if a workload needs more performance than common flash SSDs deliver, at a lower cost than DRAM? There are always workloads -- e-commerce, online transaction processing, data warehouses, AI analytics, machine learning and deep learning -- that demand lower latencies and more IOPS or throughput, yet the cost and limited capacity of DRAM are difficult to justify. To fill this product gap, vendors created NVMe flash SSDs and NVMe SCM, which Intel markets as Optane SSDs.

NVMe flash SSDs

NVMe flash SSDs are designed to reduce flash SSD latencies. Latency, the time it takes to read or write the first byte, is the most important performance factor for transactions and messaging. Tail latency, the latency of the slowest requests (typically measured at a high percentile such as the 99th), determines how long it takes for the last byte to be accessed or synchronized. Tail latency is extremely important for applications that use the Message Passing Interface (MPI), which is prevalent in high-performance computing.
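Tail latency is usually reported as a high percentile of per-request latency rather than as an average. The minimal Python sketch below computes nearest-rank percentiles from a list of latency samples; the sample values are made up for illustration and are not measurements from any drive.

```python
import math


def percentile(samples_us, pct):
    """Nearest-rank percentile of latency samples (in microseconds)."""
    if not samples_us:
        raise ValueError("need at least one sample")
    if not 0 < pct <= 100:
        raise ValueError("pct must be in (0, 100]")
    ordered = sorted(samples_us)
    # Nearest-rank method: the smallest value with at least pct% of the
    # samples at or below it.
    rank = math.ceil(pct * len(ordered) / 100)
    return ordered[rank - 1]


# Hypothetical samples: most I/Os finish in about 30 us, a few straggle.
samples = [30] * 95 + [35, 40, 120, 250, 900]
print("p50:", percentile(samples, 50))
print("p99:", percentile(samples, 99))
print("max:", percentile(samples, 100))
```

Here the median is 30 microseconds but the 99th percentile is 250. In a bulk-synchronous MPI job, where every rank waits for the slowest one before starting the next step, the tail value rather than the median tends to set the pace.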

NVMe is an open standard specification for accessing nonvolatile media, such as NAND flash and 3D XPoint -- the technology behind Optane -- across the PCIe bus. NVMe eliminates a significant latency bottleneck: the SAS or SATA controller. Any such controller adds latency because it sits between the PCIe bus and the drives, so removing it significantly reduces end-to-end latency. NVMe flash drives typically reduce SSD latency by two-thirds or more, to approximately 30 microseconds (0.03 milliseconds).
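The latency figures above can be checked with simple arithmetic. A two-thirds cut that lands at about 30 microseconds implies a starting latency of roughly 90 microseconds. At a queue depth of one, meaning a single outstanding I/O (a simplifying assumption; real drives keep many commands in flight), IOPS is simply the reciprocal of latency. A short Python sketch:

```python
def implied_baseline_us(new_latency_us, reduction_fraction):
    """Latency before a cut of `reduction_fraction`, given the latency after it."""
    return new_latency_us / (1 - reduction_fraction)


def qd1_iops(latency_us):
    """IOPS at queue depth 1: one I/O completes per latency period."""
    return 1_000_000 / latency_us


nvme_us = 30.0
baseline_us = implied_baseline_us(nvme_us, 2 / 3)
print(f"implied SAS/SATA SSD latency: {baseline_us:.0f} us")
print(f"QD1 IOPS before: {qd1_iops(baseline_us):,.0f}")
print(f"QD1 IOPS after:  {qd1_iops(nvme_us):,.0f}")
```

Under that single-request assumption, cutting latency from about 90 to about 30 microseconds triples IOPS, from roughly 11,111 to roughly 33,333.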

NVMe also improves throughput and IOPS. Getting the full performance benefit requires PCIe Gen 4, which has twice the bandwidth of PCIe Gen 3; however, most current storage systems are still PCIe Gen 3.
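To see why the PCIe generation matters, the sketch below estimates the per-direction bandwidth of an x4 link, the width commonly used by NVMe SSDs. It assumes the published per-lane signaling rates (8 GT/s for Gen 3, 16 GT/s for Gen 4) and 128b/130b encoding, and it ignores packet and protocol overhead, so real throughput is somewhat lower.

```python
# Per-lane signaling rate (GT/s) and line-code efficiency for each generation.
PCIE_GENERATIONS = {
    3: (8.0, 128 / 130),
    4: (16.0, 128 / 130),
}


def link_bandwidth_gb_s(gen, lanes=4):
    """Approximate usable PCIe link bandwidth in GB/s, per direction.

    Only 128b/130b encoding is accounted for; packet and protocol
    overhead reduce real throughput further.
    """
    gt_per_s, efficiency = PCIE_GENERATIONS[gen]
    # Each transfer moves one bit per lane; divide by 8 to get bytes.
    return gt_per_s * efficiency * lanes / 8


for gen in (3, 4):
    print(f"PCIe Gen {gen} x4: {link_bandwidth_gb_s(gen):.2f} GB/s")
```

A Gen 3 x4 link tops out near 3.94 GB/s in each direction, a level that fast NVMe flash SSDs can already approach, while Gen 4 doubles it to about 7.88 GB/s.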

NVMe drives connect directly to the PCIe bus. But each CPU socket provides a limited number of PCIe lanes, and therefore drive slots, depending on the CPU. This limits the number of NVMe drives per storage controller, which is the primary reason so many NVMe flash storage systems have 24 or fewer NVMe drives.
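The drive-count ceiling is essentially a lane budget. The Python sketch below assumes each NVMe drive takes an x4 link and that some lanes are reserved for networking and inter-controller links; the lane counts used are hypothetical, not the specification of any particular CPU.

```python
def max_nvme_drives(lanes_per_socket, sockets, reserved_lanes, lanes_per_drive=4):
    """How many NVMe drives fit in a controller's PCIe lane budget.

    All inputs are hypothetical; real lane counts come from the CPU and
    motherboard documentation.
    """
    available = lanes_per_socket * sockets - reserved_lanes
    if available < 0:
        raise ValueError("reserved lanes exceed the lanes the CPUs provide")
    # Each drive needs a full link; leftover lanes cannot host another drive.
    return available // lanes_per_drive


# Hypothetical dual-socket controller with 48 lanes per socket and two x16
# links held back for front-end networking and the controller interconnect.
print(max_nvme_drives(lanes_per_socket=48, sockets=2, reserved_lanes=32))
```

With 32 of 96 lanes reserved, this controller has room for 16 drives; with none reserved, the same budget tops out at 24. PCIe switches can fan out to more drives, but the drives behind a switch share its upstream bandwidth.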

Startup vendor Pavilion Data Systems gets around this limitation with an architecture that uses more controllers -- up to 20 controllers and 72 NVMe flash drives in 4RU. Fungible, another startup, overcomes the PCIe slot limitation with internal switching in its data processing unit (DPU), an application-specific integrated circuit. Most mainstream storage vendors, such as Dell EMC, Hewlett Packard Enterprise, Hitachi Vantara and NetApp, use scale-out clustering to add controllers and drives to a storage system, an approach that also consumes more rack space.

As long as the tradeoff between the controllers and rack space is acceptable and the interconnect between the storage controller nodes does not add much latency, NVMe noticeably reduces latency and increases performance. SCM goes further.

Storage class memory

Intel's Optane SCM is based on 3D XPoint. Compared with flash, it offers lower latencies, higher throughput, more IOPS and greater durability. Optane SCM drives are NVMe-based. However, they should not be confused with Intel's Optane Persistent Memory (PMEM) DIMMs, which plug into the DDR4 (Double Data Rate 4) memory slots of a server or controller.

PMEM can be accessed in two ways. The first, Memory Mode, is nonpersistent and makes PMEM look like slower, less-expensive DRAM. The second, App Direct Mode, is persistent and requires modifications to applications and file systems so they can use PMEM as storage. Most IT organizations cannot take advantage of App Direct Mode, but there are exceptions. Vendors such as Formulus Black and MemVerge can mask that requirement from applications and file systems. Oracle uses App Direct Mode in its Exadata system for Oracle Database, and SAP S/4HANA also uses App Direct Mode for its database. App Direct Mode has four variations: raw device access, memory access, file system and NVM-aware file system.
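The memory-access style of App Direct Mode is what demands application changes: the program maps storage into its address space and updates it with ordinary memory stores instead of read and write system calls. The minimal Python sketch below shows that pattern with the standard `mmap` module. The function name and the `/mnt/pmem0` location are hypothetical, and the code only behaves as persistent memory if the file sits on a DAX-mounted file system backed by PMEM; on an ordinary disk it runs the same way but goes through the page cache. Production code would more likely use Intel's Persistent Memory Development Kit (PMDK), which flushes CPU caches directly rather than calling `msync()`.

```python
import mmap
import os


def persist_bytes(path, offset, payload, size=4096):
    """Write `payload` at `offset` through a memory mapping, then flush it.

    `path` is hypothetical; for App Direct Mode it would be a file on a
    DAX-mounted file system backed by PMEM (for example under /mnt/pmem0).
    """
    if offset < 0 or offset + len(payload) > size:
        raise ValueError("payload does not fit in the mapped region")
    fd = os.open(path, os.O_RDWR | os.O_CREAT, 0o600)
    try:
        if os.fstat(fd).st_size < size:
            os.ftruncate(fd, size)  # a mapping cannot extend past end of file
        with mmap.mmap(fd, size) as region:
            # Byte-addressable store: no read()/write() system call per update.
            region[offset:offset + len(payload)] = payload
            # msync(): ask the OS to make the mapped stores durable.
            region.flush()
    finally:
        os.close(fd)
```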

For most storage implementations and storage systems, SCM drives are the Optane implementation of choice. They are simple and require no modifications to the storage stack; an SCM drive is just a faster, lower-latency drive. SCM is generally three to five times faster than NVMe flash SSDs, but it is also three to five times more expensive. PMEM DIMMs are even faster and cost even more per terabyte.

NVMe and SCM storage implications

Storage vendors are already adapting to NVMe and SCM. Nearly every primary storage system vendor now offers NVMe flash SSD storage options.

What we are seeing is the return of tiered or cached storage. These are not the hybrid storage systems of a few years ago that paired flash SSDs with HDDs. Those systems used flash SSDs as the primary read/write tier or as a read/write cache and then moved data to lower-cost, lower-performance HDDs over time.

Vast Data and StorONE have pioneered a new variation of hybrid solid-state systems. They use NVMe flash SSDs or, more recently, SCM as the primary read/write tier, with lower-cost, high-capacity SAS/SATA quad-level cell (QLC) flash SSDs, which store 4 bits per cell, as the secondary tier for cool and cold data. QLC flash drives deliver much greater capacities at significantly lower costs than other flash drives, trading off lower performance and endurance to gain that capacity. Nimbus Data has announced a 64 TB QLC flash drive in a 3.5-inch form factor that is well suited to these hybrid systems.

SCM has much faster performance and higher endurance than flash SSDs of any bits-per-cell type, whereas QLC has lower performance and endurance than single-level cell (SLC), multi-level cell (MLC) and triple-level cell (TLC) flash SSDs. Using NVMe flash or SCM drives as the primary write tier or cache delivers much better system performance while extending the endurance of the much lower-cost QLC flash SSDs, because the fast tier absorbs most of the write traffic.
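The endurance benefit comes from the fast tier absorbing rewrites of hot data. The toy Python model below assumes a plain least-recently-used (LRU) demotion policy and counts capacity in objects rather than bytes; it is not how Vast Data, StorONE or any other vendor actually places data, only an illustration of the principle.

```python
from collections import OrderedDict


class TwoTierStore:
    """Toy hybrid store: a small fast tier absorbs writes, and a large QLC
    tier holds data that has cooled off. Demotion is plain LRU.
    """

    def __init__(self, fast_capacity):
        self.fast_capacity = fast_capacity
        self.fast = OrderedDict()  # stands in for NVMe flash or SCM, in LRU order
        self.qlc = {}              # stands in for QLC flash
        self.qlc_writes = 0        # writes that actually reach the QLC tier

    def write(self, key, value):
        # A rewrite supersedes any older copy on QLC; dropping it is an
        # invalidation, not a media write, so it is not counted.
        self.qlc.pop(key, None)
        self.fast[key] = value
        self.fast.move_to_end(key)  # mark as most recently used
        if len(self.fast) > self.fast_capacity:
            # Demote the coldest object: the only path that writes to QLC.
            cold_key, cold_value = self.fast.popitem(last=False)
            self.qlc[cold_key] = cold_value
            self.qlc_writes += 1

    def read(self, key):
        if key in self.fast:
            self.fast.move_to_end(key)
            return self.fast[key]
        return self.qlc[key]  # raises KeyError if the key was never written


store = TwoTierStore(fast_capacity=16)
logical_writes = 0
for i in range(1000):
    store.write(f"hot{i % 10}", i)  # 10 hot objects, rewritten constantly
    logical_writes += 1
    if i % 10 == 0:
        store.write(f"cold{i}", i)  # a new object that is never rewritten
        logical_writes += 1

print(f"{store.qlc_writes} of {logical_writes} writes reached the QLC tier")
```

In this run, 1,100 logical writes produce only 94 writes to the QLC tier: the 10 hot objects never leave the fast tier, and each cold object is written to QLC once after it ages out.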

These hybrid systems, which combine NVMe flash or SCM with QLC, are 100% solid-state and deliver much better all-around performance than previous hybrid systems or even many current all-flash systems. More importantly, they cost less than current all-flash systems. Their economics and cost/performance metrics make them a logical candidate for the next mainstream general-purpose systems.
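The cost claim comes down to a capacity-weighted average of media prices. The per-terabyte prices below are hypothetical placeholders chosen only to mirror the rough ratios in this article (SCM at about three times the cost of NVMe flash, QLC cheaper than other flash); they are not vendor pricing.

```python
def blended_cost_per_tb(tiers):
    """Capacity-weighted cost per TB of a system built from media tiers.

    `tiers` is a list of (capacity_tb, cost_per_tb) pairs.
    """
    total_tb = sum(capacity for capacity, _ in tiers)
    if total_tb <= 0:
        raise ValueError("total capacity must be positive")
    total_cost = sum(capacity * cost for capacity, cost in tiers)
    return total_cost / total_tb


# Hypothetical $/TB placeholders, not real pricing:
# NVMe TLC flash = 200, SCM = 3x that = 600, QLC flash = 100.
all_flash = blended_cost_per_tb([(100, 200)])         # 100 TB of NVMe TLC flash
hybrid = blended_cost_per_tb([(10, 600), (90, 100)])  # 10 TB SCM + 90 TB QLC
print(f"all-flash: ${all_flash:.0f}/TB, SCM + QLC hybrid: ${hybrid:.0f}/TB")
```

The outcome depends on the fast-tier fraction: with these placeholder prices, the hybrid reaches cost parity with the all-flash system once SCM makes up 20% of capacity.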

But all-NVMe flash or SCM systems dedicated to extreme performance also have a place in the market. Not only do they use NVMe flash and SCM drives, they also take advantage of NVMe over Fabrics (NVMe-oF), an interconnect protocol that minimizes latency to and from the storage system.