
Consider these key microservices caching strategies

Data caching is a key part of ensuring microservices have easy access to the data they need. We review a few key caching strategies.

Data caching reduces the number of trips a microservice needs to make to a database server and avoids redundant calls to other microservices. Caching can also improve availability, as you can still get data from the cache if a service is down.

However, managing a cache brings its own challenges, particularly in deciding what data to cache and how much of it. Here are some microservices caching strategies that help you avoid overloading a cache with stale or redundant data and maintain high microservices performance.

Determine what to cache

There are two types of caches: preloaded and lazy loaded. In preloaded caches, data is populated before a service starts, so it is ready before the service requests it. In lazy-loaded caches, nothing is loaded until a service asks for it. The cache populates on demand the first time a service requests a piece of data, and all subsequent requests for that same piece of data are served from the cache.
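The difference between the two cache types can be sketched in a few lines of Python. This is a minimal illustration, not a production design: the `Cache` class, the `load_item` function and the `loads` counter are hypothetical names introduced here, and `load_item` simply stands in for a real database query.

```python
from typing import Callable, Dict, Iterable


class Cache:
    """In-memory cache that supports both preloading and lazy loading."""

    def __init__(self, loader: Callable[[str], str]) -> None:
        self._loader = loader            # hypothetical function that reads the data store
        self._data: Dict[str, str] = {}
        self.loads = 0                   # counts trips to the data store

    def preload(self, keys: Iterable[str]) -> None:
        """Preloaded style: populate entries before any request arrives."""
        for key in keys:
            self._fetch(key)

    def get(self, key: str) -> str:
        """Lazy-loaded style: fetch on the first request, then serve from memory."""
        if key not in self._data:
            self._fetch(key)
        return self._data[key]

    def _fetch(self, key: str) -> None:
        self.loads += 1
        self._data[key] = self._loader(key)


def load_item(key: str) -> str:
    """Stand-in for a database query (assumption for this sketch)."""
    return f"item:{key}"


cache = Cache(load_item)
cache.preload(["sku-1", "sku-2"])   # two trips to the store at startup
cache.get("sku-1")                  # served from the cache, no extra trip
cache.get("sku-3")                  # first request triggers a lazy load
cache.get("sku-3")                  # second request is served from the cache
```

In this sketch, preloading pays the loading cost up front for keys you expect to need, while lazy loading pays it once per key on first use.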

You'll need to decide which of these microservices caching strategies is best for a particular application. As an example, an e-commerce application might need to preload frequently used items in the cache so that a new order is processed using an item residing in the cache. However, applications that don't need to access data in this manner may best be left to lazy-loaded caching in order to avoid data overloads.

Consider shared caching

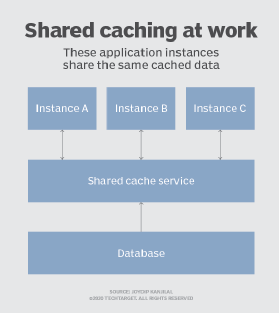

When teams cache data within the memory of a service instance, each instance maintains its own copy of that data to use as soon as it needs it. But while this type of caching is fast, you are still constrained since storage is limited to the memory directly available to the service.

If storage for those services is limited, teams can use shared caching, in which data is shared among application instances and all services access the same cache. This approach scales easily, since you can add more cache servers as needed. Typically, this cache is hosted as a separate service, as shown in Figure 1.
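As a rough sketch of the shared approach, the Python example below has two service instances reading through the same cache object. Everything here is an assumption made for illustration: `SharedCache` is an in-process stand-in for a cache hosted as a separate service, and `ServiceInstance`, `get_price` and `db_reads` are hypothetical names. In a real deployment, the shared cache would be reached over the network rather than held in the same process.

```python
import threading
from typing import Dict, Optional


class SharedCache:
    """In-process stand-in for a cache that runs as a separate service."""

    def __init__(self) -> None:
        self._lock = threading.Lock()    # several instances may call at once
        self._data: Dict[str, str] = {}

    def get(self, key: str) -> Optional[str]:
        with self._lock:
            return self._data.get(key)

    def set(self, key: str, value: str) -> None:
        with self._lock:
            self._data[key] = value


class ServiceInstance:
    """Hypothetical service instance that reads through the shared cache."""

    def __init__(self, name: str, cache: SharedCache) -> None:
        self.name = name
        self.cache = cache
        self.db_reads = 0                # counts trips to the database

    def get_price(self, sku: str) -> str:
        cached = self.cache.get(sku)
        if cached is not None:
            return cached                # another instance may have stored it
        self.db_reads += 1
        value = f"price-of-{sku}"        # stand-in for a database query
        self.cache.set(sku, value)
        return value


shared = SharedCache()
instance_a = ServiceInstance("a", shared)
instance_b = ServiceInstance("b", shared)

instance_a.get_price("sku-1")   # instance a reads the database and fills the cache
instance_b.get_price("sku-1")   # instance b is served from the shared cache
```

Because both instances see the same entries, the second instance avoids its own database read. With a per-instance cache, each instance would have had to load the value separately.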

Decide how long to keep data

If any data changes, you need to ensure the data residing in the cache stays in sync with the current data stores. Cached data can go stale over time, so you need to decide how long you can tolerate stale data in the cache.

Consider setting a timed lifespan, often called a time to live (TTL), that invalidates data and removes it from the cache after a set limit to prevent it from becoming stale. As a result, a request for expired data will produce a cache miss, but this is acceptable as long as misses don't outnumber successful requests.
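One way to picture a timed lifespan is the Python sketch below. It is an illustration under stated assumptions rather than a recommended implementation: the `TTLCache` class, its `hit_ratio` method and the injectable `clock` parameter are hypothetical names chosen for this example, and expired entries are removed only when they are next requested.

```python
import time
from typing import Any, Callable, Dict, Optional, Tuple


class TTLCache:
    """Cache whose entries expire a fixed number of seconds after being set."""

    def __init__(self, ttl_seconds: float,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self._ttl = ttl_seconds
        self._clock = clock              # injectable so expiry can be simulated
        self._data: Dict[str, Tuple[float, Any]] = {}
        self.hits = 0
        self.misses = 0

    def set(self, key: str, value: Any) -> None:
        # Store the value together with the time at which it expires.
        self._data[key] = (self._clock() + self._ttl, value)

    def get(self, key: str) -> Optional[Any]:
        entry = self._data.get(key)
        if entry is not None:
            expires_at, value = entry
            if self._clock() < expires_at:
                self.hits += 1
                return value
            del self._data[key]          # expired: remove the stale entry
        self.misses += 1
        return None                      # caller should reload from the data store

    def hit_ratio(self) -> float:
        total = self.hits + self.misses
        return self.hits / total if total else 0.0


cache = TTLCache(ttl_seconds=30)
cache.set("sku-1", {"price": 10})
cache.get("sku-1")   # counted as a hit while the entry is younger than 30 seconds
```

Tracking hits and misses, as `hit_ratio` does here, gives you a simple way to check whether the chosen lifespan keeps failed cache requests below successful ones.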