cache server

What is a cache server?

A cache server is a dedicated network server or service acting as a server that saves webpages or other internet content locally. By placing previously requested information in temporary storage -- or cache -- a cache server both speeds up access to data and reduces demand on an enterprise's bandwidth. Cache servers also enable users to access content offline, including rich media files or other documents. A cache server is sometimes called a cache engine.

A proxy server is often also a cache server, as it represents users by intercepting their internet requests and managing them. Typically, these enterprise resources are being protected by a firewall server. That server enables outgoing requests to go out, but screens all incoming traffic.

Because a proxy server helps match incoming messages with outgoing requests, it's perfectly placed to also cache the files that are received for later recall by any user. A proxy server that's also a cache server is often called a caching proxy. The dual function it performs is sometimes referred to as web proxy caching.

To the user, web proxy caching is invisible -- all internet requests and the responses appear to be coming from the addressed place on the internet. But the proxy isn't entirely invisible; its Internet Protocol address must be specified as a configuration option to the browser or other protocol program.

What is caching?

Caching is as old as computing. The first use of this concept was to keep the data that a central processing unit (CPU) is most likely to use in the near term in faster memory. This faster, more expensive memory is often called the cache, a term derived from the older meaning: a place where weapons or other items are hidden.

When the CPU requests data in the cache and that data is found, it's called a cache hit. When the data is not found, it's called a cache miss. When a cache miss occurs, the data must be fetched from the source -- or from another level of cache, if the system has more than one level. Cache misses are overhead, and when they occur too often, they defeat the purpose of caching. A good caching algorithm -- one that might even be tailored to the application -- is needed to minimize the number of cache misses.

Another key concept of caching is that data can become stale. That is, data gets updated at the source, but the cached version of the same data doesn't. In many cases, this isn't a concern because the data never changes. But in other cases, the user needs to see the changes right away or within a reasonable delay. Sometimes the user can specifically request an update, which is what happens when you click the refresh button on your browser. A good caching algorithm manages stale data in a way that's appropriate to the application.

The concept of caching is still very much alive and well in the connected world. Similar techniques are used to store web content on a local computer for quicker access or on servers that are closer to where the content is likely to be consumed next. Cache servers run algorithms that predict which content is needed in which location and determine when source content has changed to update the cache. The best algorithms give users quicker access to content without them knowing they're using a copy of the content.

Content delivery networks (CDNs) rely heavily on caching to provide optimal service to applications, such as video streaming services. A streaming service provider might use an application programming interface to access the services of a CDN, which uses caching algorithms to minimize delays and optimize the use of bandwidth.

What are the different types of caching algorithms?

There are different strategies for caching, each with its own set of advantages. The strategy depends on the type of content, service and usage patterns. For example, the content might be videos and the service a streaming service. The caching strategy would make predictions about usage patterns for a given geographic region and then store video content as close as possible to the users who are most likely to request the content.

Caching algorithms take two things into account. The first consideration is which data to evict when the cache is full. The following are the four types of cache eviction algorithms:

- First in, first out (FIFO). The oldest content is evicted first, without considering how often the data is used. A variation of FIFO is last in, first out, which removes the newest data first.

- Least recently used (LRU). The least recently accessed content is removed first.

- Least frequently used (LFU). The least frequently used content is removed first.

- LFU and LRU combined. The least frequently used content is removed first. When two pieces of content have been used the same number of times, the least recently used content of the two is evicted first.

The second consideration is what to do about stale data. The process of removing stale data from the cache is called cache invalidation. The following are two commonly used techniques for cache data invalidation:

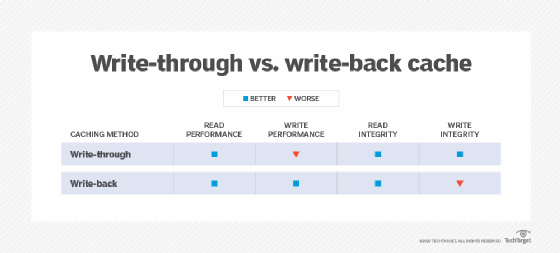

- Write-through cache. The software that updates the data writes the update to the cache first and then immediately to the source. This technique is used when there aren't a lot of updates around the same time.

- Write-back cache. The software that updates the data writes the update to the cache first and then updates the source, but not immediately. It writes to the source only periodically to post several updates at the same time.

Learn how cloud storage caching works and the different types of caching appliances.