The role of sidecars in microservices architecture

Sidecars can do a lot for microservices when it comes to communication with distributed application components, though they also present some precarious management challenges.

In a typical microservices architecture, a multitude of dynamic, distributed services swarm across application systems. These services must often communicate both internally with each other and externally with third-party components. Unfortunately, controlling these numerous and continuous interactions can prove to be an intimidating challenge.

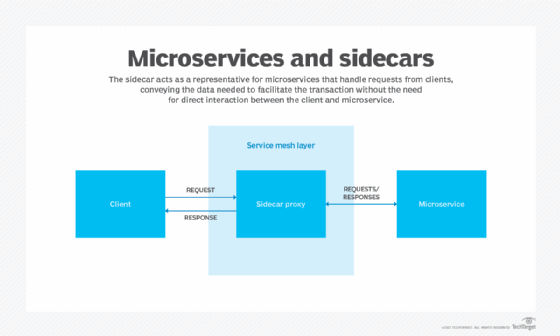

The service mesh pattern, an increasingly popular method of managing distributed services and bringing order to component communications, uses a proxy instance that intercepts and manages interservice communication. This proxy mechanism is most commonly called a sidecar, though it is also referred to as a sidecar proxy or, in some cases, a sidecar container.

This article runs through some of the basics of implementing sidecars in a microservices architecture, including how they work and the benefits they provide. We'll also review some of the pitfalls and best practices that both developers and architects should keep in mind when working with sidecars in a microservices environment.

Service mesh and sidecars

Sidecars are directly associated with the service mesh pattern, which is a low-latency infrastructure layer that manages large volumes of communication between application services. At its core, the service mesh pattern simplifies the handling of cross-cutting concerns like routing, resiliency, security and observability. Some of the most well-known service mesh implementations include Istio, Consul, Nginx Service Mesh and AWS App Mesh.

The sidecar attaches itself to each service within a distributed application and can establish connections to each virtual machine or container instance required to perform routine operations. Sidecars are adept at handling routine tasks that are better off abstracted from individual services, such as monitoring systems and security protocol checks.
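To make the abstraction concrete, here is a minimal in-process sketch of the idea. It is not how any particular mesh implements its proxy: a real sidecar runs as a separate process or container next to the service. The `Sidecar` class, the `x-auth-token` header and `orders_service` are all hypothetical names chosen for illustration.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List


@dataclass
class Request:
    path: str
    headers: Dict[str, str] = field(default_factory=dict)


@dataclass
class Response:
    status: int
    body: str = ""


# A "service" here is just a function that contains business logic only.
Handler = Callable[[Request], Response]


class Sidecar:
    """Hypothetical in-process stand-in for a sidecar proxy."""

    def __init__(self, service: Handler, required_token: str) -> None:
        self._service = service
        self._required_token = required_token
        self.access_log: List[str] = []  # monitoring data kept by the proxy

    def handle(self, request: Request) -> Response:
        # Security check runs before the service ever sees the request.
        if request.headers.get("x-auth-token") != self._required_token:
            response = Response(status=401, body="unauthorized")
        else:
            response = self._service(request)
        # Monitoring: record every request, whether it was allowed or not.
        self.access_log.append(f"{request.path} -> {response.status}")
        return response


def orders_service(request: Request) -> Response:
    # No logging or authentication code lives here.
    return Response(status=200, body="order list")


sidecar = Sidecar(orders_service, required_token="secret")
ok = sidecar.handle(Request("/orders", {"x-auth-token": "secret"}))
denied = sidecar.handle(Request("/orders"))
```

The point of the design is that `orders_service` stays free of monitoring and security code, so those concerns can change without touching the service itself.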

The benefits of sidecars for microservices

Service mesh and sidecars have become an integral part of microservices architectures because they can control service-to-service communication, handle request routing, provide fault tolerance and help maintain high availability. Sidecars can also provide direct support for essential microservices management tasks, such as service discovery and distributed tracing.
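As one illustration of the tracing support mentioned above, a proxy can make sure every request carries a trace identifier before forwarding it, so that downstream hops can be correlated. The sketch below assumes a made-up header name, `x-trace-id`, and a hypothetical helper, `ensure_trace_id`; real meshes typically rely on standardized headers such as the W3C Trace Context `traceparent` header rather than this simplified scheme.

```python
import uuid
from typing import Dict

TRACE_HEADER = "x-trace-id"  # hypothetical header name, for illustration only


def ensure_trace_id(headers: Dict[str, str]) -> Dict[str, str]:
    """Return a copy of the headers that carries a trace ID.

    An existing ID is reused so that all hops of one request share it;
    otherwise a new random ID is generated.
    """
    outgoing = dict(headers)
    if TRACE_HEADER not in outgoing:
        outgoing[TRACE_HEADER] = uuid.uuid4().hex
    return outgoing


first_hop = ensure_trace_id({"accept": "application/json"})
second_hop = ensure_trace_id(first_hop)  # the same ID is propagated
```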

The sidecar also enables components to access services regardless of where those services run or what language they are written in. As a communication proxy mechanism, the sidecar can also act as a translator for cross-language dependency management. This is beneficial not only for distributed applications with particularly complex integration requirements, but also for application systems that rely heavily on external business integrations, management services and development tooling.

Best practices for sidecar implementations

The use of sidecars in microservices architectures brings plenty of benefits, but the technology isn't easy to set up and maintain. When not managed properly, the addition of these abstracted components has the potential to add latency to network communications, rather than streamline them.

In particular, the addition of sidecars to already complex environments can make application development and management more difficult. Developers and operations teams must become familiar with the new service layer, including how it works and how it will potentially change application behavior. This also requires a clear understanding of container management in a distributed microservices system, considering that a service mesh is often deployed on top of container orchestrators like Kubernetes.

Sidecars also carry a measure of risk when it comes to retry mechanisms for failed transactions. Software architecture teams will often give services the ability to retry failed transactions to avoid unnecessarily halting the associated application processes. However, many service mesh implementations also equip sidecars with the same ability. When multiple retry mechanisms run alongside each other, the retries multiply, which can result in duplicated transactions that drain vital resources and drag overall application performance down to problematic levels.
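The multiplication effect is easy to see in a small simulation. The sketch below is illustrative only: `with_retries` and `AlwaysFailingBackend` are hypothetical helpers, and the attempt counts are arbitrary. It layers a service-level retry policy on top of a sidecar-level one and counts how many calls actually reach the failing backend.

```python
class AlwaysFailingBackend:
    """Simulated downstream dependency that counts calls and always fails."""

    def __init__(self) -> None:
        self.calls = 0

    def __call__(self) -> str:
        self.calls += 1
        raise ConnectionError("backend unavailable")


def with_retries(operation, attempts: int):
    """Wrap an operation so it is tried up to `attempts` times (attempts >= 1)."""

    def wrapped():
        last_error = None
        for _ in range(attempts):
            try:
                return operation()
            except ConnectionError as error:
                last_error = error
        raise last_error

    return wrapped


backend = AlwaysFailingBackend()
sidecar_call = with_retries(backend, attempts=3)       # retry policy in the sidecar
service_call = with_retries(sidecar_call, attempts=3)  # retry policy in the service

try:
    service_call()
except ConnectionError:
    pass

total_calls = backend.calls  # 3 service attempts x 3 sidecar attempts = 9
```

One logical request produced nine backend calls. If the operation were a non-idempotent write that sometimes succeeded without the caller noticing, some of those extra attempts could become duplicated transactions, which is why retries are usually best owned by a single layer.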

To get the most out of sidecars in microservices scenarios, and avoid some of the pitfalls noted above, apply these best practices from the get-go:

- Only use sidecars when they're needed. If an application contains only a few basic services that aren't subject to frequent communication failures, a service mesh with sidecars might not be the right choice, as it will likely add unnecessary management overhead.

- Create centralized monitoring and request tracing dashboards. Because sidecars are usually involved with large volumes of distributed traffic, it's imperative to provide development and operations teams with centralized dashboards that allow them to stay on top of observability, perform efficient request tracing and easily access error logs.

- Consider adding extra network security measures. Most service mesh products come with a basic set of security features, such as certificate management, authentication and authorization tools. However, it may be prudent to add and enforce additional network restrictions on sidecars that limit communication between sensitive services that reside in certain containers.
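The extra network restrictions described in the last practice often take the form of a default-deny allow-list. The following is a minimal sketch of that idea rather than the configuration format of any mesh or orchestrator; the service names and the `ALLOWED_CALLERS` table are hypothetical.

```python
from typing import Dict, FrozenSet

# Hypothetical policy: for each destination service, which callers may reach it.
ALLOWED_CALLERS: Dict[str, FrozenSet[str]] = {
    "payments": frozenset({"checkout"}),
    "inventory": frozenset({"checkout", "catalog"}),
}


def is_allowed(source: str, destination: str) -> bool:
    """Default-deny check: traffic passes only if explicitly listed."""
    return source in ALLOWED_CALLERS.get(destination, frozenset())


checkout_to_payments = is_allowed("checkout", "payments")  # listed caller
catalog_to_payments = is_allowed("catalog", "payments")    # not listed
anyone_to_unknown = is_allowed("checkout", "reporting")    # no policy entry
```

Denying by default means a newly added sensitive service is unreachable until someone deliberately grants access, which is generally safer than the reverse.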