NYC's controversial AI bias audit law gets new hearing

New York City's new automated employment decision tool law, designed to root out AI bias in hiring, is having trouble getting off the ground.

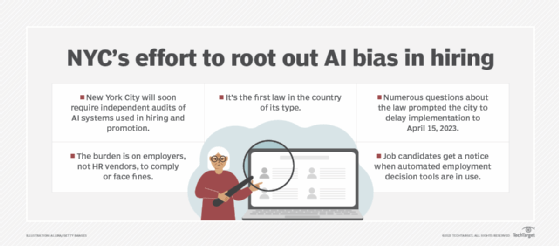

Businesses using artificial intelligence to rank and sort job candidates will have to audit their hiring tools for AI bias, according to a pending New York City law. But controversy over the law's requirements prompted the city to delay its Jan. 1 launch to April 15.

The city has scheduled a new hearing on Jan. 23 to consider revisions to the automated employment decision tools (AEDT) rule. The rule is intended to ensure that members of protected classes, including those based on gender, race and ethnicity, don't face discrimination when employers use AEDT systems to make hiring decisions.

The law, which was passed in 2021 and is the first law in the U.S. of its type, stipulates that employers using AI hiring tools must hire an independent auditor to check for AI bias and notify job seekers that they are using an AEDT system in hiring.

The AI bias law "really does place the ultimate burden on the employer to make sure they are complying with the law," said Matthew Kissling, an employment attorney and partner at Thompson Hine LLP in Cleveland, Ohio.

But some vendors believe the law is too broad and are still determining how it works and whether it even applies to their products.

Criteria Corp. provides testing and structured interview tools, where all candidates are asked the same questions in the same order. It doesn't use AI, but its processes could still be subject to the law, said Brad Schneider, vice president of strategic consulting at the Los Angeles-based company.

Because of how the law is written, it may cover "potentially every test in the world." All scoring uses an algorithm and may be subject to the broad language of the law, whether it uses AI or not, Schneider said.

Schneider believes the New York City law is a reaction to video-based hiring tools that base scoring on emotion or affect recognition or "trivial and non-predictive things."

Hefty fines for non-compliance

Kissling said the law imposes hefty penalties, ranging from $500 to $1,500 per violation. New York counts each day the tool is in use as a separate violation.
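To give a rough sense of how daily violations could add up, the sketch below multiplies the penalty range Kissling cited by the number of days a tool is in use. It is an illustration only: the function name `penalty_range` is hypothetical, and the calculation assumes one violation per day at a flat rate, which may not match how the city would actually assess fines.

```python
def penalty_range(days_in_use, low=500, high=1500):
    """Return (minimum, maximum) total penalties in dollars.

    Assumes one violation per day of use and the $500 to $1,500
    per-violation range cited in the article. Illustrative only.
    """
    if days_in_use < 0:
        raise ValueError("days_in_use must be non-negative")
    return days_in_use * low, days_in_use * high


# Example: a tool left in use for 30 days without complying
minimum, maximum = penalty_range(30)
print(f"${minimum:,} to ${maximum:,}")  # prints "$15,000 to $45,000"
```

Under these assumptions, a single month of non-compliant use could mean exposure in the tens of thousands of dollars for one tool.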

Kissling believes the law will increase employers' scrutiny of AI-enabled HR products, which could be a positive thing for the industry.

But for now, there is much uncertainty about what the law requires.

Joseph Abraham, vice president of assessment solutions at Talogy, a talent management company in Glendale, Calif., has raised questions about the law's practical implications. In a comment to the city, he asked how the rule treats a system that weights data from different sources, such as a hiring score that's based 90% on a structured panel interview rating and 10% on a tool that uses machine learning.
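Abraham's 90/10 scenario can be made concrete with a small sketch. The function and variable names here are hypothetical, and the 0-to-100 scale is an assumption; the article does not describe how any vendor actually combines its scores.

```python
def composite_score(interview_rating, ml_score,
                    interview_weight=0.9, ml_weight=0.1):
    """Weighted hiring score from Abraham's hypothetical example.

    Both inputs are assumed to be on a 0-100 scale. The 90%/10%
    split comes from his comment; everything else is illustrative.
    """
    return interview_weight * interview_rating + ml_weight * ml_score


# Same panel interview rating, opposite extremes from the ML tool
low = composite_score(interview_rating=80, ml_score=0)
high = composite_score(interview_rating=80, ml_score=100)
print(round(low, 1), round(high, 1))  # prints "72.0 82.0"
```

In this sketch the machine learning component can move the final score by at most 10 points, which illustrates the question Abraham raised: whether a tool with that small a share of the outcome falls under the law.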

"Many ambiguities remain in the updated draft of regulations," Abraham said in an email.

Vendors are still interpreting this law and argue that even systems that use AI in hiring may not be subject to it if the hiring process isn't fully automated.

One vendor that uses AI technologies is HiredScore, which believes its technology is unaffected by the law because it doesn't use automated employment decision-making. Human decision-making remains at the heart of the process, according to the company.

Athena Karp, CEO of the New York-based company, said an employer defines job qualifications for the role, candidates submit their information, and then HiredScore's system reads the data and produces a match score.

When recruiters review job applications manually, they typically have time to review fewer than half of the candidates, "meaning a majority will never get the chance for the recruiter to know they are qualified for the role," Karp said. HiredScore's system, by contrast, generates a score for each candidate in a consistent way, and anyone who is qualified will be visible to the recruiter, she said.

"There are no automated decisions," Karp said.

But Karp said many tools fully automate the decision process in hiring, "such as an assessment test, where if you don't pass a certain score, you are never seen by the company."

Patrick Thibodeau covers HCM and ERP technologies for TechTarget Editorial. He's worked for more than two decades as an enterprise IT reporter.