
3 IT and DevOps trends to watch in the second half of 2023

From managing cloud costs in an uncertain economy to embracing AI and other emerging technologies, IT leaders face major challenges as they head into the second half of 2023.

The first half of 2023 has already seen significant uncertainty and transformation in software and IT.

Heading into the second half of the year, financial concerns remain top of mind for organizations. But despite widespread layoffs and an economic slowdown, IT budgets remain strong: Half of organizations are increasing IT spending in 2023, according to recent research from TechTarget's Enterprise Strategy Group (ESG).

In an uncertain economic climate, businesses are balancing the need to manage costs with the potential competitive advantages of adopting emerging tech. In particular, FinOps, platform teams and AI have emerged as key trends this year in the changing landscape of DevOps and IT.

Mixed economic indicators pose challenges for business decisions

The current economic slowdown, although projected to continue into the second half of 2023, isn't a clear-cut recession. Despite slow economic growth and high inflation, the labor market and consumer spending remain strong, and the ongoing AI boom has prompted a recent surge in AI-related tech stocks such as Nvidia and Oracle.

After a cautious start to the year, organizations are beginning to slowly ramp back up, said Paul Nashawaty, principal analyst at ESG: "The first quarter of 2023 slowed down, [but] I see organizations adjusting budgets to start spending." He attributes this to technology's critical role in nearly all industries; as businesses become increasingly reliant on tech, there are limits to how much they can cut IT spending without compromising operations.

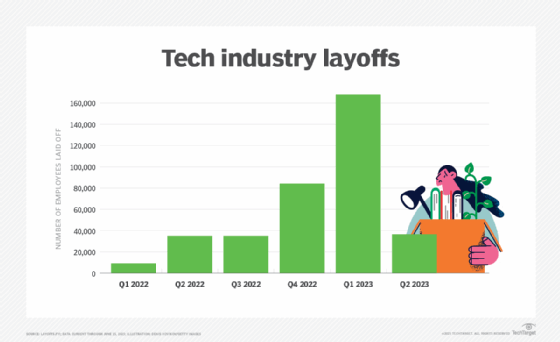

At the workforce level, the widespread tech layoffs that began in 2022 have worsened in 2023. As of mid-June, more than 206,000 tech workers had been laid off -- more than in all of 2022, according to data from tech layoffs tracker Layoffs.fyi. However, the pace of layoffs seems to be slowing, with a significant drop between the first and second quarters of 2023.

Overall, experts echoed the recommendation ESG analyst Bill Lundell made at the end of 2022: Seize opportunities during lean times to ensure a better position once the economy rebounds. For this reason, layoffs -- while perhaps unsurprising as a response to a slow economy -- could prove unwise in the long term. Eliminating staff without a plan to maintain output impairs a company's ability to innovate and retain tech talent, a serious risk in a still-competitive job market.

"If you control your costs, that's good, but if you don't have a plan to maintain or increase your potential throughput, then it doesn't matter that you can lower that cost," said Justin Reock, field CTO at Gradle, a build automation tool vendor, in reference to the recent spate of tech layoffs. "It's not going to have a long-term positive, net positive effect on the health of the business."

Rising cloud costs lead to increased interest in FinOps

As cloud becomes a larger part of enterprise infrastructure footprints, managing spiraling cloud costs is top of mind for many IT organizations. After cybersecurity, cloud initiatives are the top driver of spend in 2023, according to the previously mentioned ESG report. And as cloud's price tag grows, so does interest in cloud cost management.

"There's enormous concern about cloud sprawl, because all of a sudden, people are starting to realize that their cloud budgets are unsustainable," said Charles Betz, vice president and research director at Forrester Research. "There's definitely a lot of interest in at least rationalizing the cloud spend, getting more transparency into it."

At this point, cloud is sufficiently well established that most businesses aren't looking at repatriation. Instead, the focus is on examining cloud contracts and building expertise in cost management methodologies such as FinOps.

Areas such as IT service management have historically been able to count on steady, regular cadences of spend, said Tracy Woo, senior analyst at Forrester. But cloud's dynamic nature means less predictability, requiring a more hands-on approach. "Cost management is trying to shift more and more left," she said. "It's trying to be more and more proactive."

In turn, cloud providers are feeling the pressure to offer more appealing contracts, especially for big-dollar customers. Woo said she's seen "pretty incredible" discounts in 2023 compared with previous years, a change she attributes to slowed growth among the big cloud providers. "While they're still profitable, while their revenue is still growing, it's not growing in these crazy numbers they were in the past," she said.

Amid increasing IT complexity, platform teams are on the rise

Just as organizations are paying closer attention to cloud costs and contracts than they have in the past, IT leaders are beginning to realize the effects of tool sprawl and technical debt on productivity and spending.

"[If] any product team can deploy any cloud they want, they can use any database they want, they can use any monitoring tool they want," Betz said, "pretty soon, you've got a massive, fragmented, insecure environment with spiraling technical debt and non-value-add variation."

Using multiple tools with similar functionalities within the same organization can lead to interoperability, cost and security issues. Betz gave the example of using both Dynatrace and New Relic across different teams within the same organization. Although either monitoring tool might fit the organization's needs, "there's not a business case for running both," he said.

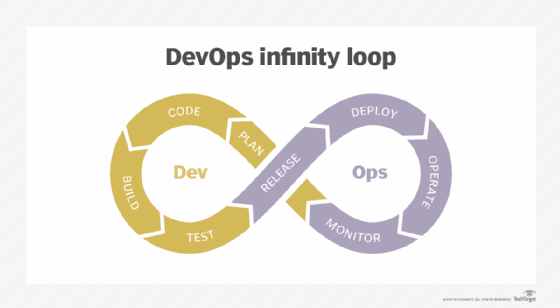

Consequently, organizations are reducing the number of tools they use and standardizing their development processes. The emerging role of platform engineering teams is to design and build platforms: organized groups of resources and services that spare development teams from managing the underlying infrastructure and tools themselves.

Abstracting away portions of the development environment through self-service capabilities can improve productivity and streamline the experience of DevOps users. A more automated, standardized environment is particularly useful for routine tasks that shouldn't require specialized tooling.

"If I'm building Python code or I'm building Node.js code, using whatever current framework, I don't need to be building that stuff from scratch," Betz said. "I should have defined patterns and ultimately, preferably, a defined platform. And my developers should just be able to onboard and start creating value-adding software right away."

In addition to its productivity benefits, the rise in platform teams is also driven by growing awareness of the importance of developer experience among IT leaders. Enabling standardized self-service capabilities through platforms can reduce wasted time and frustration, leading to a better employee experience.

"I think that this all comes back to ... more awareness around the importance of feedback cycles and the importance of developer happiness, and how that relates directly to innovation," Reock said. "You've had more leadership awareness of the importance of developer experience."

Organizations are exploring the potential of AI in DevOps workflows

AI is emerging as a pathway to improving the efficiency of software delivery as well as developer experience. Although experts expressed concerns about AI's current limitations when it comes to applications in DevOps and IT, they were also confident in its transformative long-term potential.

"What's happening right now [with AI] is huge," Woo said. "It is epochal in terms of the level of change it will bring to the organization."

In the broader societal conversation about AI, generative models such as ChatGPT have enjoyed much of the limelight so far. But some of the most promising areas for DevOps, software engineering and IT involve predictive AI, as well as big data analytics and automation. Down the line, combining generative AI with those capabilities could significantly accelerate the pace of software delivery by reducing wasted time, errors and security vulnerabilities.

In a FinOps context, for example, AI could help companies understand spending patterns and predict future resource use, with insights delivered in a human-readable format. For companies that have managed millions of dollars in cloud spend, "that's a ton of data that you can sift through and you can use to build and potentially leverage a large language model [LLM]," Woo said.
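A trained model or LLM is well beyond a short example, but the simplest form of "predict future resource use" is a linear trend fitted to past monthly spend. The sketch below is a plain least-squares projection, not an AI technique, and the spend figures in the usage lines are hypothetical; real cloud bills are seasonal and bursty, so treat it only as a baseline to compare smarter forecasts against.

```python
def linear_trend(values: list[float]) -> tuple[float, float]:
    """Ordinary least-squares fit of values against month index 0..n-1.

    Returns (slope, intercept), where slope is the average change per month.
    """
    n = len(values)
    if n < 2:
        raise ValueError("need at least two months of history")
    x_mean = (n - 1) / 2
    y_mean = sum(values) / n
    sxx = sum((x - x_mean) ** 2 for x in range(n))
    sxy = sum((x - x_mean) * (y - y_mean) for x, y in enumerate(values))
    slope = sxy / sxx
    return slope, y_mean - slope * x_mean


def forecast(values: list[float], months_ahead: int = 1) -> float:
    """Project spend `months_ahead` months past the last observed month."""
    slope, intercept = linear_trend(values)
    return intercept + slope * (len(values) - 1 + months_ahead)


# Hypothetical monthly cloud spend in dollars.
monthly_spend = [100_000, 104_000, 109_000, 113_000]
print(forecast(monthly_spend))  # 117500.0
```

The slope doubles as a simple alert signal: a team could flag any service whose monthly growth exceeds a budgeted rate before the invoice arrives.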

Such analysis could inform a business's cost management decisions: identifying tasks that could be performed more efficiently, generating forward-looking recommendations based on previous patterns or automatically performing certain actions to cut costs. For example, a cloud team could use existing AI tools to generate a loop that finds unused instances and shuts them down -- although that code would still require human review.

"You probably wouldn't be able to implement it right away," Woo said. "You'd probably have to look at it and review the code. But that's probably something that could happen right now."
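The kind of loop Woo describes might look something like the following sketch. The article doesn't say which signals define "unused" or which provider API would stop the instances, so this assumes hypothetical per-instance utilization summaries and thresholds, and it deliberately calls no cloud API: it only produces a plan for a human to review.

```python
from dataclasses import dataclass


@dataclass
class InstanceMetrics:
    """Hypothetical utilization summary for one instance over a lookback window."""
    instance_id: str
    avg_cpu_percent: float
    network_mb_per_day: float


def find_idle_instances(metrics, cpu_threshold=2.0, network_threshold=1.0):
    """Return IDs of instances whose CPU and network use both fall below the thresholds."""
    return [
        m.instance_id
        for m in metrics
        if m.avg_cpu_percent < cpu_threshold and m.network_mb_per_day < network_threshold
    ]


def shutdown_plan(instance_ids):
    """Describe proposed actions instead of executing them, so a human can review first."""
    return [f"stop {iid} (pending review)" for iid in instance_ids]


# Hypothetical fleet data; instance IDs are made up for illustration.
fleet = [
    InstanceMetrics("i-web-01", avg_cpu_percent=37.5, network_mb_per_day=820.0),
    InstanceMetrics("i-batch-07", avg_cpu_percent=0.4, network_mb_per_day=0.2),
    InstanceMetrics("i-test-12", avg_cpu_percent=1.1, network_mb_per_day=5.0),
]
for line in shutdown_plan(find_idle_instances(fleet)):
    print(line)  # stop i-batch-07 (pending review)
```

Requiring both CPU and network to be low is a conservative choice: `i-test-12` is nearly idle on CPU but still moving data, so it is left alone.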

Integrating AI into the DevOps lifecycle

Waiting on builds and tests is one of the most time-consuming activities in development workflows, second only to writing code, according to a recent GitHub survey. And the amount of useful information gained isn't always proportional to the time invested.

In many cases, the majority of tests simply succeed -- "which is good, but also a waste of time," Reock said. "It's validation, but the developers sat there and had to wait. If they had some way of predicting whether a test was likely to provide valuable feedback on this run without having to run it ... then you can save a ton of time."

AI is making inroads in an area of automated testing known as predictive test selection, which analyzes historical changes in a codebase to assess the probability that a particular test will provide valuable feedback. Teams can then run only the tests most likely to be fruitful, avoiding wasted time and effort.
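To make the idea concrete, here is a deliberately simple, frequency-based sketch rather than the machine learning models commercial tools use. It assumes CI history is available as hypothetical (changed files, failed tests) pairs, estimates how often each test failed when a given file changed, and runs a test only if that smoothed estimate clears a threshold. Files the history has never seen score 0.5, so unfamiliar changes fall back to running everything.

```python
from collections import Counter, defaultdict


def train(history):
    """history: iterable of (changed_files, failed_tests) pairs from past CI runs."""
    changed = Counter()            # file -> number of runs in which it changed
    co_fail = defaultdict(Counter)  # file -> test -> failures in those runs
    for changed_files, failed_tests in history:
        for f in changed_files:
            changed[f] += 1
            co_fail[f].update(failed_tests)
    return changed, co_fail


def score(model, changed_files, test, alpha=1.0):
    """Highest Laplace-smoothed estimate of P(test fails | file changed)."""
    changed, co_fail = model
    best = 0.0
    for f in changed_files:
        fails = co_fail[f][test] if f in co_fail else 0
        best = max(best, (fails + alpha) / (changed[f] + 2 * alpha))
    return best


def select_tests(model, changed_files, all_tests, threshold=0.2):
    """Keep only tests whose estimated failure probability meets the threshold."""
    return [t for t in all_tests if score(model, changed_files, t) >= threshold]


# Hypothetical history: parser changes often break test_parser; ui changes break nothing.
history = [({"parser.py"}, {"test_parser"})] * 3 + [({"parser.py"}, set())] + [({"ui.py"}, set())] * 4
model = train(history)
print(select_tests(model, {"parser.py"}, ["test_parser", "test_ui"]))  # ['test_parser']
```

The threshold trades speed against risk: lowering it runs more tests and misses fewer regressions.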

Similarly, AI could assist humans in security testing when combined with fuzzing, a technique that involves feeding invalid inputs to a software program. Those malformed and otherwise unexpected inputs can expose vulnerabilities that might escape traditional testing approaches. The problem, however, is that the vast majority of those inputs don't actually result in odd system behavior.

"You end up with 99.99999% totally useless bits of corpus, and maybe that one that does something unexpected," Reock said. "And even if it does something unexpected, you don't know that's bad. ... [The program] reacted to this, but does that mean this could be exploited in any way? Is this actually a vulnerability?"
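A basic mutation fuzzer, with no AI involved, shows where that noise comes from. The sketch below randomly overwrites bytes of a valid seed input and records only the inputs that make a target raise something other than its documented rejection error. The target, `parse_length_prefixed`, is a hypothetical parser with a deliberately planted bug: it trusts a length byte without checking it.

```python
import random


def parse_length_prefixed(data: bytes) -> bytes:
    """Hypothetical target: the first byte declares the payload length."""
    if not data:
        raise ValueError("empty input")  # documented, expected rejection
    length = data[0]
    payload = data[1:]
    # Planted bug: the declared length is never checked against the real payload,
    # so indexing the last declared byte raises IndexError on short payloads.
    _ = payload[length - 1] if length else None
    return payload[:length]


def mutate(seed: bytes, rng: random.Random, max_flips: int = 3) -> bytes:
    """Overwrite 1..max_flips random positions of the seed with random byte values."""
    data = bytearray(seed)
    for _ in range(rng.randint(1, max_flips)):
        data[rng.randrange(len(data))] = rng.randrange(256)
    return bytes(data)


def fuzz(target, seed: bytes, iterations=1000, expected=(ValueError,), rng_seed=0):
    """Return (input, exception name) pairs for inputs that fail unexpectedly."""
    rng = random.Random(rng_seed)
    crashes = []
    for _ in range(iterations):
        candidate = mutate(seed, rng)
        try:
            target(candidate)
        except expected:
            continue  # rejected the documented way; not interesting
        except Exception as exc:
            crashes.append((candidate, type(exc).__name__))
    return crashes


crashes = fuzz(parse_length_prefixed, seed=b"\x03abc")
print(f"{len(crashes)} of 1000 mutated inputs crashed the parser")
```

Even here, the harness only reports that an input crashed. Deciding whether an `IndexError` is exploitable is the triage step Reock describes, and it is where smarter classification could help.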

As with predictive test selection, AI techniques such as deep learning could help improve classification and corpus generation in fuzz testing. "When you see something like this mature, the vision for this is pretty radical," Reock said. "You could literally start testing your code without ever writing any unit tests."

Combined with LLMs, this could make for a much more automated, user-friendly DevOps process. The open source tool OpenRewrite, for example, automatically identifies and remediates exploitable code patterns. When OpenRewrite auto-patches vulnerabilities at scale, the resulting builds sometimes fail. Identifying patterns in thousands of error messages is overwhelming and time-consuming -- but an LLM could classify and interpret failure messages, and then provide output in a human-readable format.
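An LLM-based classifier is beyond a short sketch, but a common first step before anyone, human or model, interprets thousands of failures is to collapse messages that differ only in file paths and numbers into shared signatures. The regular expressions and sample messages below are assumptions chosen for illustration, not a general-purpose build-log parser.

```python
import re
from collections import Counter

# Applied in order: paths first, then hex values, then any remaining digit runs.
_NORMALIZERS = [
    (re.compile(r"(?:[A-Za-z]:)?(?:[\w.-]+[/\\])+[\w.-]+"), "<PATH>"),
    (re.compile(r"\b0x[0-9a-fA-F]+\b"), "<HEX>"),
    (re.compile(r"\d+"), "<N>"),
]


def signature(message: str) -> str:
    """Replace volatile details so that similar failures share one signature."""
    sig = message.strip()
    for pattern, placeholder in _NORMALIZERS:
        sig = pattern.sub(placeholder, sig)
    return sig


def group_failures(messages):
    """Return (signature, count) pairs, most frequent first."""
    return Counter(signature(m) for m in messages).most_common()


# Hypothetical build failures.
build_log = [
    "src/main/java/com/acme/Foo.java:42: error: cannot find symbol",
    "src/main/java/com/acme/Bar.java:7: error: cannot find symbol",
    "Execution failed for task ':app:test'. 3 tests failed",
    "Execution failed for task ':app:test'. 12 tests failed",
]
for sig, count in group_failures(build_log):
    print(count, sig)
```

Feeding a handful of grouped signatures, rather than thousands of raw lines, to a summarizer is also what keeps the input small enough for an LLM to explain.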

Rather than a developer filing a ticket with the CI team, an AI assistant could give the developer a natural language explanation of the most likely reason their build failed, saving time for everyone involved. That scenario exemplifies what many in the industry see as the ideal role for AI in software and IT: a way to reduce tedium and mitigate pressure on overloaded employees.

"What we're really talking about is reduction of toil, right?" Reock said. "I think what we can really start doing -- and we don't do enough of this as an industry, period -- is start paying attention to the work that we're doing day to day. ... I think that if we do this, we'll find a ton of room for deep learning and other types of AI models to eliminate that toil so that we can focus on the higher-level work."