Container-native infrastructure: The missing link between DevOps and enterprise scale

Configuration drift breaks containers in production. RHEL 10 image mode applies container principles to Linux systems for uniform OS environments across fleets with minimal risk.

Despite the rapid adoption of application containers, the "but it works in the development environment" syndrome is still prevalent due to OS upgrades relying on sequentially executed deployment procedures taking place on each server individually.

Application containers depend on multiple host OS components for reliable operation. These include the kernel, container runtime, network stack, storage subsystem, system services and host libraries.

Flawless container performance requires consistent configuration across all these critical Linux components. Inconsistent configuration can lead to the same application running fine on one server node, while throwing errors, degrading in performance or even exposing security vulnerabilities on another. This introduces significant operational risk that prevents organizations from optimally benefiting from the reliability, scalability and consistent performance they expect from modern application environments. The manual labor required to address this risk and debug applications as needed absorbs important staff hours that otherwise could be dedicated to producing better products.

There is one key culprit causing this dilemma: configuration drift.

Automated configuration management is not enough

It is critical to manage OSes at the fleet level instead of individually to ensure absolute consistency between them. Every time an administrator touches the OS on one specific server, this server risks becoming a "snowflake."

While administrators typically no longer make manual configuration changes, they instead leverage automation scripts based on Ansible or Terraform. However, executing these automation workflows on individual servers separately opens the door for creating differences in OS configuration through several mechanisms:

- Use of different versions of the same playbook.

- Task execution failures or partial failures.

- Environment-specific variable configurations that introduce unintended variations across server roles.

- Timing-based execution where automation runs complete successfully on some nodes, while network connectivity issues or resource constraints cause incomplete deployments on others.

The negative business impact of configuration drift

Configuration drift lowers the organization's ability to productively develop and deliver the best possible software to customers by introducing hidden risk that is hard to quantify. This risk can quickly spoil new software releases and negatively impact the resilience, compliance and security of the currently running software. These risks include the following:

- Application code breaks. The same application code might run on one Linux node but not on another. This is a particularly insidious issue in a Kubernetes context, where applications can often be scheduled across different nodes.

- Restoration from backup not working. A lack of coordination between system changes and backup jobs often prevents the restoration of the application stack from backup when needed.

- Inconsistent observability and monitoring. Monitoring agents, logging configurations and observability tools drift across nodes with different versions, collection intervals and custom configurations. This inconsistency creates blind spots in system visibility that mask performance issues and security incidents until they escalate to business-critical failures.

- Hidden security vulnerabilities. Security patches are inconsistently applied across the infrastructure, leaving some nodes protected, while others remain vulnerable to known exploits. This creates an unpredictable attack surface that security teams cannot accurately assess or remediate systematically.

- Lack of a complete audit trail. Manual system modifications bypass change management processes and leave no documentation trail. This causes compliance audits to fail when auditors discover undocumented configurations that cannot be traced to authorized changes or business justifications.

- Inconsistent performance characteristics. System performance varies unpredictably between nodes due to different kernel parameters, resource limits and optimization settings. This makes capacity planning impossible and causes application performance to degrade randomly as workloads migrate across the infrastructure.

Immutability is critical for scalability: RHEL 10 image mode brings a declarative paradigm shift

Immutability (statelessness) is a big part of application containers' claim to fame. Once deployed, these containers should only be updated by a CI/CD process pulling a new stateless image from the container registry. Just like directly editing individual application containers is a big no-no, the same should be the case for making changes to individual OS instances.

RHEL 10 image mode is not just another new feature to incrementally increase the feature range of Red Hat's flagship OS. Image mode is the basis for a paradigm shift in managing OSes. Instead of fighting the uphill battle of separately remediating the same issues on large numbers of servers, image mode offers declarative OS management following GitOps principles:

- Universal source of truth. Instead of delivering the OS to the server in the form of a bootable OS image that looks different for every server role, RHEL image mode centrally defines all configurations and binaries needed for the entire corporate server fleet in a single Containerfile.

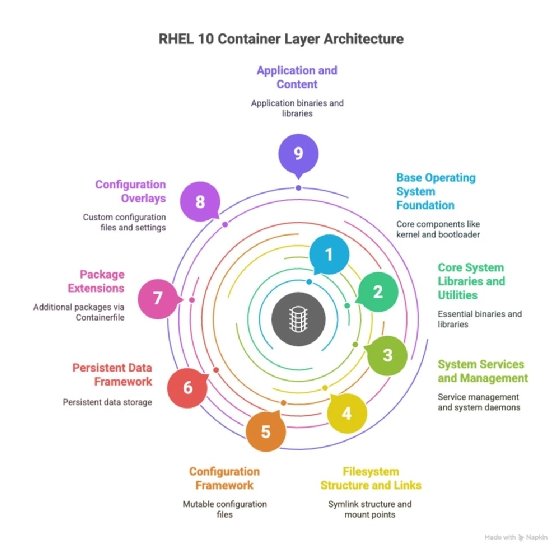

- Policy-driven dynamic adjustments. Depending on the server role, only the required container layers are deployed, instead of having to execute automation scripts for role-specific installation.

- Security hardening. When changes occur, security policies are embedded in container layers and automatically rolled out across the entire fleet. Traditionally, administrators needed to use configuration management systems to install new policies.

- Network and storage configuration. Traditionally, the network interface had to be configured, storage mounted and firewall rules defined. In the new declarative approach (image mode), the network configuration is included as image layers, which are automatically distributed by the container orchestration platform -- typically Kubernetes.

- Package deployment. The traditional deployment approach relies on sequentially installing role-specific application and system packages, while image mode allows the parallel download of only the required layers.

- System optimization. The traditional approach requires the configuration of kernel parameters, resource limits and performance settings to be applied. In contrast, image mode defines these parameters in a declarative manner in a Containerfile that is then tested and validated in the CI/CD pipeline, eliminating the need to make direct changes to the server.

- Testing. Traditionally, each freshly configured server node must be tested, security scanned and performance validated. In a declarative approach, automated testing is part of the deployment pipeline.

While the complete deployment process contains multiple additional steps, these examples illustrate the fundamental difference between the two approaches. The traditional approach relies on the sequential automation of the deployment process that happens on the server. In contrast, the container image-based approach uses one central, immutable and version-controlled container image directly pulled from a central registry.

The business impact of total OS consistency

Red Hat's declarative approach toward OS management, by definition, creates perfectly consistent server nodes. While this is important for the reliability of traditional enterprise applications, it is crucial for Kubernetes-based environments. For organizations to take advantage of the ability to rapidly deploy, scale, move and terminate their applications on Kubernetes, they need to rely on a consistent state of the OS to prevent loading up on unpredictable operational risk.

In a nutshell, image mode simplifies OS management, while guaranteeing a fully consistent corporate Linux fleet. This declarative approach comes with the nice side effect of a complete audit trail, which can be crucial for meeting regulatory requirements. At the same time, it conclusively resolves the "but it runs in our development environment" problem.

Torsten Volk is principal analyst at Enterprise Strategy Group, now part of Omdia, covering application modernization, cloud-native applications, DevOps, hybrid cloud and observability.

Enterprise Strategy Group is part of Omdia. Its analysts have business relationships with technology providers.