
3 options to use Kubernetes and containers for edge computing

While every deployment is unique, these general guidelines can help IT teams determine whether -- and how -- to incorporate Kubernetes and containers into an edge computing strategy.

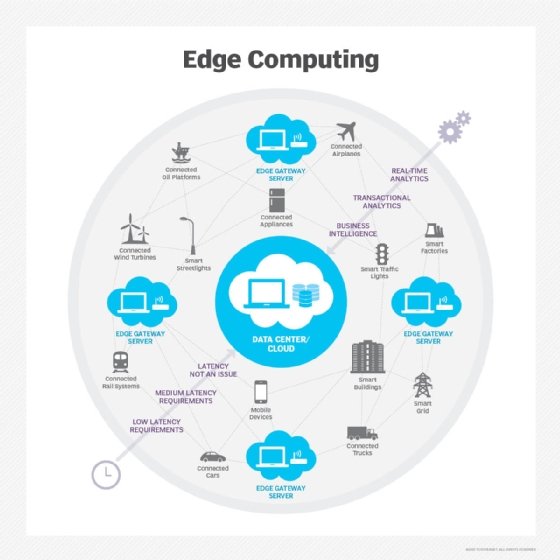

Enterprises have different views of edge computing, but few rule out the possibility they'll deploy application components to the edge in the future, particularly for IoT and other low-latency applications.

Many organizations also see Kubernetes as the ideal way to run containers in edge computing environments, particularly those that have already adopted the container orchestration system for their cloud and data center needs.

There are three general rules for using Kubernetes in edge computing:

- Avoid Kubernetes in edge models that lack resource pools. Kubernetes is designed to manage container deployment across clusters, which are a form of resource pool, so it offers no real benefit where no pool exists.

- Think of edge Kubernetes as a special use case within a broader Kubernetes deployment.

- Avoid specialized Kubernetes tools designed for edge hosting unless there is a significant edge resource pool.

In addition to these three general rules, there are three primary deployment options for running Kubernetes and containers in edge environments.

Option 1: The public cloud

In this model, a public cloud provider hosts the edge environment, or the environment is an extension of public cloud services.

The typical use case for this option is to enhance interactivity in cloud front ends. In this scenario, the edge is an extension of the public cloud, so an organization's Kubernetes deployment practices should fit the cloud provider's offerings. A cloud provider's edge support can include on-premises edge devices, such as AWS Snowball, that integrate with the provider's public cloud services.

Public cloud edge hosting is almost always supported by extending one of the cloud's hosting options (VMs, containers or serverless functions) to the edge, which means Kubernetes doesn't see the edge as a separate cluster. This approach is fairly easy to implement, but it might require Kubernetes scheduling policies, such as node affinity rules or taints and tolerations, to direct edge components to edge resources. Be careful: if the goal of edge computing is to reduce latency, don't let edge resources spread too far from the elements they control.
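To make the scheduling-policy point concrete, here is a minimal Python sketch that builds a Pod manifest pinned to edge nodes. It assumes the edge nodes carry a hypothetical label `example.com/site-type=edge` and a hypothetical taint `example.com/edge=true:NoSchedule`; the label key, taint key, Pod name and image are placeholders, not conventions from any cloud provider. The taint keeps general workloads off edge nodes, the toleration lets this Pod onto them, and the required node affinity keeps it from landing anywhere else.

```python
import json

# Hypothetical label and taint keys; substitute whatever your cluster actually uses.
EDGE_LABEL_KEY = "example.com/site-type"
EDGE_LABEL_VALUE = "edge"
EDGE_TAINT_KEY = "example.com/edge"


def edge_pod_manifest(name: str, image: str) -> dict:
    """Build a Pod manifest that can only be scheduled onto edge nodes.

    - The toleration permits scheduling onto nodes tainted
      example.com/edge=true:NoSchedule.
    - The required node affinity restricts scheduling to nodes
      labeled example.com/site-type=edge.
    """
    return {
        "apiVersion": "v1",
        "kind": "Pod",
        "metadata": {"name": name, "labels": {"app": name}},
        "spec": {
            "containers": [{"name": name, "image": image}],
            "tolerations": [
                {
                    "key": EDGE_TAINT_KEY,
                    "operator": "Equal",
                    "value": "true",
                    "effect": "NoSchedule",
                }
            ],
            "affinity": {
                "nodeAffinity": {
                    "requiredDuringSchedulingIgnoredDuringExecution": {
                        "nodeSelectorTerms": [
                            {
                                "matchExpressions": [
                                    {
                                        "key": EDGE_LABEL_KEY,
                                        "operator": "In",
                                        "values": [EDGE_LABEL_VALUE],
                                    }
                                ]
                            }
                        ]
                    }
                }
            },
        },
    }


if __name__ == "__main__":
    manifest = edge_pod_manifest("edge-frontend", "registry.example.com/edge-frontend:1.0")
    # kubectl accepts JSON manifests as well as YAML.
    print(json.dumps(manifest, indent=2))
```

Under those assumptions, the nodes would be prepared with `kubectl label nodes <node> example.com/site-type=edge` and `kubectl taint nodes <node> example.com/edge=true:NoSchedule`, and the script's output could be piped to `kubectl apply -f -`. A toleration on its own only permits scheduling onto tainted nodes and doesn't require it, which is why the sketch pairs it with node affinity.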

Option 2: Server facilities outside the data center

This approach involves an edge deployment in a server facility -- or facilities -- outside an organization's own data center.

The dominant use case for this edge model is industrial IoT, where edge processing requirements are significant, at least enough to justify placing servers at locations such as factories and warehouses. In this case, there are two options: treat each edge hosting point as a separate cluster, or treat edge hosting as simply part of the main data center cluster.

Where edge hosting supports a variety of applications, meaning each edge site is truly a resource pool, consider a specialized Kubernetes-based platform such as KubeEdge, which is optimized for edge-centric missions. Also determine whether your edge applications are tightly coupled with data center Kubernetes deployments, and whether the edge and data center would, in some cases, back each other up.

In many edge deployments, the edge acts almost as a client that runs only specialized applications, rather than a resource pool. In this case, it might not be necessary to integrate the Kubernetes clusters. Otherwise, consider Kubernetes federation as a means to unify edge and data center policies for deployment.

Option 3: Specialized appliances

In this case, the edge model consists of a set of specialized appliances designed to be in a plant or processing facility.

Many specialized edge devices are based on ARM microprocessors rather than on server-centric x86 chips from Intel or AMD. In many cases, these devices are tied tightly to IoT devices, meaning that each edge device has its own community of sensors and controllers to manage. Because the applications on these devices rarely change, there's less benefit to containerization in general, or to Kubernetes deployment specifically. The most common use case for this model is smart buildings.

Non-server edge devices are often paired with a version of Kubernetes designed for a small device footprint, such as K3s. However, some specialized edge devices might not require orchestration at all. If the device can run multiple applications, concurrently or separately, or if a set of these devices hosts cooperative application components, then consider K3s to orchestrate deployments. If neither condition is true, simply load applications onto the devices, with local or network storage as needed.
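The K3s rule of thumb above reduces to a simple check. This Python sketch encodes it; the `EdgeSite` type and its field names are hypothetical stand-ins for however a team actually inventories its appliances, and the check deliberately ignores other factors such as hardware limits or update tooling.

```python
from dataclasses import dataclass


@dataclass
class EdgeSite:
    """Hypothetical inventory record for a specialized edge appliance or a set of them."""

    runs_multiple_apps: bool            # apps run concurrently or one after another
    hosts_cooperative_components: bool  # devices host cooperating parts of one application


def orchestration_choice(site: EdgeSite) -> str:
    """Recommend K3s if either condition from the text holds; otherwise
    recommend loading applications directly onto the device."""
    if site.runs_multiple_apps or site.hosts_cooperative_components:
        return "k3s"
    return "load applications directly"


if __name__ == "__main__":
    # A single-purpose controller with no cooperating peers.
    controller = EdgeSite(runs_multiple_apps=False, hosts_cooperative_components=False)
    print(orchestration_choice(controller))  # load applications directly
```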

In some cases, the edge components of applications are tightly coupled with application components run in the data center. This might require admins to deploy edge and data center components in synchrony, with a common orchestration model over both. In this situation, either incorporate the edge elements into the primary data center cluster and use policies to direct edge component hosting to the right place, or make the edge a separate cluster and deploy and orchestrate it through federation.
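For the federated variant, one option is KubeFed, whose `FederatedDeployment` resource wraps an ordinary Deployment template with a list of target clusters and optional per-cluster overrides. The Python sketch below generates such a manifest, assuming KubeFed's `types.kubefed.io/v1beta1` API is installed in the host cluster; the cluster names, namespace, image and replica counts are hypothetical. KubeFed is only one federation approach, and its upstream project has been archived, so check current multicluster tooling before adopting it.

```python
import json


def federated_deployment(name: str, namespace: str, image: str,
                         clusters: list[str], replica_overrides: dict[str, int],
                         default_replicas: int = 3) -> dict:
    """Build a KubeFed FederatedDeployment that places one Deployment template
    on several member clusters, overriding the replica count per cluster."""
    unknown = set(replica_overrides) - set(clusters)
    if unknown:
        raise ValueError(f"overrides reference clusters not in placement: {sorted(unknown)}")
    labels = {"app": name}
    return {
        "apiVersion": "types.kubefed.io/v1beta1",
        "kind": "FederatedDeployment",
        "metadata": {"name": name, "namespace": namespace},
        "spec": {
            # An ordinary Deployment (metadata + spec) used for every cluster.
            "template": {
                "metadata": {"labels": labels},
                "spec": {
                    "replicas": default_replicas,
                    "selector": {"matchLabels": labels},
                    "template": {
                        "metadata": {"labels": labels},
                        "spec": {"containers": [{"name": name, "image": image}]},
                    },
                },
            },
            # Member clusters (data center and edge) that receive the Deployment.
            "placement": {"clusters": [{"name": c} for c in clusters]},
            # JSON-pointer overrides applied to the template per cluster.
            "overrides": [
                {"clusterName": c,
                 "clusterOverrides": [{"path": "/spec/replicas", "value": n}]}
                for c, n in replica_overrides.items()
            ],
        },
    }


if __name__ == "__main__":
    fd = federated_deployment(
        "telemetry", "iot", "registry.example.com/telemetry:1.0",
        clusters=["datacenter", "edge-plant-1"],
        replica_overrides={"edge-plant-1": 1},  # smaller footprint at the edge site
    )
    print(json.dumps(fd, indent=2))
```

Keeping the edge and data center components in one template is what delivers the "synchrony" the text describes: a single change to the template rolls out to every placed cluster, while overrides absorb the differences in edge capacity.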

Finalize a strategy

Containers and orchestration are tools to efficiently use a resource pool. For enterprises whose edge computing model creates small server farms at the edge, Kubernetes and edge computing make good partners, and a separate, edge-specific Kubernetes strategy, federated with the primary Kubernetes deployment, is a good idea.

If the edge environment is more specialized, but still must be deployed and managed in combination with primary application hosting resources -- whether in the cloud or data center -- fit the edge into an existing Kubernetes deployment as a type of host. Where the edge is specialized and largely independent, consider not using containers in edge computing at all.
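The closing guidance can be summarized as a small decision function. This is a simplification for illustration only: the two boolean inputs are hypothetical stand-ins for the judgment calls described above, not a formal test.

```python
def edge_strategy(edge_has_server_farm: bool, managed_with_core_hosting: bool) -> str:
    """Map the closing guidance onto two yes/no questions.

    edge_has_server_farm: the edge site is a real resource pool (a small server farm).
    managed_with_core_hosting: the edge must be deployed and managed together
        with cloud or data center hosting.
    """
    if edge_has_server_farm:
        # Resource pool at the edge: give it its own cluster and federate it.
        return "separate edge cluster, federated with the primary deployment"
    if managed_with_core_hosting:
        # Specialized but coupled: treat edge nodes as a host type in the existing cluster.
        return "edge as a host type in the existing cluster"
    # Specialized and largely independent: containers may not be worth it.
    return "consider no containers at the edge"


if __name__ == "__main__":
    print(edge_strategy(edge_has_server_farm=False, managed_with_core_hosting=True))
```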