
Combine containers and serverless to optimize app environments

Rather than pick a side in the debate around containers vs. serverless computing, DevOps shops would benefit from putting both of these complementary technologies to use.

IT professionals are sometimes quick to view every new technology not only as an improvement, but as a replacement for all the comparable tools and platforms that came before it. Serverless functions have received this treatment in comparisons with other deployment environments, such as VMs and containers.

The debate around serverless computing vs. containers is often framed in either-or terms when, in reality, each has its place in DevOps environments. Serverless functions abstract away some of the drawbacks of containers, such as infrastructure management overhead and networking complexity, but they bring constraints of their own.

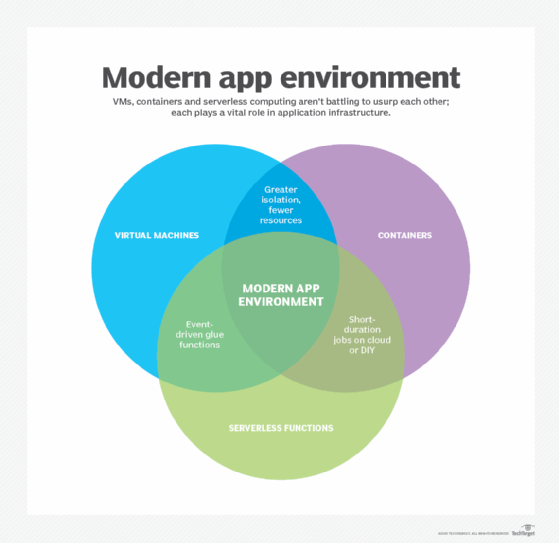

VMs, containers and serverless platforms aren't mutually exclusive, and modern application designs can exploit all three (as illustrated in the diagram above). It's up to DevOps professionals and application developers to choose the right tool for the job.

Compared to containers, VMs provide greater isolation and better compatibility with legacy applications. Containers, however, start faster and use fewer resources. Serverless eliminates fussing with infrastructure, but event-driven functions are only appropriate for short-duration jobs. That makes them ideal as glue functions: small functions that connect different system components, such as the persistent applications and modules that run on VMs or containers.

How containers and serverless work

Containers are isolated virtual packages that share a base OS and are used to deploy and run applications in a DevOps environment. There are six primary components of an operational container ecosystem: the runtime engine; application images and image repository; a cluster manager and workload orchestrator; image registry and service discovery; a virtual network overlay, load balancer and distributor; and system hardware.

Together, these provide isolated application execution environments with a shared OS kernel to dispatch, scale and manage multiple applications on a shared cluster. Containers provide a virtual application environment with enough isolation for most operating situations, without the overhead of a full guest VM.
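The orchestrator's job of dispatching workloads onto a shared cluster can be illustrated with a toy placement routine. This sketch assumes a simple first-fit rule: each container's CPU and memory request goes to the first node with enough spare capacity. Real orchestrators such as Kubernetes filter and score nodes in far more elaborate ways, and the node names and request sizes here are hypothetical.

```python
from dataclasses import dataclass, field


@dataclass
class Node:
    name: str
    cpu_millicores: int  # total CPU capacity (1000 = one core)
    memory_mib: int      # total memory capacity
    placed: list = field(default_factory=list)

    def free_cpu(self) -> int:
        return self.cpu_millicores - sum(c.cpu_millicores for c in self.placed)

    def free_memory(self) -> int:
        return self.memory_mib - sum(c.memory_mib for c in self.placed)


@dataclass
class ContainerRequest:
    name: str
    cpu_millicores: int
    memory_mib: int


def first_fit(requests, nodes):
    """Place each request on the first node with room; return the ones that didn't fit."""
    unplaced = []
    for req in requests:
        for node in nodes:
            if node.free_cpu() >= req.cpu_millicores and node.free_memory() >= req.memory_mib:
                node.placed.append(req)
                break
        else:
            # No node had enough spare capacity for this request.
            unplaced.append(req)
    return unplaced


if __name__ == "__main__":
    cluster = [Node("node-a", 2000, 4096), Node("node-b", 1000, 2048)]
    pending = [
        ContainerRequest("web", 500, 512),
        ContainerRequest("api", 1000, 1024),
        ContainerRequest("worker", 1000, 2048),
        ContainerRequest("batch", 2000, 1024),
    ]
    leftover = first_fit(pending, cluster)
    for node in cluster:
        print(node.name, [c.name for c in node.placed])
    print("unplaced:", [c.name for c in leftover])
```

With these numbers, `web` and `api` land on `node-a`, `worker` spills over to `node-b`, and `batch` stays pending because no node has two free cores, which is the point at which an operator (or an autoscaler) would add capacity.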

Serverless is a new and misleading label for an old concept: running applications or scripts on demand without provisioning the runtime infrastructure beforehand. SaaS apps, such as Google Docs, might be considered serverless; when users create a document, they don't have to provision the back-end system that runs the application. Serverless extends this concept to application code, abstracting it from the infrastructure services it relies on, such as storage, databases, machine learning systems and streaming data processing. Google Cloud emphasizes that serverless isn't limited to event-driven code execution; it also includes many of its IaaS and PaaS products that instantiate and terminate on demand and don't require prior setup.

On cloud serverless platforms like AWS Lambda and Azure Functions, code runs in response to an event trigger, such as a message arriving on a queue or a notification service. These functions typically handle short-duration jobs: data acquisition, filtering and transformation, application integration and user input processing.
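As an illustration of the filtering-and-transformation case, here is a minimal sketch of a Python Lambda-style handler fed by an Amazon SQS queue. It assumes the standard SQS event shape, in which each message arrives in `event["Records"]` with its payload in the `body` string; the `sensor_id` and `temp_c` fields and the 100-degree cutoff are hypothetical. With an SQS trigger the platform ignores this return value, so it is here only to make local testing easy.

```python
import json


def handler(event, context):
    """Filter and transform queued sensor readings (hypothetical message schema)."""
    readings = []
    for record in event.get("Records", []):
        payload = json.loads(record["body"])
        # Filter: drop readings outside a plausible range (hypothetical cutoff).
        if abs(payload["temp_c"]) > 100:
            continue
        # Transform: add a Fahrenheit value for downstream consumers.
        readings.append({
            "sensor_id": payload["sensor_id"],
            "temp_c": payload["temp_c"],
            "temp_f": round(payload["temp_c"] * 9 / 5 + 32, 1),
        })
    return {"accepted": len(readings), "readings": readings}
```

Locally, the handler can be exercised by passing a hand-built event with a couple of `Records` entries and `None` for the context.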

Although the implementations of serverless products vary, they generally run functions inside containers or similarly lightweight isolated environments, such as microVMs, that are invisible to users.

Pros and cons of containers and serverless

Despite the advantages of container environments compared to VMs -- such as faster startup times and lower resource utilization -- they aren't without some drawbacks.

Chief drawbacks of containers include:

- Infrastructure and management overhead is still high for a nontrivial implementation that includes a cluster of servers; cluster management and orchestration software, such as Kubernetes; and associated image repositories and registries.

- It's often complex to integrate the container virtual networks with the physical data center network and any virtual network overlays an enterprise might use.

- There's a significant administrative learning curve, particularly for on-premises, DIY installations, but also when using cloud services, such as Amazon Elastic Kubernetes Service (EKS).

- Admins must integrate persistent storage with inherently stateless container technology to support applications that need access to previously stored data.

- Admins must also integrate network and user security policies with container management systems, which requires careful planning.
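For the persistent storage point, one common pattern is to keep the container image itself stateless and write state to a volume mounted at a known path, so the data outlives any single container. The sketch below assumes a hypothetical `DATA_DIR` environment variable pointing at such a mount; the orchestrator-side volume configuration (for example, a Kubernetes persistent volume claim) is not shown.

```python
import json
import os
import tempfile
from pathlib import Path


def state_file() -> Path:
    # DATA_DIR is a hypothetical env var that points at a mounted persistent volume.
    # Without it we fall back to /tmp, which is lost when the container is replaced.
    return Path(os.environ.get("DATA_DIR", "/tmp")) / "visits.json"


def record_visit() -> int:
    """Increment a visit counter stored on the volume and return the new value."""
    path = state_file()
    count = json.loads(path.read_text())["count"] if path.exists() else 0
    count += 1
    # Write to a temp file, then rename, so a crash never leaves a half-written file.
    tmp = path.with_suffix(".tmp")
    tmp.write_text(json.dumps({"count": count}))
    os.replace(tmp, path)
    return count


if __name__ == "__main__":
    # Simulate two container "lifetimes" that share one volume directory.
    with tempfile.TemporaryDirectory() as volume:
        os.environ["DATA_DIR"] = volume
        print(record_visit())  # first container
        print(record_visit())  # replacement container sees the earlier state
```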

Serverless services -- whether event-driven functions, such as AWS Lambda, or on-demand cloud services, like Aurora Serverless -- eliminate the administrative overhead of running the underlying infrastructure. Unlike many cloud services, serverless offerings target application developers instead of IT architects and administrators.

Serverless platforms scale automatically to meet the needs of an application. Cloud-based serverless platforms also have built-in redundancy and availability, because the provider provisions, scales and distributes the infrastructure across multiple data centers. Additionally, serverless functions have built-in support for a wide variety of event triggers and automatically provision runtime environments for whichever supported language a developer chooses. And serverless functions are generally less expensive than containers for short-duration jobs.
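A rough way to see what automatic scaling means in practice is the steady-state concurrency estimate AWS documents for Lambda: concurrent executions ≈ average requests per second × average duration in seconds (Little's law). The sketch below applies that formula; the traffic figures are hypothetical, and it ignores the account- and region-level concurrency limits real platforms enforce.

```python
import math


def estimated_concurrency(requests_per_second: float, avg_duration_s: float) -> int:
    """Function instances needed at steady state (Little's law)."""
    if requests_per_second < 0 or avg_duration_s < 0:
        raise ValueError("rate and duration must be non-negative")
    # Round up: a fractional instance still needs a whole execution environment.
    return math.ceil(requests_per_second * avg_duration_s)


if __name__ == "__main__":
    # Hypothetical traffic profiles: (requests per second, average duration in seconds)
    for rps, duration in [(5, 0.2), (100, 0.5), (2000, 0.5)]:
        print(f"{rps:>5} req/s x {duration}s -> {estimated_concurrency(rps, duration)} instances")
```

On a serverless platform, the provider provisions and retires those instances as traffic rises and falls; with containers, the equivalent capacity planning falls to the team running the cluster.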

Serverless platforms are well-suited for granular, loosely coupled microservices application designs; event-driven functions provide the glue logic that binds microservices together.
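The glue role can be sketched as a small function that receives an event and hands it to the right downstream services. In this hypothetical example, the event types and the `notify_billing` and `notify_shipping` callables stand in for real microservice clients (HTTP calls, queue publishes and so on); only the routing logic is shown.

```python
from typing import Callable, Dict, List

# Records calls so the example can be inspected; stands in for real side effects.
SENT: List[str] = []


def notify_billing(event: dict) -> None:
    # Hypothetical billing-service client.
    SENT.append(f"billing:{event['order_id']}")


def notify_shipping(event: dict) -> None:
    # Hypothetical shipping-service client.
    SENT.append(f"shipping:{event['order_id']}")


# Routing table: which downstream services care about which event type.
ROUTES: Dict[str, List[Callable[[dict], None]]] = {
    "order.placed": [notify_billing, notify_shipping],
    "order.refunded": [notify_billing],
}


def glue(event: dict) -> int:
    """Fan an event out to every subscribed service; return how many were called."""
    targets = ROUTES.get(event.get("type", ""), [])
    for target in targets:
        target(event)
    return len(targets)
```

Keeping the routing table in one small function means the microservices themselves never need to know about each other.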

There are downsides to serverless that make the technology unsuitable for certain situations. For example, serverless functions suit only short jobs; AWS Lambda, for instance, limits functions to 15 minutes of execution time. Continuous processes or long-term jobs are a better fit for containers.
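When a batch job might approach the limit, one hedge is to process items one at a time and stop early, handing the remainder back for another invocation. The sketch below relies on the Lambda context object's `get_remaining_time_in_millis()` method; the 30-second safety margin, the `process_item` placeholder and the idea of returning leftover items (for example, to be re-queued) are assumptions for illustration. `FakeContext` is a hypothetical stand-in for local testing only.

```python
SAFETY_MARGIN_MS = 30_000  # hypothetical buffer to finish cleanly before the hard timeout


def process_item(item):
    # Placeholder for real work (hypothetical).
    return item * 2


def handler(event, context):
    items = list(event.get("items", []))
    results = []
    while items:
        # Stop while there is still time to return; the platform kills the function at the timeout.
        if context.get_remaining_time_in_millis() < SAFETY_MARGIN_MS:
            break
        results.append(process_item(items.pop(0)))
    # Leftover items would be re-queued or passed to a follow-up invocation.
    return {"done": results, "remaining": items}


class FakeContext:
    """Stand-in for the Lambda context object: replays a list of remaining-time readings."""

    def __init__(self, remaining_ms):
        self._remaining = list(remaining_ms)

    def get_remaining_time_in_millis(self):
        return self._remaining.pop(0)
```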

IT admins also have limited control over the runtime environment: they can generally choose only among the language versions a provider supports, such as particular Python releases, unless the platform lets them build a custom runtime. Control over system capacity is similarly coarse, typically little more than a memory setting. Azure Functions has an App Service plan that enables IT admins to run functions on a dedicated VM with configurable sizing, but this negates many of the benefits of serverless and should be reserved for particular situations, such as part of an app running on Azure App Service PaaS.

Vendor lock-in is especially prevalent in serverless cloud services; while the function code itself may be portable, its implementation and its integrations with other cloud services and microservices components usually are not.
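One way to keep the portable part as large as possible is to put business logic in plain functions and confine provider specifics to thin adapters. In this sketch, `apply_discount` is hypothetical business logic; `lambda_handler` assumes an API Gateway proxy-style event whose `body` is a JSON string, and `local_handler` is a hypothetical adapter for another host, such as a containerized web framework.

```python
import json


def apply_discount(order: dict, percent: float) -> dict:
    """Provider-neutral business logic (hypothetical)."""
    total = round(order["total"] * (1 - percent / 100), 2)
    return {"order_id": order["order_id"], "total": total}


def lambda_handler(event, context):
    """AWS Lambda adapter, assuming an API Gateway proxy-style event."""
    order = json.loads(event["body"])
    result = apply_discount(order, percent=10)
    return {"statusCode": 200, "body": json.dumps(result)}


def local_handler(raw_body: str) -> str:
    """Adapter for another host, e.g. a container's web framework (hypothetical)."""
    return json.dumps(apply_discount(json.loads(raw_body), percent=10))
```

Moving to another provider then means rewriting an adapter rather than the logic, although triggers, permissions and service integrations still have to be rebuilt.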

Finally, serverless computing can actually cost more than containerization when applied to products like databases and analytics services, if the application consumes a lot of execution time and capacity.
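A back-of-the-envelope model shows why serverless can be cheaper for short jobs yet costlier under heavy use. Pay-per-use billing grows with invocations, duration and memory, while a container host costs roughly the same whether it is busy or idle, so there is a break-even volume above which the always-on option wins. Every rate below is a hypothetical placeholder, not any provider's current price, and the container side is reduced to a single hypothetical monthly figure.

```python
from dataclasses import dataclass


@dataclass
class ServerlessPricing:
    # Hypothetical placeholder rates, not any provider's current prices.
    per_million_requests: float = 0.20  # dollars per 1M invocations
    per_gb_second: float = 0.00002      # dollars per GB-second of execution


def cost_per_invocation(p: ServerlessPricing, duration_s: float, memory_gb: float) -> float:
    """Request fee plus compute fee (duration x memory x rate) for one call."""
    return p.per_million_requests / 1_000_000 + duration_s * memory_gb * p.per_gb_second


def monthly_serverless_cost(p: ServerlessPricing, invocations: int,
                            duration_s: float, memory_gb: float) -> float:
    return invocations * cost_per_invocation(p, duration_s, memory_gb)


def break_even_invocations(p: ServerlessPricing, duration_s: float,
                           memory_gb: float, container_monthly: float) -> float:
    """Monthly invocations above which a fixed-cost container is cheaper."""
    return container_monthly / cost_per_invocation(p, duration_s, memory_gb)


if __name__ == "__main__":
    pricing = ServerlessPricing()
    # Hypothetical workload: 200 ms per call at 512 MB, versus a $30/month container host.
    print(f"1M calls/month: ${monthly_serverless_cost(pricing, 1_000_000, 0.2, 0.5):.2f}")
    print(f"break-even: {break_even_invocations(pricing, 0.2, 0.5, 30.0):,.0f} calls/month")
```

With these placeholder numbers, a million monthly invocations cost about $2.20, and the container only becomes cheaper beyond roughly 13.6 million invocations a month. Longer durations, larger memory settings or steady high traffic push that break-even point down. The model also leaves out the operational effort of running the container platform, and on-demand databases and analytics services use different billing units, but the same reasoning applies.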

One alternative for organizations that need more control over a serverless environment, yet still want to give developers serverless features, is to run an on-premises, open source serverless platform, such as Apache OpenWhisk, OpenFaaS, Kubeless or Fission. Kubeless and Fission are serverless frameworks that build on Kubernetes and containers.
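For a sense of what developers write on these platforms, Apache OpenWhisk's Python actions follow a simple convention: a `main` function that takes a dictionary of parameters and returns a dictionary. The greeting logic below is only an example. An action like this is typically deployed with the OpenWhisk CLI (`wsk action create hello hello.py`) and invoked with `wsk action invoke hello --result --param name Ada`. The other frameworks use their own handler conventions.

```python
def main(args):
    """OpenWhisk-style action: receives parameters as a dict and returns a dict."""
    name = args.get("name", "stranger")
    return {"greeting": f"Hello {name}!"}
```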