Enforce container ecosystem consistency in 4 steps

Lightweight apps are easy to containerize, but monoliths need more work. Expert admins can bring those heavyweight apps into the fold, whether they were made for containers or not.

In IT operations, nonstandard tools lead to operationally inefficient and siloed activities that waste capital equipment and staff time. Containerization leads to standardization, which resolves those silo and inefficiency problems.

Containers create an ecosystem that acts as a virtual computer with distributed resources, which admins program and operate almost like a real one. Every resource in the container ecosystem looks the same, and every application deployment works the same, which standardizes operations.

Get all applications containerized

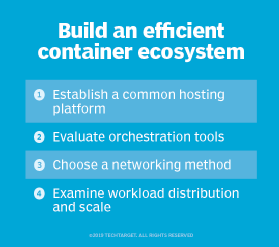

There are four elements to a container ecosystem: hosting, orchestration, connectivity and work distribution. Use these steps to standardize each ecosystem element to get the most from containerization across applications.

Step 1. Address hosting: the compatibility of hardware and software platforms with application requirements. For the moment, set aside applications that require specialized hardware or can't run on Linux.

Linux applications might not share the same container hosting requirements, such as the specific Linux distribution or middleware. Define the minimum number of platform configurations, including OS distro and middleware, necessary to run the whole spectrum of Linux applications. These will be the platforms upon which you standardize all applications.

Check platform dependencies for each application, and group them by their specific needs. Then, choose the most common and useful platform of that mix, and adapt applications with different needs to fit that platform. To adapt the outlier applications, change middleware tools to a common version, or alter operating parameters.
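The grouping described above can be sketched in a few lines of Python. This is a minimal illustration, not a prescribed tool: the `APPS` inventory, its application names and its platform labels are all hypothetical, and a real inventory would likely track more than a distro and one middleware version.

```python
from collections import Counter, defaultdict

# Hypothetical inventory: application name -> (Linux distro, middleware) it needs.
APPS = {
    "billing": ("rhel8", "java11"),
    "crm": ("rhel8", "java11"),
    "reports": ("rhel8", "java8"),
    "portal": ("ubuntu20", "node14"),
    "inventory": ("rhel8", "java11"),
}


def group_by_platform(apps):
    """Group application names by their (distro, middleware) requirement."""
    groups = defaultdict(list)
    for name, platform in apps.items():
        groups[platform].append(name)
    return dict(groups)


def most_common_platform(apps):
    """Return the platform required by the largest number of applications."""
    counts = Counter(apps.values())
    return counts.most_common(1)[0][0]


groups = group_by_platform(APPS)
standard = most_common_platform(APPS)
# Applications that would need adapting to fit the standard platform.
outliers = sorted(name for name, p in APPS.items() if p != standard)

print("standard platform:", standard)
print("candidates to adapt:", outliers)
```

The `outliers` list is the set of applications to review for a middleware version change or altered operating parameters.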

Set up additional platforms for groups of applications that don't fit the common standard, but try not to end up with more than four platform types. These chosen platforms will define the container clusters, so assign servers according to the number of applications that will run on each cluster and the workload that those applications generate.
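One way to turn workload estimates into a server assignment is a proportional split. The sketch below assumes each cluster's workload is a single positive number in arbitrary units. The cluster names, the `WORKLOADS` figures and the one-server minimum are illustrative assumptions, not recommendations from the article.

```python
def assign_servers(workloads, total_servers):
    """Split total_servers across clusters in proportion to workload.

    Every cluster gets at least one server. The remaining servers are
    shared out proportionally, and any leftovers go to the clusters with
    the largest fractional remainders (largest-remainder method).
    """
    if total_servers < len(workloads):
        raise ValueError("need at least one server per cluster")
    total = sum(workloads.values())
    spare = total_servers - len(workloads)  # servers left after the minimum
    shares = {c: spare * w / total for c, w in workloads.items()}
    alloc = {c: 1 + int(s) for c, s in shares.items()}
    leftover = total_servers - sum(alloc.values())
    by_remainder = sorted(
        shares, key=lambda c: shares[c] - int(shares[c]), reverse=True
    )
    for c in by_remainder[:leftover]:
        alloc[c] += 1
    return alloc


# Hypothetical workload per cluster, in arbitrary units.
WORKLOADS = {"rhel8-java11": 60, "rhel8-java8": 25, "ubuntu20-node14": 15}
allocation = assign_servers(WORKLOADS, 20)
print(allocation)
```

A split like this is only a starting point; measured utilization after deployment should drive later adjustments.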

Step 2. Consider orchestration, which is the process by which applications are deployed to run on containers. There are several tools available, both open source and paid, but Kubernetes is the de facto standard tool for container orchestration. Kubernetes is available as open source software and in many packaged distributions; the best option is usually a distribution from a provider that is already represented in your organization's hosting environment, such as Red Hat, VMware, Hewlett Packard Enterprise or IBM. Don't make a final decision until other container ecosystem elements are settled.

Step 3. Address connectivity. A container ecosystem requires some form of virtual network to connect the applications to users, as well as to connect intra-application components and carry the container orchestrator's management and control data. There are numerous open source and commercial virtual networking tools that integrate with Kubernetes, so review all applications' network requirements carefully before tool selection. Ensure access control policy strategies and any firewalls within the virtual private network are compatible with the tool.

Virtual networking is particularly critical when deploying containers in hybrid or multi-cloud configurations. Container virtual networking features must harmonize with the network features of each cloud in use. Other tools, such as a software-defined WAN (SD-WAN), provide direct access to cloud-hosted components or applications.

Containers in hybrid or multi-cloud deployments often require a mechanism to ensure management and control connectivity for orchestration elements, such as Kubernetes. This process creates a multi-cloud control plane for orchestration. For example, Stackpoint.io, a tool from NetApp, is a common add-on to Kubernetes that helps unify hybrid and multi-cloud orchestration. In particular, ensure that mission-critical applications receive the same level of management and control in containers as they would in another ecosystem.

Step 4. Review how work is distributed across the container ecosystem, including load balancing and scaling. Application execution, load balancing and scaling affect deployment and redeployment of components in the container ecosystem. One approach is to use container load balancing -- through Kubernetes or other tools -- on a per-application basis, but that can be operationally complex for heavyweight applications with a lot of components. Alternatively, IT organizations can use a kind of super-load balancer, in the form of Istio or another work fabric that runs with Kubernetes, or create an independent resource abstraction layer across all hosting options and clouds.

Istio and other service mesh projects provide a container ecosystem with a distributed, scalable mechanism for directing work to instances of the processes or components that will handle it. The more complex hosting decisions are -- including deployment, scaling and redeployment -- the more Istio or another service mesh benefits containerization of complex applications.
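To make the idea of directing work to instances concrete, here is a toy dispatcher that sends each request to the instance with the fewest requests in flight. It only illustrates the general concept and is not how Istio or any particular service mesh is implemented; the `LeastLoadedRouter` class and the instance names are hypothetical.

```python
class LeastLoadedRouter:
    """Toy dispatcher: route each request to the least busy instance."""

    def __init__(self, instances):
        # Track in-flight request counts per instance name.
        self.in_flight = {name: 0 for name in instances}

    def route(self):
        # min() returns the first instance with the lowest count,
        # so ties are broken by insertion order.
        target = min(self.in_flight, key=self.in_flight.get)
        self.in_flight[target] += 1
        return target

    def done(self, instance):
        # Call when an instance finishes a request.
        self.in_flight[instance] -= 1

    def scale_out(self, instance):
        # A newly deployed instance starts with no work.
        self.in_flight[instance] = 0


router = LeastLoadedRouter(["orders-1", "orders-2"])
first = router.route()
second = router.route()
router.scale_out("orders-3")   # new instance joins the pool
third = router.route()
router.done("orders-1")        # orders-1 becomes idle again
fourth = router.route()
print(first, second, third, fourth)
```

Because the router consults current load on every call, a newly scaled-out instance starts receiving work immediately, which is the property that matters when components are frequently deployed and redeployed.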

The other approach to work management is via composable infrastructure, such as that from HTBase, which Juniper Networks acquired at the end of 2018. HTBase creates a single abstract version of all hosting and connection resources that extends across all clouds and data centers. This abstraction looks like a virtual resource pool with a single set of features that can be orchestrated as a unit. The unit operates without the integration steps otherwise necessary to harmonize applications to a particular cloud provider or data center.
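The notion of a single virtual resource pool can be illustrated with a small sketch. This is not HTBase's API or design; the `Site` and `ResourcePool` classes, the site names and the use of CPU cores as the only resource are assumptions made purely for illustration.

```python
from dataclasses import dataclass, field


@dataclass
class Site:
    """One hosting location (cloud or data center) with free CPU cores."""
    name: str
    free_cores: int


@dataclass
class ResourcePool:
    """Presents many sites as one pool; callers never choose a site."""
    sites: list
    placements: dict = field(default_factory=dict)

    def total_free(self):
        return sum(s.free_cores for s in self.sites)

    def deploy(self, app, cores):
        # Place the app on the site with the most free capacity.
        site = max(self.sites, key=lambda s: s.free_cores)
        if site.free_cores < cores:
            raise RuntimeError(f"no site can host {app}")
        site.free_cores -= cores
        self.placements[app] = site.name
        return site.name


pool = ResourcePool([Site("datacenter-a", 16), Site("cloud-x", 32)])
where_billing = pool.deploy("billing", 24)
where_crm = pool.deploy("crm", 12)
print(pool.placements, pool.total_free())
```

The caller asks the pool for capacity and never names a cloud or data center, which is the sense in which the pool can be orchestrated as a unit.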

An infrastructure abstraction tool enables admins to standardize orchestration across different hosting configurations. It also accommodates applications that don't fit easily into containers or can't run in them at all.

Application environment standardization is a tradeoff: performance optimization for individual applications on one side, uniform IT operations practices and efficient resource pools on the other. Don't rule out the option to alter applications to harmonize them with a standardized container ecosystem. The effort pays for itself over time.