
Evaluate key service mesh benefits and architecture limitations

Service mesh technologies can provide request routing, authentication and even health checks. IT organizations that handle container and microservices deployments should plan to maximize the benefits of service mesh, and address implementation concerns, before going all-in.

Container-savvy developers and operators face the vexing challenge of setting up and managing communications among multiple application services that run on the same container cluster. Building the logic to discover and communicate with adjacent services into each service module quickly accumulates performance overhead. This challenge led to the creation of service mesh technologies, which broker and control interservice connections.

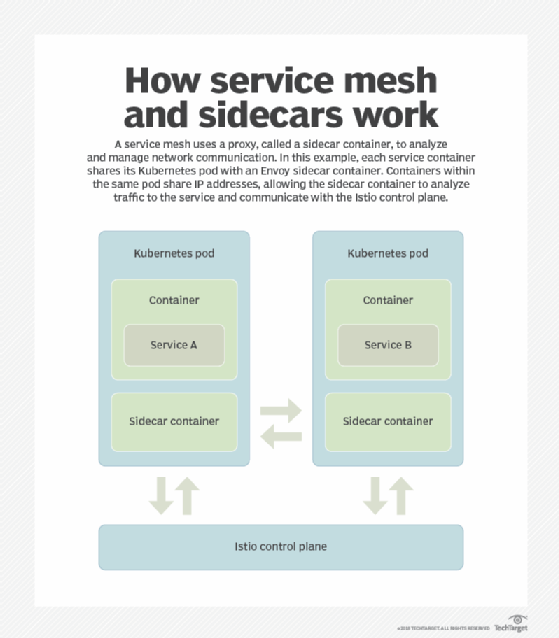

A service mesh provides a software abstraction layer for a set of network services that collectively facilitate reliable, secure communications between microservices. A mesh typically runs as a set of application layer (Open Systems Interconnection Layer 7) proxies, known as sidecar proxies, each of which runs in a separate container alongside an individual microservice.
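The sidecar arrangement can be illustrated with a minimal in-process sketch. It is not a real network proxy: the hypothetical `SidecarProxy` class, the `orders_service` handler and the `x-caller-identity` header are illustrative assumptions that stand in for a proxy container intercepting every request before it reaches the co-located service.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List


@dataclass
class Request:
    path: str
    headers: Dict[str, str] = field(default_factory=dict)


@dataclass
class Response:
    status: int
    body: str


# The application container: business logic only, no networking concerns.
def orders_service(request: Request) -> Response:
    return Response(200, f"order data for {request.path}")


class SidecarProxy:
    """Hypothetical sidecar sitting in front of one service instance.

    Every inbound request passes through handle(), which applies shared
    concerns (here, a caller-identity check and request telemetry) before
    forwarding the request to the co-located service.
    """

    def __init__(self, service_name: str, app: Callable[[Request], Response]) -> None:
        self.service_name = service_name
        self.app = app
        self.telemetry: List[Dict[str, object]] = []

    def handle(self, request: Request) -> Response:
        caller = request.headers.get("x-caller-identity")  # assumed header name
        if caller is None:
            response = Response(401, "missing caller identity")
        else:
            response = self.app(request)
        # Record telemetry that an external monitoring system could collect.
        self.telemetry.append(
            {"service": self.service_name, "path": request.path,
             "caller": caller, "status": response.status}
        )
        return response


sidecar = SidecarProxy("orders", orders_service)
print(sidecar.handle(Request("/orders/42", {"x-caller-identity": "checkout"})).status)  # 200
print(sidecar.handle(Request("/orders/42")).status)  # 401
```

Because the identity check and telemetry live in the proxy, `orders_service` contains only business logic, and a different service could sit behind the same proxy code unchanged.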

Service mesh technologies include open source projects such as Linkerd, Envoy (a proxy that Istio and other meshes use as their data plane), Istio and Kong, as well as offerings from cloud vendors such as AWS, Microsoft Azure and Google.

As containerization and its management via Kubernetes spread into mainstream enterprise IT, interest in service mesh has exploded. It will continue to grow as organizations refactor applications from monolithic to distributed architectures and develop new ones in cloud-native form with sharable components. Developers and IT pros should evaluate all angles of service mesh features, benefits and drawbacks before they add an open source or proprietary service mesh to their IT portfolio.

Service mesh benefits

A service mesh proxy provides several critical functions, which include:

- service discovery of other service instances within the mesh's sphere of operation, which typically covers one or more clusters;

- load balancing, routing and connecting service requests to the appropriate cluster and instance;

- authentication, authorization and encryption to validate incoming service requests and secure the communication channel;

- health checks and circuit breakers to monitor the status and availability of service instances and terminate connections if the service endpoint fails or violates performance parameters;

- operational monitoring, also referred to as observability, to provide event and performance telemetry to external management systems; and

- a management UI that operators use to set global system configurations, security and routing policies, as well as performance parameters.
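Two of these functions, service discovery and load balancing, can be sketched with a toy in-memory registry. This is an illustration under stated assumptions, not how any particular mesh stores endpoints: the `ServiceRegistry` class is hypothetical, the addresses are made-up private IPs, and round robin is only one of several strategies a proxy might use. The `deregister` method stands in for dropping an instance that fails a health check.

```python
import itertools
from typing import Dict, Iterator, List


class ServiceRegistry:
    """Toy registry mapping a service name to its instance addresses.

    A real mesh control plane typically learns endpoints from the
    orchestrator (for example, Kubernetes); here they are registered by hand.
    """

    def __init__(self) -> None:
        self._instances: Dict[str, List[str]] = {}
        self._cursors: Dict[str, Iterator[str]] = {}

    def _reset_cursor(self, service: str) -> None:
        # Rebuild the round-robin cycle over a snapshot of current instances.
        if self._instances[service]:
            self._cursors[service] = itertools.cycle(list(self._instances[service]))
        else:
            self._cursors.pop(service, None)

    def register(self, service: str, address: str) -> None:
        self._instances.setdefault(service, []).append(address)
        self._reset_cursor(service)

    def deregister(self, service: str, address: str) -> None:
        """Remove an instance, e.g. after it fails a health check."""
        self._instances[service].remove(address)
        self._reset_cursor(service)

    def discover(self, service: str) -> List[str]:
        """Service discovery: list the known instances of a service."""
        return list(self._instances.get(service, []))

    def pick(self, service: str) -> str:
        """Round-robin load balancing across the discovered instances."""
        if service not in self._cursors:
            raise LookupError(f"no instances registered for {service!r}")
        return next(self._cursors[service])


registry = ServiceRegistry()
registry.register("inventory", "10.0.0.11:8080")
registry.register("inventory", "10.0.0.12:8080")
print([registry.pick("inventory") for _ in range(3)])
# ['10.0.0.11:8080', '10.0.0.12:8080', '10.0.0.11:8080']
```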

Service mesh technologies resemble other Layer 7 network services, such as load balancers, application delivery controllers, next-generation firewalls and content delivery networks, in that they provide shared infrastructure for all the services. Because the mesh handles these functions while the application runs, developers don't need to embed a library with the same functions into each application.

The most significant overarching benefit of a service mesh is that it provides a set of shared services with consistent policies to each microservice, but there are other strong arguments in favor of a service mesh implementation. A mesh provides a standard feature set and APIs for microservice communications, which lets developers focus on service-specific business logic rather than networking.

Service meshes suit implementations of truly componentized, decoupled applications made up of many independent microservices. Developers can work in their language of choice, without considering whether they have libraries that provide the requisite network services, such as service discovery, authentication and routing.

A service mesh also can enforce policies and encrypt traffic between services to improve security. The consistent interface and set of logging APIs can yield insights into application events and performance.
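Policy enforcement can be pictured as every sidecar consulting the same default-deny allow list before it forwards a call, and logging each decision. The sketch below is hypothetical: `POLICY`, `authorize` and the service names are illustrative, and it omits encryption entirely. In meshes such as Istio, the caller's identity typically comes from certificates exchanged over mutual TLS rather than from a plain string.

```python
import logging
from typing import Dict, FrozenSet, List, Tuple

logging.basicConfig(level=logging.INFO, format="%(name)s %(message)s")
log = logging.getLogger("mesh.policy")

# Hypothetical mesh-wide policy: destination service -> callers allowed to reach it.
POLICY: Dict[str, FrozenSet[str]] = {
    "payments": frozenset({"checkout"}),
    "inventory": frozenset({"checkout", "catalog"}),
}

# Audit trail of (caller, destination, allowed) decisions for later analysis.
decisions: List[Tuple[str, str, bool]] = []


def authorize(caller: str, destination: str,
              policy: Dict[str, FrozenSet[str]] = POLICY) -> bool:
    """Default-deny check: allow the call only if the policy lists the caller."""
    allowed = caller in policy.get(destination, frozenset())
    decisions.append((caller, destination, allowed))
    log.info("authz caller=%s destination=%s allowed=%s", caller, destination, allowed)
    return allowed


authorize("checkout", "payments")  # allowed
authorize("catalog", "payments")   # denied: catalog is not on the payments list
```

Because every proxy evaluates the same table and writes the same log format, the policy stays consistent across services and the decision log gives operators one place to look for denied calls.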

Why a service mesh isn't for everybody

The glaring drawback of a service mesh is the technology's immaturity. Various service mesh projects, such as those listed above, compete for application developers and IT adopters, which results in a confusing market for those who evaluate the software. In addition, there are technological barriers to adoption.

A service mesh increases the complexity of a container environment. The required service mesh infrastructure consists of data and control planes that operate alongside the containers that run application services. Additionally, a service mesh might strain the IT budget, because the proxies and control plane consume compute resources of their own.

Some service mesh users encounter increased latency in container-to-container communications, because each callout to another service must pass through the sidecar proxy and load balancer. Latency could increase further if the remote service does not respond in time and the callout runs into a circuit breaker.
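A minimal consecutive-failure circuit breaker shows how this mechanism interacts with latency. Calls that wait on a slow service still cost time until enough failures trip the breaker; once it is open, calls fail immediately instead of waiting on the remote service. The `CircuitBreaker` class, its `failure_threshold` and `reset_timeout` parameters and the injectable `clock` are assumptions for illustration, not any mesh's configuration API.

```python
import time
from typing import Callable, Optional, TypeVar

T = TypeVar("T")


class CircuitOpenError(Exception):
    """Raised when the breaker rejects a call without contacting the service."""


class CircuitBreaker:
    """Hypothetical breaker with closed, open and half-open states."""

    def __init__(self, failure_threshold: int = 3, reset_timeout: float = 30.0,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self.failure_threshold = failure_threshold
        self.reset_timeout = reset_timeout
        self.clock = clock
        self.failures = 0
        self.opened_at: Optional[float] = None

    @property
    def state(self) -> str:
        if self.opened_at is None:
            return "closed"
        if self.clock() - self.opened_at >= self.reset_timeout:
            return "half-open"  # allow a trial call through
        return "open"

    def call(self, fn: Callable[[], T]) -> T:
        if self.state == "open":
            # Fail fast: the caller gets an error at once instead of waiting.
            raise CircuitOpenError("remote service marked unavailable")
        try:
            result = fn()
        except Exception:
            self.failures += 1
            if self.state == "half-open" or self.failures >= self.failure_threshold:
                self.opened_at = self.clock()  # trip (or re-trip) the breaker
            raise
        # A success closes the circuit and clears the failure count.
        self.failures = 0
        self.opened_at = None
        return result


now = [0.0]  # fake clock so the example runs instantly
breaker = CircuitBreaker(failure_threshold=2, reset_timeout=10.0, clock=lambda: now[0])


def slow_service() -> str:
    # Stand-in for a remote call that never returns a timely response.
    raise TimeoutError("no timely response")


for _ in range(2):
    try:
        breaker.call(slow_service)
    except TimeoutError:
        pass

print(breaker.state)  # open
```

Injecting the clock keeps the sketch testable: advancing `now[0]` past `reset_timeout` moves the breaker to half-open without any real waiting.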

Service meshes such as Istio, along with the Envoy proxy that underpins it, also carry networking drawbacks. External traffic is a point of debate: developers are divided on whether a service mesh should extend to traffic entering and leaving a cluster or apply only to communications within it. Ingress traffic is particularly problematic for service mesh technologies, and some teams find they must add Kubernetes ingress controllers to make the deployment work.

Where to start with service mesh technologies

Most organizations haven't adopted fine-grained microservices and, given the relative novelty of containers in the enterprise, it's doubtful they will need a service mesh for their current workloads. For organizations that use containers as alternatives to VMs for traditional, monolithic workloads, the API gateway features in container orchestrators, such as Kubernetes, Docker Enterprise and Cloud Foundry, suffice.

Organizations that want to experiment with microservices see benefits when they pair a containers-as-a-service choice with a cloud-based container management service. The public cloud offerings from AWS, Azure and Google each include some form of service mesh.

For example, organizations can deploy the AWS Fargate container platform with Amazon Elastic Kubernetes Service and take advantage of AWS App Mesh. Alternatively, organizations that build on Azure Container Instances and Azure Kubernetes Service can pair them with Azure Service Fabric Mesh. Google Kubernetes Engine works with Istio on Google Cloud.

Together, these services provide all the components to develop, test, debug and configure microservices applications and infrastructures.