Manage a multi-cluster Kubernetes deployment effectively

To reap the benefits of a multi-cluster Kubernetes deployment, IT admins need to understand its intricate levels of management and whether their deployments warrant the strategy.

Kubernetes is the industry's de facto containerization strategy, but there are several deployment models available for a variety of missions.

A Kubernetes cluster consists of a control plane, traditionally called the master node, and a set of worker nodes. Each cluster contains the resources and Kubernetes elements necessary to orchestrate container deployment. Often -- particularly in hybrid and multi-cloud applications -- IT teams must deploy containers across multiple clusters and thus need a multi-cluster Kubernetes deployment.

True multi-cluster Kubernetes presumes that many clusters must act as a single, coordinated resource pool. But if the clusters don't support a deployment of common applications -- or only require minimal connectivity between cluster components -- then IT teams will need a different management strategy. The first question to answer is whether multi-cluster Kubernetes is even necessary for a given scenario.

What does multi-cluster Kubernetes look like?

In Kubernetes, a federation is a layer that acts as a bridge to coordinate the master nodes of multiple clusters. Each cluster has its own specific mission and manages its own deployments; examples include the data center or cloud side of a hybrid cloud ecosystem, or individual public clouds within a multi-cloud ecosystem. Where IT organizations need cross-cluster deployment, the federation layer provides it. The IT team must still provide networking support so that elements of all the clusters can communicate with one another. Google Anthos is one example of an increasingly popular multi-cluster Kubernetes tool.

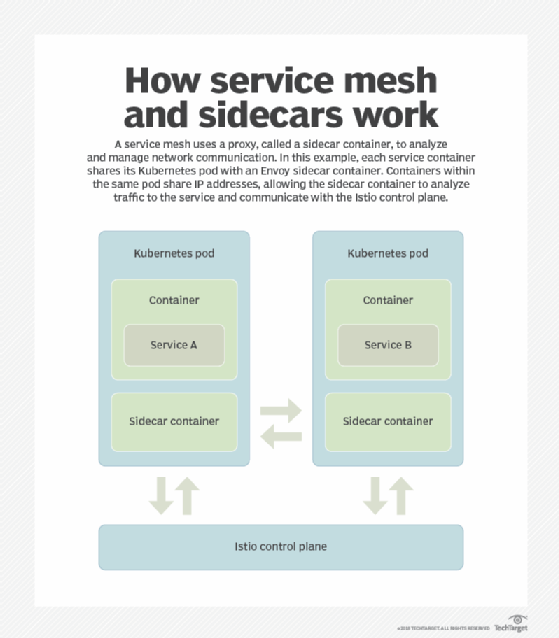

A service mesh is one alternative to Kubernetes federation. Because it's a communications layer above Kubernetes and containers, a service mesh unifies user access to -- and service deployment through -- multiple cluster resources. Service mesh also links workflows between deployed components on any, or all, clusters. Furthermore, Istio -- an open source service mesh -- includes load balancing features that cross cluster boundaries.

Let's address some key multi-cluster management issues regarding each of these deployment approaches.

Connectivity management

Generally, guidance for multi-cluster Kubernetes deployments recommends that all clusters share the same IP address space, which ensures all components are addressable. With a service mesh such as Istio, the Gateway configuration model can link clusters where a common IP address space isn't desirable. But the Istio control plane must still be able to connect to the Kubernetes API server in each cluster.
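A shared address space only keeps every component addressable if the ranges assigned to each cluster don't collide. As a minimal sketch of that check, the following Python uses the standard `ipaddress` module to flag overlapping ranges. The cluster names and CIDR blocks are hypothetical, chosen for illustration, and the script assumes the admin already knows each cluster's pod and service ranges.

```python
import ipaddress
from itertools import combinations


def find_overlaps(cluster_cidrs):
    """Return pairs of clusters whose CIDR ranges overlap.

    cluster_cidrs maps a cluster name to a list of CIDR strings
    (for example, its pod and service ranges).
    """
    # Parse every CIDR once so malformed input fails early.
    parsed = {
        name: [ipaddress.ip_network(cidr) for cidr in cidrs]
        for name, cidrs in cluster_cidrs.items()
    }
    overlaps = []
    # Compare each pair of clusters exactly once.
    for (name_a, nets_a), (name_b, nets_b) in combinations(parsed.items(), 2):
        for net_a in nets_a:
            for net_b in nets_b:
                if net_a.overlaps(net_b):
                    overlaps.append((name_a, str(net_a), name_b, str(net_b)))
    return overlaps


# Hypothetical cluster names and ranges, for illustration only.
clusters = {
    "on-prem": ["10.0.0.0/16", "10.96.0.0/20"],
    "cloud-a": ["10.1.0.0/16", "10.97.0.0/20"],
    "cloud-b": ["10.0.128.0/17", "10.98.0.0/20"],
}

for a, net_a, b, net_b in find_overlaps(clusters):
    print(f"{a} {net_a} overlaps {b} {net_b}")
```

In this made-up example, the `cloud-b` range `10.0.128.0/17` sits inside the `on-prem` range `10.0.0.0/16`, so that pair is reported. An empty result suggests the ranges could coexist in one flat address space.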

Where IT teams use Kubernetes federation, users manage the gateway process between clusters themselves, so a single address space is preferred.

Deployment and redeployment

A service mesh, provided it can access all clusters and connect workflows, largely avoids this management issue. The service mesh calls on Kubernetes for deployment and redeployment. Without a service mesh, the federation tool -- such as Anthos, Microsoft Azure Arc or another cloud provider's federation tool -- provides cross-cluster deployment and redeployment. For one or more clusters hosted in the public cloud, ensure that the multi-cluster management tool supports all the cloud providers in question.

Load balancing and fault recovery

Multi-cluster Kubernetes strategies that rely on a service mesh include load balancing across the entire set of clusters, as such approaches provide the user on-ramp and manage inter-component workflows. With Anthos or public cloud multi-cluster tools, IT organizations must deploy a load balancer and expose it as a service. That service then becomes the destination for traffic to the application components deployed in the multi-cluster environment.
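To make "expose it as a service" concrete, here is a minimal sketch that builds a Kubernetes Service manifest of type `LoadBalancer` as a Python dictionary and prints it as JSON, a format `kubectl apply -f` accepts alongside YAML. The service name, label and ports are hypothetical. How the load balancer is actually provisioned, and whether it spans clusters, depends on the cloud provider or multi-cluster tool in use.

```python
import json


def load_balancer_service(name, app_label, port, target_port):
    """Build a Kubernetes Service manifest of type LoadBalancer."""
    return {
        "apiVersion": "v1",
        "kind": "Service",
        "metadata": {"name": name},
        "spec": {
            # Asks the platform to provision an external load balancer.
            "type": "LoadBalancer",
            # Traffic is routed to pods carrying this label.
            "selector": {"app": app_label},
            "ports": [
                {"protocol": "TCP", "port": port, "targetPort": target_port}
            ],
        },
    }


# Hypothetical service name, label and ports, for illustration only.
manifest = load_balancer_service("web-frontend", "web", 80, 8080)
print(json.dumps(manifest, indent=2))
```

The printed JSON can be saved to a file and applied to each cluster that should expose the service.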

The right load-balancing strategy depends on the specific cloud or multi-cluster tool in use, as well as the cluster in which the load balancer is deployed. For each strategy, validate the load balancer's performance and latency, because these vary significantly among tools.
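One way to approach that validation is to collect response-time samples through each load balancer and compare summary statistics rather than single readings. The sketch below assumes the samples, in milliseconds, have already been gathered by whatever probe the team prefers. The load balancer names and numbers are invented for illustration and are not measurements of any real product.

```python
import statistics


def summarize_latency(samples_ms):
    """Return median, 95th percentile and maximum for latency samples in ms."""
    if len(samples_ms) < 2:
        raise ValueError("need at least two samples")
    # quantiles(n=20) returns 19 cut points; the last is the 95th percentile.
    # The inclusive method keeps the estimate within the observed range.
    p95 = statistics.quantiles(samples_ms, n=20, method="inclusive")[-1]
    return {
        "median_ms": statistics.median(samples_ms),
        "p95_ms": p95,
        "max_ms": max(samples_ms),
    }


# Hypothetical samples for two load balancer options, for illustration only.
samples = {
    "mesh-gateway": [12, 14, 13, 15, 90, 13, 12, 14, 13, 16],
    "cloud-lb": [20, 21, 19, 22, 23, 20, 21, 22, 20, 21],
}

for name, values in samples.items():
    print(name, summarize_latency(values))
```

Looking at the tail as well as the median matters here: in the invented data, the first option has the lower median but a much worse worst case.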

Management policies for security and compliance

The implementation of multi-cluster Kubernetes determines which security and compliance tools and practices an IT team must install and enact. Regardless of the implementation, the team must define per-cluster security and compliance policies to protect key resources and application APIs. Use namespaces in both service mesh and multi-cluster federation tools to set security and compliance policies. Service meshes, such as Istio, also provide service-level access policy control for asset protection.

Persistent storage

Several concerns cut across the management issues noted above. Persistent storage is the most important of them, because most containerized applications use it. It can become a problem, particularly when several applications or components share it.

In multi-cluster Kubernetes configurations, it's easy to ignore the impact of shared storage -- which can be considerable. Storage shared across a multi-cluster deployment generates inter-cloud traffic, which increases costs significantly when one or more public clouds are involved. Additionally, many companies think of security and compliance as application issues, rather than database issues. As a result, storage policies might differ among clusters and cause problems once those clusters are linked.
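Policy differences among clusters are easier to address when they're visible before the clusters are linked. As a rough sketch, the following Python compares storage-related policy settings across clusters and reports any setting that isn't identical everywhere. The cluster names and setting keys are hypothetical. A real deployment would pull these values from its own policy tooling.

```python
def find_policy_drift(policies_by_cluster):
    """Return settings whose values differ between clusters.

    policies_by_cluster maps a cluster name to a dict of policy settings.
    A setting missing from a cluster is reported as None for that cluster.
    """
    all_keys = set()
    for settings in policies_by_cluster.values():
        all_keys.update(settings)

    drift = {}
    for key in sorted(all_keys):
        values = {
            cluster: settings.get(key)
            for cluster, settings in policies_by_cluster.items()
        }
        # Compare repr strings so unhashable values such as lists work too.
        if len({repr(value) for value in values.values()}) > 1:
            drift[key] = values
    return drift


# Hypothetical clusters and policy settings, for illustration only.
policies = {
    "on-prem": {"encryption_at_rest": True, "retention_days": 90},
    "cloud-a": {"encryption_at_rest": True, "retention_days": 30},
    "cloud-b": {"encryption_at_rest": True},
}

for setting, values in find_policy_drift(policies).items():
    print(setting, values)
```

In this invented data, encryption matches everywhere, so only `retention_days` is reported, including the cluster where it's missing altogether.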

Try the simplest approaches to multiple clusters before embarking on a true multi-cluster journey. The more closely the clusters' application domains cooperate, the more likely it is that multi-cluster Kubernetes management will become a necessity.