Streamline Kubernetes deployments by using a service mesh

Implementing a service mesh could be well worth the time and effort for organizations looking for ways to manage their Kubernetes and cloud-native application deployments.

You don't strictly need a service mesh -- but it could be the missing piece that gets all your Kubernetes pods working in harmony.

There's still a lot of confusion, however, about what a service mesh does and whether it's worth the trouble. Let's look at the options and review the research on how service meshes fit into Kubernetes deployments.

Application communications

Communication between applications is increasingly important and can happen with or without a service mesh. Applications communicate through API calls, but those APIs are not always consistent with one another. Vendors have different ways to address these API incompatibilities, with many taking multifaceted approaches.

For example, Calisti by the Emerging Tech Group at Cisco -- formerly Service Mesh Manager -- builds upon upstream Istio by adding a UI, API, CLI and tooling to manage complex multi-cluster applications and services across any cloud, from anywhere.

Kong ensures that developers are sending API traffic to the right endpoints and know what the APIs are. Kong Konnect's Service Hub offers a universal service catalog that enables service owners to document and publish API specs and manage the version lifecycle of their APIs. They can also share and manage access to APIs on a per-person or per-team basis for maximum API discoverability and reuse.

For Solo.io, not all API gateways and service meshes are created equal, even if some start with the same open source technology. Solo.io Gloo Gateway offers Open Policy Agent authorization, federated role-based access control, vulnerability scanning and extensible authentication with API keys, JSON Web Tokens, Lightweight Directory Access Protocol (LDAP), OAuth, OpenID Connect or a custom tool. Gloo Mesh federates security policies for consistency, in line with the Federal Information Processing Standards (FIPS).

Regardless of the approach, applications must be able to communicate, whether within a pod or across multiple distributed clouds. But communication is only part of the challenge. Service meshes also play an important role in policy enforcement and canary rollouts. Both are supported by end-to-end observability built on open standards like OpenTracing and OpenTelemetry, which makes it easier for service owners to see issues in new versions of their APIs.
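To make the canary rollout idea concrete, here is a minimal sketch of how a fixed share of traffic could be steered to a new version. It is not taken from any of the vendors named in this article: the function name `pick_version`, the `stable` and `canary` labels and the hash-based bucketing are all assumptions chosen for illustration. Real meshes usually express the same idea declaratively as route weights rather than in application code.

```python
import hashlib


def pick_version(request_id: str, canary_percent: int) -> str:
    """Route a request to "canary" or "stable" (hypothetical labels).

    The request ID is hashed into one of 100 buckets. Buckets below
    canary_percent go to the canary, so the same ID always gets the same
    answer, and raising the percentage only ever adds requests to the canary.
    """
    if not 0 <= canary_percent <= 100:
        raise ValueError("canary_percent must be between 0 and 100")
    digest = hashlib.sha256(request_id.encode("utf-8")).digest()
    bucket = int.from_bytes(digest[:8], "big") % 100
    return "canary" if bucket < canary_percent else "stable"


if __name__ == "__main__":
    # Send roughly 10% of 10,000 synthetic requests to the canary.
    ids = [f"req-{i}" for i in range(10_000)]
    canary_hits = sum(pick_version(i, 10) == "canary" for i in ids)
    print(f"canary share: {canary_hits / len(ids):.1%}")
```

Hashing the request ID instead of drawing a random number keeps routing sticky, which can make it easier to correlate traces for one caller across versions. Whether stickiness is desirable depends on the rollout.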

For external developers, approaches vary across the vendor landscape. Kong offers a customizable developer portal that surfaces the same consumer-focused documentation and API specs published in Service Hub. This is designed to lift the burden of managing access control and API communications. Layered on top of that, Kong can manage API credentials and access control lists using industry standards like Dynamic Client Registration.

Calisti by the Emerging Tech Group at Cisco -- which is built on top of Istio and inherits its open source functionality -- focuses on the modernization of applications moving to the cloud and microservices. As an enterprise Istio platform for DevOps and site-reliability engineers, Calisti focuses on automating lifecycle management, simplifying connectivity and providing security and observability for microservices-based applications. Calisti mesh management also supports canary deployment, circuit breaking and Kafka on Kubernetes.

Integration across the organization's environment requires an understanding of the tools and options. The ecosystem is also a factor with service mesh deployments. Vendors provide an ecosystem of API plugins with integrations created by the open source community. This community adoption also provides buy-in from developers to influence deployment options.

But to address organizations' unique needs, it is important to give customers the flexibility to decide the order in which their plugins will run. Setting reasonable defaults out of the box is preferred, but if a customer wants to change the order in which plugins are executed, all it should take is one additional configuration step.

As an example, if a customer wants to run rate limiting before authentication, the service mesh should be configurable so that they are free to do so. These capabilities make it easy to use GitOps or AIOps workflows to manage the full lifecycle of new API products, from initial release to versioning, through sunsetting or end of life.
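The ordering idea can be sketched in a few lines. The example below is illustrative only and does not reflect any vendor's actual API: the `Plugin` class, the priority numbers, the `order_plugins` and `run_chain` helpers and the toy handlers are all hypothetical. It assumes plugins run in descending priority by default and that a single override list is the "one additional configuration step."

```python
from dataclasses import dataclass
from typing import Callable, Dict, List, Optional

Request = Dict[str, str]  # hypothetical request shape: {"client": ..., "api_key": ...}


@dataclass
class Plugin:
    name: str
    priority: int  # assumed convention: higher priority runs first by default
    handler: Callable[[Request], Optional[str]]  # rejection reason, or None to continue


def order_plugins(plugins: List[Plugin], override: Optional[List[str]] = None) -> List[Plugin]:
    """Return plugins in execution order; names in `override` run first, in that order."""
    default = sorted(plugins, key=lambda p: -p.priority)
    if not override:
        return default
    by_name = {p.name: p for p in plugins}
    unknown = [n for n in override if n not in by_name]
    if unknown:
        raise ValueError(f"unknown plugins in override: {unknown}")
    return [by_name[n] for n in override] + [p for p in default if p.name not in override]


def run_chain(chain: List[Plugin], request: Request) -> List[str]:
    """Run plugins in order and return a trace; stop at the first rejection."""
    trace: List[str] = []
    for plugin in chain:
        trace.append(plugin.name)
        reason = plugin.handler(request)
        if reason is not None:
            return trace + [f"rejected: {reason}"]
    return trace + ["forwarded"]


def authenticate(request: Request) -> Optional[str]:
    # Toy check against a hard-coded key, for illustration only.
    return None if request.get("api_key") == "secret" else "unauthenticated"


def make_rate_limiter(limit: int) -> Callable[[Request], Optional[str]]:
    counts: Dict[str, int] = {}

    def rate_limit(request: Request) -> Optional[str]:
        client = request.get("client", "anonymous")
        counts[client] = counts.get(client, 0) + 1
        return "rate limited" if counts[client] > limit else None

    return rate_limit
```

With the defaults, `authenticate` runs first, so unauthenticated calls never reach the rate limiter. Passing `override=["rate-limit"]` flips the order so that floods of bad credentials are throttled before the authentication step does any work, which is the trade-off the example in the text describes.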

Container adoption

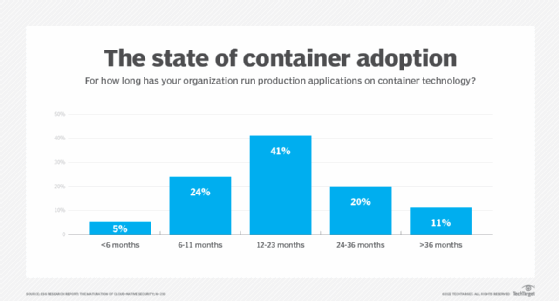

When it comes to container adoption, research indicates that much depends on the vendor's approach, and management requires appropriate insight into the container configuration. Industry vendors provide help with container adoption -- and growth is not slowing down.

One challenge is that cloud-driven containerization can create scaling and complexity issues. Linkerd offers an approach that helps address container scaling and has seen strong adoption growth, mainly due to its focus on simplicity of deployment. A service mesh for large-scale deployments and multi-cluster management does not necessarily have to be complex, and ease of use is the preferred approach for organizations that frequently face new deployments.

Alternatively, Istio provides an extensible and customizable WebAssembly approach to build advanced filters and policies that assist with container growth. The Istio control plane addresses the challenge of achieving consistency in policies and security across single or multiple clusters, whether located on premises, in the private or public cloud, or in any location.

Most often, Istio runs with the open source Envoy proxy deployed as a sidecar within each Kubernetes pod. This enables organizations to add applications and deploy clusters rapidly, with granular levels of security, resilience and scalability. While Istio is Kubernetes native, it can also handle server clusters, VMs and other endpoints outside of Kubernetes while supporting legacy or heritage apps -- such as those running on Simple Object Access Protocol (SOAP) -- that haven't yet been modernized.

Another approach is using Kong as a platform with an API gateway and Kong Kubernetes Ingress Controller. Kong can be deployed as a front-door API gateway and scaled horizontally in Kubernetes and container environments. It can be driven through declarative configuration, either with or without Kubernetes-native custom resource definitions.

Other approaches are valid ways to address this scale and complexity, but organizations must also consider whether to build a service mesh or work with a proven vendor.

Namespace management

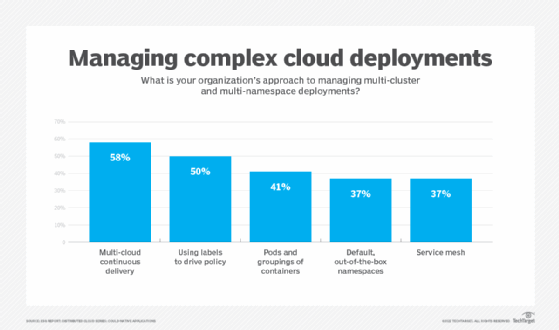

Another challenge organizations run into is namespace management, which tends to surface as they adopt cloud-native approaches. Research from Enterprise Strategy Group (ESG) shows that using a service mesh is one way to overcome these challenges -- or, as the Mandalorian says, "This is the way."

Kubernetes namespace management addresses many underlying issues organizations face regarding cloud, cluster and application team sprawl. Many deployments are operating in multi-cloud and multi-cluster environments, and a service mesh should support connections between zones and meshes as a deployment strategy.

When ESG asked organizations which approaches they employ to manage multi-cluster and multi-namespace deployments, 37% reported using a service mesh. Namespace complexities must be taken into consideration when organizations are delivering cloud-native deployments.

The takeaway

Service meshes do not have to be complex. ESG's research shows that the advantages of using a service mesh outweigh the challenges of deployment, configuration and setup -- and as distributed clouds continue to grow, application communications across Kubernetes clusters will only increase. Using a service mesh provides a streamlined approach to delivering this type of deployment.

Whether you roll your own service mesh or work with a vendor is up to you. But overall, service meshes provide business value to cloud-native application deployments that would otherwise be lost.

ESG is a division of TechTarget.