
5 steps to troubleshoot a virtual machine problem in Azure

If you're wrestling with a virtual machine problem, such as a faulty connection, be sure to check the domain name system and network traffic routing.

Moving server workloads to the cloud presents new and old network troubleshooting challenges. That said, the fundamentals of troubleshooting IP networks remain the same: Start at one end of the OSI stack and work your way to the other.

What's new is that you must also consider the cloud provider's stack, which is mostly invisible to you. Once the frame leaves the guest's virtual interface, you have little control over what happens next.

Furthermore, you lose the last resort of running Wireshark on a switched port analyzer (SPAN) port to work out what's going on. You have to trust that the cloud provider knows what it is doing and that most traffic will arrive at its destination. That still does not rule out misconfiguration, congestion or good old-fashioned cable faults.

For example, consider this Azure scenario: You have a newly deployed virtual machine (VM) on an existing virtual network and new subnet. You can remotely administer it, but you have trouble connecting to other VMs in your resource group. How can you quickly troubleshoot this virtual machine problem in this public cloud-based network?

Check the domain name system

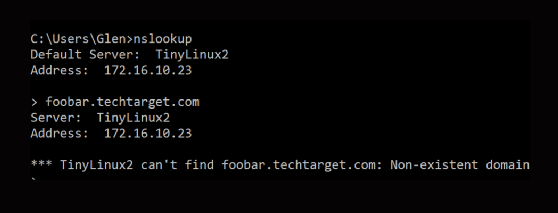

The domain name system (DNS) sits somewhere in the fuzzy top three layers of the OSI stack, but a single command can tell you a lot as you start to diagnose this virtual machine problem. For example, running nslookup www.techtarget.com has three probable outcomes:

- DNS timeout. When you run nslookup or dig, it shows which DNS server it queried by default. If that default is wrong, fix it first. If it is right but unreachable, the problem lies further down the stack.

- Nonexistent domain. This tells you the DNS server is reachable, but it could not find the droid -- or host -- you are looking for. It also tells you some more useful things:

  - Your guest can reach the top of the stack of another host, and that host is sufficiently aware to give you an answer.

  - That host is connected to the internet or to your internal network via ExpressRoute.

- Correct answer. The query returns the expected address, which tells you that your target is resolvable by the DNS server and the immediate routing is correct.
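You can script a rough version of this check. The following is a minimal Python sketch that assumes a Linux guest with a resolv.conf-style file. `default_nameservers` lists the servers the guest is configured to use. On a VM using Azure-provided DNS you would typically expect 168.63.129.16, although distributions running systemd-resolved show the local stub address 127.0.0.53 instead. `classify_lookup` maps the outcomes above onto `getaddrinfo` results. Unlike nslookup, `getaddrinfo` goes through the operating system's resolver (hosts file, caches, search domains), so its answer can differ. The function names and outcome labels are illustrative only.

```python
import socket


def default_nameservers(resolv_conf_text: str) -> list[str]:
    """Return the nameserver addresses listed in resolv.conf-style text."""
    servers = []
    for line in resolv_conf_text.splitlines():
        fields = line.split()
        # Only "nameserver <address>" lines count; comments and other
        # directives such as "search" are ignored.
        if len(fields) >= 2 and fields[0] == "nameserver":
            servers.append(fields[1])
    return servers


def classify_gaierror(err: socket.gaierror) -> str:
    """Map a getaddrinfo error code onto the outcomes described above."""
    nonexistent = {socket.EAI_NONAME}
    # EAI_NODATA is not defined on every platform, so add it only if present.
    if hasattr(socket, "EAI_NODATA"):
        nonexistent.add(socket.EAI_NODATA)
    if err.errno in nonexistent:
        return "nonexistent_domain"
    if err.errno == socket.EAI_AGAIN:
        # "Temporary failure in name resolution": a timeout or server failure.
        return "timeout_or_server_failure"
    return "other_error"


def classify_lookup(hostname: str) -> tuple[str, list[str]]:
    """Resolve hostname with the OS resolver; return (outcome, addresses)."""
    try:
        infos = socket.getaddrinfo(hostname, None, proto=socket.IPPROTO_TCP)
    except socket.gaierror as err:
        return classify_gaierror(err), []
    # Keep each address once, in the order the resolver returned them.
    addresses = list(dict.fromkeys(info[4][0] for info in infos))
    return "resolved", addresses
```

On the guest, you might run `default_nameservers(open("/etc/resolv.conf").read())` and then `classify_lookup("www.techtarget.com")`.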

Open a socket to it

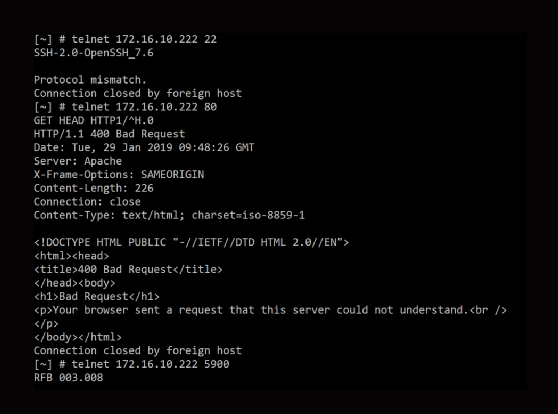

In my view, given the number of virtual hops between devices, Ping's usefulness is often overstated. Ping can tell you that Internet Control Message Protocol (ICMP) traffic gets through between two points, but ICMP is not what your application uses. ICMP rides directly on IP rather than on a Layer 4 transport such as TCP or UDP, so a successful ping probably tells you little about whether your application's traffic will get through.

You can open a TCP socket in various ways, but a telnet client -- such as PuTTY -- is a fast and easy option. Simple Mail Transfer Protocol (SMTP) and Secure Shell (SSH) servers send a recognizable banner as soon as the connection opens. A Hypertext Transfer Protocol (HTTP) server returns recognizable headers once you send it a request. If the socket opens, your problem is likely at the top of the stack; perhaps some application-level access control list (ACL) is in play.
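If no telnet client is installed, a short Python sketch using only the standard library can do the same job. It opens the socket, optionally sends a request (HTTP servers wait for one) and returns whatever the server says first. It also separates a refused connection from a timeout. As a rule of thumb, which you should verify in your own environment, a refusal means a TCP reset came back, so packets reached something that answered. A timeout usually means something dropped them silently. The `server_header` helper pulls the Server line out of an HTTP response so you can check that it names the product you expect. The function names and status strings belong to this sketch and are not a standard API.

```python
import socket
from typing import Optional


def tcp_probe(host: str, port: int, timeout: float = 3.0,
              payload: Optional[bytes] = None) -> tuple[str, str]:
    """Open a TCP socket to host:port and read whatever the server sends first.

    Returns (status, banner), where status is "open", "refused", "timeout"
    or "error: <reason>" and banner is the decoded first chunk of data.
    """
    try:
        sock = socket.create_connection((host, port), timeout=timeout)
    except ConnectionRefusedError:
        # A TCP reset came back: the path works, but nothing accepted the
        # connection on that port.
        return "refused", ""
    except socket.timeout:
        # No answer at all, which is typical of a filter that silently drops.
        return "timeout", ""
    except OSError as err:
        # Anything else, e.g. "No route to host" or a name resolution failure.
        return f"error: {err}", ""

    with sock:
        if payload:
            # HTTP servers stay silent until they receive a request.
            sock.sendall(payload)
        try:
            banner = sock.recv(1024)
        except (socket.timeout, ConnectionResetError):
            banner = b""  # Connected, but the server sent nothing usable.
    return "open", banner.decode("latin-1").strip()


def server_header(banner: str) -> Optional[str]:
    """Return the Server header from an HTTP response banner, if present."""
    head = banner.split("\r\n\r\n", 1)[0]
    for line in head.splitlines()[1:]:  # skip the status line
        name, sep, value = line.partition(":")
        if sep and name.strip().lower() == "server":
            return value.strip()
    return None
```

For example, `tcp_probe("10.0.1.5", 80, payload=b"HEAD / HTTP/1.0\r\n\r\n")` uses a hypothetical address. It should report `"open"` along with the response headers if the web server is reachable. You can then pass the banner to `server_header`.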

You must also ensure the headers make sense. For example, if your Apache server responds with Internet Information Services (IIS) headers or those of a load balancer, something is fundamentally amiss. User Datagram Protocol (UDP) makes this more difficult because it is connectionless. Nmap and similar tools can probe a UDP port, but they are unlikely to be in your standard image.

Alternatively, Ping may be useful here. If that fails, try a TCP connection to the same host, because most of the same routes, ACLs and network configuration will apply.
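For UDP, you can write a crude stand-in for an Nmap UDP scan with the standard library. This sketch assumes an IPv4 target. It sends one datagram and borrows Nmap's state names:

- A reply means the port is open.
- An ICMP port-unreachable means it is closed. The OS reports this as a refused connection on a connected UDP socket.
- Silence is ambiguous, so the result is "open|filtered". Many UDP services ignore a payload they don't understand, and a filter such as an NSG drops datagrams without comment.

The default payload is an arbitrary assumption. A real service usually needs a protocol-specific request before it answers.

```python
import socket


def udp_probe(host: str, port: int, payload: bytes = b"\n",
              timeout: float = 2.0) -> str:
    """Rough IPv4 UDP port check, loosely modelled on Nmap's UDP scan states.

    "open"          - the service sent a reply
    "closed"        - an ICMP port-unreachable came back, surfaced by the OS
                      as ConnectionRefusedError on a connected UDP socket
    "open|filtered" - silence: the port may be open and ignoring the
                      payload, or something dropped the datagram
    """
    with socket.socket(socket.AF_INET, socket.SOCK_DGRAM) as sock:
        sock.settimeout(timeout)
        # connect() on UDP sends nothing; it fixes the peer address so that
        # ICMP errors for that peer are reported back to this socket.
        sock.connect((host, port))
        try:
            sock.send(payload)
            sock.recv(1024)
            return "open"
        except ConnectionRefusedError:
            return "closed"
        except socket.timeout:
            return "open|filtered"
```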

So DNS works, and apparently the rest of the infrastructure isn't borked. Where to next as you tackle this virtual machine problem?

Check user-defined routes and effective routes

Azure can pull some fancy tricks on the virtual wire and bend traffic. At the bottom of the virtual machine's network interface pane, in the Support + troubleshooting section, is Effective routes. For each address prefix, it lists the next hop that traffic will be sent to after it leaves the interface, such as a virtual network appliance or another device.

Part of the weirdness of public cloud networking is that a virtual machine's own routing table is almost irrelevant. Azure makes the forwarding decision in its own fabric, and user-defined routes (UDRs) can swoop in and override its default system routes. This is especially confusing when troubleshooting a virtual network appliance that is supposed to be making forwarding decisions itself. Fortunately, this is easy enough to check and verify.
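Azure documents how it picks among overlapping routes. The longest matching prefix wins. When prefixes tie, a user-defined route beats a BGP route, which in turn beats a system route. That is why a single UDR for 0.0.0.0/0 can quietly send all internet-bound traffic through an appliance. The following Python sketch models that selection so you can reason about an effective routes table. The `Route` class and the "user"/"bgp"/"system" source labels are hypothetical names for this sketch, not the Azure SDK's. The portal's table is also available from the Azure CLI with `az network nic show-effective-route-table`.

```python
import ipaddress
from dataclasses import dataclass
from typing import Optional

# Tie-break for equal-length prefixes, following Azure's documented order:
# user-defined route first, then BGP, then system route.
SOURCE_RANK = {"user": 0, "bgp": 1, "system": 2}


@dataclass
class Route:
    prefix: str                        # address prefix, e.g. "10.1.0.0/16"
    next_hop_type: str                 # e.g. "VnetLocal", "Internet", "VirtualAppliance"
    source: str                        # "user", "bgp" or "system" (sketch labels)
    next_hop_ip: Optional[str] = None  # set for VirtualAppliance hops


def effective_route(routes: list[Route], destination: str) -> Optional[Route]:
    """Pick the route used for destination: longest prefix, then source rank."""
    dest = ipaddress.ip_address(destination)
    matches = [r for r in routes if dest in ipaddress.ip_network(r.prefix)]
    if not matches:
        return None
    return min(
        matches,
        key=lambda r: (-ipaddress.ip_network(r.prefix).prefixlen,
                       SOURCE_RANK[r.source]),
    )
```

With a system 0.0.0.0/0 route to the internet and a UDR for 0.0.0.0/0 pointing at an appliance, an internet destination resolves to the UDR. A longer system prefix, such as the virtual network's own range, still wins for local addresses.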

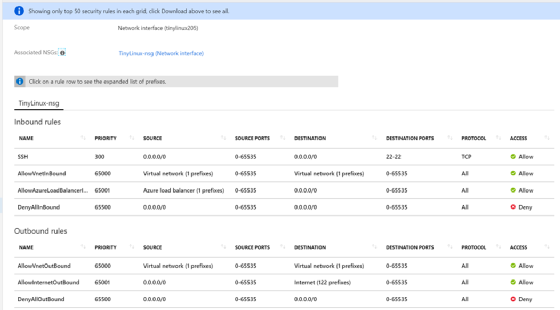

Check the network security groups

Network security groups (NSGs) are Layer 3 and Layer 4 ACLs: they filter traffic by IP address, port and protocol, and they provide powerful security features. They are also a complete pain.

Adding each application to an NSG by port can be a tedious process. Realizing you moved the server's HTTP port to 8080 and didn't update the NSG attached to the interface is likely to elicit some frustration, and typos in NSGs are to be expected.
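NSG rules are evaluated in priority order, lowest number first, and processing stops at the first rule that matches. The sketch below models this for inbound traffic to show how the 8080 mistake plays out. It is deliberately simplified, and the `NsgRule` class and function names are hypothetical. It ignores service tags and destination addresses. It also leaves out the real default rules AllowVnetInBound and AllowAzureLoadBalancerInBound and models only the final DenyAllInBound. Because AllowVnetInBound admits traffic from within the virtual network by default, NSG-wise, traffic between VMs in the same virtual network is allowed unless a custom rule denies it. An NSG can also be attached to the subnet as well as to the interface, and inbound traffic must be allowed by both. `az network nic list-effective-nsg` shows the combined result.

```python
import ipaddress
from dataclasses import dataclass


@dataclass
class NsgRule:
    name: str
    priority: int       # custom rules use 100-4096; lower numbers are checked first
    access: str         # "Allow" or "Deny"
    protocol: str       # "Tcp", "Udp" or "*" (labels for this sketch)
    source_prefix: str  # a CIDR block or "*"; service tags are not modelled
    dest_ports: str     # a single port "80", a range "8000-8999" or "*"


def port_matches(spec: str, port: int) -> bool:
    """True if port falls within a port spec such as "80", "8000-8999" or "*"."""
    if spec == "*":
        return True
    low, _, high = spec.partition("-")
    return int(low) <= port <= int(high or low)


def evaluate_inbound(rules: list[NsgRule], protocol: str, source_ip: str,
                     dest_port: int) -> tuple[str, str]:
    """Return (access, rule name) for the first matching rule by priority."""
    src = ipaddress.ip_address(source_ip)
    for rule in sorted(rules, key=lambda r: r.priority):
        if rule.protocol not in ("*", protocol):
            continue
        if (rule.source_prefix != "*"
                and src not in ipaddress.ip_network(rule.source_prefix)):
            continue
        if not port_matches(rule.dest_ports, dest_port):
            continue
        return rule.access, rule.name  # first match wins; evaluation stops
    # Nothing matched: stand-in for Azure's default DenyAllInBound (65500).
    return "Deny", "DenyAllInBound"
```

With only an allow rule for TCP 80, a request from outside the virtual network to port 8080 falls through to the final deny. This is the sort of silent drop that shows up as a timeout in a socket test.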

Try the oldest trick in the book

Have you turned it off and on again? Amazingly, this still works, sometimes. Merely rebooting the guest does not flush out all the cloud state associated with it. Stopping and deallocating the VM and then cold booting it forces a reinitialization of the underlying communication paths, and it will probably move the VM to a new host. I have known cold booting to fix transient performance or packet loss gremlins. Problems that can be fixed this way are rare, but they are worth ruling out.

Most of the above also applies to other cloud providers -- except UDRs -- though each has its own foibles. The good news is that the so-called old skills are still useful in the public cloud. However, you should also consider the network functions the provider runs at, and below, what appears to the guest as Layer 2.