
How to ensure a scalable SASE architecture

Don't assume all SASE architecture is the same, especially when it comes to scalability. Enterprises should consider distance to vendor PoPs, office locations and agent onboarding.

We are in an era where the traditional perimeter -- offices, firewalls, VPN concentrators, switches, routers and high-availability setups -- is slowly dissolving. Work is shifting toward remote workforces and a work-from-home status quo, forced by the COVID-19 pandemic and an accelerated transition to the gig economy.

We face a tectonic shift in the IT and enterprise security space, and enterprises need an alternative, trusted and scalable way to secure access to digital assets in the workplace. These circumstances create an opportunity to fill an increasing market gap with lightweight, innovative options.

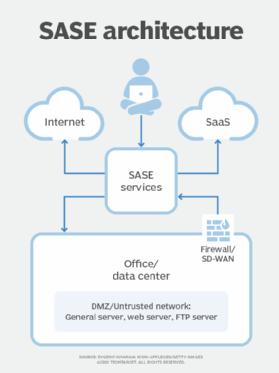

This is where Secure Access Service Edge (SASE) comes in. First, let's set some groundwork for SASE architecture.

Because SASE is all about cloud transformation and as-a-service models, customers need to trust the provider and delegate the operation, security, service-level agreements (SLAs), redundancy and scalability to the vendor's platform, including compliance with any regulations they might follow.

SASE platforms comprise the four major parts below:

- cloud access security broker (CASB) or data leak prevention;

- cloud web gateway or firewall as a service for outbound traffic control;

- zero-trust network access for remote access; and

- software-defined WAN (SD-WAN) that, in many cases, replaces the physical link with a virtual one.

The first three parts of SASE architecture are services, while the last one, SD-WAN, is responsible for bringing the traffic to those services.

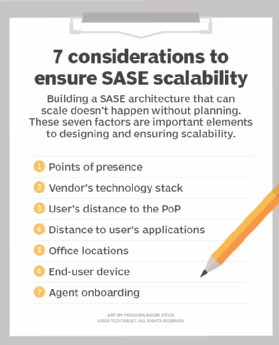

Building scalable SASE architecture

But how do vendors build SASE platforms to achieve the required scalability? Let's review seven factors that support scalable SASE architecture.

1. Points of presence

Vendors use points of presence (PoPs) to distribute their data centers geographically. Vendors can own private PoPs or use public cloud services, such as AWS, Azure and Google Cloud Platform. Each type provides different benefits. For example, in a private data center, the SASE vendor decides on computing, hardware, virtualization technology, cost and SLAs. In a public cloud, the SASE vendor is bound to the public cloud provider's SLAs and type of virtualization technology, and is limited to the provider's locations.

2. Vendor's technology stack

In most cases, SASE vendors use two mainstream technology stacks:

- cloud VMs, which can scale both vertically using larger instance sizes of compute and horizontally via load sharing of multiple smaller instances; and

- containers to scale per customer.

SASE vendors that play in the CASB space and need to issue API connections to SaaS vendors are likely to use the container method.
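
The difference between vertical and horizontal scaling can be illustrated with a minimal sketch. The instance sizes and throughput figures below are hypothetical and do not describe any particular vendor or cloud provider.

```python
import math

# Hypothetical instance sizes and the traffic (in Mbps) each one can inspect.
INSTANCE_CAPACITY_MBPS = {"small": 500, "medium": 1000, "large": 2000}


def scale_vertically(required_mbps):
    """Return the smallest single instance size that covers the load, or None."""
    by_capacity = sorted(INSTANCE_CAPACITY_MBPS.items(), key=lambda item: item[1])
    for size, capacity in by_capacity:
        if capacity >= required_mbps:
            return size
    return None  # No single instance is large enough.


def scale_horizontally(required_mbps, size="small"):
    """Return how many instances of one size must share the load."""
    return math.ceil(required_mbps / INSTANCE_CAPACITY_MBPS[size])


print(scale_vertically(800))     # medium
print(scale_vertically(5000))    # None
print(scale_horizontally(1200))  # 3
```

In this sketch, vertical scaling stops working once the load exceeds the largest instance, while horizontal scaling keeps adding instances behind a load-sharing layer.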

After inspection, vendors either forward the traffic from their data center directly to the target or carry it over a backbone. Certain vendors use their global architecture backbone to route the traffic across the web and optimize the routing, similar to the way ISPs use their internet backbones. With this method, the vendor handles the traffic from the moment a user hits the closest PoP. This approach may decrease the latency and improve the speed of certain operations, such as file transfers -- depending on traffic destination -- but it could also complicate routing and scalability of that functionality.

Each vendor provides its own SLAs and speed, and it is the customer's responsibility to review the SLA and make sure it aligns with their application requirements.

3. User's distance to the PoP

In traditional network connectivity for outbound browsing, a user's traffic is routed directly to the destination after it traverses the firewall or web gateway on company premises. With a cloud-based service, a user's traffic is first forwarded to the SASE vendor's data center -- aka PoP -- and only then proceeds to the destination. Therefore, the network proximity of these PoPs to the user's location is essential for the best latency and speed. The routing optimization described in the previous section can also improve latency and speed.
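
One way to reason about PoP proximity is to compare measured round-trip times from a user's location to each candidate PoP. The sketch below assumes such measurements are already available; the PoP names and values are made up for illustration, and real agents may use other selection methods.

```python
def closest_pop(rtt_ms_by_pop):
    """Return the name of the PoP with the lowest measured round-trip time."""
    if not rtt_ms_by_pop:
        raise ValueError("no PoP measurements available")
    return min(rtt_ms_by_pop, key=rtt_ms_by_pop.get)


# Hypothetical round-trip times, in milliseconds, from one user location.
measurements = {"frankfurt": 18.0, "london": 11.5, "new-york": 82.0}
print(closest_pop(measurements))  # london
```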

4. Distance to user's applications

One of the popular use cases for SASE is access to Microsoft 365. Many SASE providers locate their backbone infrastructure in the same physical data centers as the big SaaS companies, such as Microsoft, Salesforce and others. This strategy enables SASE providers to offer faster performance by reducing the number of network hops and optimizing the routing paths to SaaS locations from their PoPs. This process routes traffic from a user's computer to the vendor's closest PoP and then forwards it directly to the SASE vendor's backbone that is in close proximity to the service.
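
The effect of this path can be approximated by adding up the latency of each leg and comparing the result with a direct route over the public internet. All figures below are hypothetical; actual numbers depend on the vendor, the user's location and the SaaS provider.

```python
def path_latency_ms(legs_ms):
    """Return the total one-way latency of a path from its per-leg latencies."""
    return sum(legs_ms)


def backbone_saving_ms(direct_legs_ms, backbone_legs_ms):
    """Return how many milliseconds the backbone path saves (negative if slower)."""
    return path_latency_ms(direct_legs_ms) - path_latency_ms(backbone_legs_ms)


# Hypothetical legs: user -> SaaS directly over the public internet.
direct = [95.0]
# Hypothetical legs: user -> closest PoP, PoP -> backbone node near the SaaS, node -> SaaS.
via_backbone = [12.0, 40.0, 3.0]

print(backbone_saving_ms(direct, via_backbone))  # 40.0
```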

5. Office locations

Customers can connect an office to a SASE provider in a number of ways. We are going to focus on the main three.

- Generic Routing Encapsulation and IPsec tunnels from existing routers and firewalls. These devices connect over tunnels to two SASE PoP locations to achieve high availability. Automatic failover of tunnels and the number of simultaneous tunnels depend on local hardware capabilities.

- SD-WAN hardware devices provided to the customer by SASE vendors for better connectivity and availability. Some SASE vendors that don't have their own dedicated hardware will form partnerships with SD-WAN providers and let the users decide which hardware they want to use.

- Proxy chaining. This mode is used when the customer already has a web proxy service and prefers to forward the traffic to the SASE vendor. Proxy chaining might be used in environments where internal routing has different requirements and complexities involved.
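
The high-availability idea behind connecting an office to two PoPs can be sketched as a simple priority-based failover. The `Tunnel` class, its fields and the PoP names are hypothetical; on real routers and firewalls, failover is handled by the device's own tunnel monitoring features.

```python
from dataclasses import dataclass


@dataclass
class Tunnel:
    name: str      # Local label for the tunnel.
    pop: str       # SASE PoP the tunnel terminates on.
    healthy: bool  # Result of the most recent health probe.


def pick_active_tunnel(tunnels):
    """Return the first healthy tunnel in priority order, or None if all are down."""
    for tunnel in tunnels:
        if tunnel.healthy:
            return tunnel
    return None


# The primary tunnel is down, so traffic should fail over to the secondary PoP.
office_tunnels = [
    Tunnel(name="primary", pop="pop-a", healthy=False),
    Tunnel(name="secondary", pop="pop-b", healthy=True),
]
active = pick_active_tunnel(office_tunnels)
print(active.pop if active else "no tunnel available")  # pop-b
```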

6. End-user device

Customers have several options by which an end user can connect to the SASE architecture, including the following three approaches:

- Proxy auto-configuration (PAC). PAC determines whether web browser requests go directly to the destination or whether the traffic is forwarded to a SASE vendor.

- An agent. Agents are a common method these days, as they provide better traffic control options, support multiple protocols -- therefore providing better scalability -- and offer a more generic approach. An agent can also incorporate several other controls, such as device health and compliance checks before connectivity, logging and reporting. Some vendors also use the agent cache for speed optimization.

- Remote access. Remote access architecture uses a similar mechanism to outbound browsing by using reverse proxy or an agent for the connectivity. Other options don't require an agent to be installed and instead use a browser's native capabilities to connect. In this case, end users log in to the SASE portal and can choose the application they want to access after going through the authentication and authorization process.
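
The decision a PAC file makes can be sketched as follows. A real PAC file is written in JavaScript and exposes a `FindProxyForURL(url, host)` function that returns strings such as `DIRECT` or `PROXY host:port`; the Python below only mirrors that decision logic. The proxy address and the bypass patterns are hypothetical.

```python
from fnmatch import fnmatch

# Hypothetical SASE proxy endpoint, in the string format a PAC file returns.
SASE_PROXY = "PROXY proxy.sase.example:8080"

# Hypothetical hosts that should bypass the SASE vendor and go direct.
BYPASS_PATTERNS = ["localhost", "*.corp.example"]


def find_proxy_for_host(host):
    """Return "DIRECT" for bypassed hosts, otherwise the SASE proxy string."""
    host = host.lower()
    for pattern in BYPASS_PATTERNS:
        if fnmatch(host, pattern):
            return "DIRECT"
    return SASE_PROXY


print(find_proxy_for_host("intranet.corp.example"))  # DIRECT
print(find_proxy_for_host("www.example.org"))        # PROXY proxy.sase.example:8080
```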

7. Agent onboarding

To simplify the distribution of an agent to remote endpoints, customers can use their existing management tools, such as mobile device management, Endpoint Manager, Group Policy and others. Most SASE providers supply an agent that can be installed remotely, silently and preferably with no reboot required.

One option is to invite users to download the app via a link, email or other messaging system. Another popular approach is to install the agent as part of a golden image. For PAC files, onboarding is done mainly via configuration management systems.