What is Ceph?

Ceph is an open source, software-defined storage platform that provides object, block and file storage in a single unified system for enterprise use. Its highly scalable architecture offers reliable storage for organizations' growing data volumes.

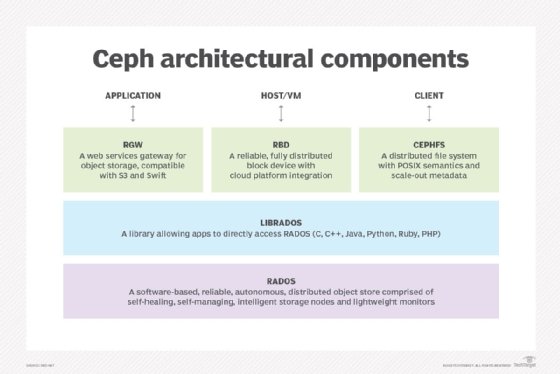

Storage types supported by Ceph

Ceph delivers object, block and file storage from a single, unified platform.

Ceph object storage is accessible through RESTful interfaces compatible with the Amazon Simple Storage Service (S3) and OpenStack Swift APIs, as well as through a native API (librados) for integrating directly with software applications. The service includes a robust set of security and tiering features. In addition, the cluster's native object storage interface supports asynchronous communication and gives applications direct access to the objects in the cluster.
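Because the gateway speaks the S3 protocol, ordinary S3 client code can talk to Ceph. The sketch below is a minimal illustration, not an official recipe: it assumes the third-party boto3 library, and the endpoint URL, credentials, bucket and key names are hypothetical placeholders. It also assumes the bucket already exists.

```python
"""Minimal sketch: S3-style access to a Ceph Object Gateway (RGW).

Assumptions (hypothetical, not from the article): the endpoint URL,
credentials, bucket and key names are placeholders, and the bucket
already exists.
"""
from typing import Any


def put_and_get(s3: Any, bucket: str, key: str, body: bytes) -> bytes:
    """Upload an object, then read it back with standard S3 calls.

    `s3` is any object exposing boto3's S3 client methods
    put_object() and get_object().
    """
    s3.put_object(Bucket=bucket, Key=key, Body=body)
    response = s3.get_object(Bucket=bucket, Key=key)
    return response["Body"].read()  # Body is a streaming object in boto3


def make_rgw_client(endpoint_url: str, access_key: str, secret_key: str) -> Any:
    """Create a boto3 S3 client aimed at an RGW endpoint instead of AWS."""
    import boto3  # third-party; imported here so the module loads without it

    return boto3.client(
        "s3",
        endpoint_url=endpoint_url,  # hypothetical, e.g. "http://rgw.example.internal:8080"
        aws_access_key_id=access_key,
        aws_secret_access_key=secret_key,
    )
```

A call such as `put_and_get(make_rgw_client(url, key, secret), "demo-bucket", "hello.txt", b"hi")` would round-trip an object. The only Ceph-specific detail is `endpoint_url`; the rest is the same code an application would use against AWS, which is the practical benefit of S3 compatibility.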

Ceph RADOS Block Device (RBD) provides high scalability and performance by distributing data and workload across all available devices. To do this, RBD stripes each virtual disk image across many objects in the Ceph storage cluster, so a single block device can deliver better performance than any one server could on its own. RBD images can also be asynchronously mirrored to remote Ceph clusters for disaster recovery or backup. These features make RBD well suited to virtualization and to public or private cloud computing environments.
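To make striping concrete, the following sketch maps a byte offset in a block device image to the RADOS object that holds it, using Ceph's stripe unit, stripe count and object size parameters. It is an illustrative model only; the defaults assume 4 MiB objects with no extra striping (stripe unit equal to object size, stripe count of 1), and the function and type names are hypothetical.

```python
"""Sketch: locating the RADOS object behind an offset in a striped image.

Assumed defaults: 4 MiB objects, stripe_unit == object_size and
stripe_count == 1. Names here are illustrative, not a Ceph API.
"""
from typing import NamedTuple

MiB = 1024 * 1024


class Extent(NamedTuple):
    object_no: int   # index of the backing RADOS object
    object_off: int  # byte offset inside that object


def locate(offset: int, stripe_unit: int = 4 * MiB, stripe_count: int = 1,
           object_size: int = 4 * MiB) -> Extent:
    if object_size % stripe_unit:
        raise ValueError("object_size must be a multiple of stripe_unit")
    block_no = offset // stripe_unit            # which stripe unit overall
    stripe_no = block_no // stripe_count        # which stripe (row) it is in
    stripe_pos = block_no % stripe_count        # which object within the object set
    units_per_object = object_size // stripe_unit
    object_set = stripe_no // units_per_object  # which group of stripe_count objects
    object_no = object_set * stripe_count + stripe_pos
    object_off = (stripe_no % units_per_object) * stripe_unit + offset % stripe_unit
    return Extent(object_no, object_off)
```

With the defaults, `locate(10 * MiB)` falls 2 MiB into object 2. With `stripe_unit=1 * MiB, stripe_count=4`, consecutive 1 MiB chunks rotate across four objects, so sequential I/O is spread over several OSDs at once.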

Ceph File System (CephFS) is a file system offered as a service that complies with the Portable Operating System Interface (POSIX). It stores its data in a Ceph storage cluster, just as Ceph block storage and Ceph object storage do. CephFS keeps file metadata in a RADOS pool separate from file data and serves it through a resizable cluster of metadata servers (MDS), so metadata operations don't overburden the rest of the storage cluster and file services stay fast. CephFS provides high availability and high scalability.
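The split between metadata and data can be pictured by listing which data-pool objects back a file. This sketch assumes the default file layout (4 MiB objects, stripe count of 1) and the `<inode in hex>.<object index as 8 hex digits>` naming used for CephFS data objects; the inode number in the example is hypothetical. Names, permissions and directory entries are not in these objects, since the MDS keeps them in the metadata pool.

```python
"""Sketch: naming the data-pool objects that hold a CephFS file's bytes.

Assumptions: default layout (4 MiB objects, stripe_count 1) and the
"<inode hex>.<index as 8 hex digits>" object naming; the inode below
is hypothetical.
"""
MiB = 1024 * 1024


def data_objects(inode: int, file_size: int, object_size: int = 4 * MiB) -> list[str]:
    """Return the names of the data objects covering `file_size` bytes."""
    count = -(-file_size // object_size)  # ceiling division
    return [f"{inode:x}.{index:08x}" for index in range(count)]


# A hypothetical 10 MiB file with inode 0x10000000000 spans three objects.
print(data_objects(0x10000000000, 10 * MiB))
```

Because object names derive from the inode number and offset, a client that knows a file's inode and layout can address file data directly in RADOS and only needs the MDS for metadata operations.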

Ceph architecture and how it works

Ceph is built on top of the Reliable Autonomic Distributed Object Store (RADOS), whose storage nodes are described as intelligent, self-healing and self-managing. These nodes communicate with each other to dynamically replicate and redistribute data. RADOS relies on these intelligent nodes to secure stored data and to serve data to clients, which are external programs that use a Ceph cluster to store and replicate data. A Ceph storage cluster based on RADOS can contain thousands of storage nodes.

This intelligent distributed design provides a flexible, scalable and reliable way to store and access data. Because Ceph manages all storage as objects within logical storage pools in RADOS, enterprise users can distribute data throughout a cluster as needed, regardless of whether it arrives as object, block or file data.

Ceph storage clusters

A Ceph storage cluster is a collection of Ceph monitors, Ceph managers, Ceph metadata servers (MDS) and Ceph object storage daemons (OSDs). These elements work together to store and replicate data used by applications, Ceph users and Ceph clients. The clusters are designed to run on commodity hardware and to place data using an algorithm named Controlled Replication Under Scalable Hashing (CRUSH). The algorithm distributes data evenly across the cluster and lets all cluster nodes retrieve data quickly without any centralized bottlenecks.

Ceph OSD daemons and cluster clients also use CRUSH to compute where data is located. Because clients don't have to consult a central lookup table to find this information, that potential bottleneck is avoided.
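The idea of computing a location instead of looking it up can be shown with weighted rendezvous hashing. This is a simplified stand-in, not CRUSH itself: it borrows the weighted "draw" idea from CRUSH's straw2 buckets but ignores failure domains, CRUSH rules and retry logic. The placement group ID and OSD names are hypothetical.

```python
"""Sketch: deterministic, table-free placement of a placement group.

Weighted rendezvous hashing, loosely modeled on CRUSH's straw2 draw.
This is NOT the real CRUSH algorithm; IDs below are hypothetical.
"""
import hashlib
import math


def _unit_hash(*parts: object) -> float:
    """Deterministic hash of the inputs mapped into the open interval (0, 1)."""
    digest = hashlib.sha256("/".join(map(str, parts)).encode()).digest()
    value = int.from_bytes(digest[:8], "big")
    return (value + 1) / (2**64 + 1)


def place_pg(pg_id: str, osd_weights: dict[str, float], replicas: int = 3) -> list[str]:
    """Pick `replicas` distinct OSDs for a PG; weights must be positive."""
    def score(osd: str) -> float:
        # straw2-style draw: ln(u) / weight, so heavier OSDs tend to score higher
        return math.log(_unit_hash(pg_id, osd)) / osd_weights[osd]

    ranked = sorted(osd_weights, key=score, reverse=True)
    return ranked[:replicas]


osds = {f"osd.{i}": 1.0 for i in range(8)}
print(place_pg("1.2f", osds))  # any client with the same map gets the same answer
```

Every client that holds the same OSD list and weights computes the same answer, so no central server has to be asked. Removing an OSD only changes placements that included it, which mirrors how CRUSH limits data movement when the cluster changes.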

A Ceph storage cluster receives data from Ceph clients in one of four ways:

- Through a Ceph block device.

- Through Ceph object storage (the RADOS Gateway).

- Through CephFS.

- Through a custom implementation built on librados.

To write data to or read data from the cluster, a client must first contact a Ceph monitor to obtain a current copy of the cluster map. Regardless of how the data comes in, the cluster stores it as RADOS objects, each of which resides on an OSD. OSD daemons handle read, write and replication operations on the storage drives, while the clients determine the semantics of the object data.
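A custom client usually talks to RADOS through librados. The sketch below assumes the `python3-rados` binding; the configuration path, pool name and object name are hypothetical, and the client needs a keyring with access to that pool. The I/O step is split into its own function so it can be exercised without a live cluster.

```python
"""Sketch: a custom client using librados' Python binding (python3-rados).

Assumptions (hypothetical): the config path, the pool "demo-pool" and
the object name; the client must have a keyring with pool access.
"""
from typing import Any


def write_then_read(ioctx: Any, name: str, payload: bytes) -> bytes:
    """Store `payload` as a RADOS object and read it back."""
    ioctx.write_full(name, payload)        # replaces the whole object
    return ioctx.read(name, len(payload))  # read() returns at most `length` bytes


def demo(conffile: str = "/etc/ceph/ceph.conf", pool: str = "demo-pool") -> bytes:
    import rados  # python3-rados binding, imported lazily

    cluster = rados.Rados(conffile=conffile)
    cluster.connect()  # contacts the monitors and fetches the cluster map
    try:
        ioctx = cluster.open_ioctx(pool)
        try:
            return write_then_read(ioctx, "hello-object", b"Hello, RADOS")
        finally:
            ioctx.close()
    finally:
        cluster.shutdown()
```

Note that `connect()` is where the monitor contact happens; after that, reads and writes go straight to the OSDs that CRUSH selects, with no gateway in between.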

A Ceph cluster runs several types of daemons, including the following:

- Ceph monitor. It maintains a map of the cluster state, called the cluster map, which itself consists of five maps: the monitor map, OSD map, placement group (PG) map, MDS map and CRUSH map. The monitor also provides the cluster map to Ceph clients, keeps track of failed and active cluster nodes, and manages authentication. A Ceph cluster can function with a single monitor. However, that monitor is then a single point of failure: if it goes down, Ceph clients cannot read data from or write data to the cluster.

- Ceph OSD. It stores data as objects in a flat namespace rather than in a hierarchy of directories. It also checks its own state and the state of other OSDs and sends this information back to Ceph monitors.

- Ceph manager. It acts as the endpoint for monitoring (including external monitoring systems), orchestration and plug-in modules. It also keeps track of runtime metrics for the cluster and hosts the web-based Ceph Dashboard.

- MDS. The metadata server manages file metadata when CephFS is used to provide file services.

- RADOS Gateway. Also known as the Ceph Object Gateway, it provides a RESTful gateway that exposes interfaces compatible with the Amazon S3 API and the OpenStack Swift API.

All of these daemons can run on the same set of servers, and users can interact with them directly as their requirements dictate.
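Ceph monitors agree on the cluster map using Paxos, which requires a strict majority of monitors to form a quorum. That is why a single monitor is a single point of failure. The small helper below (a hypothetical name, for illustration) computes the quorum size and how many monitor failures a given monitor count can tolerate.

```python
def monitor_quorum(monitors: int) -> tuple[int, int]:
    """Return (quorum size, monitor failures tolerated) for a monitor count.

    Assumes a strict majority is required, as with Paxos-based Ceph monitors.
    """
    if monitors < 1:
        raise ValueError("a cluster needs at least one monitor")
    quorum = monitors // 2 + 1
    return quorum, monitors - quorum


for n in (1, 3, 4, 5):
    print(n, monitor_quorum(n))
```

One monitor tolerates no failures, three tolerate one and five tolerate two. A fourth monitor raises the quorum size to three without tolerating any more failures than three monitors do, which is why odd monitor counts are the common choice.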

Benefits of Ceph

Ceph is a highly scalable software-defined storage solution for modern enterprises. It can meet organizations' storage needs at almost any data volume, including the petabyte or exabyte level. Ceph also provides advanced fault management because its software abstraction layer decouples data from the physical storage devices that hold it. For these reasons, Ceph is well suited to microservice- and container-based workloads in the cloud, on OpenStack and in Kubernetes, as well as to big data applications.

Another advantage of Ceph is that it supports multiple storage types within a unified system. With interfaces for different storage types in one cluster, organizations can minimize hardware costs and reduce the management burden.

Ceph divides its logical storage pools into PGs. Each object is assigned to a PG by hashing its name, and the CRUSH algorithm then maps each PG to a set of OSDs. This design enables efficient scaling to very large clusters, as well as seamless cluster rebalancing and fault recovery.
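In Ceph, a client first hashes an object's name to choose a PG within the pool, and CRUSH then maps that PG to OSDs. The sketch below models only the first step. It is a simplification: `zlib.crc32` stands in for Ceph's rjenkins hash and plain modulo stands in for Ceph's "stable mod", so the resulting PG IDs will not match a real cluster. Pool ID 3 and the object names are hypothetical.

```python
"""Sketch: assigning objects to placement groups by hashing their names.

Stand-ins: zlib.crc32 replaces Ceph's rjenkins hash and plain modulo
replaces its "stable mod"; IDs will not match a real cluster.
"""
import zlib
from collections import Counter


def object_to_pg(pool_id: int, object_name: str, pg_num: int) -> str:
    """Map an object to a PG ID in Ceph's '<pool>.<pg in hex>' style."""
    seed = zlib.crc32(object_name.encode("utf-8"))
    return f"{pool_id}.{seed % pg_num:x}"


def pg_histogram(pool_id: int, names: list[str], pg_num: int) -> Counter:
    """Count how many of `names` land in each PG of the pool."""
    return Counter(object_to_pg(pool_id, name, pg_num) for name in names)


names = [f"obj-{i}" for i in range(1000)]
print(pg_histogram(3, names, 32).most_common(3))
```

Because a pool has a fixed, comparatively small number of PGs while it may hold millions of objects, CRUSH only has to place and rebalance PGs rather than individual objects.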

History of Ceph

Sage Weil is credited with the creation of Ceph as part of a doctorate project at the University of California, Santa Cruz. The project was the culmination of years of research by professors and graduate students at UC Santa Cruz. The name Ceph stems from cephalopod, a class of mollusks that includes cuttlefish, octopi and squids. The Ceph open source project started in 2004, and the software became available worldwide under an open source license in 2006.

After completing his doctorate, Weil worked on the open source Ceph project with the support of DreamHost, a Los Angeles-based web hosting company he co-founded, and a small team of engineers. In 2012, they formed a startup named Inktank Storage to provide a commercially supported version of Ceph for enterprise users.

Red Hat acquired Inktank in 2014 for $175 million, and Weil assumed the role of Ceph principal architect at Red Hat. Red Hat sells subscription-based, commercially supported versions of Ceph as Red Hat Ceph Storage, which is also available bundled with Red Hat OpenStack Platform for private cloud architectures. Vendors offering hardware for use with Ceph software include Dell, Cisco, Lenovo, Supermicro and QCT.

As of 2024, Weil is the CEO of Civic Media, a Wisconsin-based radio network.
