AWS re:Invent 2025: Agentic AI leaves the POC graveyard

These five announcements from re:Invent are aimed at helping developers reclaim productivity by streamlining overhead cloud infrastructure and DevOps tasks with AI.

LLMs are a commodity.

The AI race is now all about a vendor’s end-to-end platform becoming the natural choice for enterprises to scale AI agents across business units and hierarchy levels.

AWS re:Invent 2025 was laser-focused on addressing the potential roadblocks customers face when rapidly rolling out agentic applications to production. Rather than leaving teams to worry that their project will become another addition to the infamous POC graveyard, AWS wants to equip corporate staff with the tools and confidence to identify where AI can help with their daily tasks and benefit the enterprise overall.

AI for absolutely everyone

A baker walks into her bakery at 4 a.m. and wonders how many of each of her baked goods to make on that specific day. Instead of guessing -- and risking wasted food, frustrated customers and lower revenue -- she tasks an AI agent to look at the sales history by weekday, while also probing for the potential effect of the season, the weather, local events and pre-orders. The agent notices that more cars will come past the bakery due to local road closures, but bad weather will lower the share of drivers getting out of the car and walking into the store. From now on, our baker schedules the AI agent to send her an email each morning with the latest demand estimates and asks the agent to also reconcile the estimate with the actual sales number every evening to continuously improve the estimate.

After successfully optimizing her production process, she decides to ask another agent to investigate why certain batches of the bakery’s trademark croissants come out of the oven in a state that makes them more suitable for the trashcan than for the sales display. The agent looks at all imaginable contextual factors -- oven temperature curves, proofing times, humidity, kneading speed and duration. After four weeks of careful observation, the agent figures out that above a certain humidity level, the proofing time has to be decreased by 2 minutes.

While this use case might not seem all that significant, it is massively impactful to the baker. She now increases her store revenue while lowering cost simply by making enough of the goods that sell, and by not disappointing customers with certain goods being sold out early or croissants being ‘hit or miss’. While it would not be feasible for a small business like hers to hire consultants or fund custom software development, describing a specific business challenge to an AI agent is simple, and can be highly effective at the same time.

The message from AWS CEO Matt Garman’s opening keynote was clear. AI assistants ignited the initial excitement. Early iterations of AI agents gave us a peek at what is possible, but in the future AI agents will be transformational. AWS re:Invent 2025 was all about showing enterprises how to achieve agent-driven business transformation.

Let’s look at the key announcements from re:Invent and how they help developers and platform teams get AI projects to production in a repeatable and scalable manner.

Top 5 announcements from re:Invent 2025

Software developers are where the rubber meets the road when it comes to responding to the CEO’s call for more AI-enabled capabilities in current and future applications. However, Omdia research shows that, on average, developers spend two-thirds of their time on overhead tasks related to complex cloud infrastructure and DevOps workflows. At re:Invent 2025, there were five key announcements aimed at righting this wrong and giving that lost time back to productive development work.

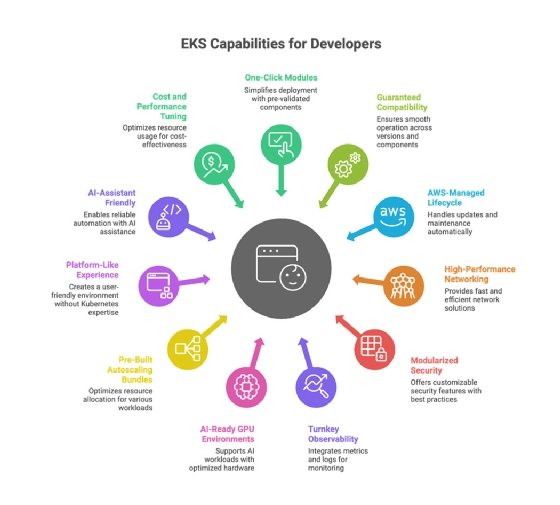

1. Amazon EKS Capabilities: From a DIY toolbox to one-click developer platforms

EKS Capabilities can provision an entire Kubernetes application platform in one click. This includes Argo CD for continuous delivery, AWS Controllers for Kubernetes (ACK) to manage AWS resources directly from inside the cluster, and the new Kube Resource Orchestrator (KRO), which enables platform teams to build and ship reusable resource bundles. These bundles can one-click provision GPU environments, bake in security without touching YAML, deliver full-stack visibility without configuring agents or log backends, and provide standardized ingress and deployment patterns.

In other words, developers no longer need to debug YAML, juggle GPU drivers, understand the nuances of networking and storage interfaces, or manually piece together observability stacks. This is especially important for AI, where infrastructure always comes with messy interlocking dependencies: GPUs, drivers, runtime libraries, storage I/O, batch schedulers and autoscaling logic.

With KRO, platform teams can package the entire AI stack into a single, reliable capability that developers -- or AI agents -- can request declaratively. This finally delivers a true click-and-go platform experience for developers.
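To make the idea of a declaratively requested bundle more concrete, here is a minimal Python sketch. It does not use KRO's actual schema or any AWS API. The `GpuEnvRequest` fields, the `expand_bundle` function and the resource kinds are all hypothetical, chosen only to show how a platform team's defaults could turn a short request into a full set of resources.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class GpuEnvRequest:
    """Hypothetical developer-facing request: only the fields a developer cares about."""
    name: str
    team: str
    gpus: int = 1


def expand_bundle(req: GpuEnvRequest) -> list[dict]:
    """Expand a short request into the resources a platform team has standardized.

    Every kind and field below is illustrative, not a real KRO or Kubernetes schema.
    """
    if req.gpus < 1:
        raise ValueError("a GPU environment needs at least one GPU")
    labels = {"team": req.team, "env": req.name}
    return [
        {"kind": "Namespace", "name": req.name, "labels": labels},
        {"kind": "GpuNodePool", "name": f"{req.name}-gpu", "gpus": req.gpus, "labels": labels},
        {"kind": "NetworkPolicy", "name": f"{req.name}-default-deny", "labels": labels},
        {"kind": "LogPipeline", "name": f"{req.name}-logs", "labels": labels},
    ]


resources = expand_bundle(GpuEnvRequest(name="training", team="ml", gpus=4))
for r in resources:
    print(r["kind"], r["name"])
```

The developer, or an AI agent, supplies three values. Security, logging and GPU provisioning come from the bundle definition rather than from hand-written configuration.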

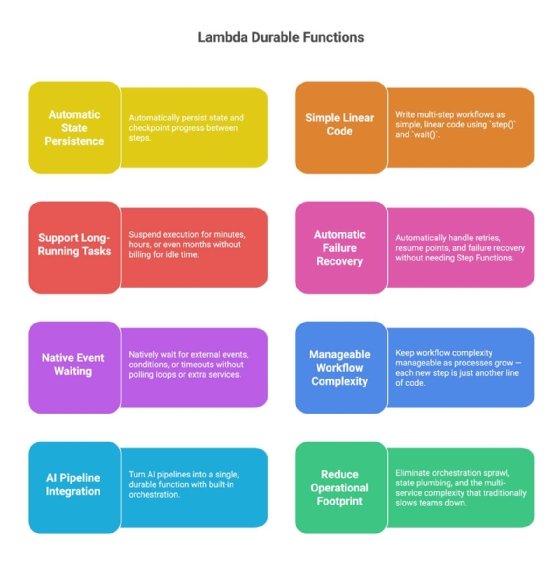

2. Lambda durable functions: Turning Lambda into a serverless workflow engine

Lambda durable functions eliminate the single most significant pain point of serverless programming: trying to manage state inside a platform that is, by design, stateless.

Developers have long been forced to work around this limitation by manually persisting state to a database, writing their own retry and recovery logic, and constantly polling for external events -- all to stitch together multi-step, stateful, long-running workflows in code that was never meant to carry that burden.

Durable functions flip this model on its head. Instead of inventing ever more creative hacks to preserve application state, developers can now write straightforward Lambda code that automatically keeps its state, waits for external events, survives failures and simply resumes where it left off.
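The checkpoint-and-resume behavior described above can be illustrated with a small, self-contained Python sketch. This is not the Lambda durable functions SDK. `DurableRun`, its `step` method and the in-memory `store` dict (standing in for durable storage) are hypothetical names used only to show the general technique: record each completed step's result, and on a re-run skip the steps that already finished.

```python
from typing import Any, Callable


class DurableRun:
    """Toy checkpointing helper. `store` stands in for durable storage."""

    def __init__(self, store: dict[str, Any]) -> None:
        self.store = store

    def step(self, name: str, fn: Callable[[], Any]) -> Any:
        # Replay: a step that already completed returns its saved result.
        if name in self.store:
            return self.store[name]
        result = fn()              # may raise; nothing is saved in that case
        self.store[name] = result  # checkpoint only after success
        return result


calls = {"reserve": 0, "charge": 0}


def reserve_stock() -> str:
    calls["reserve"] += 1
    return "reservation-1"


def charge_card() -> str:
    calls["charge"] += 1
    if calls["charge"] == 1:
        raise RuntimeError("payment service timed out")  # simulated transient failure
    return "payment-1"


def order_workflow(run: DurableRun) -> tuple[str, str]:
    reservation = run.step("reserve", reserve_stock)
    payment = run.step("charge", charge_card)
    return reservation, payment


store: dict[str, Any] = {}
try:
    order_workflow(DurableRun(store))       # first attempt fails at the charge step
except RuntimeError:
    pass
result = order_workflow(DurableRun(store))  # second attempt resumes after "reserve"
print(result, calls)
```

On the second attempt `reserve_stock` is not called again, because its result was checkpointed before the failure. A managed durable runtime would presumably handle the storage and the re-invocation itself, so that only the workflow function remains in application code.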

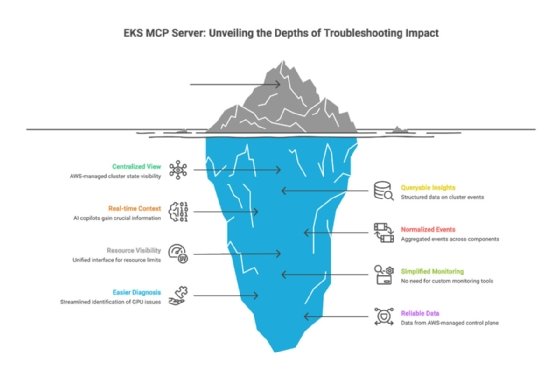

3. Amazon EKS MCP Server: Simplified troubleshooting in real time

The new EKS MCP Server significantly simplifies troubleshooting and cluster introspection for developers, DevOps tools or AI-based copilots. MCP here stands for Model Context Protocol, a standard interface through which AI tools can query and act on external systems.

With a consistent, AWS-managed control plane, the platform tracks real-time cluster state, resource limits, node/cluster events, health issues and API-server metrics under the hood, enabling diagnostics and root-cause analysis without manual log scraping or guesswork. This means tools -- and developers -- can query a standard interface to see what’s really happening, which pods behaved badly, which node ran out of memory, which API calls spiked, and surface actionable guidance automatically.
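MCP in the server's name refers to the Model Context Protocol, which frames tool invocations as JSON-RPC 2.0 messages. As a rough illustration of what querying a standard interface looks like on the wire, the sketch below builds a `tools/call` request in Python. The tool name `list_pod_events` and its arguments are hypothetical. The tools the EKS MCP Server actually exposes are defined by AWS and are not listed in this article.

```python
import json
from typing import Any


def build_tool_call(request_id: int, tool: str, arguments: dict[str, Any]) -> str:
    """Serialize an MCP `tools/call` request as a JSON-RPC 2.0 message."""
    message = {
        "jsonrpc": "2.0",
        "id": request_id,
        "method": "tools/call",
        "params": {"name": tool, "arguments": arguments},
    }
    return json.dumps(message)


# Hypothetical tool name and arguments, for illustration only.
payload = build_tool_call(
    1, "list_pod_events", {"cluster": "prod", "namespace": "inference"}
)
print(payload)
```

Because every tool call shares this envelope, a copilot or DevOps tool can discover and invoke diagnostics without a bespoke integration per data source.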

For teams building or running AI workloads, that visibility is a game-changer. You no longer need to stitch together monitoring agents, build custom dashboards or fight brittle telemetry setups. You get built-in introspection, resilient control-plane management and simpler triage of both infra and workload issues.

4. Kiro autonomous agent: AI team members

AWS positions the new Kiro autonomous agent as a system that development teams can assign real backlog tasks to. Kiro then figures out how to complete the work end-to-end, guided by company policies, coding standards and each developer’s stated preferences.

Thanks to persistent context, Kiro keeps track of the broader project state instead of treating tasks as isolated prompts. In theory, this means every developer could have one or more AI teammates capable of operating throughout the organization’s specific software development lifecycle. Strategically, this places Kiro several levels above tools like Cursor 2, which focuses on task- and subtask-level execution, and above OpenAI’s code agents that operate within narrower scopes.

This model could address long-standing issues in software delivery, where regression testing, migration validation and security checks are often compressed or skipped under deadline pressure. Kiro could take on dedicated roles, for example, acting as a security engineer who runs continuous audits or as a DevOps engineer who prevents incidents by monitoring infrastructure, identifying root causes and coordinating responses when new code is deployed.

5. AgentCore Evaluations: Trust as the key for agentic AI scalability

AgentCore Evaluations provides enterprises with a structured way to continuously test, validate and harden the behavior of their AI agents before they are allowed to operate on real infrastructure or production code. Instead of hoping that an LLM-powered agent does the right thing, teams can define evaluation suites that simulate realistic scenarios like broken deployments, missing permissions, subtle security vulnerabilities, malformed API responses, partial failures and ambiguous human instructions to see how the agent responds under pressure. This shifts AI agent development from prompt tinkering to an engineering discipline. For example, teams can test whether:

- A DevOps agent correctly rolls back a failed deploy instead of scaling the wrong cluster.

- A security agent escalates only legitimate vulnerabilities rather than generating noise.

- A developer agent can refactor a service without breaking cross-service contracts.

AgentCore Evaluations enables organizations to run these tests repeatedly as models evolve, ensuring that each agent reliably behaves within company guardrails. In short, it gives AI agents the equivalent of unit tests, integration tests, and chaos tests, all before they’re trusted with real work.
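The idea of treating agent behavior like a test suite can be sketched in a few lines of Python. This is not the AgentCore Evaluations API. The `Scenario` type, the `run_suite` helper and the rule-based `toy_devops_agent` are hypothetical stand-ins, used only to show the shape of a scenario-based evaluation: describe a situation, state the expected action, and compute a pass rate that can be re-checked whenever the underlying model changes.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass(frozen=True)
class Scenario:
    name: str
    observation: dict[str, str]  # what the agent sees
    expected_action: str         # what a well-behaved agent should do


def toy_devops_agent(observation: dict[str, str]) -> str:
    """Stand-in for an LLM-backed agent; a real one would call a model here."""
    if observation.get("deploy_status") == "failed":
        return "rollback"
    if observation.get("permissions") == "missing":
        return "escalate_to_human"
    return "no_action"


def run_suite(
    agent: Callable[[dict[str, str]], str], suite: list[Scenario]
) -> dict[str, bool]:
    """Run every scenario and record whether the agent chose the expected action."""
    return {s.name: agent(s.observation) == s.expected_action for s in suite}


suite = [
    Scenario("failed deploy", {"deploy_status": "failed"}, "rollback"),
    Scenario("missing permissions", {"permissions": "missing"}, "escalate_to_human"),
    Scenario("healthy cluster", {"deploy_status": "ok"}, "no_action"),
]

results = run_suite(toy_devops_agent, suite)
pass_rate = sum(results.values()) / len(results)
print(results, pass_rate)
```

In practice the pass rate would be compared against a threshold in a release pipeline, so that a model upgrade that starts scaling the wrong cluster is caught before the agent touches production.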

AWS needed a bunch of big-time announcements to show that the company can do more than play catch-up with Google and Azure/OpenAI in the AI race. At re:Invent 2025, it certainly delivered announcements of the caliber needed to make this happen. AWS has shown its ambition to take the lead in the agentic AI race by giving developers a suite of near-turnkey tools that let them code more and worry less about what is going on within the stack. This is what application development should be all about.

Torsten Volk is principal analyst at Omdia covering application modernization, cloud-native applications, DevOps, hybrid cloud and observability.

Omdia is a division of Informa TechTarget. Its analysts have business relationships with technology vendors.