event stream processing (ESP)

What is event stream processing (ESP)?

Event stream processing (ESP) is a software programming technique designed to process a continuous stream of data, such as data from devices or applications, and take action on it in real time.

ESP supports the implementation of event-driven architectures that are used in numerous real-world applications.

The term event stream processing is composed of three individual words. Event refers to a data point generated by a system that continuously creates data; this system is the data source. Stream, streaming data or data stream refers to the ongoing delivery of events from that data source.

The third element in ESP, processing, is about analyzing a data stream as it moves from the data source through the pipeline and then acting on it to generate some output. In ESP, events are often processed in real time or near real time so that processing keeps pace with the growing volumes of data that modern business systems generate.

More about events, streams and processing

In an ESP pipeline, an event is anything that is created by an event source and can be recorded and analyzed. The event source could be an enterprise system, a business process, an internet of things (IoT) sensor, a database or a data stream from any electronic device -- for example, a laptop, mobile device, fire alarm or smart device such as a smart car.

An event stream is a set of data points -- specifically, a sequence of events -- occurring in some sequential or chronological order. Business transactions such as invoice generation and customer orders are common event streams in enterprise settings. Other event streams include system-generated data, such as data generated by IoT sensors, and business reports.

Processing is about analyzing and acting on the incoming data stream from the data source. Many kinds of processing actions can be taken on these events:

- Ingestion, where the data point is inserted into a database.

- Aggregations or calculations, such as summation or calculating a mean.

- Analytics, such as predicting a future event.

- Transformation, such as changing the data format.

- Enrichment, such as adding more context to the data point by combining it with other data points.
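The following is a minimal sketch of four of these actions (transformation, enrichment, ingestion and aggregation) applied to each event as it arrives; analytics is left out. It assumes hypothetical temperature-sensor events and a hypothetical device-to-location lookup table, and it uses an in-memory SQLite database from Python's standard library as the ingestion target. A production pipeline would use a stream processing framework and a real datastore instead.

```python
import sqlite3

# Hypothetical raw events from temperature sensors (Fahrenheit readings).
raw_events = [
    {"device": "s1", "temp_f": 68.0},
    {"device": "s2", "temp_f": 77.0},
    {"device": "s1", "temp_f": 86.0},
]

# Hypothetical reference data used for enrichment.
DEVICE_LOCATIONS = {"s1": "warehouse", "s2": "office"}


def transform(event: dict) -> dict:
    """Transformation: convert Fahrenheit to Celsius."""
    celsius = round((event["temp_f"] - 32) * 5 / 9, 1)
    return {"device": event["device"], "temp_c": celsius}


def enrich(event: dict) -> dict:
    """Enrichment: add location context from a second data source."""
    return {**event, "location": DEVICE_LOCATIONS.get(event["device"], "unknown")}


conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE readings (device TEXT, temp_c REAL, location TEXT)")

count, total = 0, 0.0
running_means = []
for raw in raw_events:  # each event is handled as soon as it arrives
    event = enrich(transform(raw))
    # Ingestion: insert the processed data point into a database.
    conn.execute(
        "INSERT INTO readings VALUES (?, ?, ?)",
        (event["device"], event["temp_c"], event["location"]),
    )
    # Aggregation: update a running mean without keeping past events in memory.
    count += 1
    total += event["temp_c"]
    running_means.append(total / count)

print(running_means)  # [20.0, 22.5, 25.0]
```

Keeping only a count and a running total is a common design choice in stream processing: the aggregate stays current after every event without storing the events themselves.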

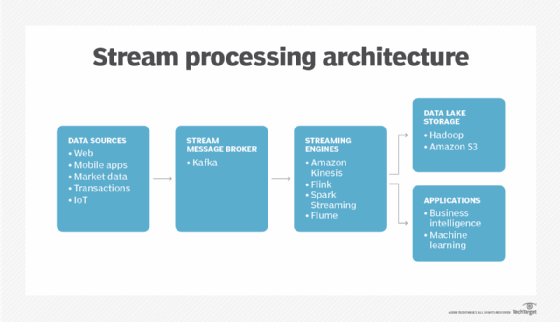

How does event stream processing work?

There are three main elements in ESP:

- Event source.

- Event processor.

- Event consumer.

The event source is a business system that generates events as data points. An event is any change in state within the system, such as a transaction, report or user action -- e.g., a customer placing an order on an e-commerce site. A sequence of such events ordered by time is an event stream.

The event source sends these events to the event processor, which receives them, often through an application programming interface (API). Once an event has been processed, the processor sends the final output to an event consumer. Here, consumer does not mean a human consumer in the everyday sense; it can be anything from a database to a dashboard or user report.
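As a rough illustration of the source, processor and consumer roles, the sketch below connects them with Python generators. The e-commerce order events, the `large_order` flag and the `DashboardConsumer` class are all hypothetical; in a real deployment the events would typically travel over an API or a message broker rather than an in-process generator.

```python
import json
from typing import Iterator


def event_source() -> Iterator[str]:
    """Hypothetical e-commerce source emitting order events as JSON strings."""
    orders = [("o-1", 40.0), ("o-2", 250.0), ("o-3", 15.5)]
    for order_id, amount in orders:
        yield json.dumps({"type": "order_placed", "order_id": order_id, "amount": amount})


def event_processor(events: Iterator[str]) -> Iterator[dict]:
    """Parse each event and add a derived field, one event at a time."""
    for raw in events:
        event = json.loads(raw)
        event["large_order"] = event["amount"] >= 100  # assumed business rule
        yield event


class DashboardConsumer:
    """Stand-in for a dashboard: keeps a running order count and revenue."""

    def __init__(self) -> None:
        self.orders = 0
        self.revenue = 0.0
        self.large_orders: list[str] = []

    def consume(self, event: dict) -> None:
        self.orders += 1
        self.revenue += event["amount"]
        if event["large_order"]:
            self.large_orders.append(event["order_id"])


dashboard = DashboardConsumer()
for processed in event_processor(event_source()):
    dashboard.consume(processed)

print(dashboard.orders, dashboard.revenue, dashboard.large_orders)  # 3 305.5 ['o-2']
```

Because each stage pulls one event at a time from the previous one, the dashboard is up to date after every order rather than after the whole set has been collected.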

Furthermore, in an environment where some entities produce events, others manage events and still others consume events, ESP might be connected to an event manager. This is a software entity that fields events and decides whether or not to forward them to event consumers for subsequent reactions. These reactions can range from simple logging to triggering a complex collection of error handling, recovery, cleanup and other related routines.
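A minimal event manager can be sketched as a publish/subscribe router: consumers register interest in event types, and the manager forwards each event only to the consumers that want it. The `EventManager` class, the event types and the recovery steps below are hypothetical examples, not the API of any specific product. One reaction is simple logging; the other stands in for a chain of error handling, recovery and cleanup routines.

```python
import logging
from collections import defaultdict
from typing import Callable

logging.basicConfig(level=logging.INFO)


class EventManager:
    """Hypothetical event manager: fields events and decides whether to forward them."""

    def __init__(self) -> None:
        self.subscribers: dict[str, list[Callable[[dict], None]]] = defaultdict(list)

    def subscribe(self, event_type: str, handler: Callable[[dict], None]) -> None:
        self.subscribers[event_type].append(handler)

    def publish(self, event: dict) -> int:
        """Forward the event to interested consumers; return how many received it."""
        handlers = self.subscribers.get(event["type"], [])
        # Events with no interested consumer are simply not forwarded.
        for handler in handlers:
            handler(event)
        return len(handlers)


recovery_log: list[tuple[str, str]] = []


def log_event(event: dict) -> None:
    """Simple reaction: write the event to the log."""
    logging.info("event received: %s", event)


def handle_failure(event: dict) -> None:
    """Complex reaction: stand-in for error handling, recovery and cleanup."""
    for step in ("notify_on_call", "restart_service", "clean_up_temp_files"):
        recovery_log.append((step, event["service"]))


manager = EventManager()
manager.subscribe("heartbeat", log_event)
manager.subscribe("service_failed", log_event)
manager.subscribe("service_failed", handle_failure)

n_heartbeat = manager.publish({"type": "heartbeat", "service": "checkout"})
n_failure = manager.publish({"type": "service_failed", "service": "checkout"})
n_dropped = manager.publish({"type": "debug_trace", "service": "checkout"})
print(n_heartbeat, n_failure, n_dropped)  # 1 2 0
```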

Benefits of event stream processing

ESP helps make sense of vast amounts of data arriving quickly from a range of sources. It enables organizations to process data with very low latency so they can capture useful insights, determine which processes can be automated and identify which events are important from a business perspective.

By actively tracking and processing event streams, ESP allows organizations to proactively identify business opportunities and risks, including security or performance issues, and optimize business outcomes. It reduces the slow reaction times and decision latency that are common in traditional data processing applications. Because ESP doesn't follow a store-analyze-act approach based on past data, information is captured and processed while it is still most relevant to the business. This allows business systems or humans to take immediate action if necessary.

Other benefits of ESP in the modern business landscape include the following:

- It enables real-time processing so that businesses can respond faster to new opportunities or resolve challenges.

- It enables greater business agility and flexibility.

- Less processing power and memory may be required, because individual data points are processed as they arrive rather than as large data sets, as is common in batch processing.

- Massive amounts of data from more data sources can be processed, providing more opportunities to capture useful insights.

- It can be scaled up or down depending on data volumes and business needs.

- Patterns and relationships can be detected in data to support insight generation and decision-making.
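Detecting a pattern across several events is one way ESP surfaces risks such as security issues early. The sketch below flags a user who fails to log in a given number of times within a time window. The 60-second window, the threshold of three failures and the sample events are assumptions chosen for illustration. Memory stays bounded because only the failures inside each user's current window are kept.

```python
from collections import defaultdict, deque

WINDOW_SECONDS = 60  # assumed detection window
THRESHOLD = 3        # assumed number of failures that counts as suspicious

# user -> timestamps of that user's recent failed logins
recent_failures: dict[str, deque] = defaultdict(deque)


def on_login_event(user: str, timestamp: float, success: bool) -> bool:
    """Return True when this event completes the suspicious pattern."""
    if success:
        return False
    window = recent_failures[user]
    window.append(timestamp)
    # Evict failures that have fallen out of the time window.
    while window and timestamp - window[0] > WINDOW_SECONDS:
        window.popleft()
    return len(window) >= THRESHOLD


# Hypothetical login events: (user, timestamp in seconds, success)
events = [
    ("alice", 0, False),
    ("alice", 20, False),
    ("bob", 25, False),
    ("alice", 45, False),   # third failure within 60 s -> alert
    ("bob", 200, False),
    ("alice", 130, False),  # earlier failures have expired -> no alert
]

alerts = []
for user, ts, ok in events:
    if on_login_event(user, ts, ok):
        alerts.append((user, ts))

print(alerts)  # [('alice', 45)]
```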

Applications of event stream processing

ESP is very useful where continuous or high-volume data streams are generated. The data can come from devices, sensors or people, and from the interactions of these elements with each other and with their environment. ESP is also valuable in applications that meet the following conditions:

- Real-time data intelligence is required.

- Traditional data processing cannot meet the demands of business processes or real-time enabled systems.

- Storing information in a database and analyzing it later at periodic intervals doesn't reveal insights in time.

- There is a need to respond to events in real time or at least as quickly as possible.

- High volumes of fast-moving data are being generated and must be processed at a matching speed to identify new opportunities -- or threats.

Examples of applications that meet the above criteria include the following:

- Fraud detection.

- Cybersecurity threat detection and response.

- Personalized offers and content for e-commerce shoppers.

- Predicting possible supply chain disruptions to enable early action and minimize delays.

Many other applications and use cases also rely on fast data processing and near-immediate responses:

- Anomaly detection.

- Online payments.

- Predictive maintenance.

- IoT analytics.

- Financial trading.

- Risk management.

- Healthcare.

- Banking and financial services.

- Enterprise network monitoring.
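Several of these use cases, such as anomaly detection and predictive maintenance, come down to spotting a reading that departs sharply from recent behavior. The sketch below uses one simple, swapped-in technique: it compares each reading with the mean and standard deviation of a sliding window of previous readings. The window size of 5, the threshold of 3 standard deviations and the vibration values are assumptions; real systems often use more robust statistical or machine learning models.

```python
from collections import deque
from statistics import mean, stdev


class RollingAnomalyDetector:
    """Flags a reading far from the mean of the previous `window_size` readings."""

    def __init__(self, window_size: int = 5, threshold: float = 3.0) -> None:
        self.window: deque = deque(maxlen=window_size)  # oldest values drop off
        self.threshold = threshold

    def check(self, value: float) -> bool:
        is_anomaly = False
        if len(self.window) >= 2:  # stdev needs at least two data points
            mu = mean(self.window)
            sigma = stdev(self.window)
            is_anomaly = sigma > 0 and abs(value - mu) > self.threshold * sigma
        self.window.append(value)
        return is_anomaly


# Hypothetical vibration readings from a machine sensor.
readings = [1.0, 1.1, 0.9, 1.0, 1.2, 5.0, 1.1]
detector = RollingAnomalyDetector()
flags = [detector.check(r) for r in readings]
anomalies = [i for i, flagged in enumerate(flags) if flagged]

print(anomalies)  # [5]
```

In this sketch, a flagged value still enters the window and temporarily inflates the standard deviation; excluding flagged readings from the window is a common variation.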

Event stream processing tools

Businesses have many choices when it comes to ESP tools. Many of these tools are cloud-based to enable fast adoption and minimize the maintenance burden for organizations. The following are some popular ESP tools:

- IBM Cloud Pak for Integration.

- Amazon Kinesis Data Streams.

- Apache Kafka.

- Aiven for Apache Kafka.

- Red Hat OpenShift Streams for Apache Kafka.

- Confluent.

- Azure Stream Analytics.

- Google Cloud Pub/Sub.

Event stream processing vs. batch processing

The terms event stream processing and batch processing are sometimes used interchangeably, especially in big data environments, because they are both about processing data and generating insights from it. Even so, they are distinct and, in many respects, opposing concepts.

ESP is about analyzing and taking action on a constant flow of data. In other words, the processing happens on data in motion. In contrast, batch processing is about processing a batch of static data or data at rest. In situations where action needs to be taken continuously and as soon as possible, ESP is required. This is because, unlike batch processing, ESP happens in real time or near real time. For this reason, ESP environments are known as real-time processing environments.

Also, in batch processing, data is collected over time, stored and then processed. This data is usually not time-sensitive, so it doesn't require real-time processing. In comparison, ESP is meant to process events that occur frequently and to generate insights that are needed immediately. That is why data is collected continuously and processed quickly in a piece-by-piece manner.
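The difference can be sketched by computing the same result both ways. In the batch version, the data is collected and stored first, and the totals exist only after a single run over the whole set. In the streaming version, state is updated piece by piece, and an up-to-date result is available after every event. The regional order data and function names are hypothetical.

```python
from typing import Iterable, Iterator


def batch_totals(stored_orders: list[tuple[str, float]]) -> dict[str, float]:
    """Batch: runs once, after all the data has been collected and stored."""
    totals: dict[str, float] = {}
    for region, amount in stored_orders:
        totals[region] = totals.get(region, 0.0) + amount
    return totals


def streaming_totals(orders: Iterable[tuple[str, float]]) -> Iterator[dict[str, float]]:
    """Streaming: updates state per event and emits a current result each time."""
    totals: dict[str, float] = {}
    for region, amount in orders:
        totals[region] = totals.get(region, 0.0) + amount
        yield dict(totals)  # snapshot available immediately after each event


orders = [("east", 10.0), ("west", 5.0), ("east", 2.5)]
snapshots = list(streaming_totals(orders))

print(snapshots[0])          # {'east': 10.0}
print(snapshots[-1])         # {'east': 12.5, 'west': 5.0}
print(batch_totals(orders))  # {'east': 12.5, 'west': 5.0}
```

Both approaches reach the same final totals; the streaming version simply makes intermediate results available while the data is still in motion.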
