data latency

What is data latency?

Data latency is the time it takes for data packets to be stored or retrieved. In business intelligence (BI), data latency is how long it takes for a business user to retrieve source data from a data warehouse or BI dashboard.

In computer networking and internet communications, data latency is the time it takes data to travel from a source to a destination.

Why is data latency important?

As a rule of thumb, the lower the network latency, the higher the speed and performance of a network. To be agile and respond quickly to changing market conditions, an organization needs to reduce latency and give business users access to real-time and near-real-time operational data.

The following are a few benefits and use cases of low data latency:

- Efficient business decisions. Reducing data latency helps data-driven organizations make decisions quickly, as employees and data engineers can run ad hoc reports to answer specific business questions.

- Reliable connections. Low data latency levels provide a stable connection that enables websites and apps to load quickly for users. A reliable connection is particularly important for cloud-hosted and mission-critical applications.

- Up-to-date information. Low latency levels promote a balance between supply and demand in the marketplace, as the most current and accurate information is always available.

- Front page optimization. With low levels of data latency, the front page of a news site can stay current and optimized, which is especially important for breaking news stories.

- Improved quality of live streams. Interactive experiences such as live data streams and webinars must have low latency levels to stay synchronous and provide a great user experience. For example, during a live stream, a high data latency of 30 seconds means a user must wait for 30 seconds for their question to be transmitted to the broadcaster.

- Retargeting customers. When shopping online, customers can get distracted by ads and may abandon their shopping carts. Low latency levels can enable businesses to retarget those customers in real time to avoid missed sales opportunities.

- Real-time customer analytics. Low latency connections are imperative for retail customer analytics, as they help determine customer trends in real time. Using internet of things (IoT) devices, analytics data is gathered in real time before the customer exits the shopping experience, either online or in the physical store. A high level of latency or delay can slow data processing, resulting in a poorer customer experience and lost sales opportunities.

- Real-time manufacturing with industrial internet of things. IIoT plays an integral role in the manufacturing industry. Real-time IoT sensors, actuators, applications and smart devices are commonly used in factories to access huge amounts of data. For these devices and applications to work in real time, high-capacity and low-latency networks are crucial for ensuring ultra-fast data transfers. Low latency also enables manufacturing facilities to monitor, identify and fix technical failures before they affect operations.

- Improved virtual reality and metaverse experience. The metaverse is a digital space where users can play games, socialize and collaborate across several devices. For users to have a smooth metaverse experience, latency levels should be low to ensure faster end-to-end communications.

- Real-time detection of malicious behavior. Low latency levels, along with real-time data analytics tools, can aid in the detection and mitigation of malicious activities, such as bank fraud or security incidents. For example, low latency levels can help a bank quickly spot and contain potentially fraudulent transactions.

How to measure data latency

Latency is a key performance metric that is typically measured in seconds or milliseconds as round trip time (RTT), which is the total time data takes to travel from its source to its destination and back again. Another method of measuring latency is time to first byte (TTFB), which records the time from the instant a data packet leaves a point on the network until it arrives at its destination. RTT is more popular than TTFB, as it can be run from a single point on the network and doesn't require the installation of data collection software at the destination like TTFB does.
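One rough way to take an RTT reading from a single point on the network is to time a TCP handshake, since the connection call returns after about one round trip. The sketch below is only an approximation under stated assumptions: the function name `measure_rtt_ms` and the default port 443 are illustrative choices, and when a hostname is passed instead of an IP address, the reading also includes DNS resolution time.

```python
import socket
import time


def measure_rtt_ms(host: str, port: int = 443, timeout: float = 2.0) -> float:
    """Approximate RTT as the duration of a TCP handshake, in milliseconds."""
    start = time.perf_counter()
    # create_connection returns once the three-way handshake completes,
    # which takes roughly one round trip plus local overhead.
    # If host is a name rather than an IP address, DNS lookup time is included.
    with socket.create_connection((host, port), timeout=timeout):
        elapsed = time.perf_counter() - start
    return elapsed * 1000.0
```

Calling `measure_rtt_ms("example.com")` would return a single sample, where `example.com` is a placeholder destination. Taking several samples and looking at the minimum and average tends to give a steadier picture than any one reading.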

The following are some common ways of measuring data latency:

Observing the latest data. By determining how recently data was stored in a database or a data warehouse, companies can spot signs of data latency. Alternatively, companies can observe the data stream of a microservice to see how quickly it's being processed. Organizations can use website analytics tools, such as Google Analytics, to monitor website speed and performance. Network monitoring tools, including SolarWinds Network Performance Monitor and Paessler Router Traffic Grapher, also provide visual analysis and latency features, such as alerts that are generated when network latency rises above a certain threshold.
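The "how recently was the data stored" check can be sketched as a subtraction followed by a threshold comparison. This is a minimal illustration, not a description of how any of the tools above work; the function names, timestamps and 30-second threshold are all hypothetical.

```python
from datetime import datetime, timedelta, timezone


def data_latency(latest_record_time: datetime, now: datetime) -> timedelta:
    """Return how far the newest stored record lags behind the current time."""
    return now - latest_record_time


def is_stale(latency: timedelta, threshold: timedelta) -> bool:
    """True when the observed latency exceeds the alert threshold."""
    return latency > threshold


# Hypothetical values: the newest row was written at 12:00:00 UTC,
# and the check runs at 12:00:45 UTC with a 30-second threshold.
latest = datetime(2024, 1, 1, 12, 0, 0, tzinfo=timezone.utc)
checked = datetime(2024, 1, 1, 12, 0, 45, tzinfo=timezone.utc)
lag = data_latency(latest, checked)
print(lag.total_seconds(), is_stale(lag, timedelta(seconds=30)))  # 45.0 True
```

In practice, the timestamp of the newest record would come from a query against the database or warehouse being monitored, and the threshold would reflect how fresh the business needs the data to be.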

Using the Packet Internet Groper utility. Ping is a handy network administration tool used for measuring latency across Internet Protocol (IP) networks, such as the internet. Ping can be used on both Windows and Mac computers. To observe latency to a website from a Windows machine, open a console or a Windows Terminal window, type ping followed by the domain name -- this can be the hostname or the IP address of the destination website, not a full URL -- and press Enter. The ping command first resolves the domain name to its corresponding IP address. It then sends a series of echo requests and reports the minimum, maximum and average round-trip latency in milliseconds.

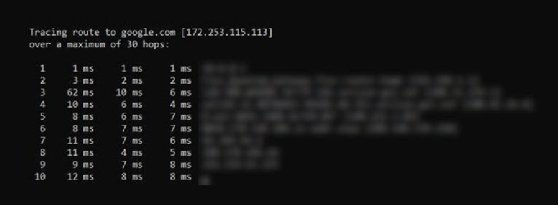
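The same ping test can be scripted. The sketch below assumes the system's own ping command is on the PATH and only wraps it; the helper names `build_ping_command` and `run_ping` are illustrative. The one platform difference it handles is the flag for the number of echo requests, which is `-n` on Windows and `-c` on Mac and Linux.

```python
import platform
import subprocess
from typing import List, Optional


def build_ping_command(
    host: str, count: int = 4, system: Optional[str] = None
) -> List[str]:
    """Build a ping command line for the given (or current) operating system."""
    system = system or platform.system()
    # Windows ping takes the request count with -n; Mac and Linux use -c.
    count_flag = "-n" if system == "Windows" else "-c"
    return ["ping", count_flag, str(count), host]


def run_ping(host: str, count: int = 4) -> str:
    """Run ping and return its raw text output, including the summary lines."""
    result = subprocess.run(
        build_ping_command(host, count),
        capture_output=True,
        text=True,
        check=False,  # a non-zero exit only means some or all requests failed
    )
    return result.stdout
```

Calling `print(run_ping("example.com"))`, with `example.com` standing in for the real destination, would print the per-request times followed by the minimum, maximum and average summary that ping itself produces.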

Using traceroute. Another way to test latency across a network is to run a traceroute test. Traceroute shows the path that data takes to reach its destination. It sends three packets to each hop -- a router along the path -- and displays the elapsed round-trip time for each packet, which helps pinpoint where latency is introduced. For example, a traceroute from a source computer in Boston to a destination server in San Jose, California, shows each hop along the path and the time it takes to reach that hop and come back. To run traceroute against a website or IP address on a Windows machine, open a console or a terminal window, type tracert followed by the IP address or hostname and press Enter; on Mac and Linux, the command is traceroute. An asterisk in the reply means a response wasn't received within the timeout, which can indicate packet loss or a router that is configured not to respond.
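Once the three probe times for each hop are in hand, reading the results comes down to averaging the answered probes and counting the asterisks. The sketch below shows that arithmetic on made-up numbers; the function name `summarize_hop` and the probe values are hypothetical, and `None` stands in for an asterisk.

```python
from typing import List, Optional, Tuple


def summarize_hop(probes: List[Optional[float]]) -> Tuple[Optional[float], int]:
    """Return (average RTT in ms over answered probes, count of unanswered probes)."""
    answered = [p for p in probes if p is not None]
    missing = len(probes) - len(answered)
    average = sum(answered) / len(answered) if answered else None
    return average, missing


# Hypothetical probe results for three hops; None stands for an asterisk.
hops = [
    [1.0, 2.0, 3.0],
    [10.0, None, 14.0],
    [None, None, None],
]
for number, probes in enumerate(hops, start=1):
    print(number, summarize_hop(probes))
# 1 (2.0, 0)
# 2 (12.0, 1)
# 3 (None, 3)
```

A sharp jump in the average between two consecutive hops suggests where delay is being added, while a hop that answers no probes but is followed by hops that do answer is often just a router that ignores the probes.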

What is the difference between bandwidth and latency?

Bandwidth and latency together define the speed and performance of a network. They work in conjunction with each other but play separate roles: bandwidth measures the maximum amount of data that can be transported in a given time, and latency measures the time it takes the data to arrive at its destination. To understand this better, think of a bus that transports 50 passengers versus a car that transports four passengers to the same destination. The bus, with its larger capacity, illustrates high bandwidth, and the car, with its shorter travel time, illustrates low latency. The car reaches the destination before the bus, but the bus transports more passengers. Thus, depending on the requirement, bandwidth and latency can be equally important.

In a nutshell, bandwidth is the amount of data that can move between two nodes over a given time, measured in bits per second. In contrast, latency is the delay that occurs during the transport of that data from one node to another, measured in units of time such as milliseconds.
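A simplified way to see how the two interact is to estimate delivery time as latency plus the time needed to push the bits onto the link. The sketch below uses that first-order model only; it ignores protocol overhead, congestion and retransmissions, and the function name and link figures are hypothetical.

```python
def transfer_time_s(size_bytes: int, bandwidth_bps: float, latency_s: float) -> float:
    """Estimate delivery time as one-way latency plus serialization time."""
    return latency_s + (size_bytes * 8) / bandwidth_bps


# Hypothetical figures, chosen only to make the arithmetic easy to follow.
payload = 1_000_000        # 1 MB payload
fast_link = 100_000_000    # 100 Mbit/s link
slow_link = 10_000_000     # 10 Mbit/s link

# High bandwidth with high latency versus low bandwidth with low latency.
print(transfer_time_s(payload, fast_link, latency_s=0.050))
print(transfer_time_s(payload, slow_link, latency_s=0.005))
```

With these numbers, the first link delivers the payload in about 0.13 seconds and the second in about 0.805 seconds, so bandwidth dominates for a large payload. For a payload of only a few bytes, the serialization term shrinks toward zero and the lower-latency link comes out ahead.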

High data latency can have a direct effect on the performance of a network.