How AI changes data governance roles and responsibilities

Data governance is a team effort, though recent AI developments have caused data and AI teams to converge. As a result, organizations have created new roles in these teams.

AI, innovations in compliance automation and frequent high-profile cybersecurity incidents are rapidly reshaping data governance roles and responsibilities into something new.

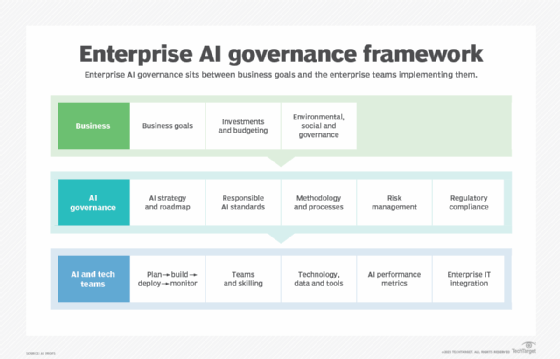

While many existing roles and responsibilities remain, the complexity and urgency of AI adoption have woven data governance into the tapestry of AI governance. Recently, AI sparked interest in updating cybersecurity and other enterprise processes using large language models and generative AI (GenAI) with unstructured data. As AI sweeps across the enterprise, governance is no longer strictly an IT responsibility; it's a boardroom imperative.

"AI has drawn the eye of the CEO and board to IT and data teams after years of being ignored other than as a place to cut budgets," said Nick Kramer, principal of Applied Solutions at SSA & Company, a global consulting firm advising companies on strategic execution.

Now more than ever, data governance is an enterprise-wide responsibility. As AI capabilities expand in scope and scale, compliance across the enterprise is key to protecting and safely using data assets.

Different data governance imperatives

Data governance has been focused on compliance objectives infused with aspirations for AI. However, new trends have compelled enterprises to evolve their data governance strategies. Kramer found that GenAI's dependence on data -- and therefore data governance -- resulted in two camps:

- A focus on regulatory compliance.

- A focus on data governance as a way to improve operational performance.

The biggest trend Kramer sees driving data governance is the embedding of GenAI into existing tools, which improves data quality and AI performance and delivers value from higher-quality data more quickly. These tools expand the definition of data governance to include awareness that documents are data. Integrating unstructured data from documents is now a question of "how and when," not "if."

"This challenges the capabilities of current, evolving GenAI patterns for extracting and managing data," Kramer said. "Extending governance structures to support and measure the value creation from unstructured data is crucial."

Rethinking roles

The excitement surrounding AI, especially agentic AI, has prompted data governance teams to rethink their roles. Governance is no longer about reviewing access requests one at a time. Now, it's about building systems that can automatically handle thousands of requests from people and machines.

"This shift has turned governance into more of a design and oversight role, where teams focus on setting rules and ensuring those rules are applied consistently across platforms and user types," said Steve Touw, CTO and co-founder of Immuta, a data provisioning and access governance platform.

These new responsibilities help scale trust through automation, enabling domain experts to manage their own policies within a consistent framework, thereby responding to rising demands for data access while reducing bottlenecks.

"The impact is huge, with companies now provisioning data faster, with less risk and enabling AI innovation without overloading their governance teams," Touw said.

But do enterprises frame data governance as part of AI governance, or vice versa?

Rapid AI development changed the industry, introducing new considerations and risks. Ashley Casovan, managing director of the AI Governance Center at IAPP, said data governance roles began evolving in 2018 with the first AI governance requirements. However, agentic AI has changed the landscape again.

The rapid adoption of agentic AI adds complexity to data governance beyond the standard considerations of AI. Himanshu Jain, a partner in strategy and management consulting firm Kearney's Digital and Analytics practice, lists the following changes emerging as data governance and AI governance converge:

- Centralized monolithic governance model shifts to a distributed domain-governance model with central standards.

- IT and data team functions expand to a cross-functional entity spanning legal, ethics, security, product and engineering.

- Governance boundaries expand from focusing on data quality, cataloging, lineage and access control to integrity of AI model inputs and outputs, training data lineage, AI decision traceability and agent behavior monitoring.

- Manual periodic audits for governing static data sets evolve to automated continuous processes for governing dynamic, real-time, AI-driven data flows. These processes use policy-as-code enforcement across pipelines, agents and non-human identities.

- Human identity access controls expand to include non-human agents using zero trust authentication, authorization and monitoring to govern how agents read, write or transform data.
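To make the last two points more concrete, here is a minimal sketch of policy-as-code that treats human and non-human identities the same way. It is an illustration only, not a method described by Jain: the `Rule` and `PolicyEngine` names, the roles and the dataset are all hypothetical. The sketch assumes a default-deny posture, in the spirit of zero trust, and records every decision so that access can be audited continuously rather than periodically.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone


@dataclass(frozen=True)
class Rule:
    """One explicit grant. All field values here are hypothetical examples."""
    identity_type: str      # "human" or "agent"
    role: str               # e.g. "analyst" or "forecasting-agent"
    dataset: str
    actions: frozenset      # subset of {"read", "write", "transform"}


@dataclass
class PolicyEngine:
    rules: list
    audit_log: list = field(default_factory=list)

    def is_allowed(self, identity_type, role, dataset, action):
        # Default deny: access is granted only if an explicit rule matches.
        allowed = any(
            r.identity_type == identity_type
            and r.role == role
            and r.dataset == dataset
            and action in r.actions
            for r in self.rules
        )
        # Every decision, allowed or denied, is logged for later review.
        self.audit_log.append({
            "time": datetime.now(timezone.utc).isoformat(),
            "identity_type": identity_type,
            "role": role,
            "dataset": dataset,
            "action": action,
            "allowed": allowed,
        })
        return allowed


# Hypothetical policy: an analyst may read sales data, while a
# forecasting agent may read and transform it but not write to it.
engine = PolicyEngine(rules=[
    Rule("human", "analyst", "sales", frozenset({"read"})),
    Rule("agent", "forecasting-agent", "sales", frozenset({"read", "transform"})),
])

print(engine.is_allowed("agent", "forecasting-agent", "sales", "transform"))
print(engine.is_allowed("agent", "forecasting-agent", "sales", "write"))
```

Because the rules are plain data, they can be versioned and reviewed like any other code, and an agent with no matching rule is denied by default.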

Despite the change in responsibilities, Casovan doesn't necessarily see a shift in roles with agentic AI. "However," she said, "the knowledge required for these roles could change as we start to learn more about the various uses and applications of agentic AI systems."

This includes a growing trend among enterprises of adding to their data governance teams a skilled professional responsible for overseeing AI systems, according to Casovan. This is because it's critical to understand agentic AI's specific risks and mitigation measures before full adoption.

New seat in the C-suite

Data governance teams are also expanding beyond IT teams to include enterprise executives. Shane Tierney, senior program manager of GRC at Drata, a compliance automation platform, said, "AI has transformed data governance from a back-office compliance activity into a front-line operational and strategic function."

According to the IAPP Organizational Digital Governance Report 2025, organizations now face governance drivers that go far beyond regulatory pressure. Top motivators include reputation (87%), consumer expectations (79%), and technological risk (62%). This shift means governance teams must manage not only how they handle data, but also how AI systems behave, learn, and create downstream risk.

"As a result, governance responsibilities increasingly sit across the entire C-suite rather than within isolated data or IT teams," Tierney said.

These imperatives underscore the importance of including senior legal executives in data governance initiatives. The Association of Corporate Counsel's Chief Legal Officers Survey 2025 reports that 70% of CLOs now oversee at least two additional enterprise functions. These are most commonly compliance, privacy, ethics and risk. As a result, enterprises have a more unified command structure for AI oversight.

Tierney has observed the emergence of new hybrid roles, including AI policy leads, data governance strategists, and chief trust officers, who bridge the technical and regulatory perspectives.

"Overall, the expansion of governance roles has moved organizations toward more integrated leadership structures, more mature oversight capabilities and a stronger foundation for safe AI-driven innovation," Tierney said.

New roles

According to Jain, many enterprises are leveraging compliance automation tooling and AI imperatives to create new roles within data governance teams. Traditionally, governance functions focused on data quality, access controls and privacy compliance. Now they include AI behavior oversight, model risk and digital accountability. Thus, data governance teams must include experts who understand the following:

- Data architecture.

- AI model dynamics.

- Legal requirements.

- Operational risk.

These roles increasingly participate in the AI model lifecycle, agent design, drift monitoring, and bias mitigation. New and expanded roles include:

- Chief Data & AI Officer (CDAO). Combines data governance with AI risk oversight, aligning data policy with AI-system assurance.

- AgentOps Manager/AI Orchestrator. Oversees fleets of autonomous agents, ensuring compliant data access, logging and auditability.

- AI Governance Architect. Encodes policies into technical controls, such as access logic, lineage and fairness metrics.

- Data Product Steward. Manages data contracts, model-training readiness and continuous quality metrics.

- Responsible AI Officer/Ethics Lead. Interprets regulations -- such as the EU AI Act -- and ensures explainability, bias and drift monitoring.
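As one illustration of what encoding a fairness metric into a technical control might look like, the sketch below computes a demographic parity gap, which is the difference between the highest and lowest approval rates across groups, and compares it with a threshold. The metric choice, the function names, the sample data and the 0.2 threshold are all assumptions made for this example. They are not drawn from Jain's remarks or from any regulation.

```python
from collections import defaultdict


def selection_rates(decisions):
    """Return the approval rate per group.

    `decisions` is an iterable of (group, approved) pairs, where
    `approved` is a bool. The format is a hypothetical example.
    """
    totals = defaultdict(int)
    positives = defaultdict(int)
    for group, approved in decisions:
        totals[group] += 1
        if approved:
            positives[group] += 1
    return {group: positives[group] / totals[group] for group in totals}


def demographic_parity_gap(decisions):
    """Difference between the highest and lowest group approval rates."""
    rates = selection_rates(decisions)
    if not rates:
        raise ValueError("no decisions to evaluate")
    return max(rates.values()) - min(rates.values())


def passes_fairness_gate(decisions, max_gap=0.2):
    """True if the gap is within the (assumed) tolerance."""
    return demographic_parity_gap(decisions) <= max_gap


# Hypothetical model outputs: group A approved 3 of 4, group B 1 of 4.
sample = [
    ("A", True), ("A", True), ("A", True), ("A", False),
    ("B", True), ("B", False), ("B", False), ("B", False),
]

print(selection_rates(sample))
print(passes_fairness_gate(sample))
```

A gate like this could run whenever a model is retrained, so that a widening gap is flagged for human review instead of being discovered in a periodic audit.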

Best practices for evolving data governance

Jain recommends that teams foster federated ownership and maintain centralized standards. Data mesh patterns can guide this approach by establishing domain-oriented ownership, treating data as a product and providing self-service platforms. Organizations can then scale safely while avoiding central bottlenecks.

Embedding dynamic access, identity and agent governance into these workflows is essential. This includes implementing finer-grained, context-aware access controls, especially for sensitive data used by AI workflows. Governance must cover non-human agents and continuous access patterns. Teams should explore policy-as-code and automated controls to move approvals from emails and documents to CI/CD systems. Automated lineage and metadata tools can standardize data lineage processes across engines.
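One way to picture moving approvals into CI/CD is a check that validates proposed access rules before they are merged. The sketch below is a hedged illustration: the rule format, the `SENSITIVE_DATASETS` set and the three checks are hypothetical and would differ by organization. It shows a pipeline step rejecting a change automatically when a rule on sensitive data lacks a stated purpose, when an agent is granted write access to sensitive data, or when an agent grant has no expiry.

```python
# Hypothetical list of datasets that need stricter handling.
SENSITIVE_DATASETS = {"customer_pii", "payroll"}


def validate_policy(rules):
    """Return a list of violations; an empty list means the change passes."""
    violations = []
    for index, rule in enumerate(rules):
        name = rule.get("name", f"rule[{index}]")
        is_agent = rule.get("identity_type") == "agent"
        if rule.get("dataset") in SENSITIVE_DATASETS:
            # Context-aware check: sensitive data needs a stated purpose.
            if not rule.get("purpose"):
                violations.append(
                    f"{name}: sensitive dataset requires a stated purpose")
            if is_agent and "write" in rule.get("actions", []):
                violations.append(
                    f"{name}: agents may not write to sensitive datasets")
        # Non-human grants should not be open-ended.
        if is_agent and not rule.get("expires"):
            violations.append(f"{name}: agent grants must have an expiry date")
    return violations


def main(rules):
    """Print violations and return an exit code a CI job could use."""
    violations = validate_policy(rules)
    for violation in violations:
        print(f"POLICY VIOLATION: {violation}")
    return 1 if violations else 0


# Hypothetical proposed change containing one acceptable and one risky rule.
PROPOSED_RULES = [
    {"name": "support-read", "identity_type": "human",
     "dataset": "customer_pii", "actions": ["read"],
     "purpose": "customer support"},
    {"name": "agent-write", "identity_type": "agent",
     "dataset": "customer_pii", "actions": ["read", "write"]},
]

exit_code = main(PROPOSED_RULES)
print("exit code:", exit_code)
```

In a real pipeline, the return value of `main` would typically be passed to `sys.exit` so that a nonzero code fails the build, and the rules would be loaded from a versioned file rather than defined inline.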

Jain also advised taking advantage of platform-native governance tooling. This will help centralize classification, data maps and access workflows to accelerate safe self-service. Many platforms now offer governance playbooks and reference architectures to provide guardrails and oversee data lineage. In tandem, investigate recent AI risk frameworks, such as the NIST AI RMF, its 2024 GenAI Profile and ISO standards, to develop a shared control language. Doing so improves collaboration on data suitability, provenance, transparency, human oversight and evaluation.

"Accelerate data governance with well-trodden patterns, not bespoke one-off efforts," Jain said.

Kramer said companies should explore incorporating "product operating model" philosophies and methods to drive closer alignment to business value and outcomes. However, technologists must develop and strengthen their ability to tell that story to a business audience, as they are more accustomed to storytelling for other technologists and struggle to adapt.

"This creates opportunities for some to whom it comes more naturally, but enterprises need to recognize this skill gap and design training and learning paths to scale the skillset, drive innovation, and ultimately to achieve objectives of AI investment," Kramer said.

George Lawton is a journalist based in London. Over the last 30 years, he has written more than 3,000 stories about computers, communications, knowledge management, business, health and other areas that interest him.