What is automation bias?

Automation bias is an overreliance by human operators on automated systems, such as computer hardware, software and algorithms, to make decisions, even when the machine-generated output is incorrect or contradicts human judgment.

This overreliance can come from familiarity with how a system works or prior experience using it. It can also be influenced by cognitive biases, such as authority bias, as well as by heavy workloads and tight deadlines. Overestimating a machine's capabilities, coupled with human complacency, can result in biased outcomes and critical errors.

Automated systems -- propelled by AI's promise of transformative productivity gains across industries -- still require human oversight. High-risk systems in healthcare, financial services, criminal justice and other sectors highlight the dangers of automation bias, which can, in some cases, lead to catastrophic outcomes.

One such example is the British Post Office Horizon scandal, which was first reported by Computer Weekly, now an Informa TechTarget publication. In 1999, the U.K. government started implementing the Horizon accounting system at approximately 14,000 Post Office branches. Unlike previous paper-based accounting methods, the new system did not offer a way to explain financial shortfalls. Based on miscalculations in Horizon and despite contradictory evidence and internal warnings about the software's flaws, the Post Office wrongly prosecuted more than 700 subpostmasters for theft and false accounting between 1999 and 2015.

How does automation bias work?

Automation bias generally manifests in two ways:

- Errors of commission. These errors occur when individuals follow an automated system's recommendations, even when the output appears questionable or contradictory.

- Errors of omission. These errors happen when human operators fail to recognize or act upon an automation failure.
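The two error types above can be told apart once a decision is reviewed in hindsight. The following is a minimal sketch, assuming a hypothetical `Decision` record with three boolean fields; the field names and the `classify` function are illustrative and not part of any standard tool.

```python
from dataclasses import dataclass
from enum import Enum
from typing import Optional


class BiasError(Enum):
    COMMISSION = "commission"  # operator acted on a wrong recommendation
    OMISSION = "omission"      # operator missed a failure the system did not flag


@dataclass
class Decision:
    # All three fields are hypothetical names chosen for this sketch.
    system_flagged: bool        # did the system issue an alert or recommendation?
    system_correct: bool        # was the system's behavior right, judged in hindsight?
    operator_went_along: bool   # did the operator act in line with the system?


def classify(decision: Decision) -> Optional[BiasError]:
    """Return the automation-bias error type, or None if there was none."""
    if decision.system_correct:
        return None  # following a correct system is not an error
    if not decision.operator_went_along:
        return None  # the operator caught the failure and overrode it
    if decision.system_flagged:
        return BiasError.COMMISSION  # followed a wrong recommendation
    return BiasError.OMISSION  # system stayed silent and so did the operator
```

Judging whether the system was correct usually requires later evidence, so a classification like this fits post-incident review better than real-time use.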

Research on automation bias indicates that human decision-makers often place too much confidence in AI. However, when decisions involve higher stakes, people might become more skeptical about trusting algorithms. This tendency is especially evident in individuals with algorithm aversion, a psychological phenomenon in which people are less likely to trust algorithms, particularly after witnessing them make mistakes.

Early automation systems focused on mechanizing tasks previously performed by hand. With the advent of electronics and computer processing, manual tasks were automated through rules-based systems, programmed by human engineers using strict if-then logic. These early systems relied on deterministic algorithms -- defined sequences of instructions that always produce the same output for a given input and are suited to well-structured problems -- and graphical user interfaces to streamline process automation workflows.
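To make the idea of strict if-then logic concrete, here is a minimal sketch of a rules-based decision. The expense-approval scenario, the `approve_expense` name and the 100.0 threshold are assumptions made up for illustration.

```python
def approve_expense(amount: float, has_receipt: bool) -> str:
    """Deterministic rule set: the same inputs always give the same answer."""
    # Rule 1: no receipt, no reimbursement.
    if not has_receipt:
        return "reject"
    # Rule 2: small claims are approved automatically (threshold is hypothetical).
    if amount <= 100.0:
        return "approve"
    # Rule 3: everything else goes to a person.
    return "escalate"
```

Every path through a system like this can be read and traced by an engineer, which is the transparency that learned models often lack.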

In contrast, AI systems use machine learning models, including large language models (LLMs), that adapt based on new information rather than following fixed rules. These systems learn patterns and apply them to previously unseen data; however, how they arrive at their outputs is often far less transparent.

As some AI providers exhaust available internet data, their models increasingly rely on reinforcement learning, alongside supervised and unsupervised learning, to generate outputs. Automated machine learning represents a fusion of automation and machine learning, enabling systems to optimize model development with minimal human intervention.

Why automation bias is dangerous

While it's important for human operators to trust or have confidence that an automated system will help them complete a task, those who are overworked or under time pressure might allow the machine to do the "thinking" for them -- a human tendency known as cognitive offloading. Overreliance on automated decision-making might be more prevalent among inexperienced individuals or those who lack confidence. Some individuals might become so reliant on automated systems that they fail to develop the critical skill sets and human judgment needed to assess high-risk scenarios. Pilots who need to switch from autopilot to manual controls during extreme weather events are one example.

AI comes with its own set of challenges. The LLMs in generative AI-enabled automation systems can sometimes produce false or made-up outputs, known as AI hallucinations. Increasingly, companies are making employees aware of the dangers of relying on automated decision-making -- especially AI-generated outputs -- for information critical to customers or business performance.

How to overcome automation bias

Mitigation strategies to overcome automation bias should involve the following.

Mandatory human oversight and involvement

Human involvement should be required in any automated decision-making process that might affect individuals' rights, safety or business outcomes. Emphasizing the role of human decisions in machine interactions helps ensure fairness, accountability and quality control.
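One way to enforce this kind of oversight in software is a review gate that holds certain decisions until a person signs off. The sketch below assumes a hypothetical `AutomatedDecision` record and a made-up confidence threshold of 0.9; real criteria would depend on the system and its risks.

```python
from dataclasses import dataclass


@dataclass
class AutomatedDecision:
    # Field names are hypothetical, chosen for this sketch.
    outcome: str
    confidence: float               # system's self-reported confidence, 0.0 to 1.0
    affects_rights_or_safety: bool  # does the outcome touch a person's rights or safety?


def needs_human_review(decision: AutomatedDecision, min_confidence: float = 0.9) -> bool:
    """Route a decision to a person if it is high impact or low confidence."""
    if decision.affects_rights_or_safety:
        return True  # high-impact decisions always get a human check
    return decision.confidence < min_confidence
```

Routing on impact first means a confident but wrong system still cannot act alone on decisions that matter most.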

Focus on education and training

Human operators must maintain a healthy level of skepticism and know when to seek a second opinion or verify algorithmic outputs. Beyond raising awareness among employees and third-party vendors about the dangers of automation bias, management should also track errors and overrides, using that data to retrain both systems and staff.
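Tracking errors and overrides can start with a simple tally. This is a minimal sketch, assuming each log entry is a dictionary with two hypothetical boolean keys, `overridden` and `system_correct`; the category names are also invented for illustration.

```python
from collections import Counter

CATEGORIES = (
    "accepted_correct",      # operator followed a correct output
    "accepted_error",        # operator followed a wrong output (possible automation bias)
    "justified_override",    # operator overrode a wrong output
    "unjustified_override",  # operator overrode a correct output
)


def summarize_overrides(log: list[dict]) -> dict[str, float]:
    """Return the share of logged decisions that fall into each category."""
    counts = Counter()
    for entry in log:
        if entry["overridden"]:
            key = "unjustified_override" if entry["system_correct"] else "justified_override"
        else:
            key = "accepted_correct" if entry["system_correct"] else "accepted_error"
        counts[key] += 1
    total = len(log)
    # An empty log yields zeros rather than a division error.
    return {name: (counts[name] / total if total else 0.0) for name in CATEGORIES}
```

A rising share of `accepted_error` suggests staff need retraining, while a rising share of `justified_override` suggests the system itself does.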

Document compliance with data governance frameworks and regulations

The European Union's (EU) AI Act, which entered into force in August 2024 with obligations phasing in over time, defines the following levels of risk for AI systems:

- Unacceptable.

- High.

- Limited, with transparency obligations.

- Minimal or no risk.

The EU's AI Act requires mechanisms that enable human oversight and compliance documentation with traceability for all high-risk AI systems used in critical infrastructure and other public services, such as healthcare and education. Although chatbots are classified as limited risk in the EU's AI Act, entities that deploy them must inform users they're interacting with a chatbot.
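For internal compliance tracking, the risk levels and obligations described above could be recorded in a small lookup structure. The sketch below is illustrative only and is not a legal classification tool; the `RiskLevel` and `OBLIGATIONS` names are assumptions, and the obligations listed are limited to those mentioned in this article.

```python
from enum import Enum


class RiskLevel(Enum):
    UNACCEPTABLE = "unacceptable"
    HIGH = "high"
    LIMITED = "limited"
    MINIMAL = "minimal or no risk"


# Only the obligations named in the article are listed here;
# the act itself contains many more requirements.
OBLIGATIONS = {
    RiskLevel.HIGH: [
        "mechanisms that enable human oversight",
        "compliance documentation with traceability",
    ],
    RiskLevel.LIMITED: [
        "inform users they are interacting with a chatbot",
    ],
}


def obligations_for(level: RiskLevel) -> list[str]:
    """Return the obligations recorded for a risk level, or an empty list."""
    return OBLIGATIONS.get(level, [])
```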

Evaluate data integrity and system-level design

To minimize the potential for bias pitfalls stemming from deficient IT processes, such as data corruption, inadequate security measures, incorrect logic, poor-quality data or automation errors, transparency and traceability are essential. This includes practices like explainable AI to clarify decision-making processes. It's important to ask the following question: How is the LLM trained or fine-tuned? When software engineers code algorithms, it's critical to have testers who can verify that the algorithms function as intended. Comprehensive evaluation processes should be in place to monitor the design, deployment and use of algorithms.

Automation bias vs. machine bias: Key differences

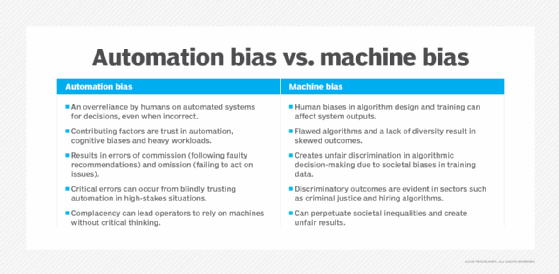

When humans trust a machine's decision-making, even when valid data points to other conclusions, this is known as automation bias. Machine bias, by contrast, is built into the machine itself through the system's design, logic or algorithms, and the data it collects. In the case of AI systems, machine learning bias often originates from the training data the system learns from. For that reason, bias audits and other measures to enforce transparency are necessary.

For example, a ProPublica investigation in 2016 uncovered machine bias in Northpointe's Correctional Offender Management Profiling for Alternative Sanctions, or COMPAS, system. Black defendants were more likely to be misclassified as "high risk" by the system, and a higher percentage of white defendants were mislabeled as "low risk." The investigation found that both judges and parole officers relied on the system's automated risk assessments of recidivism to make decisions about sentencing and bail requirements, even when the defendants' criminal records suggested otherwise.
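A basic bias audit compares error rates across groups. The sketch below computes false positive and false negative rates per group from records of the form (group, predicted high risk, reoffended). The function name and record layout are assumptions for illustration, and this is not ProPublica's methodology or data.

```python
from collections import defaultdict
from typing import Optional


def error_rates_by_group(
    records: list[tuple[str, bool, bool]],
) -> dict[str, dict[str, Optional[float]]]:
    """Compute false positive and false negative rates for each group.

    Each record is (group, predicted_high_risk, reoffended).
    """
    counts = defaultdict(lambda: {"fp": 0, "neg": 0, "fn": 0, "pos": 0})
    for group, predicted_high_risk, reoffended in records:
        c = counts[group]
        if reoffended:
            c["pos"] += 1
            if not predicted_high_risk:
                c["fn"] += 1  # labeled low risk but did reoffend
        else:
            c["neg"] += 1
            if predicted_high_risk:
                c["fp"] += 1  # labeled high risk but did not reoffend
    return {
        group: {
            # A rate is None when the group has no cases to divide by.
            "false_positive_rate": c["fp"] / c["neg"] if c["neg"] else None,
            "false_negative_rate": c["fn"] / c["pos"] if c["pos"] else None,
        }
        for group, c in counts.items()
    }
```

A large gap between groups in either rate is a signal to investigate the model and its training data, not proof of a specific cause.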

The EU's AI Act requires that high-risk AI systems meet data quality standards, with compliance requirements that are traceable and show that data sets -- including those from suppliers -- are free from bias that can lead to discriminatory outcomes.

Can bias ever be justified?

In some cases, heavy reliance on the output of automated systems to complete a task is justified. For instance, automated monitoring systems might use historical data and predictive analytics to help security analysts flag unusual behavior, such as a credit card used for a large purchase in a location the cardholder has never visited, which can help detect potential fraud in payment systems and other financial services.
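The credit card example can be sketched as a simple check against a cardholder's history. The function name, parameters and threshold below are hypothetical; production fraud systems typically rely on statistical models rather than a single rule.

```python
def flag_transaction(
    amount: float,
    location: str,
    visited_locations: set[str],
    typical_max: float,
) -> bool:
    """Flag a purchase that is both unusually large and in an unfamiliar place."""
    is_large = amount > typical_max                    # above the cardholder's usual spending
    is_new_location = location not in visited_locations
    return is_large and is_new_location
```

The flag is meant to prompt an analyst's review, so the final decision stays with a person.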

Similarly, in AI systems, training machine learning models and algorithms to identify and remove hate speech or racism can be considered a positive or good bias. This kind of bias is intentionally included to support ethical standards or meet legal requirements.