Kepler project aims to help curb Kubernetes energy waste

Running Kubernetes clusters can lead to high costs and energy use. The researchers behind the Kepler project hope the tool will help IT pros track and curb cluster energy waste.

Sustainability has become a priority in all aspects of a business, and IT operations is no exception. To manage energy efficiency and be climate-conscious, IT ops teams must look closely at where and what is using the most energy -- and one major offender is Kubernetes clusters.

But many teams don't know how to measure pod energy use or overall energy consumption.

At the Linux Foundation Open Source Summit 2022, Huamin Chen, senior principal software engineer at Red Hat, and Chen Wang, research staff member at IBM, led a session on how to approach and implement sustainability in container environments with the Kepler project.

How to understand Kubernetes energy use

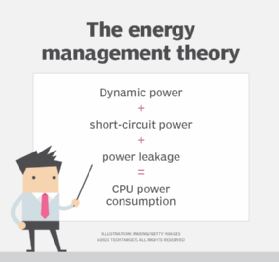

Chen explained that IT ops teams can pinpoint what consumes the most electrical energy using energy management theory, which models CPU power consumption as the sum of dynamic power, short-circuit power and leakage power.

Dynamic power, which is consumed when CPU instructions run, is a major CPU energy consumer. It depends on three factors: switched load capacitance, circuit operating frequency and voltage.

In addition, IT ops teams must consider leakage current, which keeps drawing power even when the application is dormant.
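
Written out, the relationship described above takes roughly the following form. The second line is the standard textbook CMOS approximation for dynamic power, not a formula quoted from the session. The symbols $\alpha$ (activity factor), $C$ (switched load capacitance), $V$ (supply voltage) and $f$ (operating frequency) are notation assumed here for illustration.

```latex
\begin{align}
P_{\text{CPU}} &= P_{\text{dynamic}} + P_{\text{short-circuit}} + P_{\text{leakage}} \\
P_{\text{dynamic}} &\approx \alpha \, C \, V^{2} f
\end{align}
```

Because voltage enters as a square, lowering voltage along with frequency reduces dynamic power more than lowering frequency alone, while the leakage term remains even when no instructions run.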

Kubernetes energy measurement methods

From there, IT ops teams can use the energy measurement methodology to measure circuit frequency, capacitance and execution time. In an ideal world, IT ops teams could monitor each circuit frequency, the number of circuits on and the circuit durations to find where energy is being wasted.

However, at the time of the session, there was no way to measure a CPU's capacitance or execution time -- and energy consumption metrics were available only at the node level.

To bypass these hurdles, IT ops teams must get creative: use the average frequency over time as an approximation of circuit frequency, the number of CPU instructions as an approximation of capacitance and the number of CPU cycles as an approximation of execution time. With these rough measurements in mind, IT ops teams can start to attribute power consumption to processes, containers or pods.
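
One simple way to picture this attribution is a proportional split: take the energy measured for the whole node and divide it among pods according to each pod's share of a hardware counter. The sketch below is a minimal illustration under that assumption, not a description of Kepler's actual model. The `PodCounters` class, the `attribute_node_energy` function and the sample numbers are all hypothetical.

```python
from dataclasses import dataclass


@dataclass
class PodCounters:
    """Counter deltas for one pod over a sampling interval (hypothetical shape)."""
    name: str
    cpu_instructions: int
    cpu_cycles: int


def attribute_node_energy(node_joules, pods, counter="cpu_instructions"):
    """Split node-level energy across pods in proportion to one counter.

    Assumption: energy scales linearly with the chosen counter, which is
    only a rough approximation of real hardware behavior.
    """
    total = sum(getattr(p, counter) for p in pods)
    if total == 0:
        # Nothing ran during the interval, so attribute no energy to any pod.
        return {p.name: 0.0 for p in pods}
    return {p.name: node_joules * getattr(p, counter) / total for p in pods}


# Made-up sample values, for illustration only.
pods = [
    PodCounters("web", cpu_instructions=3_000_000_000, cpu_cycles=2_000_000_000),
    PodCounters("batch", cpu_instructions=1_000_000_000, cpu_cycles=2_000_000_000),
]
shares = attribute_node_energy(120.0, pods)
print(shares)
```

Switching `counter` to `"cpu_cycles"` changes the split, which shows how sensitive this kind of estimate is to the proxy chosen.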

Working with Kepler

To help with this process, Chen and Wang introduced Kepler, or Kubernetes-based Efficient Power Level Exporter, to capture power use metrics. Kepler is an open source project that focuses on reporting, reduction and regression to help curb energy use.

The Kepler tool integrates with Kubernetes to collect information from the CPU, GPU and RAM through eBPF, or extended Berkeley Packet Filter, programs to measure and capture performance. Kepler collects data at the kernel level so that the energy can be attributed to processes with as much transparency as possible.

Once Kepler collects the data, IT ops teams can export it to Prometheus, where the metrics can feed readable dashboards that help inform energy conservation decisions. In addition, Kepler uses regression and machine learning models to estimate future energy consumption and improve accuracy.
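
To give a sense of what a regression-based estimate involves, the sketch below fits a straight line relating a counter value to measured energy with ordinary least squares, then uses it to predict energy for an unseen counter value. It is a deliberately simplified stand-in, not Kepler's model. The `fit_line` and `predict` functions are hypothetical names, and the sample data is made up for illustration.

```python
def fit_line(xs, ys):
    """Ordinary least squares fit of y = slope * x + intercept."""
    if len(xs) != len(ys) or len(xs) < 2:
        raise ValueError("need at least two paired samples")
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    if sxx == 0:
        raise ValueError("all x values are identical; slope is undefined")
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    return slope, intercept


def predict(slope, intercept, x):
    """Estimate energy for a counter value using the fitted line."""
    return slope * x + intercept


# Made-up training samples: billions of instructions vs. joules measured.
instructions = [1.0, 2.0, 3.0, 4.0]
joules = [15.0, 25.0, 35.0, 45.0]

slope, intercept = fit_line(instructions, joules)
estimate = predict(slope, intercept, 5.0)
print(slope, intercept, estimate)
```

In this toy model, the intercept plays the role of energy drawn regardless of activity, and the slope is the extra energy per unit of work.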

In the future, the Kepler project hopes to make an energy consumption and carbon emissions dashboard to display the carbon footprint per pod. The tool's developers also hope to provide energy-aware pod scheduling and node tuning.