Understand how Docker works in the VM-based IT world

With advantages for microservices architectures, disaster recovery and utilization density, among other areas, containers should step out of virtual machines' shadow.

Docker, a household name in IT, is still far from mainstream adoption, although it has gained some traction in enterprises. As acceptance grows, your organization can't ignore containers and stick with virtual machines forever.

In the simplest terms, Docker is a way to package and provision code so that it can move across different parts of an IT platform. Even if the mechanics of Docker seem opaque, enterprise IT teams use it for a range of purposes. Application containerization optimizes hybrid cloud setups and provides a flexible, responsive IT platform.

Does that mean Docker is used for the same purposes as VMs? Yes and no. Docker operates differently, and that informs where it is used.

How Docker works in contrast to VMs

The basic VM holds everything the workload needs to run, such as the OS, app server, application and any associated databases. That package can move to any platform that supports the VM: VMware VMs run on any platform with an ESXi hypervisor, and Microsoft VMs run on any platform with Hyper-V.

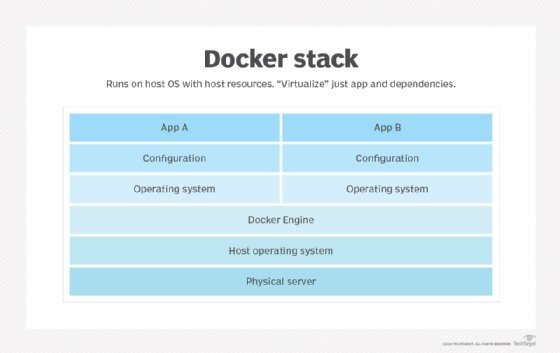

A Docker container works differently. It holds only what the application needs to run on top of a platform. Docker containers don't virtualize hardware, and they aren't meant to host a complete application workload. A container generally doesn't include its own full OS; it shares the host's OS kernel. Nor does each container need its own app server, provided the underlying platform has one installed.

Containers aren't the pinnacle of virtualization

Docker containers are less portable than VMs. Docker images are OS-dependent (a Linux image, for example, needs a Linux kernel to run), and in some cases a container might require a specific OS version and patch level, although hard-coding version dependencies is bad practice.

A long-standing user complaint about Docker was that a poorly written container could pass privileged calls through to the underlying platform. If a malicious entity hijacked such a container, it could compromise the host and, in turn, every container running on it. Later versions of Docker addressed this security issue.

These points prompt container newcomers to ask: If VMs are seemingly more platform-independent and more secure, why use Docker at all?

Containers are more efficient than VMs

The way in which Docker works gives it both obvious and subtle advantages over server virtualization with VMs.

Docker containers require fewer resources, both physical and virtual, than comparable VMs. Because the OS sits outside the containers and is shared among them, each container needs significantly less storage, whereas each VM must store and run its own OS. As a result, a platform can typically run several containers for every VM it could host.
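The storage argument comes down to simple arithmetic: N VMs carry N copies of the OS, while N containers on one host share a single copy. The sketch below makes that explicit. The `Workload` class, the function names and every size are hypothetical, chosen only for illustration, not measured figures.

```python
from dataclasses import dataclass


@dataclass
class Workload:
    """A hypothetical application workload; sizes are illustrative, in GB."""
    name: str
    app_gb: float  # application code, libraries and data the workload needs


def vm_storage_gb(workloads: list, os_gb: float) -> float:
    """Each VM stores its own copy of the OS plus its application."""
    return sum(os_gb + w.app_gb for w in workloads)


def container_storage_gb(workloads: list, os_gb: float) -> float:
    """Containers share one host OS; each container stores only its application."""
    return os_gb + sum(w.app_gb for w in workloads)


# Assumed numbers: ten small services, 0.5 GB each, on a 10 GB OS image.
apps = [Workload(f"svc-{i}", app_gb=0.5) for i in range(10)]
print(vm_storage_gb(apps, os_gb=10.0))         # 105.0, i.e. 10 * (10 + 0.5)
print(container_storage_gb(apps, os_gb=10.0))  # 15.0, i.e. 10 + 10 * 0.5
```

The gap widens as the number of workloads grows, because the VM total adds one OS per workload while the container total adds the OS only once.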

The shared OS means container maintenance can be easier than VM maintenance, but this isn't guaranteed. Admins must touch every single VM to apply an OS patch or upgrade, whereas in a Docker environment they update only the one underlying shared OS.
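To see why patching scales differently, count how many OS instances one patch touches under each model. This is a minimal sketch. The estate layout and host names are hypothetical, and it deliberately ignores patching the hypervisor itself.

```python
def os_instances_to_patch(workloads_per_host: dict, model: str) -> int:
    """Count the OS instances an admin must update for one OS patch.

    Simplifying assumption: hypervisor patching is ignored for the VM model.
    """
    if model == "vm":
        # Every VM runs its own guest OS, so each one is touched individually.
        return sum(workloads_per_host.values())
    if model == "container":
        # Containers on a host share that host's OS: one update per host.
        return len(workloads_per_host)
    raise ValueError(f"unknown model: {model!r}")


# Hypothetical estate: three hosts and the number of workloads each runs.
estate = {"host-a": 8, "host-b": 12, "host-c": 5}
print(os_instances_to_patch(estate, "vm"))         # 25
print(os_instances_to_patch(estate, "container"))  # 3
```

The container count stays this small only if every workload on a host can live with the same OS version and patch level.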

Maintenance gets harder when workloads are sensitive to OS version and patch level. In a VM, each isolated workload has its own OS at whatever version and with whatever patches it needs; with containers, every container on a host must share that host's single OS. Some organizations run containers inside VMs to get around this hurdle, but this isn't a best practice for long-term operations: It adds unnecessary complexity and introduces performance issues.
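Version sensitivity can be framed as a grouping problem. Because containers on one host share a single OS, each distinct pinned OS version needs its own host, or a VM as the workaround. The sketch below groups workloads that way under stated assumptions. The workload names and version strings such as `os-1.2` are hypothetical. It also assumes that workloads with no version requirement (`None`) can join any group.

```python
from typing import Dict, List, Optional


def host_os_groups(required_os: Dict[str, Optional[str]]) -> Dict[str, List[str]]:
    """Group container workloads by the OS version they insist on.

    Each key is one host OS version that must be provided; unpinned
    workloads (None) are placed in the first group, sorted by version.
    """
    groups: Dict[str, List[str]] = {}
    flexible: List[str] = []
    for name, version in sorted(required_os.items()):
        if version is None:
            flexible.append(name)
        else:
            groups.setdefault(version, []).append(name)
    if not groups:
        # Nothing is pinned, so a single shared host OS can serve everything.
        groups["any"] = []
    groups[sorted(groups)[0]].extend(flexible)
    return groups


# Hypothetical workloads and the OS versions they are pinned to.
needs = {"billing": "os-1.2", "search": "os-1.3", "web": None, "auth": "os-1.2"}
plan = host_os_groups(needs)
print(len(plan))  # 2 distinct host OS versions required
print(plan)
```

Every extra pinned version adds another OS to maintain, which erodes the single-shared-OS maintenance advantage.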

Docker also enables more granular application deployments. Running a microservices environment on VMs is possible, but as each small service consumes the storage and resources of a full VM, organizations end up with regret and a thinner wallet. Docker application containerization lets admins create, deploy and link small functional pieces of code into a composite application that remains lightweight.

Docker also has advantages over VMs for business continuity and disaster recovery. New instances of a Docker container can be provisioned on different parts of the IT platform quickly and easily: A container that normally runs on premises can be moved to a public cloud environment and provisioned there, or brought from cold storage into a live environment, faster than a VM.

In some cases, VMs are still the right model. For example, a static application environment in which the ability to provision it onto new hardware is paramount suits VM-based deployment. VMs won't disappear overnight. Generally speaking, however, Docker is built for the DevOps age: It can handle continuous development and delivery, and its microservices focus makes it fit for future application architectures. Combine this with Docker's own support ecosystem, which is technically complex but capable, or with an orchestration and management tool such as Kubernetes, and Docker is ready for prime time.