Gaussian splatting

What is Gaussian splatting?

Gaussian splatting is a technique that renders extremely high-quality images from numerous scans of an object. The result can be viewed from any angle and explored in real time. The technology can also be trained relatively quickly and produces smaller files than the traditional formats used to represent a 3D scene in metaverse, digital twin, spatial computing and virtual reality (VR) applications. Although its files are larger than those of a neural radiance field (NeRF), Gaussian splats, or Gaussians, render far faster at the same or better quality, which results in a more interactive experience.

The term Gaussian splatting has two parts. Gaussian comes from the last name of Carl Friedrich Gauss, who pioneered the continuous probability distribution that now bears his name in the 1800s. It refers to the way the technology represents content using blurry clouds rather than the well-defined triangles and precise pixels used in the common 3D rendering techniques found in most modern tools. Splatting is based on the sound a snowball makes as it hits and spreads across a wall.

Emerging in August 2023, Gaussian splatting promised a dramatic improvement in the ability to capture reality quickly, cheaply and accurately, and then render it across phones, computers, VR and extended reality devices.

It's still early days, but researchers and vendors are already finding ways to shrink file sizes, capture and render motion, and create dynamic 3D avatars that let users explore, for example, yoga poses from any angle. Companies like Adobe, Apple, Google and Meta are starting to integrate the new technique into their latest enterprise applications. Developers are also quickly rolling out plugins for popular metaverse and spatial computing platforms like Epic's Unreal Engine, Nvidia Omniverse and Unity. Consumer apps from Polycam and Luma AI let users create Gaussian splats in the cloud using a smartphone or tablet.

History of Gaussian splatting

The core idea behind Gaussian splatting originated in a 1991 doctoral thesis by Lee Alan Westover at the University of North Carolina at Chapel Hill, who named splatting after the sound a snowball makes when it hits a brick wall. In his thesis, Westover explained splatting in the following way:

"Consider the input volume to be a stack of snowballs and the image plane to be a brick wall. Image generation is the process of throwing each snowball, one-by-one, at the wall. As each snowball hits the wall, the snowball flattens, makes a noise that sounds like 'splat' and spreads its contribution across the wall. Snowballs thrown later obscure snowballs thrown earlier. This mental picture of the feed-forward rendering process inspired the name splatting. In the actual algorithm, the term splat refers to the process of determining a sample's image-space footprint on the image plane, and adding the sample's effect over that footprint to the image."

However, the algorithms Westover created did not run efficiently on the hardware at the time. So, the computer graphics industry turned to other techniques based on point clouds, voxels and meshes to represent a 3D scene.

A related challenge is finding better ways to capture a 3D representation of the world. Researchers began exploring photogrammetry techniques that could interpolate 3D models from overlapping images. But these models were computationally expensive to build and slow to render. Further research in 2006 discovered ways to add details through a process called structure from motion (SfM) that filled in the gaps by generating new intermediate views. However, the resulting models could hallucinate details that did not exist in the original scene.

In 2020, researchers developed NeRFs that could fill in these gaps to generate high-quality images from any angle and pack them into much smaller files. NeRFs use neural networks to model the radiance of light one might view from various angles. However, they were slow to train and only let users navigate these virtual worlds at approximately eight frames per second (FPS). These limitations spurred considerable interest among researchers and vendors in overcoming them.

In August 2023, a team of French and German researchers built on some of the core innovations in NeRFs but changed how they store data using Gaussian splatting. They also trained the model using gradient descent. They published their results at SIGGRAPH 2023 in a paper titled "3D Gaussian Splatting for Real-Time Radiance Field Rendering." Their new approach reduced the need to compute for empty spaces, introduced a technique to optimize the creation of Gaussians, and included a new algorithm that is faster to train and render.

However, the approach was also memory-intensive, generated larger file sizes and, like NeRFs, could only render static scenes of a single moment in time. Since then, other researchers have found ways to reduce file sizes, render moving content and improve performance.

How does Gaussian splatting work?

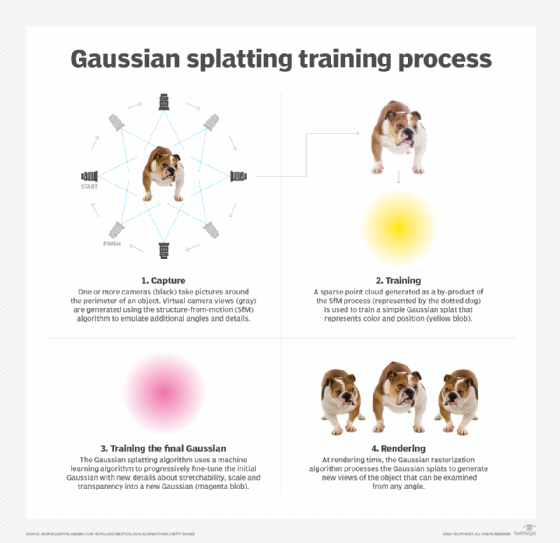

Gaussian splatting starts by taking images or videos of a scene from different angles. SfM techniques then fill in the blanks to estimate a 3D point cloud that models what the scene would look like from all directions. Next, the algorithm converts each point into overlapping Gaussian splats that look like blurry clouds. However, these initial Gaussians only represent position and color.
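The initialization step can be pictured with a small data structure. The following is a minimal sketch, not the layout used by any particular tool: the `Gaussian` class, the `gaussians_from_points` helper and the default scale and opacity values are all hypothetical, and the three points stand in for a real SfM point cloud.

```python
from dataclasses import dataclass
from typing import Tuple

Vec3 = Tuple[float, float, float]


@dataclass
class Gaussian:
    position: Vec3  # center of the splat in world space
    color: Vec3     # RGB, each channel in [0, 1]
    # Placeholder values (assumed defaults) that training would refine later.
    scale: Vec3 = (0.01, 0.01, 0.01)
    opacity: float = 0.1


def gaussians_from_points(points, colors):
    """Create one initial Gaussian per point in the point cloud."""
    if len(points) != len(colors):
        raise ValueError("points and colors must have the same length")
    return [Gaussian(tuple(p), tuple(c)) for p, c in zip(points, colors)]


# Three made-up points standing in for an SfM point cloud.
points = [(0.0, 0.0, 1.0), (0.5, 0.1, 1.2), (-0.3, 0.4, 0.9)]
colors = [(1.0, 0.0, 0.0), (0.0, 1.0, 0.0), (0.0, 0.0, 1.0)]
splats = gaussians_from_points(points, colors)
print(len(splats), splats[0])
```

At this stage only `position` and `color` carry information from the capture; the other fields are starting guesses.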

A follow-on training process cycles through thousands of iterations of calculation using gradient descent to fill in additional details about how the point might be stretched or scaled (covariance) and its transparency. This process creates a file that contains millions of particles representing position, color, covariance and transparency.
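To give a feel for what gradient descent does here, the toy below fits a single one-dimensional splat to target samples by repeatedly nudging its opacity and scale in the direction that lowers the error. It is only an illustration under simplifying assumptions: real training adjusts millions of 3D Gaussians against the captured photos, and the function names, learning rate and step count here are invented for the example.

```python
import math


def splat(x, opacity, scale):
    """Value of a 1D Gaussian 'splat' centered at 0."""
    return opacity * math.exp(-x * x / (2.0 * scale * scale))


def fit_splat(xs, targets, opacity=0.5, scale=1.0, lr=0.5, steps=2000):
    """Fit opacity and scale to target samples with plain gradient descent."""
    log_scale = math.log(scale)  # optimize log(scale) so scale stays positive
    n = len(xs)
    for _ in range(steps):
        s = math.exp(log_scale)
        grad_opacity = 0.0
        grad_log_scale = 0.0
        for x, t in zip(xs, targets):
            falloff = math.exp(-x * x / (2.0 * s * s))
            residual = opacity * falloff - t
            # Derivatives of the mean squared error for each parameter.
            grad_opacity += 2.0 * residual * falloff / n
            grad_log_scale += (
                2.0 * residual * opacity * falloff * x * x / (s * s) / n
            )
        opacity -= lr * grad_opacity
        log_scale -= lr * grad_log_scale
    return opacity, math.exp(log_scale)


# Target profile generated from a known splat, so the answer can be checked.
xs = [i / 10.0 for i in range(-20, 21)]
targets = [splat(x, opacity=0.8, scale=0.5) for x in xs]
opacity, scale = fit_splat(xs, targets)
print(f"opacity={opacity:.3f}, scale={scale:.3f}")
```

Starting from an opacity of 0.5 and a scale of 1.0, the loop should settle close to the values used to generate the targets (0.8 and 0.5).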

At rendering time, a process called Gaussian rasterization transforms each Gaussian particle into the appropriate red, green and blue colored pixels that make up each view. This is related to the rasterization process that other techniques use to transform raw 3D data, but it uses different algorithms. For example, the most common rasterization method represents the world using triangles -- colored in with textures -- that are collected and arranged in a mesh. The mesh then takes on the texture of the complete collection of triangles. Gaussian splatting, by contrast, replaces the precisely defined triangles with Gaussian blurs that also capture the texture directly.
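The article does not spell out how overlapping splats are combined into one pixel. The sketch below assumes the approach described in the 2023 paper: splats are sorted by depth and alpha-blended front to back. The function names, the isotropic 2D falloff and the two sample splats are simplifications made up for this example.

```python
import math


def splat_alpha(opacity, dx, dy, sigma):
    """Opacity of a splat at a pixel offset (dx, dy) from its center.

    Assumes an isotropic 2D Gaussian; real splats are stretched ellipses.
    """
    return opacity * math.exp(-(dx * dx + dy * dy) / (2.0 * sigma * sigma))


def composite_pixel(splats):
    """Blend the splats covering one pixel, nearest first.

    Each splat is (depth, (r, g, b), alpha), where alpha already includes
    the Gaussian falloff at this pixel.
    """
    color = [0.0, 0.0, 0.0]
    transmittance = 1.0  # fraction of light not yet blocked
    for _, rgb, alpha in sorted(splats, key=lambda s: s[0]):
        weight = alpha * transmittance
        for i in range(3):
            color[i] += weight * rgb[i]
        transmittance *= 1.0 - alpha
        if transmittance < 1e-4:  # pixel is effectively opaque; stop early
            break
    return tuple(color), transmittance


# Two splats centered exactly on the pixel, so the falloff factor is 1.
splats = [
    (2.0, (0.0, 0.0, 1.0), splat_alpha(0.5, 0.0, 0.0, sigma=1.0)),  # blue, far
    (1.0, (1.0, 0.0, 0.0), splat_alpha(0.5, 0.0, 0.0, sigma=1.0)),  # red, near
]
pixel, remaining = composite_pixel(splats)
print(pixel, remaining)  # (0.5, 0.0, 0.25) 0.25
```

The nearer red splat contributes half of the pixel, the blue splat behind it contributes half of what is left, and a quarter of the background would still show through.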

Why is Gaussian splatting so important?

Gaussian splatting promises to make capturing reality more efficient, which is one of the thorniest challenges in building the industrial metaverse. One common example of this problem is Google Street View, which requires viewers to hop 15 feet for a new view. Once Gaussian splatting is integrated into metaverse and spatial computing engines, users will be able to navigate any 3D-captured space smoothly as if they were playing a modern computer game.

Researchers and vendors have traditionally used two techniques to capture 3D worlds: photogrammetry and lidar. With photogrammetry, the algorithms are relatively slow to train, result in large file sizes and are difficult to render for new views. They also struggle with fine details, transparent materials and reflections. Lidar can capture extremely high-fidelity models of the world into point cloud files down to the millimeter level but without any color information. Gaussian splatting promises to capture high-quality, fine-grained details along with their reflective and transparent properties.

Gaussian splatting will help enable enterprises to capture and update 3D models on an ongoing basis to track processes, understand change and facilitate new user experiences. The first use cases focus on improving user experiences. These techniques will eventually be integrated into various AI and machine learning workflows to continuously update digital twins of buildings, factories, physical infrastructure and human processes. Gaussian splatting could also improve world-scale digital twins that combine satellite imagery to enhance vegetation management, quantify natural disasters and facilitate planning.

Some of these workflows might raise new privacy concerns. For example, it might be possible to model how people perform a physical process or provide ergonomic feedback. But caution will be required if users don't feel comfortable with how 3D surveillance is implemented, and in some cases, new regulatory risks could arise.

Here are some of the various ways enterprises could use Gaussian splatting:

- Automate insurance damage assessments of cars and buildings.

- Streamline progress monitoring in construction and mining.

- Quantify stockpiles of materials as part of enterprise resource planning management processes.

- Enable consumers to view highly realistic models of products in their living rooms.

- Improve virtual clothes try-ons on a realistic representation of consumer avatars.

- Automate road quality assessments to prioritize repairs.

- Support continuously updated city models using consumer-grade dashcams.

What is the difference between NeRF and Gaussian splatting?

Gaussian splatting builds on prior work on NeRF and uses many of the same elements, such as radiance fields and SfM techniques. But there are also some important differences in terms of the training process and their technical characteristics.

First, NeRF uses neural networks to store the files as neural weights that capture a radiance field using voxels, hashes, grids or points. Gaussian splatting stores files as a collection of Gaussian points trained using gradient descent. Although gradient descent is a machine learning technique often used to train neural networks, the first Gaussian splatting implementation used gradient descent on its own to fit the Gaussians directly rather than to fit weights in a neural network. Other researchers have since used other machine learning techniques, including neural nets, to compress the data.

Second, NeRF uses ray tracing to generate images. Gaussian splatting uses a Gaussian rasterizer, a new rasterizing approach that generates pixels from each Gaussian's position, how it is stretched or scaled, its color and its transparency.

There are also numerous technical differences. Training for Gaussian splatting is approximately 50 times faster than for NeRFs at the same or better quality. Gaussian splats can also render images at more than 135 FPS, compared with 0.1 FPS for the highest-quality NeRFs and 8 FPS for lower-quality but faster NeRFs.
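Frame rates are easier to compare as the time spent on each frame. The short calculation below converts the figures quoted above; the labels and the `frame_time_ms` helper are just for illustration.

```python
def frame_time_ms(fps):
    """Milliseconds available to draw one frame at a given frame rate."""
    return 1000.0 / fps


# Frame rates quoted in the text above.
rates = {
    "Gaussian splatting": 135.0,
    "faster NeRF": 8.0,
    "highest-quality NeRF": 0.1,
}
for name, fps in rates.items():
    print(f"{name}: {frame_time_ms(fps):.1f} ms per frame")
```

At 135 FPS, each frame takes roughly 7.4 milliseconds, while 8 FPS means 125 milliseconds per frame and 0.1 FPS means about 10 seconds per frame.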

But NeRFs do better when it comes to storage and memory. Early Gaussian splatting files were 50 to 100 times larger, and training required more than 24 gigabytes of video random access memory (VRAM) compared with a few gigabytes for NeRFs. VRAM is a special kind of RAM that is directly connected to a graphics processing unit and separate from regular RAM, and these levels are only available on the highest-specification graphics cards. As a result, many Gaussian splats are trained in the cloud. It is possible to reduce this burden, for example by running the training process on a computer's central processing unit with access to larger RAM, but this is much slower.
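A back-of-the-envelope estimate shows why files holding millions of Gaussians grow large. The layout below is an assumption made for illustration: 32-bit floats, a symmetric 3x3 covariance stored as six values, and a hypothetical scene of three million Gaussians. Actual formats may store more values per Gaussian, for example for view-dependent color.

```python
FLOAT_BYTES = 4  # assumption: every value is a 32-bit float

# Assumed per-Gaussian layout covering position, color, covariance
# and transparency.
FLOATS_PER_GAUSSIAN = {
    "position": 3,      # x, y, z
    "color": 3,         # r, g, b
    "covariance": 6,    # unique entries of a symmetric 3x3 matrix
    "transparency": 1,  # a single opacity value
}


def bytes_per_gaussian():
    """Storage for one Gaussian under the assumed layout."""
    return FLOAT_BYTES * sum(FLOATS_PER_GAUSSIAN.values())


def scene_bytes(num_gaussians):
    """Uncompressed storage for a whole scene."""
    return num_gaussians * bytes_per_gaussian()


num_gaussians = 3_000_000  # hypothetical scene size
size = scene_bytes(num_gaussians)
print(f"{bytes_per_gaussian()} bytes per Gaussian, {size / 1e6:.0f} MB uncompressed")
# 52 bytes per Gaussian, 156 MB uncompressed
```

This covers only the stored scene. Training typically also keeps gradients and the input images in memory, so the figure is a lower bound rather than an estimate of the VRAM needed.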

Researchers are also finding ways to mitigate the various limitations of Gaussian splatting. For example, one team recently found a way to use a separate neural network processing step to shrink Gaussian splats to one-tenth their size. Gaussian splatting is a very active area of research, with more than 80 scientific papers posted within five months. Other techniques will likely be discovered to improve their performance further in the near future.