What to do when a BC/DR plan goes awry

Insufficient testing or not knowing what to expect could result in a failed business continuity plan. Luckily, there are steps you can take if your BC/DR planning goes belly up.

Even in the best of recovery circumstances, there is always the possibility that something will go wrong. No matter how thorough your planning is, or how many scenarios you've imagined, there's no guarantee that everything will go according to plan.

I used to work for a software company in South Florida, right in the middle of "hurricane alley" -- that warm stretch of the Atlantic Ocean where hurricanes form, grow in strength and eventually hit land. The company had a solid business continuity and disaster recovery plan -- the "nuclear football" as we affectionately called it. It was a very detailed BC/DR plan on how the company would recover from loss of data, applications, systems and operations. We were ready for whatever a hurricane could throw at us -- or so we thought.

Then a hurricane hit about a week before a major software launch. The aftermath was such a comedy of errors, our BC/DR plan never stood a chance.

The building lost power. No problem, the building has a generator. Then we found out the building management forgot to get diesel fuel for the generator. Okay. We'll go get diesel from a gas company at the port. Turns out the company providing the diesel was out of the unleaded gasoline needed to run the pumps that deliver the business-saving diesel we desperately needed. All of South Florida had no power. And at the time, virtualization was very new, so the idea of having VMs replicated to cloud-based infrastructure didn't exist. So -- no power, no infrastructure and a product launch in a week.

We were screwed.

How BC/DR can go wrong

Perhaps you won't face a disaster of the magnitude of the one described above, but there is always the possibility that the recovery steps you believe will bring the organization back into a state of operation won't work, for a few reasons:

- A lack of planning. Your BC/DR strategy should include every last recovery detail, process and contingency. If your plan doesn't account for specific disaster scenarios, and provide the recovery steps necessary to get you operational, things may go south for you quickly.

- A lack of testing. I was once told that a BC/DR plan isn't worth the paper it's printed on. If you never actually test the plan, that statement is 100% true. For example, if you plan on recovering to the cloud, you should test not only recovering there, but also running operations in a failover state, as well as failback. Without testing, your plan is just a really good untried idea.

- A lack of results. Even with a plan that is tested quarterly, you still don't know for certain if the most recent backups are good or if they're consistent with backups of dependent applications and services. So it's still possible to see recovery efforts end up with an unexpected result.

- Simply not being prepared. As with my hurricane experience, there may be a disaster scenario you simply can't plan for. I'm a big fan of having a BC/DR plan that doesn't just cover how to address the recovery of a given set of systems and applications. I like the idea of having specific plans to address specific disaster scenarios. Recovering from a corrupt OS is vastly different from recovering when the building burns down.
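The worry about backups raised in the list above (whether the latest ones are recent, and whether they line up with the backups of dependent systems) can be partly checked ahead of time. The following is a minimal sketch, not a real backup tool's API: the system names, timestamps, dependency map and thresholds are all hypothetical, and in practice you would pull them from your backup software's reports. It only compares timestamps, so it is no substitute for an actual test restore.

```python
from datetime import datetime, timedelta

# Hypothetical inventory: when each system's latest backup completed.
last_backup = {
    "database": datetime(2024, 1, 10, 2, 0),
    "app_server": datetime(2024, 1, 10, 2, 20),
    "file_share": datetime(2024, 1, 7, 23, 0),
}

# Hypothetical dependency map: each system lists what it relies on.
depends_on = {
    "app_server": ["database", "file_share"],
}


def stale_backups(backups, now, max_age):
    """Return the systems whose latest backup is older than max_age."""
    return sorted(name for name, ts in backups.items() if now - ts > max_age)


def inconsistent_pairs(backups, dependencies, max_skew):
    """Return (system, dependency) pairs whose backups are more than max_skew apart."""
    pairs = []
    for system, deps in dependencies.items():
        for dep in deps:
            if abs(backups[system] - backups[dep]) > max_skew:
                pairs.append((system, dep))
    return pairs


now = datetime(2024, 1, 10, 9, 0)  # fixed "current time" so the example is repeatable
print(stale_backups(last_backup, now, max_age=timedelta(hours=24)))
print(inconsistent_pairs(last_backup, depends_on, max_skew=timedelta(hours=1)))
```

A system that shows up in either list is a candidate for a fresh backup and a test restore before you need it in anger.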

When a BC/DR plan goes to pot

Think of the remainder of this article as a sort of incident response template. That is, in the event your BC/DR plan fails, you have a backup plan (pun somewhat intended) to get you out of the situation you're in.

The following three steps provide a simple overview of where your head needs to be:

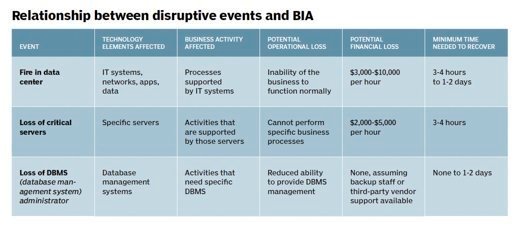

- Prioritize. In theory, you should already have this done. You need a list of your systems ranked by how critical they are to the operation, so you can focus your efforts on the applications, services, systems and data that will have the greatest positive impact on the business.

- Strategize. Given your current disaster circumstance, you need to determine your options. What's the fastest way to recover? Do you need to work with a recovery partner with cloud-based infrastructure? Do you have an alternate location? Whatever the barrier to recovery, you need to quickly formulate a strategy for making recovery happen.

- Execute. You're already behind. Your plan failed, and you've had to spend time reprioritizing and figuring out how and where to recover. If you have a new contingency plan that's approved by the executive team, get moving. That same executive team can likely calculate how much revenue the company is losing for every minute longer your recovery takes. Up the pace and work with urgency to recover as quickly as possible.
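To make the prioritization and the per-minute revenue math concrete, here is a rough sketch under stated assumptions. The `System` class, the system names, the loss-per-minute figures and the recovery estimates are all invented for illustration. The ordering rule (most expensive downtime first) is a simple heuristic, not an optimal schedule, and the cost model assumes systems are recovered one at a time.

```python
from dataclasses import dataclass


@dataclass
class System:
    name: str
    revenue_loss_per_minute: float  # assumed figure, e.g. supplied by the business
    recovery_minutes: int           # assumed estimate under the fallback strategy


def recovery_order(systems):
    """Heuristic: recover the systems that cost the most per minute of downtime first."""
    return sorted(systems, key=lambda s: s.revenue_loss_per_minute, reverse=True)


def total_downtime_cost(ordered):
    """Total loss if systems are recovered one after another in the given order."""
    elapsed = 0
    cost = 0.0
    for s in ordered:
        elapsed += s.recovery_minutes  # this system is down until its recovery finishes
        cost += elapsed * s.revenue_loss_per_minute
    return cost


# Hypothetical systems, listed in no particular order.
systems = [
    System("email", 5.0, 30),
    System("order_processing", 200.0, 120),
    System("build_servers", 50.0, 60),
]

ordered = recovery_order(systems)
print([s.name for s in ordered])
print(total_downtime_cost(systems))  # cost if recovered in the order listed
print(total_downtime_cost(ordered))  # cost if recovered in priority order
```

Comparing the two totals gives the executive team a number to weigh when approving the new plan, and rerunning it with different recovery estimates is a quick way to compare strategies.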

In the case of my old employer, we needed to pull servers and their drives out of racks; drive them to Orlando about three hours north of us; build an ad hoc data center in a hotel room, complete with CAT5 wiring running down the hallway to a conference room where the dev team was working to make the product launch; and run operations from there -- something that was definitely not in the plan.

As you build, review, update and test your BC/DR strategy, have a fallback plan just in case all hell breaks loose. Doing so will speed up the process of recovery and improve your ability to get the business up and operational, even in the worst of circumstances.