How to prevent deepfakes in the era of generative AI

Businesses must be ever vigilant in detecting the increasingly sophisticated nuances of deepfakes by applying security techniques that range from the simple to the complex.

Generative AI is getting more proficient at creating deepfakes that can sound and look realistic. As a result, some of the more sophisticated spoofers have taken social engineering attacks to a more sinister level.

In its early stages of development, deepfake AI was capable of generating a generic representation of a person. More recently, deepfakes have used synthesized voices and videos of specific individuals to launch cyber attacks, create fake news and harm reputations.

How AI deepfake technology works

Deepfakes use deep learning techniques, such as generative adversarial networks, to digitally alter and simulate a real person. Malicious examples have included mimicking a manager's instructions to employees, generating a fake message to a family in distress and distributing false embarrassing photos of individuals.

Cases such as these are mounting as deepfakes become more realistic and harder to detect. And they're easier to generate, thanks to improvements in tools that were created for legitimate purposes. Microsoft, for example, just rolled out a new language translation service that mimics a human's voice in another language. But a big concern is that these tools also make it easier for perpetrators to disrupt business operations.

Fortunately, tools to detect deepfakes are also improving. Deepfake detectors can search for telltale biometric signs within a video, such as a person's heartbeat or a voice generated by human vocal organs rather than a synthesizer. Ironically, the tools used to train and improve these detectors today could eventually be used to train the next generation of deepfakes as well.

In the meantime, businesses can take several steps to prepare for the increasing incidence and sophistication of deepfake attacks -- from training employees to spot signs of these attacks to adopting more sophisticated authentication and security tools and procedures.

Deepfake attacks can be separated into four general categories, according to Robert Scalise, global managing partner of risk and cyber strategy at Tata Consultancy Services (TCS):

- Misinformation, disinformation and malinformation.

- Intellectual property infringement.

- Defamation.

- Pornography.

Deepfake attack examples

The first serious deepfake attack occurred in 2019, according to Oded Vanunu, head of products vulnerability research at IT security provider Check Point Software Technologies. Hackers impersonated a phone request from a CEO, resulting in a $243,000 bank transfer. That incident forced financial institutions to be more vigilant and take greater precautions, while hackers grew more sophisticated.

In 2021, criminals tricked a bank manager into transferring a whopping $35 million to a fraudulent bank account. "The criminals knew that the company was about to make an acquisition and would need to initiate a wire transfer to purchase the other company," said Gregory Hatcher, founder of cybersecurity consultancy White Knight Labs. "The criminals timed the attack perfectly, and the bank manager transferred the funds."

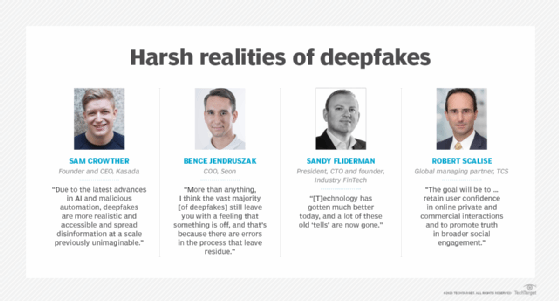

The latest generation of bots is using deepfake technology to evade detection, said Sam Crowther, founder and CEO of bot protection and mitigation software provider Kasada. "Deepfakes, when combined with bots, are becoming an increasing threat to our social, business and political systems," he explained. "Due to the latest advances in AI and malicious automation, deepfakes are more realistic and accessible and spread disinformation at a scale previously unimaginable." The pro-China propaganda organization Spamouflage, for instance, uses bots to create fake accounts, share deepfake videos and spread disinformation through social media platforms.

"Deepfake attacks are no longer a hypothetical threat. They deserve attention from companies right now," warned Bence Jendruszak, COO at fraud prevention tools provider Seon Technologies. "That means teaching staff about what to look out for and just generally raising education around their prevalence."

Best practices to detect deepfake technology

At one time, most online video and audio presentations could be accepted as real. Not anymore. Today, detecting deepfakes can be a combination of art and science.

It's possible for humans to detect irregular vocal cadences or unrealistic shadows around the eyes of an AI-generated person, Jendruszak said. "More than anything," he added, "I think the vast majority [of deepfakes] still leave you with a feeling that something is off, and that's because there are errors in the process that leave residue."

There are several telltale signs that humans can look for when discerning real from fake images, including the following:

- Incongruities in the skin and parts of the body.

- Shadows around the eyes.

- Unusual blinking patterns.

- Unusual glare on eyeglasses.

- Unrealistic movements of the mouth.

- Unnatural lip coloration compared to the face.

- Facial hair incompatible with the face.

- Unrealistic moles on the face.
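
One of the signs above, unusual blinking, lends itself to a rough automated check. The following is a minimal Python sketch, not a production detector. It assumes blink timestamps have already been extracted from the video by some upstream face-tracking step that is not shown, and the `low` and `high` thresholds are illustrative assumptions rather than validated limits.

```python
from typing import Sequence


def blinks_per_minute(blink_times_s: Sequence[float], duration_s: float) -> float:
    """Convert a list of blink timestamps (in seconds) into a per-minute rate."""
    if duration_s <= 0:
        raise ValueError("duration_s must be positive")
    return len(blink_times_s) * 60.0 / duration_s


def blink_rate_is_unusual(
    blink_times_s: Sequence[float],
    duration_s: float,
    low: float = 5.0,    # assumed lower bound, blinks per minute
    high: float = 40.0,  # assumed upper bound, blinks per minute
) -> bool:
    """Flag clips whose blink rate falls outside the assumed normal range."""
    rate = blinks_per_minute(blink_times_s, duration_s)
    return rate < low or rate > high
```

A flag from a check like this is only a prompt for closer human review. A clip that passes is not thereby shown to be authentic.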

Detecting fakes used to be easier because older videos showed shifts in skin tone, unusual blinking patterns or jerky movements, said Sandy Fliderman, president, CTO and founder of financial infrastructure services provider Industry FinTech. "[B]ut technology has gotten much better today, and a lot of these old 'tells' are now gone." Today, telltale signs might appear as anomalies in lighting and shading, which deepfake technology has yet to perfect.

It might be helpful to use forensic analysis, Vanunu suggested, by examining the metadata of the video or audio file to determine manipulation or alteration. Specialized software can also be used for reverse image searches to discover visually similar images used in other contexts. In addition, companies are increasingly using 3D synthetic data to develop more sophisticated facial verification models that use 3D, multisensor and dynamic facial data to conduct liveness detection, said Yashar Behzadi, CEO and founder of synthetic data platform provider Synthesis AI.
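
To give a sense of how software can find visually similar images, here is a minimal Python sketch of an average hash, one common perceptual hashing technique. It is not a description of any specific vendor's tool. It assumes each image has already been converted to a small grayscale grid, for example 8x8, by a separate step, and the `max_distance` threshold is an illustrative assumption.

```python
from typing import List

Gray = List[List[int]]  # rows of grayscale values, 0-255, already downscaled


def average_hash(pixels: Gray) -> int:
    """Build a bit pattern: 1 where a pixel is brighter than the image mean."""
    flat = [p for row in pixels for p in row]
    mean = sum(flat) / len(flat)
    bits = 0
    for p in flat:
        bits = (bits << 1) | (1 if p > mean else 0)
    return bits


def hamming_distance(a: int, b: int) -> int:
    """Count the bit positions where two hashes differ."""
    return bin(a ^ b).count("1")


def looks_similar(a: Gray, b: Gray, max_distance: int = 5) -> bool:
    """Treat two images as near-duplicates if their hashes differ in few bits."""
    return hamming_distance(average_hash(a), average_hash(b)) <= max_distance
```

Because the hash depends on each pixel's brightness relative to the mean, a uniformly brightened copy of an image produces the same hash, while an unrelated image usually differs in many bits.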

As for audio deepfakes, Hatcher said telltale signs include choppy sentences, strange word choices and abnormal inflection or tone in the speaker's voice.

New standards bodies like the Coalition for Content Provenance and Authenticity (C2PA) are creating technical standards for verifying content source, history and origin. The C2PA promotes industry collaboration with companies, including Adobe, Arm, Intel, Microsoft and Truepic. Adobe and Microsoft are also working on content credentials that help verify the authenticity of images and videos.

How to create strong security procedures

Preventing the damage caused by deepfake attacks should be part of an organization's security strategy. Companies should refer to the Cybersecurity and Infrastructure Security Agency for procedures such as the Zero Trust Maturity Model to help mitigate deepfake attacks.

Businesses also can do the following to guard against spoofing attacks:

- Develop a multistep authentication process that includes verbal and internal approval systems.

- Change up processes by reverse-engineering how hackers use deepfakes to penetrate security systems.

- Establish policies and procedures based on industry norms and new standards.

- Stay abreast of the latest tools and technologies to thwart increasingly sophisticated deepfakes.
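
The multistep authentication idea in the list above can be sketched in code. The following Python example is a hypothetical illustration, not a prescribed procedure. The names `TransferRequest`, `approve` and `may_release` are invented for this sketch, and it assumes a policy in which a funds transfer needs a verbal callback to a known-good phone number plus two internal approvers other than the requester.

```python
from dataclasses import dataclass, field
from typing import Set


@dataclass
class TransferRequest:
    requester: str
    amount: float
    # Set to True only after staff call back on a number already on file,
    # never a number supplied in the request itself.
    callback_verified: bool = False
    approvers: Set[str] = field(default_factory=set)


def approve(request: TransferRequest, approver: str) -> None:
    """Record an internal approval; requesters cannot approve themselves."""
    if approver == request.requester:
        raise ValueError("requester cannot approve their own request")
    request.approvers.add(approver)


def may_release(request: TransferRequest, required_approvals: int = 2) -> bool:
    """Release funds only if the callback and enough approvals are in place."""
    return request.callback_verified and len(request.approvers) >= required_approvals
```

Under a policy like this, a convincing voice on a single phone call is not enough to move money, because the caller cannot also supply the independent callback and the separate approvals.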

Future of deepfake attacks

As a natural extension of ransomware as a service, expect deepfakes as a service to become prevalent, built on neural network technology that enables anyone to create a video of anyone. "In the face of such likely proliferation," Scalise warned, "both the public and private sectors will need to collaborate and remain vigilant. The goal will be to … retain user confidence in online private and commercial interactions and to promote truth in broader social engagement."

Deepfake attacks are evolving in lockstep with new technologies designed to detect them. "The future of deepfake attacks is difficult to predict, but it is likely that they will become more prevalent and sophisticated due to AI's progress," Vanunu reasoned. "As the technology behind deepfakes continues to improve, it will become easier for attackers to create convincing deepfakes, making it more difficult to detect them."

Editor's note: This article was updated in July 2024 to improve the reader experience.

George Lawton is a journalist based in London. Over the last 30 years he has written over 3,000 stories for publications about computers, communications, knowledge management, business, health and other areas that interest him.